MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,466

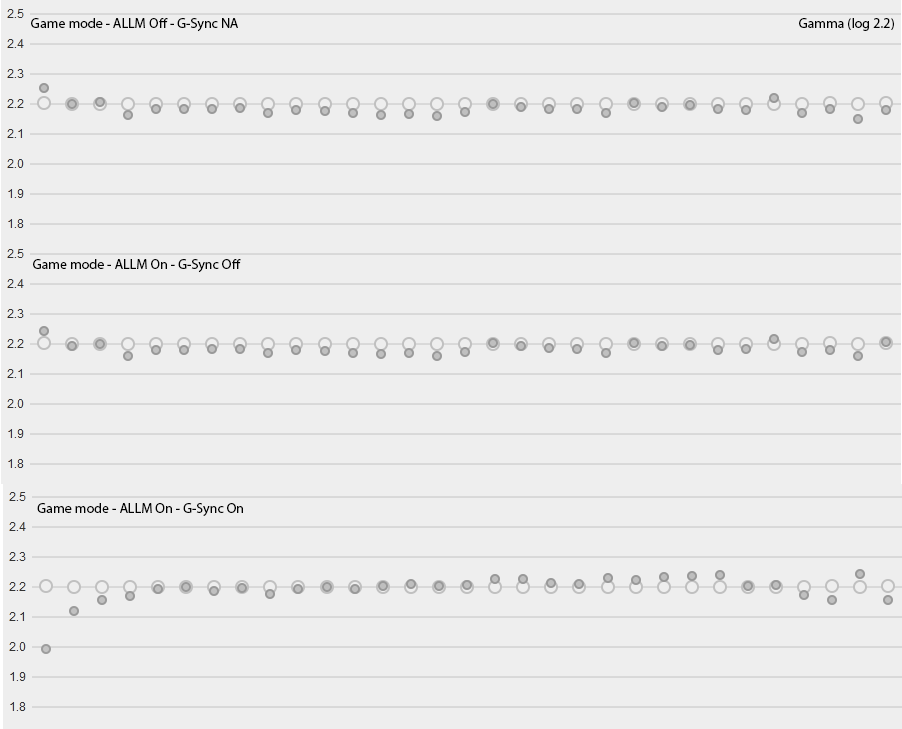

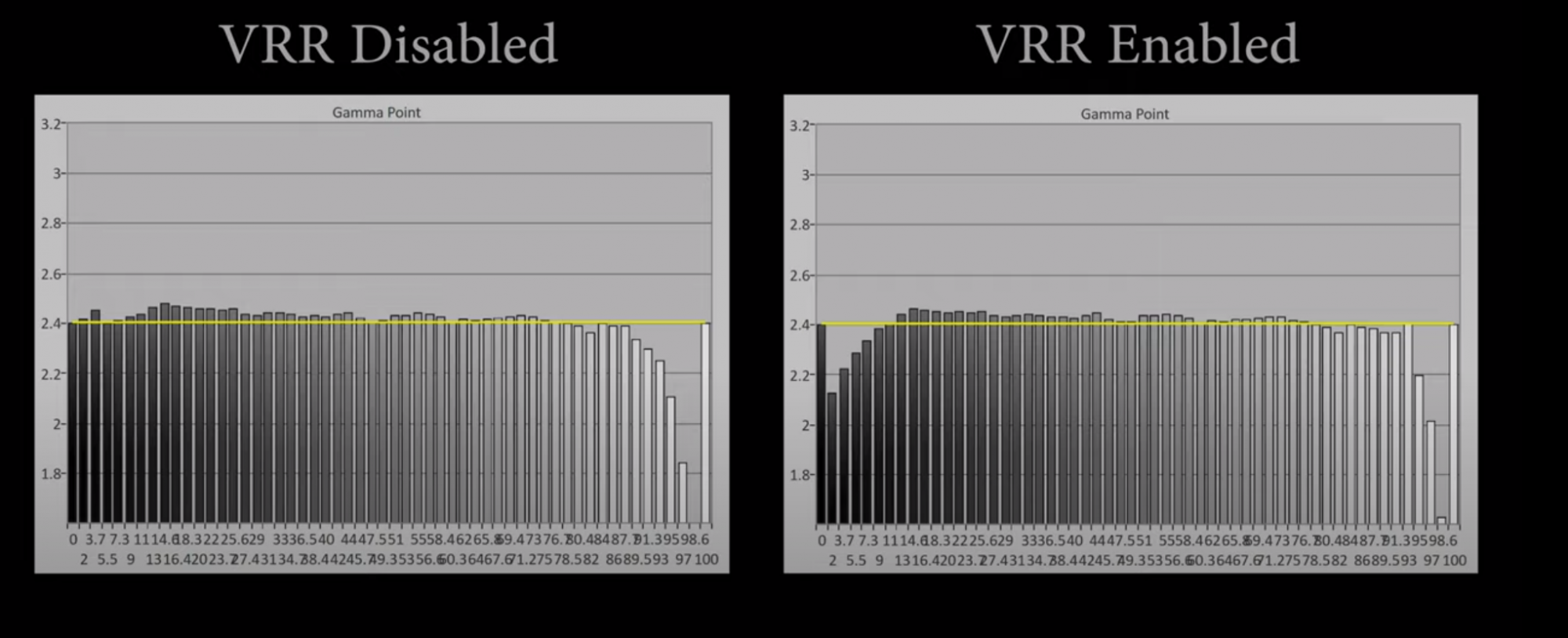

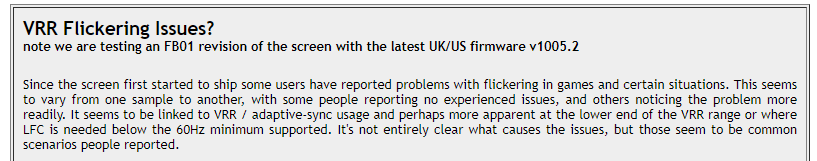

From TFTC's review of the Samsung G7:

I believe this is the same kind of flickering I'm seeing in Guild Wars 2 on my CX: it shows up when the frame rate sits near the bottom end of the VRR window and keeps transitioning in and out of LFC. If the issue is G-Sync itself, I'm not sure LG can fix this with a firmware update.
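To illustrate why hovering around the bottom of the VRR window could matter, here is a rough sketch of how LFC is commonly described: below the window's minimum, each frame is repeated enough times to bring the panel's refresh rate back inside the range. The 40-120 Hz window and the "smallest whole multiplier" rule are assumptions for illustration only; the real driver logic is not public, and `panel_refresh_hz` is a made-up helper name.

```python
import math

def panel_refresh_hz(fps, vrr_min=40.0, vrr_max=120.0):
    """Return (refresh rate in Hz, frame repeat count) for a given frame rate.

    Simplified LFC model (an assumption, not the actual driver algorithm):
    inside the VRR window the panel follows the frame rate directly; below
    it, each frame is repeated the smallest whole number of times that puts
    the refresh rate back at or above vrr_min.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    if fps >= vrr_min:
        # Inside (or above) the window: no frame repetition.
        return min(fps, vrr_max), 1
    multiplier = math.ceil(vrr_min / fps)
    return fps * multiplier, multiplier

# Frame rates just above and just below the assumed 40 Hz floor.
for fps in (42, 41, 39, 38):
    hz, repeats = panel_refresh_hz(fps)
    print(f"{fps} fps -> panel at {hz} Hz (each frame shown {repeats}x)")
```

Under this model, a dip of only a couple of fps across the floor makes the panel's refresh rate roughly double, so a game bouncing around that point would swing the panel back and forth between two very different refresh rates, which would fit the kind of flicker described above.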

