
LG 48CX

- Thread starter gan7114


I'm not sure what you're referring to, but you can't even enable BFI without disabling VRR first.

I mentioned at the top of the previous page that John from Digital Foundry found that BFI + VRR worked on his CX's latest firmware, at least from the Xbox Series X, but only when Dolby Vision was enabled:

https://hardforum.com/threads/lg-48cx.1991077/page-197#post-1045180420

There was no clarification as to whether this could also work on a PC, whether with Dolby Vision-enabled software or via some CRU tweaking or the like.

MistaSparkul

2[H]4U

- Joined: Jul 5, 2012

- Messages: 3,512

I mentioned at the top of the previous page that John from Digital Foundry found that BFI + VRR worked on his CX's latest firmware, at least from the Xbox Series X, but only when Dolby Vision was enabled:

https://hardforum.com/threads/lg-48cx.1991077/page-197#post-1045180420

There was no clarification as to whether this could also work on a PC, whether with Dolby Vision-enabled software or via some CRU tweaking or the like.

I asked if there are any Dolby Vision titles on PC that I could try it out with, since I don't own an XSX, but I got no reply to that; instead I got the "BFI = bad, HDR = more better" response, lol. I'm willing to test it if anyone can point me to a Dolby Vision title for PC, but I heard even Forza 5 doesn't support Dolby Vision on PC. Supposedly Halo Infinite will be a Dolby Vision PC title, so I guess that will be the first and perhaps only test we can do of the BFI + VRR combo. And then, of course, I'm expecting to get bombarded with "PLAYING HALO INFINITE IN HDR IS BETTER THAN BFI".

sharknice

2[H]4U

- Joined: Nov 12, 2012

- Messages: 3,757

I would love simultaneous BFI VRR, but I don't have time to experiment to try to get it working. I'm hoping someone else does though.

Monstieur

Limp Gawd

- Joined: Jun 10, 2011

- Messages: 440

It's likely a bug and will result in variable brightness when the frame rate fluctuates.

elvn

Supreme [H]ardness

- Joined: May 5, 2006

- Messages: 5,311

I mentioned at the top of the previous page that John from Digital Foundry found that BFI + VRR worked on his CX's latest firmware, at least from the Xbox Series X, but only when Dolby Vision was enabled:

https://hardforum.com/threads/lg-48cx.1991077/page-197#post-1045180420

There was no clarification as to whether this could also work on a PC, whether with Dolby Vision-enabled software or via some CRU tweaking or the like.

I let this thread stew a little bit, but on my return I'm kind of disappointed that the thread as a whole seems to have doubled down on HDR and isn't even willing to experiment to see whether this BFI + VRR thing works, let alone whether it's useful.

I find it very interesting that, with high-refresh monitors, BFI + VRR is seen as a sort of "holy grail", and I figured there'd be more of those types of people here considering OLED's crazy-fast response time. But it seems I was mistaken, and the crowd here is a third niche between the cinema buff's "BFI good, HDR good, high framerate bad" and the high-refresh gamer's "BFI good, high framerate good, HDR unnecessary".

This is making me think that most OLED users actually fall into the more 60fps-to-100fps gaming category rather than the 100fps+ category. As I'm typing this, I'm coming to the conclusion that it may be an audience that is exactly as I said: they value high framerate up to a point, but they put more focus on static image quality such as resolution and HDR and will very much sacrifice top-end framerates to get it (one only has to note the kinds of GPUs most people here are using... and I very much don't have interest in such high-end GPUs). And the 60fps-to-100fps range simply isn't going to benefit as much from any sort of BFI + VRR combination.

Basically, I made the mistake of figuring that the high-frame-rate gamer would be the main PC OLED customer; it turns out it's the high-graphics-settings gamer instead. And while I'm not really a high-frame-rate gamer, I'm even less of a high-graphics-settings gamer. Really, I'm in the "I want to be able to use a screen in the dark without it either blinding me or looking like crap, without sacrificing the motion resolution I've become used to on a CRT" camp, and I've been in that camp for over a decade now.

I want to make one thing very clear, though: I'm not married to the idea of BFI and will happily ditch it if the screen has a high enough refresh rate to compensate, but "only" 120Hz ain't gonna cut it, since that's already what I've been running on my CRT for ages.

Also, while typing this I'm reminded that the flickering on a CRT is less noticeable than BFI on an LED-backlit LCD of comparable flicker rate, presumably because a CRT's PWM is more akin to a sine wave while LEDs output much more of a square wave. And IIRC, I found the flickering on CRTs was reduced as you decrease brightness and/or contrast, as I do in dark environments, since that simply reduces the amplitude of the PWM waveform; on LED-backlit LCDs the flicker instead increases, because lowering brightness increases the "off" time during backlight strobing.

For reference, I have no idea how a flicker-free LED backlight would behave with any sort of backlight strobing, but with OLED I would imagine it would be similar to a CRT in that it simply reduces the amplitude, but also like an LED-backlit LCD in that it operates as a square-wave on/off rather than a smoother CRT-like sine wave.

=========================

This is making me think that most OLED users actually fall into the more 60fps-to-100fps gaming category rather than the 100fps+ category

If you can maintain a 120fps minimum, even without BFI you'd already be cutting sample-and-hold blur by 50% compared to a solid 60fpsHz, turning the smearing blur at 60 into more of a "soften" blur at speed. That full soften blur isn't present all of the time, only while moving the viewport at speed. At lower panning rates, and depending on the game genre, it can be less aggressive (isometric RPGs, 3rd-person souls-like games locked on to a target, and other games that pan slowly by nature of an orbiting follow camera, etc.), so even that soften blur during mouse-look/gamepad FoV panning is exhibited less. And with OLED's response time, that 50% reduction vs 60fpsHz is probably slightly tighter than on most LCDs, especially the VA LCDs, which are the only ones that get any kind of black depth and contrast outside of a very few FALD IPS gaming screens.
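To put rough numbers on the 50% figure above, here is a minimal sketch using the commonly cited Blur Busters rule of thumb that perceived sample-and-hold blur is roughly motion speed multiplied by how long each frame stays lit (about 1 px of blur per 1000 px/s of motion for every 1 ms of persistence). It assumes perfect eye tracking and no BFI/strobing, ignores pixel response time, and the 1920 px/s pan speed is a hypothetical example value, not a measurement.

```python
"""Rough sample-and-hold blur estimate.

Assumptions (not measurements): perfect eye tracking, no BFI/strobing,
and pixel response time ignored, so blur width ~= speed x persistence.
"""


def hold_blur_px(speed_px_per_s: float, fps: float, lit_fraction: float = 1.0) -> float:
    """Approximate perceived smear width in pixels.

    lit_fraction is the share of each frame period the image is lit:
    1.0 models plain sample-and-hold; smaller values would model BFI.
    """
    return speed_px_per_s * lit_fraction / fps


def step_px(speed_px_per_s: float, fps: float) -> float:
    """Gap between consecutive frame positions ("dots on a dotted line")."""
    return speed_px_per_s / fps


if __name__ == "__main__":
    pan_speed = 1920.0  # hypothetical pan speed in px/s
    for fps in (60, 120):
        blur = hold_blur_px(pan_speed, fps)
        gap = step_px(pan_speed, fps)
        print(f"{fps:>3} fps: ~{blur:.0f} px of hold blur, {gap:.0f} px between positions")
```

Going from 60 to 120 fps halves both numbers (32 px to 16 px in this example), which is the 50% reduction described above; the same arithmetic is why the "dots on a dotted line" get twice as dense.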

However, outside of a few highly optimized corridor shooters (i.e. narrow view distance), modern demanding games at 4K resolution are lucky to get over a 100fps average, let alone a 120fps minimum, at "high+" to ultra graphics settings. BFI works best at a 120fps minimum, which in practice means more like a 150fps average or more.

These are 120Hz 4K HDR + VRR screens designed to give you a glorious eye-candy viewing and gaming experience:

...at up to double the motion definition of 60fpsHz ("glassy" movement smoothness, up to double the pathing articulation: more dots on a dotted line/curve, and even greater animation cycle definition/smoothness).

...with much less aggressive FoV movement blurring at higher frame rates ("soften" vs "smearing").

...with a TON more colors and color detail above the very low SDR ceiling in HDR material.

...with a TON more black depth and "low-light" detail in dark areas in HDR material, well beyond the narrow "2D" SDR limitation, down to "infinite" black depth.

...with those tall and deep 3D color volumes displayed side by side down to the pixel, generating a greater depth-perception effect and much better texture ID.

...with somewhat higher graphics settings vs. frame rates "unlocked" or thrown into the mix by being able to lean on VRR and ride a frame rate roller coaster smoothly.

...at 4K resolution for greater detail overall and better PPD at reasonable distances, but at the cost of much greater GPU power demand. One goes with the other.

HDR is a greater advancement for movie and game visuals than any of the other facets, and since it can be combined with all of them at the same time (save BFI), it's even more of a landslide.

HDR (high color and "low-light" volumes, color detail and dark detail gained in all areas and surfaces, increased depth perception, material/texture ID) + VRR (smoothing varying fps to Hz, and making higher graphics settings available by leaning on VRR) are features that rule out BFI, while using BFI properly rules out VRR (and the higher graphics settings it enables) along with HDR capability, and it can be eye-fatiguing due to the continual strobe throughout every second of every scene.

Running a 90-120fps average not only reduces sample-and-hold blur, it also increases motion definition for "glassy" movement, more articulated pathing (more dots per dotted line/curve), and more animation cycle definition (more unique pages in an animation flip book).

Combine that with the reduction of sample-and-hold blur (the worst blur, exhibited during high-speed FoV movement) from a full smearing of the viewport to more of a "soften" Photoshop-style blur isolated to individual on-screen objects and architecture, and you are getting appreciable gains.

I had a 23.5" 16:10 FW900 graphics-professional CRT for years next to various LCDs. I got a 27" 120Hz 1080p Samsung, one of the first 120Hz LCD gaming screens, relatively early on. I got one of the first 27" 144Hz 2560x1440 G-Sync LCDs too, and later a 31.5" 1440p G-Sync VA for the black depth/contrast. In the long run I dropped the CRT. CRTs like the FW900 require internal maintenance, and even so, with age they eventually start to fade and/or bloom, and it gets progressively worse. They also all take half an hour to "warm up" before they are fully saturated and contrasted, among other things like being subject to electrical and magnetic interference/noise.

In regard to LCDs: even back when I got my 31.5" VA and had a 60Hz VA alongside it for desktop/apps, I swore I'd never go back to a pale IPS/TN screen again. Now I'll never go back to regular LCD tech at all (outside of some future pixel-level microLED or something) for my media and gaming "stage" screen. I'm never backpedaling from infinite black depth and per-pixel emission, and I'll never have a non-HDR color volume screen as my media/gaming display if I can help it. Eventually everything will be HDR, including photography, graphic art, photos and video clips posted on social media, YouTube videos, news media, etc. Just like high-color displays, 4:3 -> 16:9, lesser resolutions -> 1080p "HD", 1440p gaming screens, and now 4K "UHD". In the very long run everything will likely go to AR/mixed reality with virtual screens in real space and "living documents", avatars, assistants, etc., as well as "holographic games", either at small scale wherever you are (like on a table or floor, or on a floating virtual screen) or more VR-like, immersed in them.

I can understand if you feel the same way about not backpedaling in regard to zero blur, but for me that ship has sailed in regard to "zero" for the foreseeable future, until we get something like frame duplication/interpolation of a solid 100fps x5 or x10 for up to 500fps or 1000fps on 500Hz or 1000Hz screens, for negligible to 1px "zero" blur at those rates without BFI/strobing/PWM. When I first got my 48CX OLED I had a 1080 Ti SC and played a few great HDR games at 60Hz (60fps minimum). I would have gone with a 120Hz ceiling, VRR, and more graphics horsepower if I could have, but that was what I was stuck with for a while due to GPU shortages. While being stuck at a solid 60fpsHz wasn't optimal (especially in the motion definition facet), they were still great games and they looked gorgeous in HDR (Jedi: Fallen Order and Nioh 2). They were also very challenging games (Jedi on Jedi Master with a zero-HUD ini mod; Nioh 2 is a challenging game right out of the gate, especially as a heavy two-handed axe user), so it's not like I'm playing some tourist-mode games on my screen.


MistaSparkul

2[H]4U

- Joined: Jul 5, 2012

- Messages: 3,512

Well I guess my hunch from back then about my CX feeling far more responsive than my X27 wasn't just placebo.

RTings measured lag to be 11ms at 4K with VRR. I have an Acer X27, and RTings measured that monitor at 14ms of lag at 4K with VRR. Using my CX and X27 side by side, the CX feels vastly more responsive than the X27, more than a 3ms difference would suggest. OLED's near-instant response time probably has something to do with it, but yeah, the CX feels plenty responsive, and only the 0.1% of super-hardcore competitive gamers will find it "not responsive enough".

I guess it also goes to show that BFI isn't needed as much on an OLED as it is on an LCD, since the image is already so clean; the only limiting factor is the 120Hz refresh rate itself.

equinox654

Gawd

- Joined: Apr 7, 2005

- Messages: 890

I tried BFI by itself when I first got the TV. It is indeed smooth, but the brightness drop is too severe, and most of us here don't like maxing out the brightness (OLED Light) anyway.

I let this thread stew a little bit, but on my return I'm kind of disappointed that the thread as a whole seems to have doubled down on HDR and isn't even willing to experiment to see whether this BFI + VRR thing works, let alone whether it's useful.

I find it very interesting that, with high-refresh monitors, BFI + VRR is seen as a sort of "holy grail", and I figured there'd be more of those types of people here considering OLED's crazy-fast response time. But it seems I was mistaken, and the crowd here is a third niche between the cinema buff's "BFI good, HDR good, high framerate bad" and the high-refresh gamer's "BFI good, high framerate good, HDR unnecessary".

This is making me think that most OLED users actually fall into the more 60fps-to-100fps gaming category rather than the 100fps+ category. As I'm typing this, I'm coming to the conclusion that it may be an audience that is exactly as I said: they value high framerate up to a point, but they put more focus on static image quality such as resolution and HDR and will very much sacrifice top-end framerates to get it (one only has to note the kinds of GPUs most people here are using... and I very much don't have interest in such high-end GPUs). And the 60fps-to-100fps range simply isn't going to benefit as much from any sort of BFI + VRR combination.

Basically, I made the mistake of figuring that the high-frame-rate gamer would be the main PC OLED customer; it turns out it's the high-graphics-settings gamer instead. And while I'm not really a high-frame-rate gamer, I'm even less of a high-graphics-settings gamer. Really, I'm in the "I want to be able to use a screen in the dark without it either blinding me or looking like crap, without sacrificing the motion resolution I've become used to on a CRT" camp, and I've been in that camp for over a decade now.

I want to make one thing very clear, though: I'm not married to the idea of BFI and will happily ditch it if the screen has a high enough refresh rate to compensate, but "only" 120Hz ain't gonna cut it, since that's already what I've been running on my CRT for ages.

Also, while typing this I'm reminded that the flickering on a CRT is less noticeable than BFI on an LED-backlit LCD of comparable flicker rate, presumably because a CRT's PWM is more akin to a sine wave while LEDs output much more of a square wave. And IIRC, I found the flickering on CRTs was reduced as you decrease brightness and/or contrast, as I do in dark environments, since that simply reduces the amplitude of the PWM waveform; on LED-backlit LCDs the flicker instead increases, because lowering brightness increases the "off" time during backlight strobing.

For reference, I have no idea how a flicker-free LED backlight would behave with any sort of backlight strobing, but with OLED I would imagine it would be similar to a CRT in that it simply reduces the amplitude, but also like an LED-backlit LCD in that it operates as a square-wave on/off rather than a smoother CRT-like sine wave.

On top of that, if I remember correctly, it had a dreadful PWM-like flicker.

I am a high refresh rate evangelist, but the way I see it, I am using a display with the best image quality I have ever seen. If I wanted to mar the image quality, I would pick up a 240Hz or better display for competitive games that require that clarity.

One thing to keep in mind is that, due to OLED response times, it seems faster than the 120Hz refresh rate would imply.

MistaSparkul

2[H]4U

- Joined: Jul 5, 2012

- Messages: 3,512

I tried BFI by itself when I first got the TV. It is indeed smooth, but the brightness drop is too severe, and most of us here don't like maxing out the brightness (OLED Light) anyway.

On top of that, if I remember correctly, it had a dreadful PWM-like flicker.

I am a high refresh rate evangelist, but the way I see it, I am using a display with the best image quality I have ever seen. If I wanted to mar the image quality, I would pick up a 240Hz or better display for competitive games that require that clarity.

One thing to keep in mind is that, due to OLED response times, it seems faster than the 120Hz refresh rate would imply.

If you were using BFI at 60Hz, it's going to flicker like crazy. At 120Hz the flicker shouldn't be noticeable; I even used BFI on High, which is supposed to be the worst for flickering, and couldn't really notice it at 120Hz. Also, I would say BFI isn't just for gaining a competitive advantage in multiplayer games. I would use it in really old single-player titles that completely lack any form of HDR, or in super easy-to-run side-scrollers where the side-to-side camera panning can greatly benefit from BFI giving the best clarity possible. Even top-down isometric RPGs can benefit from BFI when you pan the camera around the map: zero sample-and-hold blur. BFI is just a great option to have even if I don't use it very often, and BFI + VRR would've sweetened the deal even more, as long as I could maintain at least 90fps at all times.
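The brightness and flicker trade-off being discussed can be sketched with simple duty-cycle arithmetic. This is a minimal model, assuming the BFI mode shows the image for a fixed fraction of each refresh and black for the rest, with no brightness boost to compensate; the lit fractions and the 300-nit peak below are hypothetical placeholders, not LG's actual BFI settings.

```python
from dataclasses import dataclass


@dataclass
class BfiTradeoff:
    flicker_hz: float      # one dark period per refresh
    persistence_ms: float  # how long each frame is actually lit
    avg_nits: float        # time-averaged brightness


def bfi_tradeoff(refresh_hz: float, lit_fraction: float, peak_nits: float) -> BfiTradeoff:
    """Duty-cycle model of black frame insertion (assumption, not LG's spec)."""
    if not 0.0 < lit_fraction <= 1.0:
        raise ValueError("lit_fraction must be in (0, 1]")
    return BfiTradeoff(
        flicker_hz=refresh_hz,
        persistence_ms=1000.0 * lit_fraction / refresh_hz,
        avg_nits=peak_nits * lit_fraction,
    )


if __name__ == "__main__":
    peak = 300.0  # hypothetical full-persistence brightness in nits
    for hz in (60, 120):
        for frac in (1.0, 0.5, 0.25):  # hypothetical BFI strengths
            r = bfi_tradeoff(hz, frac, peak)
            print(f"{hz} Hz, lit {frac:.0%}: flicker {r.flicker_hz:.0f} Hz, "
                  f"persistence {r.persistence_ms:.2f} ms, ~{r.avg_nits:.0f} nits")
```

Under this model, 60Hz BFI puts the dark cycle at 60 Hz, which is where flicker is most visible, while 120Hz BFI halves persistence at the same lit fraction; in both cases, average brightness drops in direct proportion to how much of each refresh is black.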


elvn

Supreme [H]ardness

- Joined: May 5, 2006

- Messages: 5,311

Well I guess my hunch from back then about my CX feeling far more responsive than my X27 wasn't just placebo.

I guess it also goes to show that BFI isn't needed as much on an OLED as it is on an LCD, since the image is already so clean; the only limiting factor is the 120Hz refresh rate itself.

Well, there is response time trailing and blurring, which is a more mechanical thing, and there is sample-and-hold blur, which comes from the way our eyes and brains work. You are correct that OLED response time is beyond crisp. It's actually "too fast" for low frame rate content like 24p movies, so it shows stutter, whereas LCDs are slow enough to blend the stutter out a little. When gaming at high Hz on an OLED, that speed probably eliminates any of the response-time-related blur and trailing an LCD would have, leaving only sample-and-hold blur. I wouldn't say an LCD always has sample-and-hold blur PLUS response time trailing and blurring, though, because sample-and-hold blur can potentially mask those issues depending on how many pixels of blur it is (which, for whole-screen blur, is in effect related to how fast you are moving the viewport in 1st/3rd-person games at any given time).

So on an LCD screen, for example, it would depend on:

-how bad the response time was

-how low an fpsHz you were running at any given time in your game/frame-rate graph

-how fast the viewport is moving in a particular game at any given time
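The three factors above can be compared with a deliberately crude model: treat sample-and-hold smear as speed divided by frame rate, and response-time trailing as speed multiplied by the pixel transition time, then see which one is larger. Real perceived blur does not combine this simply, and the 12 ms (slow VA dark transition) and 0.2 ms (OLED) response times are hypothetical illustration values, not review measurements.

```python
def blur_components(speed_px_per_s: float, fps: float, response_ms: float) -> tuple[float, float]:
    """Return (sample_and_hold_px, response_trail_px) under a crude model.

    Assumption: both effects scale linearly with on-screen speed; the
    hold term uses full persistence (no BFI), the trail term uses the
    average pixel transition time.
    """
    hold_px = speed_px_per_s / fps
    trail_px = speed_px_per_s * response_ms / 1000.0
    return hold_px, trail_px


def dominant_blur(speed_px_per_s: float, fps: float, response_ms: float) -> str:
    """Name the component that would mostly mask the other."""
    hold_px, trail_px = blur_components(speed_px_per_s, fps, response_ms)
    return "sample-and-hold" if hold_px >= trail_px else "response time"


if __name__ == "__main__":
    speed = 1920.0  # hypothetical viewport pan speed in px/s
    panels = {"slow VA (hypothetical 12 ms)": 12.0, "OLED (hypothetical 0.2 ms)": 0.2}
    for name, response_ms in panels.items():
        for fps in (40, 60, 120):
            hold, trail = blur_components(speed, fps, response_ms)
            print(f"{name} @ {fps} fps: hold {hold:.1f} px, trail {trail:.1f} px "
                  f"-> {dominant_blur(speed, fps, response_ms)} dominates")
```

With these placeholder numbers, the slow VA panel's trailing is the bigger term at 120 fps but is outweighed by sample-and-hold smear at 60 fps and below, which matches the point that low frame rates can mask LCD response-time problems; on the OLED, sample-and-hold is the only meaningful term at every frame rate.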

From the RTings C1 review:

Because of the TV's fast response time, low frame rate content can appear to stutter since each frame is held on for longer. If it bothers you, motion interpolation can help.

The LG C1 can completely remove 24p judder, though, which is a different side effect from stutter. It does so when you enable cinema screen mode, which repeats each 24fps frame five times to match the 120Hz panel refresh rate exactly.
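The arithmetic behind the x5 point can be sketched by computing how many panel refreshes each film frame is held for. This is a simplified model, assuming the TV simply repeats whole frames with no interpolation; an uneven cadence (like the 2-3 pattern 24p needs on a 60Hz signal) is what produces judder, while 120/24 = 5 gives every frame the same hold time. The function names are illustrative only.

```python
def pulldown_cadence(content_fps: int, refresh_hz: int, frames: int = 4) -> list[int]:
    """How many refreshes each source frame is shown for (frame repetition only)."""
    cadence = []
    for i in range(frames):
        start = (i * refresh_hz) // content_fps        # first refresh showing frame i
        end = ((i + 1) * refresh_hz) // content_fps    # first refresh showing frame i+1
        cadence.append(end - start)
    return cadence


def is_judder_free(content_fps: int, refresh_hz: int) -> bool:
    """Cadence is even only when the refresh rate is an integer multiple."""
    return refresh_hz % content_fps == 0


if __name__ == "__main__":
    for hz in (60, 120):
        cadence = pulldown_cadence(24, hz)
        print(f"24p on {hz} Hz: cadence {cadence}, judder-free: {is_judder_free(24, hz)}")
    # Even when judder-free, each film frame is still held for 1/24 s,
    # which is what reads as stutter on a fast-response panel.
    print(f"hold per 24p frame: {1000 / 24:.1f} ms")
```

This separates the two effects from the review: the x5 cadence fixes judder (uneven holds), but every frame is still held for about 41.7 ms, so the stutter from OLED's fast response remains unless interpolation is used.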

From blurbusters.com:

Even instant pixel response (0 ms) can have lots of motion blur due to sample-and-hold

Your eyes are always moving when you track moving objects on a screen. Sample-and-hold means frames are statically displayed until the next refresh. Your eyes are in a different position at the beginning of a refresh than at the end of a refresh; this causes the frame to be blurred across your retinas.

Sample-and-hold blur will still happen, but at a solid 120fpsHz it is more of a soften blur, whereas at the baseline of a solid 60fpsHz it is a smearing blur. The lower the fpsHz and the faster the viewport movement (or movie panning), the worse it appears. So it happens to one degree or another across the whole viewport when moving it at speed; otherwise it's less obnoxious. In 3rd-person games with an orbiting tracking camera that moves while you move the FoV, it's usually much less aggressive. Isometric RPGs and full-map RTS games usually suffer less from it too, depending on the frame rate of the moving map and the speed it pans at by default (or that you customize). In my experience, spinning the FoV like a dervish at speed in a first-person shooter or a 3rd-person "dumb camera" action game/MMO produces the most blur. As you slow your movement arc (which in effect moves the entire game world around relative to you) and just move your weapon or reticle around, it doesn't happen, or at least not to that degree and not viewport-wide. Similarly, if you are flick-aiming from point to point like a gnat, you aren't really going to have much chance to see the soften (120fpsHz) or smearing (60fpsHz) blur of the viewport/game world spinning.

As that video shows, OLED removes from the equation any blur-exacerbating factors other than sample-and-hold, so 120fpsHz on an OLED could in actual practice appear a little less sample-and-hold blurred than an LCD at 120fpsHz, and it could feel a lot more responsive. That video is very impressive. You'll notice that Vincent did his tests at a solid 120fpsHz. At lower frame rates, sample-and-hold blur (to our eyes and brains, rather than to his tracking hardware) would be worse on both OLED and LCD. At some point the frame rate would be so low that sample-and-hold blur could overtake the LCD's response time trailing/blurring/ghosting and essentially mask it.

Roflcopter_Down

[H]ard|Gawd

- Joined: Jan 7, 2008

- Messages: 1,563

When streaming music to the C1 with Apple AirPlay or whatever it's called, is there a way to stop the TV from going into the firework screensaver? I ordered a service remote...

elvn

Supreme [H]ardness

- Joined: May 5, 2006

- Messages: 5,311

<...>At 120Hz the flicker shouldn't be noticeable and I even used BFI on High which is supposed to be the worst for flickering but couldn't really notice it at 120Hz.

I feel like it fatigues your (especially my) eyes even if you supposedly aren't consciously "seeing" it. People claim to "not really notice it", etc., as if it is borderline. While you might try to be oblivious to it, I don't think your eyes and brain are.

BFI + VRR would've sweetened the deal even more.

I don't know if they could do it without changing the rate of the black frame insertion, which would require the brightness to change on the fly in relation to that rate. Varying the rate could be like PWM, but tied to your varying frame-rate graph. Changing the brightness down/up to match, even if normalized to what we think we are seeing, could have a negative effect on our eyes/fatigue. I don't like the effect of BFI on my eyes by default, even at lower graphics settings (lacking VRR) or in simple games able to achieve a solid 120fpsHz, let alone with BFI running at lower Hz under VRR. VRR varying all over the place could make it even worse, depending on how they implemented it. They could do rolling scans independent of the refresh rate, but non-global (rolling) scanning can cause artifacts, so the end result probably wouldn't be great.
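The concern in this thread about brightness varying with frame rate can be illustrated with a hypothetical strobing scheme. The sketch assumes a fixed-length lit pulse per frame (to keep motion clarity constant) while VRR changes the frame interval, and contrasts it with the alternative of a fixed duty cycle. It does not describe how LG or anyone else actually implements BFI, and the 4 ms pulse, 50% duty cycle, and 300-nit peak are made-up values.

```python
def fixed_pulse_brightness(frame_rate_hz: float, pulse_ms: float, peak_nits: float) -> float:
    """Average brightness when each frame gets a fixed-length lit pulse.

    Hypothetical scheme: the panel is lit for pulse_ms per frame and dark
    for the rest of the (variable) frame interval.
    """
    frame_ms = 1000.0 / frame_rate_hz
    duty = min(pulse_ms / frame_ms, 1.0)  # cannot be lit longer than the frame
    return peak_nits * duty


def fixed_duty_persistence_ms(frame_rate_hz: float, duty: float) -> float:
    """The alternative: keep the duty cycle (brightness) fixed and let persistence vary."""
    return 1000.0 * duty / frame_rate_hz


if __name__ == "__main__":
    for fps in (120, 90, 60, 48):  # a VRR "frame rate roller coaster"
        nits = fixed_pulse_brightness(fps, pulse_ms=4.0, peak_nits=300.0)
        persist = fixed_duty_persistence_ms(fps, duty=0.5)
        print(f"{fps:>3} fps: fixed 4 ms pulse -> ~{nits:.0f} nits; "
              f"fixed 50% duty -> {persist:.1f} ms persistence")
```

Either way something visible moves with the frame rate: brightness in the first scheme, motion clarity in the second, and in both the flicker frequency tracks the frame rate. A real implementation would presumably have to compensate for one of these, which is the trade-off being speculated about above.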

For me, I'm prioritizing HDR games anyway, as you can tell from my replies, and hopefully Auto HDR will get better over time for a lot more games. (I'll also mess with the Special K HDR mod.)

------------------------------------------------------

I am a high refresh rate evangelist, but the way I see it, I am using a display with the best image quality I have ever seen. If I wanted to mar the image quality, I would pick up a 240Hz or better display for competitive games that require that clarity.

One thing to keep in mind is that, due to OLED response times, it seems faster than the 120Hz refresh rate would imply.

I agree wholeheartedly with most of this. I wouldn't say *require*, though. You can be just as competitive at 120fpsHz, especially in online games.

Online games' tick rates are at best 128 tick (7.8ms) and, in the worst tick-rate games, 20 to 22 tick (50ms). That is slow. Then they add another tick's worth of buffer (8ms to 50ms more), which combines to 16ms to 100ms total (for 128-tick and 20-tick servers respectively), in order to avoid adding another 250ms of latency on any network hiccup. So now you are at around a 62Hz to 10Hz rate, 16ms to 100ms depending on the game/tick rate. Then they add your ping time, say another ~40ms, and the server calculates everyone's actions with a "best guess" biased by the devs' netcode choices and "rewinds time" a bit, sending you that new game action "slice" state back. Meanwhile, you finally see that new interpolated "best guess" frame, and the fastest gamers take another 150ms to 180ms to react to it (up to 200ms).
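The latency chain described above can be added up in a few lines. This sketch follows the post's own simplifications (one tick of interpolation buffer, ~40 ms ping, 150 ms human reaction); real games use their own interpolation settings, so treat the numbers as illustrative. Note that 1000/128 is 7.8125 ms, so a tick plus one buffer tick is 15.625 ms (64 Hz); the post rounds this to 16 ms, hence its ~62 Hz figure. Display frame times at 120 Hz and 360 Hz are printed alongside for comparison.

```python
from dataclasses import dataclass


@dataclass
class LatencyChain:
    tick_ms: float                # server update interval
    tick_plus_buffer_ms: float    # tick + interpolation buffer
    effective_update_hz: float    # rate implied by tick + buffer
    before_reaction_ms: float     # ... + ping
    with_reaction_ms: float       # ... + human reaction time


def latency_chain(tick_rate: float, ping_ms: float, reaction_ms: float,
                  buffer_ticks: int = 1) -> LatencyChain:
    """Sum the online latency chain using the simplified model from the post."""
    tick_ms = 1000.0 / tick_rate
    tick_plus_buffer = tick_ms * (1 + buffer_ticks)
    before = tick_plus_buffer + ping_ms
    return LatencyChain(
        tick_ms=tick_ms,
        tick_plus_buffer_ms=tick_plus_buffer,
        effective_update_hz=1000.0 / tick_plus_buffer,
        before_reaction_ms=before,
        with_reaction_ms=before + reaction_ms,
    )


if __name__ == "__main__":
    for tick in (128, 64, 20):
        c = latency_chain(tick, ping_ms=40.0, reaction_ms=150.0)
        print(f"{tick:>3} tick: tick+buffer {c.tick_plus_buffer_ms:.1f} ms "
              f"(~{c.effective_update_hz:.0f} Hz), +ping {c.before_reaction_ms:.1f} ms, "
              f"+reaction {c.with_reaction_ms:.1f} ms")
    for hz in (120, 360):
        print(f"display frame time at {hz} Hz: {1000.0 / hz:.1f} ms")
```

Going from 120 Hz to 360 Hz shaves about 5.6 ms of frame time, which is small next to a chain of roughly 55 ms to 290 ms under these assumptions; that is the core of the argument made here.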

So in online games, as opposed to LAN tournaments (or even single-player gaming), outside of reducing the worst smearing blur during viewport movement, the benefits of very high Hz for scoring and reaction times are more marketing than anything, IMO. You can't react to what you haven't been delivered yet, and even then you are getting an interpolated/biased guess from the server as to what is happening, so it's a fuzzy rubber band to one degree or another depending on the server tick and netcode, and it's slower than your local action states. Even the Linus Tech Tips "tests" comparing monitors of different Hz were done locally, I believe, with bots/AI opponents, without even ~7ms of LAN latency, let alone the large online gaming latency chain I outlined above.

-------------------------------------------------------------------------

https://hardforum.com/threads/lg-48cx.1991077/post-1044802892

Command Execution Time = Current Server Time – (Packet Latency + Client View Interpolation)

Put into English, this means that once you pull the trigger and this information package gets sent to the server, the server goes back from the current server time (the time the trigger-pull package was received) by your ping plus your interpolation time. Only then is it determined whether the client hit the shot.
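The Command Execution Time formula and the rewind it describes can be sketched as follows. This is a simplified illustration, not Valve's implementation: it assumes the server keeps a short history of a target's positions, one snapshot per tick, and linearly interpolates between the two snapshots around the rewound time. The snapshot data and function names are hypothetical.

```python
import bisect


def command_execution_time_ms(server_time_ms: float, packet_latency_ms: float,
                              interp_ms: float) -> float:
    """Command Execution Time = Current Server Time - (Packet Latency + Client View Interpolation)."""
    return server_time_ms - (packet_latency_ms + interp_ms)


def rewound_position(history: list[tuple[float, float]], server_time_ms: float,
                     packet_latency_ms: float, interp_ms: float) -> float:
    """Target x-position at the rewound time, from sorted (timestamp_ms, x) snapshots."""
    t = command_execution_time_ms(server_time_ms, packet_latency_ms, interp_ms)
    times = [ts for ts, _ in history]
    i = bisect.bisect_right(times, t) - 1
    if i < 0:                      # older than the stored history: clamp
        return history[0][1]
    if i >= len(history) - 1:      # at or after the newest snapshot: clamp
        return history[-1][1]
    (t0, x0), (t1, x1) = history[i], history[i + 1]
    frac = (t - t0) / (t1 - t0)    # linear interpolation between snapshots
    return x0 + frac * (x1 - x0)


if __name__ == "__main__":
    tick_ms = 1000.0 / 64  # hypothetical 64-tick server
    speed = 0.25           # hypothetical target speed in units per ms
    history = [(k * tick_ms, speed * k * tick_ms) for k in range(8)]
    now = 100.0
    hit_check_x = rewound_position(history, now, packet_latency_ms=40.0, interp_ms=tick_ms)
    print(f"target now at x={speed * now:.1f}, shot checked against x={hit_check_x:.1f}")
```

The gap between where the target is "now" and where the shot is checked is the lag-compensation effect behind the "interped" and peeker's-advantage examples that follow; at LAN-level latency, the rewind shrinks to little more than the interpolation time.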

If you were playing on a LAN against other players on the same LAN, on a server on the same LAN, you'd get latency of 3ms to 7ms. Monitor marketing acts like you are being served game world states at 144Hz or 360Hz in online game competitions. That's just false advertising... (and most people run lower frame rates locally in most games besides).

Imagine you want to reach the boxes on top mid on Mirage while an AWP is perched in window. You jump out of the cover of the wall, fly and land safe behind the boxes. In the moment you land, the AWP shot goes off and you somehow die and end up right on the edge of the boxes, a good half meter away from where you stood on your screen. In the German scene, you would have just been “interped”, even though “being lag compensated” might be the more accurate term (I acknowledge that is way more clunky and less easy to complain about).

As the peeking CT moves into the gap of the double doors, his lag compensated hitbox and model are still behind the door, giving the Terrorist no real chance to respond. However, it is imperative in this scenario for the peeking player to actually hit (and in most cases kill) his opponent in the time it takes the server to compute all executed commands and the appropriate lag compensation. Of course, the showcased example is taken with a ping of 150ms, which is unrealistically high for most people, artificially lengthening that time.

Should any of you reading this have the good fortune to play on LAN one day, you should keep in mind that peeker's advantage is solely dependent on lag compensation, a big part of which is made up by a player's ping. With the typical LAN connection ping of 3-7ms, peeker's advantage is practically non-existent. Together with many other factors, this is one of the reasons why CS:GO has to be played differently in certain aspects on LAN than on the internet.

--------------------------

https://www.reddit.com/r/Overwatch/comments/3u5kfg/everything_you_need_to_know_about_tick_rate/

Note: In an example where two players shoot each other, and both shots are hits, the game may behave differently. In some games, e.g. CSGO, if the first shot arriving at the server kills the target, any subsequent shots by that player that arrive at the server later will be ignored. In this case, there cannot be any "mutual kills", where both players shoot within 1 tick and both die. In Overwatch, mutual kills are possible. There is a tradeoff here.

- If you use the CSGO model, people with better latency have a significant advantage, and it may seem like "Oh I shot that guy before I died, but he didn't die!" in some cases. You may even hear your gun go "bang" before you die, and still not do any damage.

- If you use the current Overwatch model, tiny differences in reaction time matter less. I.e. if the server tick rate is 64 for example, if Player A shoots 15ms faster than player B, but they both do so within the same 15.6ms tick, they will both die.

- If lag compensation is overtuned, it will result in "I shot behind the target and still hit him"

- If it is undertuned, it results in "I need to lead the target to hit them".
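The two hit-resolution models in the note above can be compared with a toy simulation. This is a simplified sketch, assuming both shots hit and the times given are server arrival times; real netcode has more rules, and an exact tie under the CSGO-style model is broken in favor of player A here purely for illustration.

```python
import math


def resolve_duel(arrival_a_ms: float, arrival_b_ms: float, tick_rate: float,
                 mutual_kills_allowed: bool) -> set[str]:
    """Return which players die when A and B both land a lethal hit.

    mutual_kills_allowed=False ~ CSGO-style: the first shot processed kills,
    and the dead player's later shot is ignored.
    mutual_kills_allowed=True  ~ Overwatch-style: shots in the same tick both count.
    """
    tick_ms = 1000.0 / tick_rate
    same_tick = math.floor(arrival_a_ms / tick_ms) == math.floor(arrival_b_ms / tick_ms)
    if mutual_kills_allowed and same_tick:
        return {"A", "B"}
    return {"B"} if arrival_a_ms <= arrival_b_ms else {"A"}


if __name__ == "__main__":
    # A fires 15 ms before B; both land inside the same 15.6 ms tick at 64 tick.
    print("CSGO-style:     ", sorted(resolve_duel(94.0, 109.0, 64, mutual_kills_allowed=False)))
    print("Overwatch-style:", sorted(resolve_duel(94.0, 109.0, 64, mutual_kills_allowed=True)))
```

Under the Overwatch-style rule, a 15 ms edge disappears whenever both shots fall in the same tick; under the CSGO-style rule it always decides the duel, which is why better latency carries more weight there.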


Stryker7314

Gawd

- Joined: Apr 22, 2011

- Messages: 867

I would love simultaneous BFI VRR, but I don't have time to experiment to try to get it working. I'm hoping someone else does though.

This would be awesome; I hope someone creates a solution to trick it via EDID or something.

elvn

Supreme [H]ardness

- Joined: May 5, 2006

- Messages: 5,311

When streaming music to the C1 with Apple AirPlay or whatever it's called, is there a way to stop the TV from going into the firework screensaver? I ordered a service remote...

I don't know if this helps your scenario or not but I use the "turn off the screen" feature which turns the oled emitters off. You can set that turn off the screen command icon to the quick menu so it's only 2 clicks to activate with the remote (I set mine to the bottom-most icon on the quick menu), or you can enable voice commands and then hold the mic button and say "turn off the screen". I wish there was a way to set it to one of the colored buttons so you could just hit one button but otherwise it works well enough. Clicking any button on the remote wakes up the emitters instantly. I usually hit the right side of the navigation wheel personally.

https://www.reddit.com/r/OLED/comments/j0mia1/quick_tip_for_a_fast_way_to_turn_off_the_screen/

While the emitters are off, everything is still running, including sound. This works great to pause games or movies and go AFK/out of the room for a while, for example. I sometimes cast TidalHD to my NVIDIA Shield in my living room from my tablet while using the "turn off the screen" (emitters) feature. That lets me control the playlists, find other material, pause, skip, etc. from my tablet with the TV emitters off when I'm not watching TV. You can do the same with YouTube material that is more about people talking than about anything visual; I do that sometimes when cooking in my kitchen, which is adjacent to my living room TV. You can probably cast or AirPlay to the TV's webOS itself similarly. Some receivers also do AirPlay/Tidal etc. directly to the receiver.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,512

<...>

I feel like it fatigues your (especially my) eyes even if you supposedly aren't consciously "seeing" it. People claim to "not really notice it", etc., as if it is borderline. While you might try to be oblivious to it, I don't think your eyes and brain are.

Well, I don't notice it and I don't get any fatigue even after hours of use, but that's just me. I have been using backlight strobing tech since the very first LCD monitor to do it, the Asus VG248QE back in 2013, when it was called LightBoost. I will say that over the years I have used backlight strobing less and less, because games have become so much more demanding that it's nearly impossible to maintain triple-digit frame rates at all times. But today's hardware is more than capable of running those older titles at 4K maxed settings and 100+ fps, so if I wanted to enjoy those older games with BFI, I could. And again, as someone who has used this tech for years, I can confidently say that it does not bother me or cause me any eye strain/fatigue. However, like you I am probably going to prioritize HDR now, because after doing those AutoHDR tweaks on my CX and playing around with it for over 2 weeks, games just look absolutely FANTASTIC with AutoHDR. So given the choice between BFI and AutoHDR, it's going to be AutoHDR. I've been having a blast playing Fallout 4 with some fan-made DLC mods and AutoHDR. Sure, maybe the DTM ON + Low Black Level tweak isn't the most "accurate" way of doing it compared to CRU or Special K, but when we are literally taking a game that never had any HDR to begin with and shoehorning HDR into it through AutoHDR, should we REALLY be debating "accuracy" here? I say just use whatever looks best to you, and for me these tweaks get a good enough picture 90% of the way there that I don't feel the need to go messing with CRU/Special K to get that extra 10%.


elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

like you I am probably going to prioritize HDR now because after doing those AutoHDR tweaks on my CX and having played around with it for over 2 weeks now, games just look absolutely FANTASTIC now with AutoHDR. So given the choice between BFI and AutoHDR, it's going to be AutoHDR. I've been having a blast playing Fallout 4 with some fan made DLC mods and AutoHDR. Sure maybe the DTM ON + Low Black Level tweak isn't the most "accurate" way of doing it compared to CRU or Special K, but when we are literally taking a game that never had any HDR to begin with and are now shoehorning HDR into it through AutoHDR, should we REALLY be debating "accuracy" here? I say just use whatever looks the best to you and for me these tweaks give me a good enough picture 90% of the way there that I don't feel the need to go messing with CRU/Special K to get that extra 10%.

......

Sounds good to me. The "problem" is not always just that the accuracy is a little "off" from what should be shown, but that changing the color volume scale and saturation on the fly can cut off the top and bottom ends prematurely, clipping or crushing and otherwise losing detail. Still, like you said, it's way better and more pleasing than SDR.

Hopefully Auto HDR will eventually get some simple slider tools to adjust things, like NVIDIA Freestyle or ReShade (or Special K) have.

nighty2k20

n00b

- Joined

- Dec 2, 2020

- Messages

- 28

Hi there,

Can you confirm or not confirm the following: I changed from an NVIDIA 3060 Ti to an AMD 6600 XT. For me text sharpness seems WAY better than with the NVIDIA card. I am using 4k@120Hz.

Thanks

Marco


MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,512

Hi there,

can you confirm or not confirm the following: I changed from a Nvidia 3060ti to a Amd 6600XT. For me text sharpness seems WAY better than with the nvidia card. I am using 4k@120Hz.

Thanks

Marco

Text sharpness is going to be affected by 3 things:

1. RGB 444 Chroma. Make sure the AMD card is set to output that format in the Radeon software; it might be outputting YCbCr 422 or 420.

2. PC Mode on the HDMI input you are using. Changing GPUs may have reset the input from PC mode back to a generic HDMI input, so you may have to go back and relabel it as a PC input.

3. ClearType settings.

nighty2k20

n00b

- Joined

- Dec 2, 2020

- Messages

- 28

Text sharpness is going to be affected by 3 things:

1. RGB 444 Chroma. Make sure you have the AMD card to output that format in the Radeon software, it might be outputting YCbCr 422 or 420.

2. PC Mode on the HDMI input you are using. Changing GPUs means that it was reset from PC mode back to Generic HDMI input so you will have to go back and relabel it as PC input.

3. Cleartype settings.

Display info (green button) says ycbcr444 10b 4L10

Changed from HDMI 1 to PC, no noticeable change

ClearType: menu elements that aren't affected by ClearType also seem way sharper

Will change back to the 3060 Ti tomorrow to do an AB BA comparison

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

Display info (green button) says ycbcr444 10b 4L10

Changed from HDMI 1 to PC, no noteable change

Cleartype: Also menu elements not affected by cleartype seem way sharper

Will change back to 3060tio tomorrow to have the AB BA effect

Like they suggested: renaming the input to PC puts it in 4:4:4/RGB. If it hadn't been on that setting before, it should have been pretty obvious in text. Text appears tattered when not in RGB mode, so I would triple check that. Pulling the HDMI cable out and plugging it back in should drop the PC/RGB named setting and make it look tattered. It also happens in graphics, not just text, but text is more obvious. Setting the name of the HDMI input on the TV to "PC" again will put it back to RGB and remove the tattered look.

https://www.rtings.com/tv/learn/chroma-subsampling
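As a rough illustration of why text looks tattered outside 4:4:4/RGB: in J:a:b notation, 4:2:2 shares one colour sample between two horizontal pixels and 4:2:0 shares one across a 2x2 block, so single-pixel colour detail such as ClearType fringes gets smeared. This is a minimal sketch that only handles the common schemes; `chroma_resolution` is a hypothetical helper.

```python
def chroma_resolution(width, height, scheme):
    """Chroma plane size for a J:a:b subsampling scheme such as "4:2:0"."""
    j, a, b = (int(part) for part in scheme.split(":"))
    if b not in (0, a):
        raise ValueError(f"unsupported scheme {scheme}")
    horiz = j // a              # pixels sharing one chroma sample along a row
    vert = 2 if b == 0 else 1   # b == 0: the second row reuses the first row's chroma
    return width // horiz, height // vert

for scheme in ("4:4:4", "4:2:2", "4:2:0"):
    print(scheme, chroma_resolution(3840, 2160, scheme))
```

At 4K, 4:2:0 leaves only a 1920x1080 colour plane, which is where the fringed look on fine text comes from.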

I'll also add a few other things to mess with:

..your resolution in the display settings (there can also be a TV version of some resolutions in addition to the PC version of the same resolution if you scroll down far enough; also make sure it's on 3840x2160 4k and not 4096x)

..any scaling options that changed the display scaling from 1:1 should be turned off

..your Windows desktop/text scaling settings (e.g. if you set text too small, or the default is too small for your PPI, it will look bad).

..make sure the new GPU doesn't have sharpness settings enabled by default (I think AMD kept a sharpness slider where NVIDIA dropped it at some point, and their "zero" sharpness might be 10; I would have to look it up)

..make sure your tv settings don't have any processing enabled in the details settings (incl sharpness), color settings, etc accidentally somehow. Use HGiG/game mode with no processing.

..That and your viewing distance being too close vs the ppi (getting a bad PPD) will show a more aggravated pixel structure that makes text look worse with more aggressive text fringing/aliasing but I assume you are at the same distance you were at with the nvidia GPU previously.

A good reference for settings here that I linked in this thread previously:

(Reddit) LG OLED gaming/PC monitor recommended settings guide


Roflcopter_Down

[H]ard|Gawd

- Joined

- Jan 7, 2008

- Messages

- 1,563

I don't know if this helps your scenario or not but I use the "turn off the screen" feature which turns the oled emitters off. You can set that turn off the screen command icon to the quick menu so it's only 2 clicks to activate with the remote (I set mine to the bottom-most icon on the quick menu), or you can enable voice commands and then hold the mic button and say "turn off the screen". I wish there was a way to set it to one of the colored buttons so you could just hit one button but otherwise it works well enough. Clicking any button on the remote wakes up the emitters instantly. I usually hit the right side of the navigation wheel personally.

https://www.reddit.com/r/OLED/comments/j0mia1/quick_tip_for_a_fast_way_to_turn_off_the_screen/

While the emitters are off everything is still running, including sound. This works great to pause games or movies and go afk/out of the room for awhile for example. I sometimes cast tidalHD to my nvidia shield in my living room from my tablet utilizing the "turn off the screen" (emitters) feature. That allows me to control the playlists, find other material, pause, skip etc from my tablet with the TV emitters off when I'm not watching tv. You can do the same with youtube material that is more about people talking than viewing anything. I do that sometimes when cooking in my kitchen that is adjacent to my living room tv. You can probably cast or airplay to the tv webOS itself similarly. Some receivers also do airplay/tidal etc directly to the receiver.

I mean the opposite...to keep the screen on, like a mad man, so it shows the album art and text of song playing. The screensaver turns on before one song finishes. I haven't used the service remote since it smells like someone dropped it in a vat of perfume, somehow. I am guessing the only solution is to void the warranty and disable the power/screen saving stuff?

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

I mean the opposite...to keep the screen on, like a mad man, so it shows the album art and text of song playing. The screensaver turns on before one song finishes. I haven't used the service remote since it smells like someone dropped it in a vat of perfume, somehow. I am guessing the only solution is to void the warranty and disable the power/screen saving stuff?

I don't recommend doing that. This is not the type of screen technology for use as a static billboard (e.g. using in a bar or terminal somewhere as a static marquee). You could do it of course but you'll be burning through your top 25% reserved brightness buffer for the wear-evening routine faster, diminishing the lifetime of your screen before permanent burn in starts to happen.

For individual 2.5 - 3 min songs that cycle to a different screen/background it probably wouldn't be so bad, especially if you set a named setting with dimmer settings on it for that usage scenario. But leaving a single static album art and interface up for an entire album or playlist would probably not be great lifespan-wise as a daily thing.

I use my tablet to cast TidalHD flac to the tv/receiver with the "turn off the screen" trick (just the emitters off). I can see the album art and all of the controls, playlist, whatever on my tablet. You can get a cheap android tablet for ~ $130, more if you want a better one but for simple things that would probably do.

At my PC I have other 4K VA screens flanking the OLED media/gaming "stage", so I can leave them on, though I usually have them sorted into thirds in a portrait window layout, or 2/3 + 1/3 windows.

If I wanted to do a billboard type display, I'd probably do an over/under setup with another screen above or below my OLED in my PC room, using a floor-to-ceiling pole mount with two VESA mounts on it. I'm probably going to do that someday in the long run, but not for a while yet.

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

~ 37" width on a 42" diagonal screen

~ 42" width on a 48" diagonal screen so the 42" screen is 2.5" shorter on each end.

~ 20.5" height on a 42" diagonal screen

~ 23.5" height on a 48" diagonal screen.

That 42" screen should be a little more manageable for some people but it's not that huge of a difference viewing distance wise vs PPD at the nearer 60 PPD end. It's still an appreciable difference of 6" at 80PPD where you are more or less sitting the same distance away as the screen's diagonal measurement.

60 PPD on a 42" 4k screen = 29.3"

60 PPD on a 48" 4k screen = 33.5"

80 PPD on a 42" 4k screen = 41.1"

80 PPD on a 48" 4k screen = 47"

Being a marginal reduction in view distances, I still don't see it as a size that will let people do the up-against-the-wall, bookshelf / upright-player-piano style desk setup if they are going to view it properly. I don't prefer that kind of setup personally, as long as I have the room and resources for a longer-view command center style setup. Still, saving 4" to 6" can make a difference in your room layout and could allow for deep desk mounting or more varied screen mounting options in general, as well as changing how it relates to other screens if you do a multi-monitor setup.
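The width/height and PPD distance figures above can be reproduced with a little trigonometry. This sketch assumes a flat 16:9 panel and the "average" PPD definition (horizontal pixels divided by the horizontal field of view in degrees), which is the method that matches the 64/48/32 degree figures listed below; the function names are hypothetical.

```python
import math

def screen_size(diagonal_in, aspect=(16, 9)):
    """Width and height (inches) of a flat screen from its diagonal and aspect ratio."""
    w, h = aspect
    diag_units = math.hypot(w, h)  # sqrt(16^2 + 9^2) for 16:9
    return diagonal_in * w / diag_units, diagonal_in * h / diag_units

def distance_for_ppd(diagonal_in, ppd, h_pixels=3840, aspect=(16, 9)):
    """Viewing distance (inches) at which average horizontal PPD equals `ppd`.

    Assumes PPD = horizontal pixels / horizontal field of view in degrees.
    """
    width, _ = screen_size(diagonal_in, aspect)
    fov_deg = h_pixels / ppd  # 60 PPD -> 64 deg, 80 PPD -> 48 deg at 3840 px
    return width / (2 * math.tan(math.radians(fov_deg / 2)))

for diag in (42, 48):
    w, h = screen_size(diag)
    print(f'{diag}": {w:.1f}" x {h:.1f}"', end="")
    for ppd in (60, 80):
        print(f', {ppd} PPD at {distance_for_ppd(diag, ppd):.1f}"', end="")
    print()
```

This prints roughly 36.6" x 20.6" with 29.3" / 41.1" for the 42", and 41.8" x 23.5" with 33.5" / 47.0" for the 48", in line with the numbers in the post.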

===================================

View Distances

----------------------

Below 60PPD

= below 20/20 vision; most everyone can see individual pixels obviously. You likely need strong anti-aliasing to hide artifacts (at a performance hit).

= requires aggressive text subsampling tweaking, experimenting with alternate forms of subsampling, etc but it will never look as good.

= jumbo pixels to your eyes, aliased graphics, bad text fringing

= bad viewing angle like sitting near to a wall

60 PPD+ is above the 20/20 vision threshold.

..Requires moderate anti aliasing (at a performance hit) and tweaking text sub-sampling.

..The minimum view distance you can use without a more aggressive pixel structure, with jumbo pixels resulting in pixelated and fringed graphics that AA struggles to compensate for and text subsampling can't compensate for enough.

..Still displays some more visible pixelization on desktop (or tv viewing) lacking AA or softening - though subsampling helps text look good enough so overall not bad looking even outside of game's AA.

..Not the best viewing angle to the extents of the screen for HUDs. It's pretty narrow.

..64 deg viewing angle at 4k.

80 PPD+

..moderate, slightly above the average visual acuity; few can see individual pixels obviously.

..Anti-aliasing is only necessary in medium- and high-contrast areas

..you still see some fringing where the contrasted areas mesh but can probably get away with more modest and less demanding AA levels or no AA depending on the game and how you feel about it

..approaching the same view distance as the screen's diagonal measurement this results in a much better viewing angle to the extents of the screen and HUD elements (more or less an equilateral triangle/pyramid viewing angle cone)

..48 degree viewing angle at 4k.

120PPD+

..better than 20/10 vision; no one can "see" individual pixels as an obvious/aggressive structure. Anti-aliasing is only necessary in very high-contrast cases if at all.

..pretty extreme and practically unusable distance for a PC on 4K screens (that will change with 8K screens someday, where the same PPD is reached at roughly half these distances or closer).

..more useful for living room/home theater room environment viewing distances where you watch media without AA and text subsampling

..32 degree viewing angle at 4k.

Viewing Angle regardless of PPD:

..in my opinion, the best case is a 45 - 50 degree viewing angle, so you can actually see the extents of the screen and the HUD elements, pointers, notifications, chat, etc. without turning away from center by a more extreme amount to see them.

..as a rule of thumb, you get more or less an equilateral triangle or pyramid viewing angle cone when your view distance nears the diagonal screen measurement.

=================================
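Going the other way, you can check where a given seat lands in the bands above by computing the horizontal viewing angle and average PPD at a distance. This is a sketch under the same assumptions as the distance figures (flat 16:9 panel, PPD = horizontal pixels / horizontal field of view); `fov_and_ppd` is a hypothetical helper.

```python
import math

def fov_and_ppd(diagonal_in, distance_in, h_pixels=3840, aspect=(16, 9)):
    """Horizontal field of view (deg) and average PPD for a flat screen at a distance."""
    w, h = aspect
    width = diagonal_in * w / math.hypot(w, h)
    fov_deg = math.degrees(2 * math.atan(width / (2 * distance_in)))
    return fov_deg, h_pixels / fov_deg

# A 48" 4K screen viewed from a distance equal to its diagonal, and from 31.5".
for dist in (48.0, 31.5):
    fov, ppd = fov_and_ppd(48, dist)
    print(f'48" at {dist}": {fov:.1f} deg, {ppd:.1f} PPD')
```

Sitting at the diagonal distance gives about 47 degrees and ~81 PPD (the 80 PPD band), while 31.5" gives about 67 degrees and ~57 PPD, just under 60. At a fixed distance, doubling the horizontal pixel count (8K) simply doubles the PPD in this metric.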


I bought an RX 6900 XT because I was getting sick of NVIDIA driver issues on Linux, only to discover that the HDMI 2.1 spec doesn't allow open source drivers (AMD on Linux) to implement it. So imagine my surprise when I can only get 4:2:0 at 120Hz. So, so dumb. Why is this a thing?
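Why exactly 4:2:0: without HDMI 2.1 FRL the link falls back to HDMI 2.0-style TMDS signalling, which tops out at a 600 MHz character rate. A back-of-the-envelope sketch, assuming the standard CTA-861 total timing of 4400x2250 for 3840x2160 @ 120 Hz (1188 MHz pixel clock), shows that 8-bit 4:2:0 is the only common format that fits. `tmds_rate_mhz` is a hypothetical helper, and real drivers may use other timings.

```python
# Assumption: CTA-861 total timing for 3840x2160 @ 120 Hz (active + blanking).
H_TOTAL, V_TOTAL = 4400, 2250
HDMI20_MAX_TMDS_MHZ = 600.0  # HDMI 2.0 maximum TMDS character rate

def tmds_rate_mhz(refresh_hz, fmt, bpc=8):
    """Approximate TMDS character rate (MHz) needed for a 4K signal."""
    pixel_clock = H_TOTAL * V_TOTAL * refresh_hz / 1e6  # 1188 MHz at 120 Hz
    if fmt == "ycbcr422":
        # HDMI carries 4:2:2 in a fixed 12-bit container at the pixel clock.
        return pixel_clock
    rate = pixel_clock * bpc / 8  # deep colour (10/12-bit) scales the rate up
    if fmt == "ycbcr420":
        rate /= 2  # 4:2:0 carries half the data per pixel of 4:4:4
    return rate

for fmt, bpc in (("rgb444", 8), ("ycbcr422", 12), ("ycbcr420", 8), ("ycbcr420", 10)):
    rate = tmds_rate_mhz(120, fmt, bpc)
    verdict = "fits" if rate <= HDMI20_MAX_TMDS_MHZ else "too fast"
    print(f"4K120 {fmt} {bpc}-bit: {rate:.1f} MHz -> {verdict}")
```

RGB needs 1188 MHz and 4:2:2 the same, while 8-bit 4:2:0 needs 594 MHz and squeezes under the 600 MHz limit; 4K60 RGB fits for the same reason.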

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,512

With the 48" C1 going for $1100 I think it's highly possible that the 42" C2 will get discounted to under $1000 by the end of next year. Guess I'll be replacing my CX with that.

Gatecrasher3000

Gawd

- Joined

- Mar 18, 2013

- Messages

- 580

Now do I buy a new 42" or get a used 48" CX from one of you people?

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

Considering what I wrote above, it's not really worth it for me to ante up again just to go from sitting 33.5" (60 PPD) to 38" - 48" (~80 PPD) away on the 48", down to ~41" on the 42".

PPD wise it's 4" to 6" difference resulting in 29.3" view distance at 60PPD, 41.1" view distance at 80PPD on a 42" 4k screen.

This might be of interest to someone though going in the other direction for a living room:

https://slickdeals.net/f/15439591-2...smart-webos-tv-oled77cxpua-2499?src=frontpage


GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

That 42" OLED will be the default gaming monitor. Hard to beat it with anything but insane refresh rate and hardware g-sync.

I'm waiting for it! Hopefully I can hold out till it's sub-$1k.

42" seems like the perfect size to me. While I love my 48CX, I do struggle with it in FPS mouse+keyboard games. My desk, deepest I could get, is 80cm/31.5". I have no issue with text or anything at that distance and as a productivity monitor it is amazing. Anything non keyboard/mouse I push my chair back. With the 42", FPS games should be just about perfect for me I think. I'll probably hold out for the discounts as well though.

Lateralus

More [H]uman than Human

- Joined

- Aug 7, 2004

- Messages

- 18,503

I haven't yet decided whether I'll "upgrade" to the 42" model yet. I'm perfectly comfortable with 48" at this point and love how it fills up my FOV for complete and total immersion. I did use a 40" for a while and loved it, but that was 6 years ago, and before I had used anything larger. It felt like the perfect size at the time but now I'd be afraid it'd feel small-ish coming from the 48".

I do have a 32" bedroom TV that I'd LOVE to replace with an OLED though.


Monstieur

Limp Gawd

- Joined

- Jun 10, 2011

- Messages

- 440

That 42" OLED will be the default gaming monitor. Hard to beat it with anything but insane refresh rate and hardware g-sync.

The OLEDs are effectively hardware G-SYNC, just not made by NVIDIA. They need LG's custom controller to drive the individual pixels with G-SYNC certified VRR, unlike LCD monitors from manufacturers who make half-baked VRR firmware for existing controllers. NVIDIA's hardware G-SYNC module just standardizes them all.


Lateralus

More [H]uman than Human

- Joined

- Aug 7, 2004

- Messages

- 18,503

Anyone update to firmware 4.30.35 or 4.30.40 yet? Any negative side effects?

Yes, as of this morning, and no, not that I've noticed yet. LG has been really good about not breaking previously working features on these, so I went ahead and took the plunge.

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

FYI for anyone interested in multi-monitor setups and window management etc.: DisplayFusion is 50% off right now, Black Friday deal.

FYI for anyone interested in multi-monitor setups and window management etc.: DisplayFusion is 50% off right now, Black Friday deal.

Nice, but I'm using the free Microsoft PowerToys, which includes FancyZones. It seems to work seamlessly lately, even with Windows 11. Any advantages to using DisplayFusion?

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

Nice, but I'm using the free Microsoft Powertoys which includes FancyZones. Seems to work seamlessly recently, even with Windows 11. Any advantages to using Displayfusion?

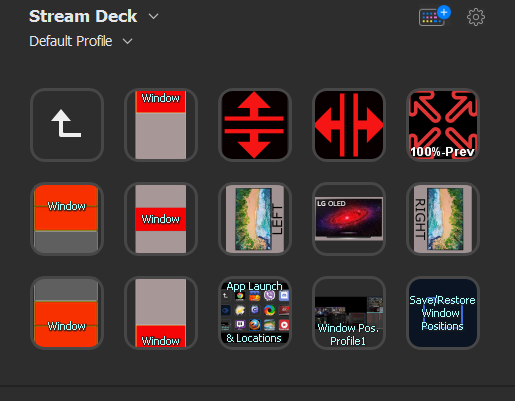

It has a large library of functions, and you can build your own window placement function by simply dragging and dropping a targeting reticle onto windows you have placed and sized. It also has saved window position profiles, where you can save your whole multi-screen window layout to several named profiles. There are user-submitted functions you can add too, and if you want to get tricky you can edit those to target different screen numbers or apps, or cobble together code from a few different functions. Even in its out-of-the-box state, with its included functions, it can do a ton of things. If you combine it with a Stream Deck via hotkeys and add all of the Stream Deck's function library, it's quite a command module.

I use displayfusion pro which has a window position save function where you can save window positions to one or multiple named profiles.

I also set my regularly used apps to displayfusion window moving functions so they all have their own "home" position. I set those each to their own hotkey in displayfusion and link those hotkeys each to their own streamdeck button with a little icon for each app.

I cobbled together some displayfusion user scripts from different functions so that each time I hit an apps button it:

--checks to see if the app is open or not, if not, opens it and moves it to set position.

--if it's open it checks to see if it's minimized.

--If it's open and not minimized, minimize it.

--If it's open and minimized, restore it to the set position.

... I can hit the same button a few times and get the particular app window back to the home position, or minimize/restore it, basically

... I also have a window position profile button on the streamdeck set to the displayfusion window position profile hotkey - so I can simply shuffle them all back at once without having to use the scripted function buttons.
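The per-app button logic above is a small three-state toggle. Here is a minimal sketch of that decision flow only; `FakeWindow` and `toggle_app` are hypothetical stand-ins, and this does not use or reproduce DisplayFusion's scripting API, which would be needed to actually launch, move, or minimize real windows.

```python
from dataclasses import dataclass

@dataclass
class FakeWindow:
    """Hypothetical stand-in for a real window handle."""
    minimized: bool = False
    position: tuple = (0, 0, 0, 0)

def toggle_app(open_windows, app, home):
    """One button press: launch and place, minimize, or restore and place the app."""
    win = open_windows.get(app)
    if win is None:                      # not open: "launch" it at its home position
        open_windows[app] = FakeWindow(position=home)
        return "launched"
    if not win.minimized:                # open and visible: minimize it
        win.minimized = True
        return "minimized"
    win.minimized = False                # open and minimized: restore to home position
    win.position = home
    return "restored"

windows = {}
home = (0, 0, 1280, 1440)  # x, y, width, height (made-up values)
print([toggle_app(windows, "browser", home) for _ in range(3)])
```

Pressing the same button three times prints `['launched', 'minimized', 'restored']`, and every restore snaps the window back to its home position.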

From a reply of mine regarding stream deck:

I just posted a bunch of things I do with it for regular system use outside of obs/streaming, about 3 replies ago. Any action or function you can trigger, toggles, multi-actions/functions.

I integrate it with displayfusion multi monitor / window management app via hotkeys for a lot of useful functions.

https://www.displayfusion.com/Features/Functions/

https://www.displayfusion.com/ScriptedFunctions/

There is also a growing library of useful streamdeck plugins you use directly in the streamdeck app:

https://apps.elgato.com/plugins

The big draw is tactile hardware buttons with customizable graphics that can change, for example when you toggle which sound device you are using on a multi-press, mute/unmute the mic, etc., but there are a ton of window management and other things you can do with it. I use it to launch and place every app in my 3-monitor setup, restore everything to a saved window position profile, etc. I use it to launch (and place) practically everything now. I have button icons to place the active window at any of a number of set locations and screen ratios on my 3 screens on the fly. I never have to drag or resize windows. I also launch Steam Big Picture or go back to the desktop version with a toggle button, have a terminate-and-relaunch Steam button, and launch other game stores/libraries. I pop apps or Windows system panels up and minimize/restore toggle-cycle them, etc. There is a ton you can trigger with these buttons, and the Elgato Stream Deck library has a lot of stuff already. They are also useful as the toolbox for things like Photoshop or GIMP or other graphics/video editors, streaming apps, etc. There are a lot of app integration plugins already on the plugin site I linked.


The OLEDs are effectively hardware G-SYNC, just not made by NVIDIA. They need LG's custom controller to drive the individual pixels with G-SYNC certified VRR, unlike LCD monitors from manufacturers who make half-baked VRR firmware for existing controllers. NVIDIA's hardware G-SYNC module just standardizes them all.

The Nvidia module is the most effective one. One thing is for sure: the Nvidia hardware module synchronizes frames down to 1 fps, LG's only down to 28 fps; below that you get the usual v-sync stutter.

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,311

The Nvidia module is the most effective one. One thing for sure, Nvidia hardware module starts synchronizing frames with 1fps, LG - with 28fps, below that you get the usual v-sync stutter.

As I remember it, the hardware G-SYNC module starts showing each frame twice below 30 fps, and even three times at extremely low frame rates, so when you dip that low the refresh rate is still at or near 60Hz. While still technically smooth, it will be a slideshow at those rates, what I like to call "frozen" frames compared to higher frame rates made up of unique frames. Anyway, that's how I remember it, unless G-SYNC has changed. FreeSync does have a lower limit, and some of the earlier ones were pretty bad at around 48Hz on the low end. There are FreeSync Premium screens that will duplicate frames at lower rates though, so that confuses the whole FreeSync thing.

https://www.gpumag.com/freesync-premium-pro/

One of these innovations is low framerate compensation (LFC), which addresses the framerate dropping below the monitor’s range. For example, if the FPS drops below the monitor’s 30Hz range, LFC will increase the monitor’s refresh rate with a consistent ratio. So, if the game is at 25 FPS, LFC will set the refresh rate to 50Hz and that will still prevent the gamer from being affected by screen tearing.
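The frame-repetition idea behind LFC (and the G-SYNC module's doubling/tripling described above) can be sketched as picking the smallest integer multiple of the frame rate that lands inside the display's VRR window. The 30-120 Hz window is an assumed example range, not the spec of any particular display, and real drivers may choose multipliers differently; `lfc_refresh` is a hypothetical name.

```python
import math

def lfc_refresh(fps, vrr_min, vrr_max):
    """Pick a refresh rate inside [vrr_min, vrr_max] by repeating each frame.

    Returns (multiplier, refresh_hz) using the smallest integer multiplier that
    lifts the effective refresh rate back into the VRR window.
    """
    if fps > vrr_max:
        raise ValueError("frame rate is above the VRR window")
    multiplier = max(1, math.ceil(vrr_min / fps))
    refresh = fps * multiplier
    if refresh > vrr_max:
        raise ValueError("VRR window too narrow for frame repetition")
    return multiplier, refresh

print(lfc_refresh(25, 30, 120))  # (2, 50): the example from the quoted article
```

The second check is why narrow VRR ranges (like the early ~48Hz-floor FreeSync screens) struggle: if no whole-number multiple of the frame rate fits between the minimum and maximum, frames cannot simply be repeated.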
