At least with the Xbox Series X, John from Digital Foundry says that firmware 04.30.10 on his CX allowed him to enable VRR and black frame insertion (BFI) at the same time. Better yet, it actually worked.

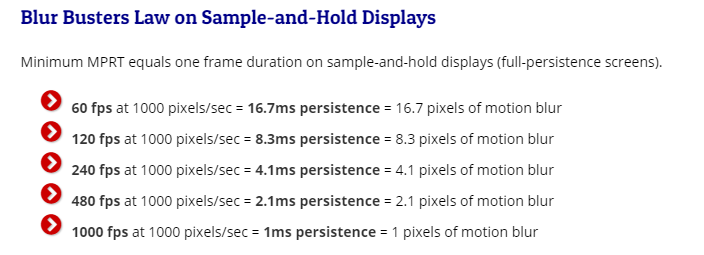

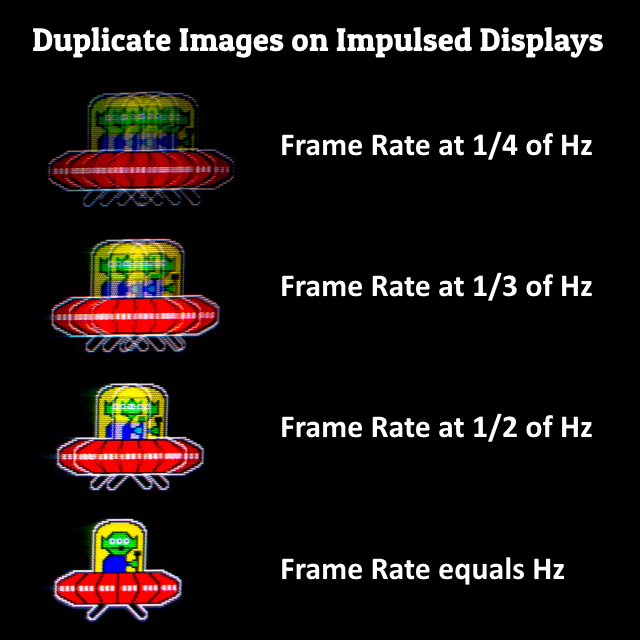

However, he could only do this with Dolby Vision enabled (not even HDR10 worked), so he suspects that VRR + BFI may not be an intentional feature. He also mentioned that you really need to keep the frame rate high, maybe 90fps or above, or the flicker gets bad (though different people obviously have different tolerances for flicker).

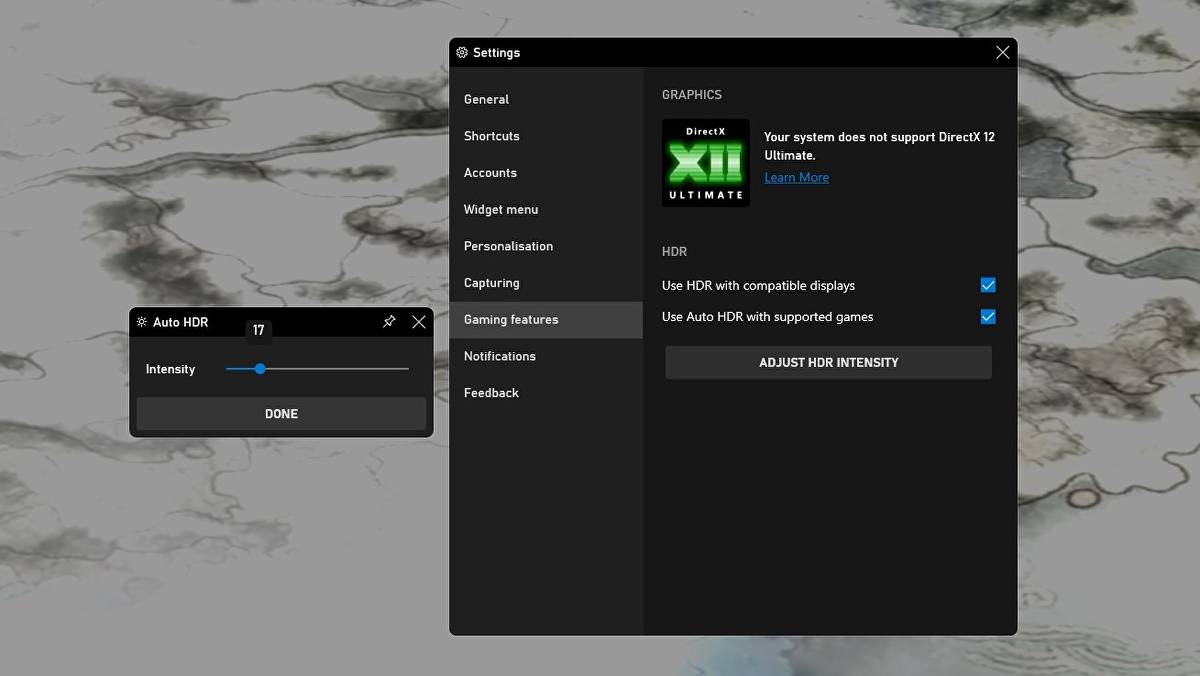

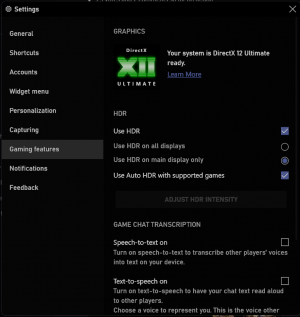

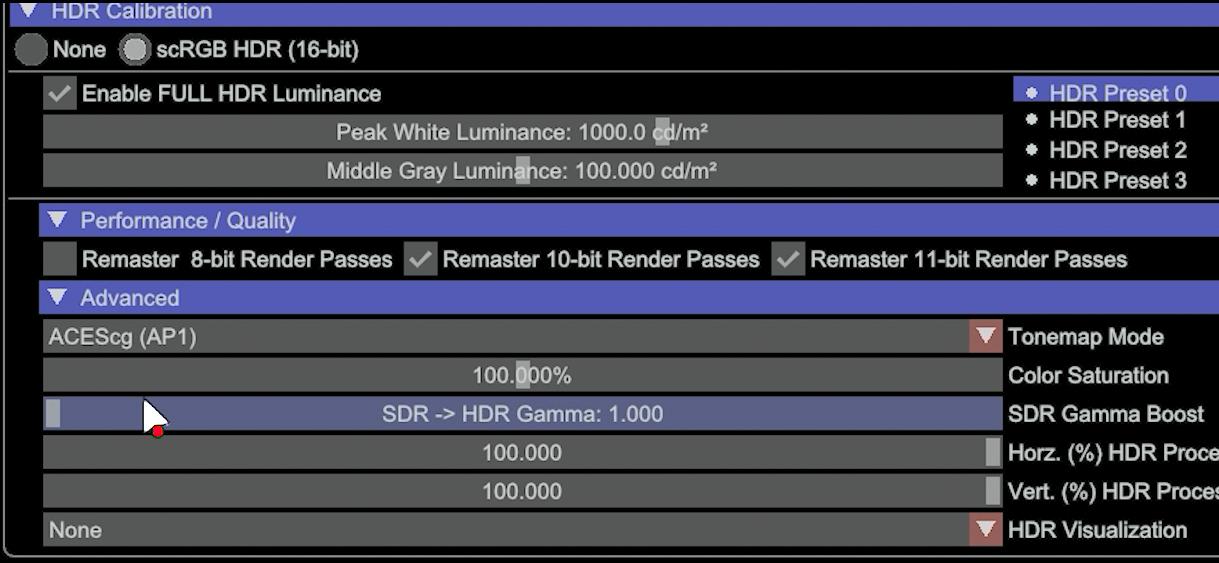

So now the question is whether we could enable VRR + BFI on a PC. Could a custom EDID made with CRU help here? I only have a 2019 OLED, so I can't test any of this myself.

EDIT: The real question is whether, possibly through EDID trickery, we could get VRR + BFI working withOUT HDR.

Digital Foundry video in question @ 42:31: