elvn

Supreme [H]ardness

Joined: May 5, 2006 · Messages: 5,286

Yeah, that's what I was getting at, but I don't think that is the case, at least not much past the reserved 25%, if at all. Actually, elvn, RTINGS did not manage to reduce peak brightness on their units, even the ones that suffered burn-in. But that was on older models; maybe recent sets let you eat away at peak brightness to increase lifespan. I'd prefer that personally, as I'm mostly consuming SDR content anyway. Ideally, let the user decide? IDK.

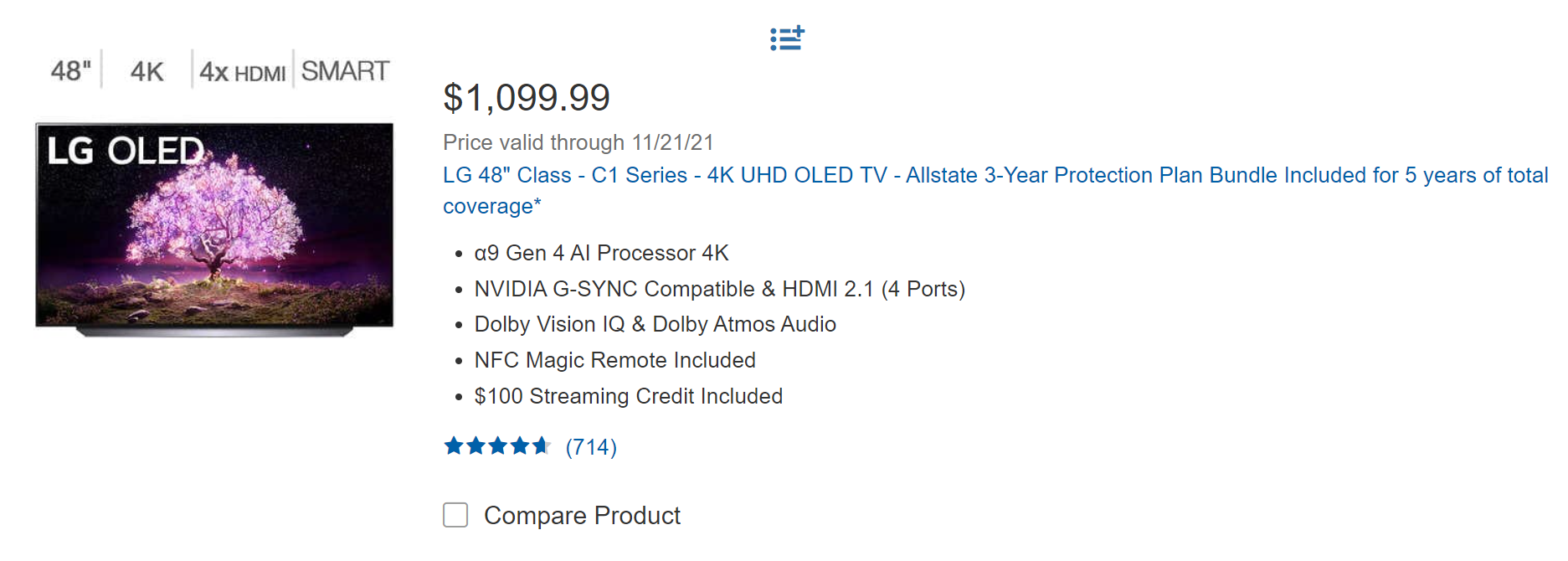

I think once you "melt" past your buffer, you will get permanent burn-in ghost images. If you only watch movies and media and game with the burn-in limiting features enabled, though, that buffer should last the lifetime of the set: maybe 5 years, though I've heard of people at 6 years with no burn-in.

Needless to say, I'm leaving all the protections on for the 77" I ordered for the living room, and I'll primarily use it for movies and YouTube, plus maybe a few console games. It's too pricey for me to treat as a short-term throwaway.

Five years from now, people will be upgrading to 8K TVs and will probably also be adopting lightweight micro-OLED glasses at 4K and higher resolutions to watch virtual screens in real space / mixed reality. Years after that, microLED panels will arrive too.

