Will LG provide fix for earlier models?

If I were to buy a screen right now, it would most likely be a 77" LG G3 for the media/home theater room. The 83" G3 doesn't have MLA this year.

OLED Dynamic Tone Mapping Pro allows the G3 and C3 to break the image up into 20,000 blocks, or zones. By contrast, the previous Alpha 9 Gen 5 processor found in last year's C2 model could only manage 5000 blocks.

These new processing features pale into insignificance when compared to the hardware upgrade that LG is bringing with the G3 (but not the C3). Called Micro Lens Array, it's basically a layer of tiny lenses that help improve the focus of the light that is being emitted by the OLEDs, essentially meaning that more of the light that's generated actually makes it to your eyes, apparently resulting in a big increase in brightness over last year's already-very-bright G2. Like the G2, the G3 also boasts a brightness-boosting heatsink that its C-series sibling has to do without.

As an example from one of their new panels, LG Display’s third-generation 77” 4K OLED TV panel based on META technology has a total of 42.4 billion micro lenses, approximately 5,117 micro lenses per pixel, which work to emit even the lost light due to internal reflections to produce the clearest and most detailed pictures. Keep in mind this number of MLA lenses will vary depending on panel size and pixel density.

As far as we know, this would only stop ASBL from kicking in during HDR scenes where the APL doesn't change much. I don't think it would fix the ASBL problem in desktop use, say when you have spreadsheets open and no moving content.

Will LG provide fix for earlier models?

Don't know as of yet.

That's more applicable to the original question of this thread for sure. That would still be nice for media and gaming though. It famously darkens scenes in a few popular shows where it shouldn't, which is annoying. If you step back a step in the player/service and then resume, it will be light again, but having to do that to kickstart the brightness, even in very rare scenes, sucks.

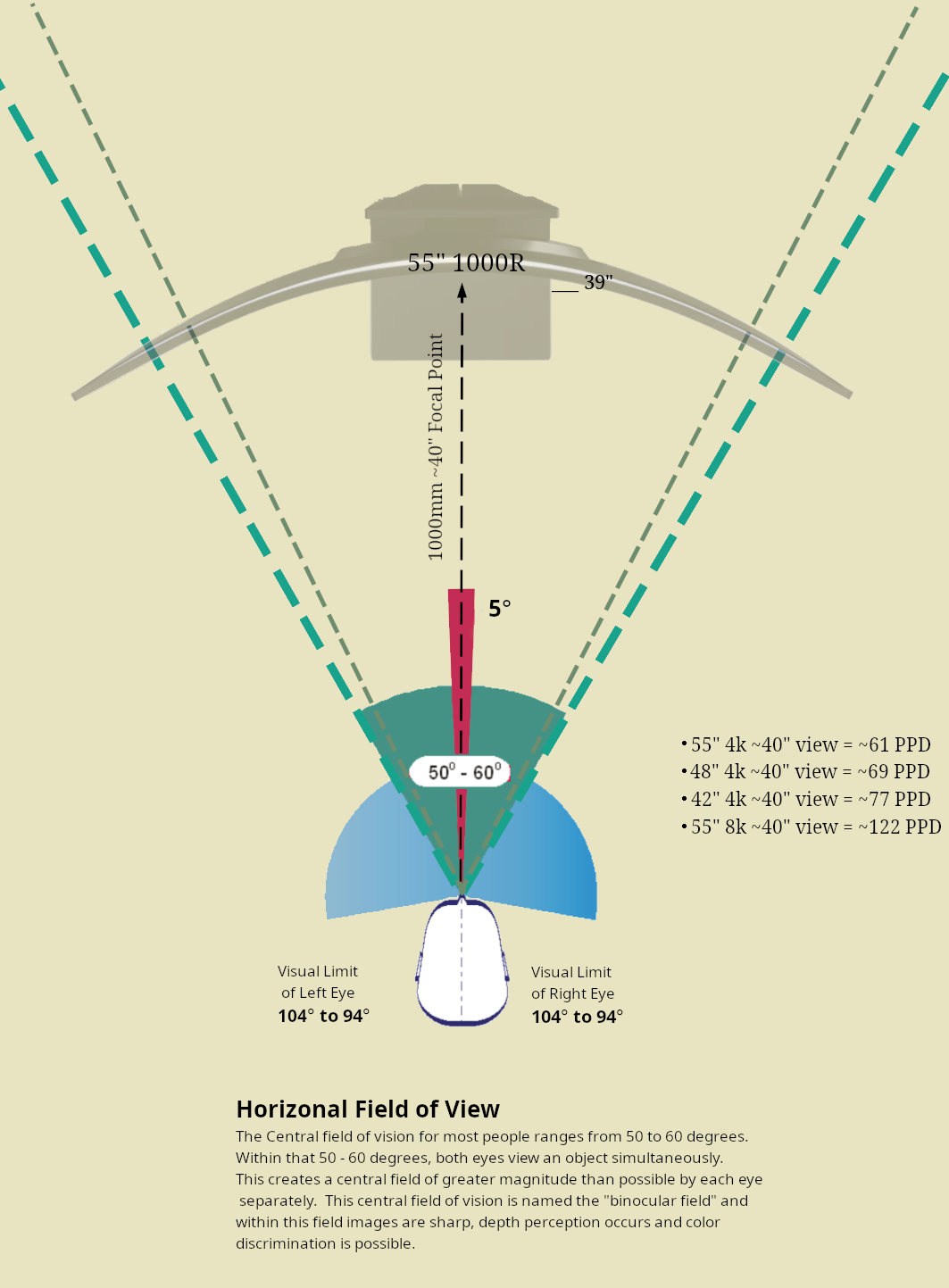

Using 60 PPD 4k as a reference point for what I consider a minimum here (optimally, not always possible obviously) :

. . . . . . . . . . . . . . . . . . . . . .

98" 4k screen at ~ 69" away has the same PPD and viewing angle and looks the same as

80" 4k screen at ~ 56" away

77" 4k screen at ~ 54" away (60PPD, 64deg viewing angle) ~ > 4.5'

65" 4k screen at ~ 45" away

55" 4k screen at ~ 39" away ~ > 3ft 3"

48" 4k screen at ~ 34" away

42" 4k screen at ~ 29" away

31.5" 4k screen at ~ 22" away

27" 4k screen at ~ 19" away

. . . . . . . . . . . . . . . . . . . . . .
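For anyone who wants to check the list above, here is a rough sketch of the math. It assumes a flat 16:9 panel viewed head-on from the center, and it takes PPD as horizontal pixels divided by the horizontal viewing angle (so an average across the screen). The function names are made up for illustration.

```python
import math

def screen_width_in(diagonal_in, aspect_w=16, aspect_h=9):
    """Physical width in inches of a flat panel with the given diagonal."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)

def viewing_angle_deg(diagonal_in, distance_in):
    """Horizontal angle the screen spans for a viewer centered on it."""
    half_width = screen_width_in(diagonal_in) / 2
    return math.degrees(2 * math.atan(half_width / distance_in))

def ppd(h_pixels, diagonal_in, distance_in):
    """Average pixels per degree across the screen width."""
    return h_pixels / viewing_angle_deg(diagonal_in, distance_in)

# (diagonal, viewing distance) pairs in inches from the list above, all 4k
TABLE = [(98, 69), (80, 56), (77, 54), (65, 45), (55, 39),
         (48, 34), (42, 29), (31.5, 22), (27, 19)]

for diag, dist in TABLE:
    print(f'{diag}" at {dist}": {ppd(3840, diag, dist):.1f} PPD, '
          f'{viewing_angle_deg(diag, dist):.0f} deg viewing angle')
```

Every pair in the list should land at about 60 PPD and about a 64 degree viewing angle, which is why they all look the same pixel-density wise.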

Just something to consider with very large screens if you value the tighter pixel grid look most people think of when they think of a 4k screen. That said, people used 720p tvs and 1080p tvs for years so it could depend on how much you value picture quality pixel density wise.

There will probably be more 8k tvs starting to hit the market in 2024 - 25 also even though they stalled out on those for 2023.

Most people with a 70"+ screen are probably not setting it up closer than 5' away but you might be surprised.

I very much see 8k as a useless gimmick.

If you get close enough to a 4k screen that it fills your field of view, I very much doubt blockiness is an issue.

I have vision corrected to 20/20+ and sit ~18-24" (depending on seating position) from my 42" 4k screen and have no issues at all with pixel density. I mean, sure aliasing is present in rendered titles, but that's kind of a red herring, as aliasing will never be solved by higher resolution. You need some sort of smoothing or anti-aliasing effect to eliminate that.

I used to sit at the same distance from a curved 48" 4k screen, and that was admittedly a little much.

The only way I can see 4k having insufficient pixel density is in corner cases where people sit close enough that they focus on only a small portion of the screen. This may be a real use case, but for movies and games it probably isn't.

Those obsessed over pixel density would almost certainly cringe at me being ~1m away from a 50" display.

There's nothing wrong with it, honestly. I would be on a 43" monitor but every single 43" LCD panel is flawed so I went up instead of down. Pixel density doesn't cross my mind when either gaming or working.

Not everything has to achieve the pitch of my phone. It's frankly a waste of resources to do so, especially at 40"+.

It's just me but I truly do not understand the obsession with super high PPI displays. I have a 12.9" iPad Pro so yes I do know what a good high PPI display looks like. Does it look super crisp and clear? Absolutely. Would I say it is a definite must-have for my desktop monitor? Not even close, just feels like quite a waste of resources indeed.

It's mostly an issue with MacOS. MacOS's scaling system is complete garbage where integer scaling is really the only thing that does not cause extra blur. On Windows, 4K @ 150% ("looks like 2560x1440") looks nice and sharp; on MacOS it's a little bit more blurry. I can easily test this out just by swapping inputs between my Mac and PC. MacOS instead seems to just naively do "target resolution * 2", so 150% scaling renders at 5120x2880, which is then downscaled to native res.
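To put numbers on the MacOS scaling behaviour described in this post, here is a small sketch. It only models the "target resolution * 2" rule as the post states it; the function names are hypothetical and this is not any Apple API.

```python
from fractions import Fraction

def backing_resolution(looks_like, factor=2):
    """Resolution the desktop is rendered at for a given 'looks like' size."""
    w, h = looks_like
    return (w * factor, h * factor)

def downscale_ratio(looks_like, native, factor=2):
    """Ratio of native panel width to the rendered width (1 means pixel-exact)."""
    backing_w, _ = backing_resolution(looks_like, factor)
    return Fraction(native[0], backing_w)

NATIVE_4K = (3840, 2160)

for looks_like in [(1920, 1080), (2560, 1440)]:
    ratio = downscale_ratio(looks_like, NATIVE_4K)
    kind = "1:1, no resampling" if ratio == 1 else "resampled, some softening expected"
    print(looks_like, "->", backing_resolution(looks_like), "ratio", ratio, kind)
```

Under that rule, "looks like 1920x1080" maps pixel for pixel onto a 4k panel, while "looks like 2560x1440" has to be squeezed by a non-integer 3/4, which would fit the extra blur.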

If you get close enough to a 4k screen that it fills your field of view, I very much doubt blockiness is an issue.

You're not wrong, but neither were the people using 1080p screens when they said that about 1440p ones, or 1440p screens when they said that about 4k ones. Hell, probably 800x600, 1024x768

...don't see the immediate need to waste GPU power on that for my large desktop displays though.

InnoCN 32M2V is the way to go. I replaced my LG CX with this as my primary display and for the price it's as good as it gets. It went as low as $799 on Amazon, which matches the historical low of the LG 42 C2. For $799 you will not find a better 32" monitor out there, period. It isn't OLED so there's no fear of burn-in, and it's 4K at 32 inches with RGB subpixels so text clarity is AMAZING. Build quality actually feels rather excellent, but of course there will always be haters out there who don't even own the monitor but just wanna rag on the fact that it's a Chinese brand so it must be built poorly and cheaply by default, lol, as if displays from Samsung, Acer, etc. don't have any build quality problems themselves.

Isn't that a 60hz display?

Heh, no doubt. Resolution increases for me have been about more screen real estate though, not an attempt to get a pixel density equivalent to a phone/tablet.

I've found legitimate usefulness increase as screens have gotten bigger and I can fit more work (or game!) on the screen at once.

Higher PPD than what I have doesn't increase usefulness for me, personally.

For me the equivalent of dual 2560x1440 screens (or 5120x1440) without scaling is basically as much desktop space as I really need for anything I do.

But my complaint with that setup was that it could be sharper for text, which is why I'm looking forward to the Samsung 57" 7680x2160 or dual 4K screen. I'll just end up scaling it to the equivalent of 5120x1440.

I read enough text that it started to bug me that reading on my 12.9" iPad Pro or 16" Mac was nicer than doing it on my desktop systems.

Wow... and here I've been since 2014 using 4k at 28" size the most but also having tried 24, 27, and 32 inch screens and always been thinking 8k (or higher than 4k) would be great! More ppi = sharper image, scaling works fine and if I want more desktop room I could always buy a larger 8k screen then if desired.

I'd love 8k at 32" ideally, as I sit about 24" from my screen on my desk. I'm surprised the thought of that is eliciting yawns here.

My 24" 4k was by far the best due to sharpness, but the physical size was just too small for me.

Just how clear do you want text to be?

4k is fine for text at 28" for me... It's the detail in games I want, especially distance detail. I also like the higher ppi for video and photo editing, as well as sculpting and hand painting textures (I'm an indie game dev by trade).

Perhaps calling 8K useless or a gimmick is overly harsh. Sure, there are definitely benefits to having higher resolution, but is it really worth it? Just how clear do you want text to be? I get it, sharp crisp text looks nice, but pushing over 32 million pixels for that alone just seems totally not worth it IMO. 8K is probably an even less noticeable upgrade in gaming/movies. There are simply too many diminishing returns past a certain point. Oh and let's not forget, the smaller the pixels, the harder it's going to be to push more brightness for HDR...so anyone who wants better HDR but also wants smaller pixels, yeah that sounds kinda counterintuitive. I'll take the bigger pixels with better HDR performance over the opposite.

IMO for 32", 6K is more than enough and 8K won't improve the situation much. 8K would be more useful in the 42-55" sizes. It's entirely pointless on something like a 70+ inch TV when the viewing distance for that needs to be so high that you won't be able to tell 4K from 8K anyway.

Yea an 8k 50" would be awesome.

Just how clear do you want text to be?

the smaller the pixels, the harder it's going to be to push more brightness for HDR...so anyone who wants better HDR but also wants smaller pixels

Ultimately I'd want the pixels so small that we didn't have to rely on edge smudging hacks anymore to hide how bad the pixel structure really looks but that's asking too much currently. The 2d desktop's graphics and imagery get no edge smudging compensations either typically. People doing graphics/photo/art work always want the highest PPD they can get because they are working in 2D imagery. The higher the PPD, the less staircase fringing on contrasted edges, the less non-standard subpixel layouts will affect how things look to your eyes, the less obvious DLSS/Frame amplification edge artifacts will be, etc. Larger perceived pixel sizes, larger problems.

But for now I was talking about something like a 55" 1000R 8k dream screen of mine which would "only" be around 122 PPD. That would look sweet but it's nowhere near overkill PPD wise imo. You can test this by taking a current 4k screen and stepping back from it far enough. That gives an idea in regard to pixel sizes - not viewing angles and screen real-estate obviously. You'd probably scale the default text size up a bit to get back to around "magazine article size" to your eyes, which would give the edges more resolution/pixels to round edges with. A much finer piece of graph paper to color in squares on to make your fonts with. You'd probably have to do your text sub sampling customization all over again too since the edges would be different.

42" 4k screen at 65" view distance = 55" 8k screen at 40" view distance = ~ 122 PPD

48" 4k screen at 74" view distance = 55" 8k screen at 40" view distance = ~ 122 PPD

55" 4k screen at 85" view distance = 55" 8k screen at 40" view distance = ~ 122 PPD
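The equivalences above can be reproduced by going the other way: pick a target PPD and solve for the viewing distance. This is a minimal sketch under the same assumptions as before (flat 16:9 panel, centered viewer, PPD averaged across the width; the 1000R curve mentioned above is ignored). The function name is made up for illustration.

```python
import math

def distance_for_ppd(h_pixels, diagonal_in, target_ppd, aspect_w=16, aspect_h=9):
    """Viewing distance in inches at which a flat screen averages target_ppd."""
    width = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    # The screen has to span this many degrees to hit the target PPD
    angle_deg = h_pixels / target_ppd
    return (width / 2) / math.tan(math.radians(angle_deg / 2))

for label, h_pixels, diag in [("42in 4k", 3840, 42), ("48in 4k", 3840, 48),
                              ("55in 4k", 3840, 55), ("55in 8k", 7680, 55)]:
    print(f"{label}: {distance_for_ppd(h_pixels, diag, 122):.0f} in for ~122 PPD")
```

The 4k screens should come out at roughly the 65", 74" and 85" distances listed, and the flat 55" 8k at roughly 39", close to the 40" used above.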

My previous laptop was a 15.6" 4k screen. At 18" view to 24" view it was 90 to 121 PPD depending on what I was doing with it. Looked great.

The qdLED FALD LCD Samsung 8k screens can do 2000+ nits right now, but they and their 4k version that does 2000+ nits both suffer from aggressive ABL due to the heat, even though they are using LEDs and not OLEDs. If they used more boxy housings and heatsinks with active cooling they'd probably operate better. Just mentioning this because there is already a 2000+ nit 8k FALD screen on the market.

micro OLED screens in upcoming gens of VR screens can go extremely bright supposedly too and they are tiny. In VR their PPD is low however since it's right near your eyeballs, even when 8k per eye/lens. Just saying there can be very tiny pixels even on some types of oled tech that can go extremely bright, (even if subtracting the effect of it looking even brighter from having the VR screens so close to your eyeballs).

. .

I find it silly that I can run a hot tub, pool filter (I own neither of those, just saying), multiple high powered gpu rigs with inefficient and overclocked gpus between desktop and laptop, surround systems and amplifiers, etc... I can buy multiple screens instead of one for a multi-monitor array, but they don't want me to be able to buy a single screen with double the power use. I don't live in Europe but it may affect the mfg's offerings.

They've gone after a number of appliances already. Vacuum cleaners, ovens, microwaves etc. Overall it's just one approach to reduce energy usage - if manufacturers are given free rein then they might never prioritize power efficiency on stuff like this.

Yea an 8k 50" would be awesome.

I'd be curious to see what this looks like. I'd almost certainly scale everything to 200% for the 4k equivalent real estate but the sharpness would be a sight to see. It is legitimately the resources that are the concern though. If I'm content with 4k at 50", is it worth pushing four times the number of pixels for the sake of sharpness? That, and the sharpness itself would only work for vectors - raster elements would be softly scaled (unless nearest neighbour scaling can be forced) and I'm not a fan of that.

I reserve final judgement until I see it in action for myself.

Ya it would look nice but until we have GPUs 4 times as fast as a 4090 I'm not going near 8k lol.

"With a 8k 55" screen you'd get something like 49" wide x 28" tall. That would be like having a quad of four 28" 4k screens. I'd love that kind of thing for desktop/app + media use outside of gaming, and with games I could put whatever size resolution 1:1 (or AI upscaled) within that I wanted. Anything from full screen 4k upscaled via DLSS quality AI upscaling to 8k (and perhaps frame amplification tech as it matures) - DLSS which benefits more from higher starting resolutions as a foundation to work from and has it's own AA .. or running a 32:10 or 21:10 3840x based resolution for some games AI upscaled (to 7680x 2400 / 7680x3200 across the whole screen width) or even running a 1:1 pixel 4k screen space, or 5k, or 6k, letterboxed or tiled/windowed. It would be like having both a big 8k screen useful for some things full screen (with AI upscaled 4k content) + a wall of resolution and high PPD to be tiled or letterboxed however I wanted to instead of using a monitor array with bezels."

I'm sure it's going to look great. But again, is it worth it just for the sake of "muh sharpness"? If I had 2 options for my next display, one that uses DP 2.1 to keep 4K resolution but push refresh rates to 500Hz, while the other opts for 8K 144Hz instead, and the 8K display suffers from either lower HDR brightness or the same HDR brightness but at much higher heat and power consumption to do so, I know which display I would go for. There will be those who go for the other option of course, just giving my 2 cents.

Oh yeah I'm in complete agreement. As things stand I think the downsides of the idea outweigh the positives and I'm perfectly content with what I've got.

AI upscaling looks good, and better the higher the base rez you start from.

For games and videos, yes. I'm not sure I want AI upscaling (or any sort of scaling that isn't vector or nearest neighbour) on the desktop though.

https://www.rtings.com/tv/reviews/samsung/s95c-oled#test_608

Aside from fringing and not supporting DV, this is the best gaming TV until the A95L comes around.

"the G3 can hit a peak brightness of 1,470 nits."

"The ability of the G3 to surpass 1,000 nits brightness is key, because that is more or less the level at which most modern HDR movies are mastered for home viewing. So any TV that can display images at over 1,000 nits should be able to reproduce the source material exactly as the director intended, without compromising its brightest scenes."

The improved brightness in the LG G3 OLED TV is due to the introduction of Micro Lens Array technology, which incorporates a layer of minuscule lenses that direct more of the light created by the OLED pixels towards the viewer. So, it gives LG a way to increase brightness without squeezing more out of its OLED panels.

LG Display, the subsidiary of LG that produces its OLED displays, has already confirmed that MLA is being used with its third-generation OLED panels, which it calls “META” OLED. However, until now, LG Electronics had not confirmed if the G3 specifically would be using that panel. Now, it has done so, joining Panasonic and Philips, who will also use META panels in their flagship OLED TVs (the Panasonic MZ2000 and Philips OLED908).

However, there’s a caveat, as not all G3 OLED TVs will come with the META panels. "The hardware that's in each size of the G3 differs, and in some sizes, MLA is part of that hardware solution,” Seperson explained.

Seperson didn’t officially state which sizes would get the META panel, but he didn’t really need to either. What he said is that the 55-, 65- and 77-inch G3 TVs will be “70% brighter” than last year’s model,

If you decouple it from the desk and mount it separately on a stand or wall mount, etc., and have the room, the upcoming 55-inch LG G3 with its heatsink and micro lens array will be competitive performance-wise, if not surpassing, though it will be expensive at like $2500 USD at release.

https://www.hdtvtest.co.uk/n/LGs-G3-to-use-MLA-tech-but-only-in-55-65-and-77-inch-models

..

https://www.forbes.com/sites/johnar...icing-and-availability-for-its-2023-oled-tvs/

The problem with WRGB is that the higher its brightness gets, the more washed-out its colours get. You can notice it clearly in a side-by-side comparison against quantum-dot based IPS/VA/OLED displays. And I don't think MLA is a solution for that. The goal for this year's META + MLA WRGB is to beat QD-OLED in brightness just so they can say they have the brightest OLED TV in 2023, in that department only. They don't take other factors like colour coverage into consideration.