TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,762

Multiple reviewers saying to finish the main story before going after side stuff. Includes before and after NG+.

From "It's kind of obvious there is a contract / agreement, but we don't know the specifics." to "guesses" in just a few posts... And people wonder how all this trash gets started and repeated?

just guesses.

Great, time to mess with Bethesda's 3 fov settings again.

It seems a lot of the same console commands from FO4 and Skyrim will work for Starfield.

https://www.ign.com/wikis/starfield/Cheats:_Codes,_Console_Commands,_and_Item_IDs

Read that tweet this morning about this. Going to personally give it a month for any bugs to be worked out.

Multiple reviewers saying to finish the main story before going after side stuff. Includes before and after NG+.

Always a safe bet.

Read that tweet this morning about this. Going to personally give it a month for any bugs to be worked out.

In this day and age, if you keep screaming about something even though you have no proof or evidence, that means it must be true! People like to join in on things when usually 90% of the time the people doing the screaming haven't even touched the product, because they read it on the internet.

from "It's kind of obvious there is a contract / agreement, but we don't know the specifics." to "guesses" in just a few posts... And people wonder how all this trash gets started and repeated?

Fuck that.

Read that tweet this morning about this. Going to personally give it a month for any bugs to be worked out.

If you got time to lean, you've got time to clean.

Shit! The boss is in the thread, everyone look like you're working.

lol in another 2 hours I'm on vacation and have 5 days off. Bring on Starfield!

Shit! The boss is in the thread, everyone look like you're working.

Nice. I'm a sucker who signed up for holiday OT, but hey, it's triple time at least lol.

lol in another 2 hours I'm on vacation and have 5 days off. Bring on Starfield!

Turn off SMT. You will get at least a small performance boost.

This is what I am interested in.

I know my Threadripper 3960x is aging, but I'm hoping it will get me through this title with 60fps minimums.

I wonder if it uses lots of threads?

If AMD was so bullish, the game would have launched with FSR 3! This is their highest profile partnership in over a year.

"By all accounts," you mean by rumors you heard online? Guess you missed Frank Azor directly addressing this exact rumor?

Edit: "AMD claims there’s nothing stopping Starfield from adding Nvidia DLSS" https://www.theverge.com/2023/8/25/22372077/amd-starfield-dlss-fsr-exclusive-frank-azor

When that "story" came out, I was shocked that AMD would do that. I called an AMD competitor contact that I have had for 20 years. Long story short, "It is all bullshit, AMD does not do that." Called a couple others, same answer. That story was a plant by NV IMO to make AMD the bad guy, and now it all makes sense.

In this day and age, if you keep screaming about something even though you have no proof or evidence, that means it must be true! People like to join in on things when usually 90% of the time the people doing the screaming haven't even touched the product, because they read it on the internet.

Gotta love the times.

[Frank Azor, AMD gaming marketing head] He admits that — in general — when AMD pays publishers to bundle their games with a new graphics card, AMD does expect them to prioritize AMD features in return. “Money absolutely exchanges hands,” he says. “When we do bundles, we ask them: ‘Are you willing to prioritize FSR?’”

Starfield is bundled with AMD GPUs/CPUs so it sounds like a contractual agreement between Bethesda and AMD.

But without the full details we don't know why DLSS hasn't been implemented, just guesses.

Exactly. And to be to the point, AMD effed this up too by not addressing it through the end of the development phase. AMD left the door open for NV to run with that story. IMO.

I tend to think of it as both FSR and DLSS take developer time to implement.

Most titles are always rushing towards a deadline to try to hit the market.

I interpret (but could be wrong) AMD's line about prioritizing FSR to mean that when there is a time crunch at the end they will do FSR first, and allow Nvidia and Intel to get their specific technologies implemented "when there is time".

And even if you aren't an AMD fan, this approach kind of makes sense because if the developer goes for FSR first, the game launches with scaling that - while it may not be optimized for Nvidia hardware - works on all GPUs, whereas if they instead prioritized DLSS, scaling would not work on non-Nvidia GPUs.

Read that tweet this morning about this. Going to personally give it a month for any bugs to be worked out.

I hope the controls are re-configurable, unlike FO4 where I had to make an AutoHotkey script just to play the game.

Agreed. I also think AMD dropped the ball to not include FSR 3 with Starfield. I think that would have been a good PR move.

Exactly. And to be to the point, AMD effed this up too by not addressing it through the end of the development phase. AMD left the door open for NV to run with that story. IMO.

To think Nvidia could fix everything by scooping up a few of these lucrative partnerships and give "80+%" of PC gamers the "correct" experience.

Resources at NV going away from gaming IMO, and it looks pretty shitty to let Starfield RTX owners "suffer"

Yup, probably working on FSR3 right now or after fixing release bugs. It's new tech and they have multiple platforms to juggle.

I tend to think of it as both FSR and DLSS take developer time to implement.

Most titles are always rushing towards a deadline to try to hit the market.

I interpret (but could be wrong) AMD's line about prioritizing FSR to mean that when there is a time crunch at the end they will do FSR first, and allow Nvidia and Intel to get their specific technologies implemented "when there is time".

And even if you aren't an AMD fan, this approach kind of makes sense because if the developer goes for FSR first, the game launches with scaling that - while it may not be optimized for Nvidia hardware - works on all GPUs, whereas if they instead prioritized DLSS, scaling would not work on non-Nvidia GPUs.

When that "story" came out, I was shocked that AMD would do that. I called an AMD competitor contact that I have had for 20 years. Long story short, "It is all bullshit, AMD does not do that." Called a couple others, same answer. That story was a plant by NV IMO to make AMD the bad guy, and now it all makes sense.

Resources at NV going away from gaming IMO, and it looks pretty shitty to let Starfield RTX owners "suffer" due to that if that is what NV lets the story be. Instead we get "AMD locked us out of Starfield." And I was just told that perf is looking suspect in the NV vs AMD camp as well. I bet we get another "It's AMD's fault," if perf comparisons end up being "bad" for NV. Guess we will see soon enough.

View attachment 595203

Fallout 4 has action mapping, you're just limited to what keys you can bind.

I don't remember having to do that, but man that was years ago at this point.

I don't remember having to do that, but man that was years ago at this point.

Hey now I'm left handed too, but maybe early age PC use made me somewhat ambidextrous when it comes to using a mouse/keyboard.

I'm a lefty, so the keypad is my go-to for controls; couldn't rebind anything to it.

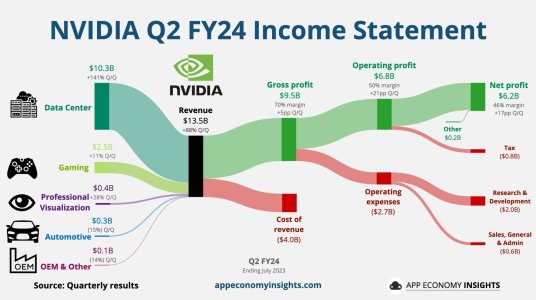

I see what you are saying about loss from gaming, but that is Cost of Revenue for the entire pipeline, not just gaming.

It seems almost unbelievable that at the outrageous prices they have been charging lately, they predict a loss from gaming (if I am reading that chart right).

But yeah, the money is in AI and machine learning for sure. Those guys will spend thousands or even tens of thousands for a GPU without batting an eye.

IMO, it's Nvidia's own fault. Their drivers have notably more CPU overhead than AMD's. And in some of these new games which only use a couple of CPU threads, or games which are CPU intensive in general, Nvidia cards can get choked out in CPU limited scenarios. High end cards like 4080 and 4090 don't see their full potential on the best CPUs. And mid-range parts can also suffer if the player is using a CPU which is a generation or two old.

And I was just told that perf is looking suspect in the NV vs AMD camp as well. I bet we get another "It's AMD's fault," if perf comparisons end up being "bad" for NV. Guess we will see soon enough.

Hey now I'm left handed too, but maybe early age PC use made me somewhat ambidextrous when it comes to using a mouse/keyboard.

Not gonna lie, when I see those left handed mice, they look like alien devices to me.

I've been using computers since I was a kid (41 now) but I'm too retarded to use the mouse with my right hand. I am not ambidextrous at all.

IMO, it's Nvidia's own fault. Their drivers have notably more CPU overhead than AMD's. And in some of these new games which only use a couple of CPU threads, or games which are CPU intensive in general, Nvidia cards can get choked out in CPU limited scenarios. High end cards like 4080 and 4090 don't see their full potential on the best CPUs. And mid-range parts can also suffer if the player is using a CPU which is a generation or two old.

Depends, it just may not be possible in some kinds of engines. There are tasks you just cannot split out in parallel. As I said, it's easy to say "they should just do it because we have the CPUs," but that doesn't mean it is so easy to do.

But with your avg gamer having at least 6c/12t, I would think at least 4 threads wouldn't be too much to ask for?

Especially since we've had dual cores since 2005 and quads since 2006.

I think they have had more than enough time to do it. Speaking of which, there are more than a few games that can take advantage of at least 8 threads.
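To put rough numbers on the "some tasks just cannot be split out in parallel" point: Amdahl's law says that if a fraction p of the work can be spread across n threads, the best-case speedup is 1 / ((1 - p) + p / n). Here is a minimal Python sketch of that formula. The function name and the parallel fractions are made up for illustration; they are assumptions, not measurements of Starfield or any real engine.

```python
def amdahl_speedup(parallel_fraction: float, threads: int) -> float:
    """Best-case speedup predicted by Amdahl's law.

    parallel_fraction: share of the work that can run in parallel (0.0 to 1.0).
    threads: number of hardware threads the parallel part is spread across.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if threads < 1:
        raise ValueError("threads must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part always takes the same time; only the parallel part shrinks.
    return 1.0 / (serial_fraction + parallel_fraction / threads)


if __name__ == "__main__":
    # Hypothetical workloads: half parallel vs. mostly parallel.
    for p in (0.5, 0.9):
        for n in (2, 4, 8, 12):
            print(f"p={p:.1f} threads={n:2d} speedup={amdahl_speedup(p, n):.2f}x")
```

If half of the frame work is stuck on one thread, the speedup can never reach 2x no matter how many cores you throw at it, which is why "we have the CPUs" does not automatically translate into more fps.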

After reading about performance, no IMO.

Hmm, should I even try to run Starfield on a 4790k.

I also think AMD dropped the ball to not include FSR 3 with Starfield.

Yup, probably working on FSR3 right now or after fixing release bugs. It's new tech and they have multiple platforms to juggle.

5pm PST today.

When is it supposed to unlock on Steam w/ the 5 day early access super premium deluxe version?

But with your avg gamer having at least 6c/12t, I would think at least 4 threads wouldn't be too much to ask for?

Especially since we've had dual cores since 2005 and quads since 2006.

I think they have had more than enough time to do it. Speaking of which, there are more than a few games that can take advantage of at least 8 threads.

Hmm, should I even try to run Starfield on a 4790k.