erek

[H]F Junkie

- Joined: Dec 19, 2005

- Messages: 10,875

https://www.tomshardware.com/news/nvidia-subwarp-ray-tracing-performance

Yesterday, Nvidia added DLDSR in the new drivers. It's an AI-assisted version of DSR that supposedly lets you downscale with a trivial performance loss. The example they show is downscaling 1620p to 1080p.

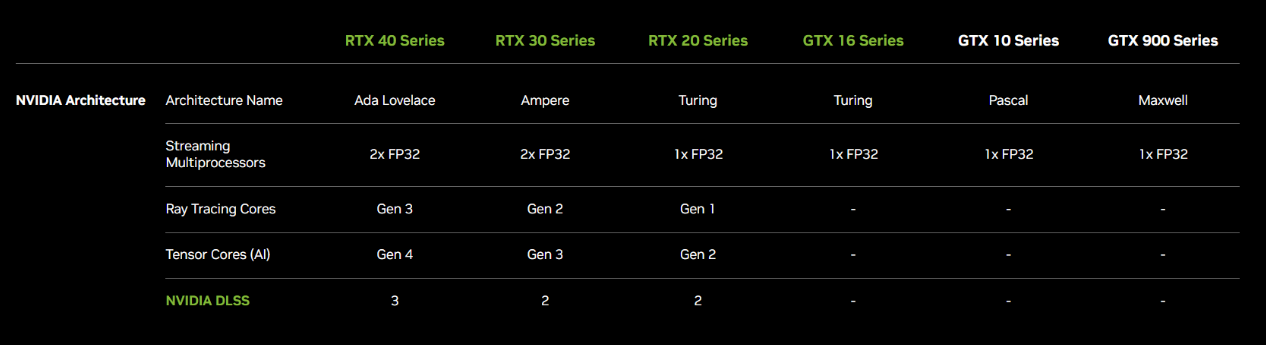

https://www.nvidia.com/en-us/geforc...&ranSiteID=kXQk6.ivFEQ-8vx6zqxUyqtoey5TKKE6mA

I am trying exactly that, and it doesn't seem to be working. It looks just like regular DSR and gives me a big performance hit, just like DSR. Actually, regular DSR is smoother for me. With DLDSR, the frametimes are jacked up and it gives me a slight rubber-banding effect.
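For context on the performance hit: rendering at 1620p and downscaling to 1080p means the GPU renders 2.25x as many pixels as native 1080p (1.5x per axis), which lines up with the 2.25x DL scaling factor in the driver. Here is a quick Python sketch of the arithmetic. It assumes standard 16:9 resolutions (2880x1620 and 1920x1080), and the function names are just for illustration.

```python
# Minimal sketch: how many extra pixels get rendered when downscaling 1620p to 1080p.
# Assumes 16:9 resolutions: 2880x1620 (render) and 1920x1080 (display).

def pixels(width: int, height: int) -> int:
    """Total pixel count of a width x height frame."""
    return width * height


def scale_factors(render: tuple[int, int], display: tuple[int, int]) -> tuple[float, float]:
    """Return (per-axis scale, total pixel-count scale) from display to render resolution."""
    per_axis = render[1] / display[1]  # ratio of vertical resolutions
    total = pixels(*render) / pixels(*display)  # ratio of total pixel counts
    return per_axis, total


render_res = (2880, 1620)   # 1620p at 16:9 (assumed)
display_res = (1920, 1080)  # native 1080p

per_axis, total = scale_factors(render_res, display_res)
print(f"per-axis: {per_axis:.2f}x, pixels: {total:.2f}x")
```

Running it prints `per-axis: 1.50x, pixels: 2.25x`, so the card is doing roughly 2.25 times the shading work before the downscale, regardless of which scaling filter is used.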


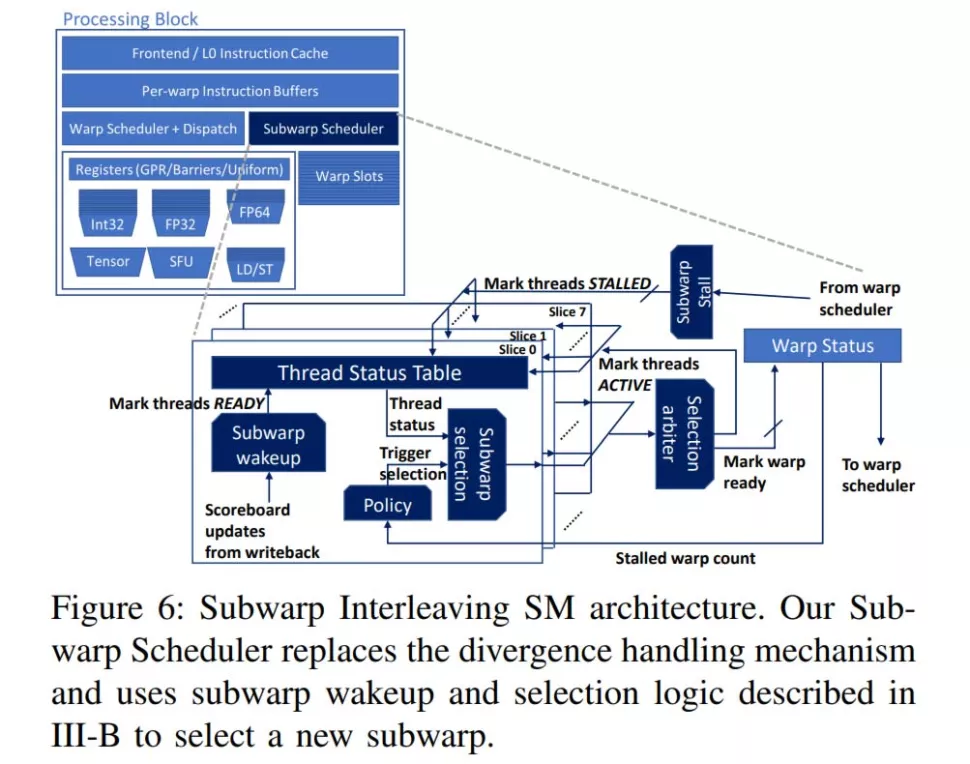

Nvidia GPU Subwarp Interleaving Boosts Ray Tracing by up to 20%
