elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,304

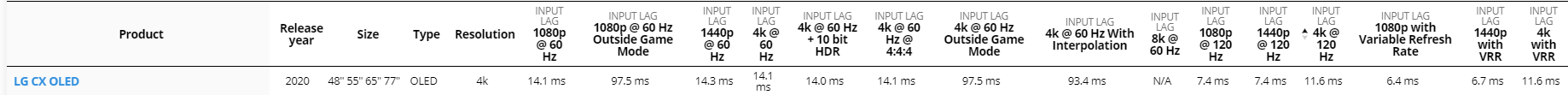

Everyone buying the C9, E9, B9, and CX will have HDR capability: 10-bit for movies especially, since they aren't 120Hz or 4:4:4 chroma (though 24fps x5 multiplies cleanly into 120Hz, so that can be useful). We will also get HDR in games, even at 4K 120Hz 4:4:4 10-bit HDR, and even on the CX, assuming Nvidia allows 10-bit over HDMI at least on its 3000 series (and also assuming that series will have HDMI 2.1). The next gen of HDMI 2.1 consoles will have HDR too.
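To put rough numbers on the 4K 120Hz 4:4:4 10-bit case and the 24fps x5 point, here is a minimal back-of-the-envelope sketch. The function names are made up for illustration, and the figures cover active pixels only: blanking intervals and link encoding overhead are ignored, so real link requirements are somewhat higher than what this prints.

```python
from fractions import Fraction

# Samples carried per pixel for each chroma format
# (one luma sample plus the averaged share of two chroma samples).
SAMPLES_PER_PIXEL = {
    "4:4:4": Fraction(3),
    "4:2:2": Fraction(2),
    "4:2:0": Fraction(3, 2),
}

def active_gbps(width, height, hz, bits_per_channel, chroma="4:4:4"):
    """Uncompressed active-pixel data rate in Gbit/s.

    Assumption: blanking and encoding overhead are NOT included.
    """
    bits_per_second = width * height * hz * bits_per_channel * SAMPLES_PER_PIXEL[chroma]
    return float(bits_per_second) / 1e9

def repeats_per_frame(content_fps, refresh_hz):
    """How many refreshes each source frame occupies (a whole number means an even cadence)."""
    return Fraction(refresh_hz, content_fps)

if __name__ == "__main__":
    print(f"4K 120Hz 4:4:4 10-bit: {active_gbps(3840, 2160, 120, 10):.2f} Gbit/s")
    print(f"4K 60Hz 4:2:0 10-bit:  {active_gbps(3840, 2160, 60, 10, '4:2:0'):.2f} Gbit/s")
    print(f"24fps on 120Hz: {repeats_per_frame(24, 120)} refreshes per frame")
    print(f"24fps on 60Hz:  {repeats_per_frame(24, 60)} refreshes per frame")
```

Even before overhead, the 4K 120Hz 4:4:4 10-bit figure is well above HDMI 2.0's 18 Gbit/s link rate and below HDMI 2.1's 48 Gbit/s, which is why that mode hinges on HDMI 2.1 at both ends. 24fps on 120Hz comes out to a whole number of refreshes per frame, while on 60Hz it comes out to 5/2, which is where uneven pulldown cadence comes from.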

The only reason we are arguing about the visual leap in quality from HDR is that a few people started saying HDR was a gimmick. For some reason, people are trying to ignore the huge visual benefits that a good HDR display has with available quality HDR material and upcoming HDR content, compared to monitors that lack real HDR color volume. So if anything, it was a sub-thread within this thread claiming no merit to HDR, calling it a gimmick, that received a flood of detailed testing examples, quotes from experts, visual examples including color temperature maps (stills and videos) of popular movies using HDR color mapping, and user experiences, including from owners in this thread.

A lot of people have said HDR is a bigger visual benefit than 4K even. To me it's just another one of those growing pains. Certain people dug their heels in and tried to ignore the benefits of 16:9 over 4:3, 1440p and later 4K over 1080p, 120Hz over 60Hz, and G-Sync/VRR over no variable refresh rate... also DVD over SD, 1080p over DVD, and 4K video content and displays... not needing a computer in the house was another one, not needing a separate internet service line, not needing a cellphone, etc. I'm sure there will be some people who resist the move to AR in the future too. The world will move on without you.

