HDTVtest review of the CX.

edit*

I thought the rtings would have already been on here, guess not.

We have the same tv. Sony x800d 43" here, using it as my current monitor. What's it like to make such great choices in life and have such good taste?

-----------------------------------

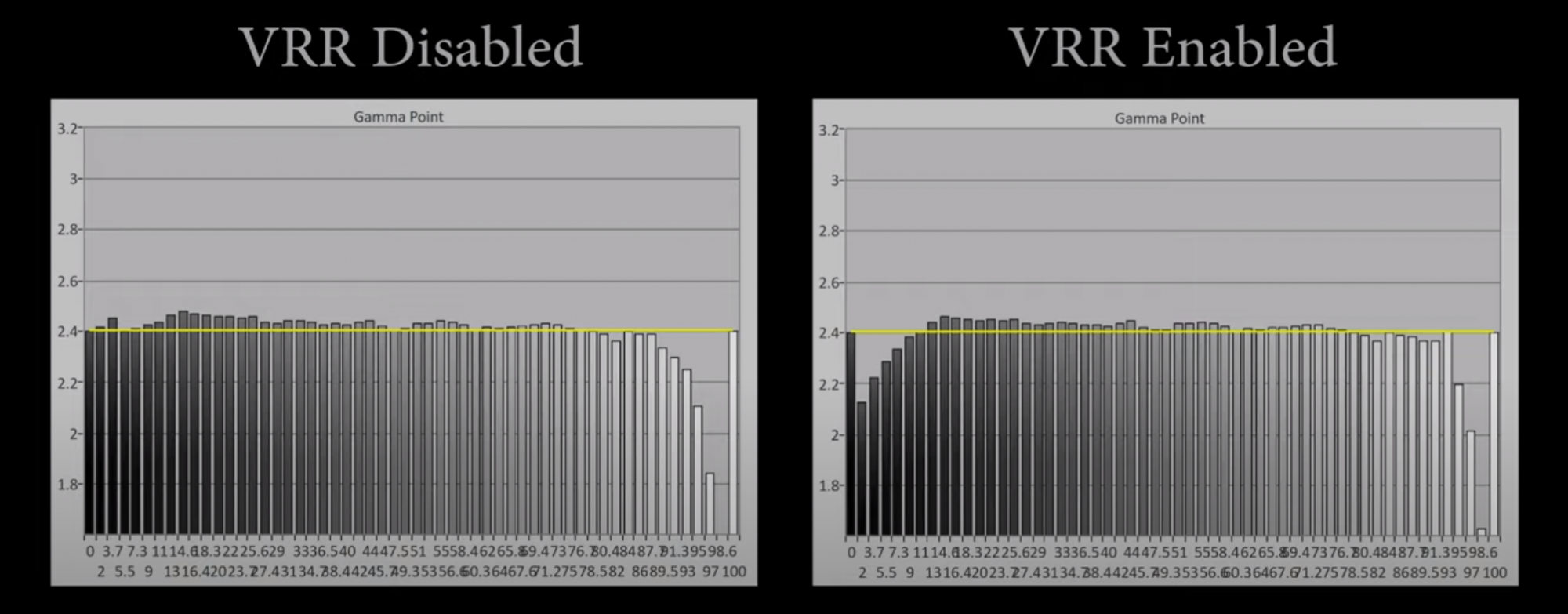

VRR Black Levels - still lose ultra blacks

===================================

Informative videos thanks.

Long wait for me but time flies

==========================

I'll be waiting for the November deals and the 3000 series GPUs, to see if they have HDMI 2.1 and 10bit 120Hz 4k 444, before I get a 55" C9 or 48" CX. But it's good to hear more questions and issues are getting raised and answered, and that at least some of them are more or less resolved. I'm looking forward to more feedback from owners in this and other threads.

I was thinking along similar lines. I REALLY want this now. But wouldn't the GX series be a better choice for gaming, considering it's a better size and takes up less space? It would be nice if all of these models could have those reverse cameras so it does not look like a screen is there.

I want g sync 120 fps at 4k. My problem with my Samsung JS 55/65" is that the input lag is not as good for games.

It would be great to have an option where you don't need to consider 55" all in one 4k vs 27" 1440/1080p 240 hz.

I found myself seriously considering a new Dell for fps and thought to myself, "I am not a pro gamer... Never will be. However... Do I really want this experience of kicking ass more? Answer was yes, but who wants to downgrade real estate size?"

☺

GX is the same screen for a lot more money, just a different form factor focused on mounting. They did add one of those new digital tuners, but you could easily get an outside device to offer that.

IMO the only reason to wait is not to get a GX, it's to get a CX at bigger discounts later on.

It seems to be a problem with the TV changing gamma when enabling VRR: https://www.notebookcheck.net/LG-s-...-worldwide-availability-in-June.466905.0.html

"Additionally, it looks like the current firmware has a few bugs such as VRR mode causing default gamma to be brighter above black "

And it's already been mentioned in a twitter post that it can be calibrated out. So either you'd have to fiddle with the TV settings to get the gamma correct again with VRR enabled or simply do a calibration to correct the gamma level. Either way it's not a big deal and can be fixed. Don't see why anyone would choose to turn Gsync off rather than just calibrating the TV. Yeah I get that LG should fix it themselves, not saying they shouldn't. But until they do, the USER can fix it themselves at least.

Hopefully you can edit the settings for VRR game mode and it will remember it without altering the gamma/settings on any other modes. That is, once you edit it, it will drop the edit when leaving VRR Game mode and pick it back up when you enter VRR Game mode again every time. Otherwise that is pretty annoying and not very useful to me... not acceptable as a fix.

Thank you so much for letting me know that! I personally mount the TV with a flexible mount that allows me to move it, so the stand was not important for me.

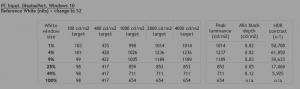

Very valid point, I did not even consider that! Does this mean we cannot take advantage of G-sync on our 2080Ti's as well? This makes me realize the upgrade path is real:

And to make sure that nvidia's 7nm 3000 series is both HDMI 2.1 and supporting 10bit 120Hz VRR HDR 4k 444 chroma since the CX can't send 12bit as it has 40Gbps instead of 48Gbps hdmi 2.1. Most are assuming both hdmi2.1 and 10bit but until we see it, it's an assumption. Better deals too though, yes.
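For anyone wondering why 10bit fits in 40Gbps but 12bit does not, here is a rough back-of-the-envelope sketch. It assumes the standard 4K timing of 4400 x 2250 total pixels per frame (active picture plus blanking), and it only counts the raw video payload. It ignores the link's encoding overhead, so treat the numbers as approximate rather than as what the TV or GPU actually negotiates. The function names are made up for illustration.

```python
# Assumption: 4K timing with blanking, 4400 x 2250 total pixels per frame.
H_TOTAL = 4400
V_TOTAL = 2250


def payload_gbps(refresh_hz, bits_per_channel, channels=3):
    """Raw video payload in Gbit/s for RGB / 4:4:4 (three full channels)."""
    pixel_clock = H_TOTAL * V_TOTAL * refresh_hz  # pixels per second
    return pixel_clock * bits_per_channel * channels / 1e9


def fits(link_gbps, refresh_hz, bits_per_channel):
    """True if the raw payload is within the nominal link rate.

    Encoding overhead is ignored, so this is an optimistic check.
    """
    return payload_gbps(refresh_hz, bits_per_channel) <= link_gbps


if __name__ == "__main__":
    for bits in (8, 10, 12):
        rate = payload_gbps(120, bits)
        print(
            f"{bits}-bit 4K 120 Hz 4:4:4: {rate:.2f} Gbit/s | "
            f"fits 40G: {fits(40, 120, bits)} | fits 48G: {fits(48, 120, bits)}"
        )
```

Under these assumptions 10bit lands under the 40Gbps figure quoted for the CX, while 12bit only fits in a full 48Gbps link.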

Can recommend these two videos on the GX:

That said, I have the 55" GX to be used as a PC monitor, and I can't really think of another reason to get it besides the design (which on the other hand is why I got it over the CX but got a quite good price on it as well).

I will check those out! How are you liking your 55 GX? Mind sharing your deal, here or privately? I was originally leaning GX due to how little space it takes. Yet I see it weighs a lot more, and my omnimount / ergotron may not be able to handle any more weight with the sonic carrier attached. I'd have considered the WX but 5k... Eh?

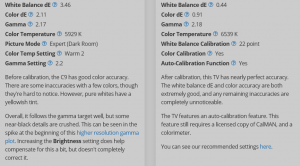

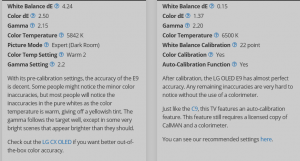

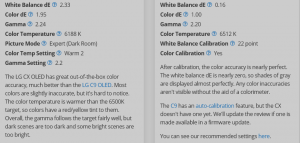

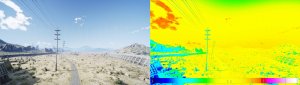

I noticed another thing.

2.4 gamma? Isn't this the wrong target for a PC monitor? IIRC 2.2 gamma is the correct target for monitors. That "bug" actually makes the near-black gamma closer to 2.2, which is supposed to be the correct gamma. So I want to see how the TV behaves when it's set to target 2.2 gamma off the bat instead of 2.4: will it lower the near-black gamma to somewhere around 2.0, or will it not affect it at all?

Also, based on the B7 OLED, you can change whatever settings you want in Game Mode and it won't affect other modes like Cinema. So yes, just correct your gamma in Game Mode with VRR on and it should leave everything else, like Cinema mode, alone. If you want separate gamma settings for Game Mode with VRR on and Game Mode with VRR off, you may be out of luck; I'll have to double-check whether you can save custom presets. But I don't see why you would need that when next-gen consoles will also support VRR anyway, so you can just have a single Game Mode with VRR always on and corrected gamma and call it a day.

And since this affects the C9 too, buying a C9 won't save you from this either. Just fix it yourself until LG fixes it officially.
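To put rough numbers on why a lower gamma looks brighter just above black, here is a small sketch. It assumes a pure power-law curve (relative luminance = signal ^ gamma), which is a simplification. Real displays and standards deviate from it near black, so this only illustrates the direction of the effect, not what the CX measures.

```python
def relative_luminance(signal, gamma):
    """Relative light output (0..1) for a normalized signal level (0..1).

    Assumption: a pure power-law response with no black-level offset.
    """
    return signal ** gamma


if __name__ == "__main__":
    for signal in (0.02, 0.05, 0.10, 0.50):
        l22 = relative_luminance(signal, 2.2)
        l24 = relative_luminance(signal, 2.4)
        print(
            f"signal {signal:.2f}: gamma 2.2 -> {l22:.6f}, "
            f"gamma 2.4 -> {l24:.6f}, ratio {l22 / l24:.2f}x"
        )
```

The ratio grows as the signal gets closer to black, which is why a gamma shift shows up mostly as raised shadows rather than as a change in bright areas.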

Seems that with HDR the recommendation has moved to a target of 2.4, particularly with OLED displays. 2.2 is still recommended with LCD displays.

Interesting article on the subject:

https://www.jigsaw24.com/articles/whats-the-best-setting-for-gamma-with-rec709-video

When it comes to games most still ignore your software ICC, so hardware is still the way to go.

Interesting information. Again I believe we can just correct the gamma through calibration so this still shouldn't be an issue. Doesn't the CX now support hardware calibration with calman? I have an X-Rite i1 Display pro so hopefully that'll work, if not I guess I can always resort to making an ICC profile in windows but that's not as optimal.

Yeah, brightness has always been a weakness of OLED. Personally I use my display in a really dark room, so I actually prefer the lower brightness of OLED when compared to my Acer X27, which is capable of 1000 nits highlights. In a bright room no doubt the Acer will have that extra HDR punch, but in a really dark room it's just too much for me and the OLED ends up being easier on my eyes. Ambient lighting conditions really play a key role here.

On the console front hopefully the ALLM mode automatically switching to game mode doesn't alter any other settings after you already set them up manually. The info I've seen seems to say it just automatically switches TVs (and in some cases, receivers too if the video is passed through one) to GAME mode when it detects the game console input as active and back out of game mode once the device is switched to a non console device on another input.

I have a HUGE criticism of the CX: The ABL is WAAAAYYYYYYY too aggressive.

I just played the last 2 chapters of FF7 Remake, and every time it went to a bright or all-white screen, the image darkened considerably compared to normal highlights. In a vacuum, I wouldn't care, but it's not; I have a Samsung Q90R that I've used throughout most of this game, and let me tell you, the brights/highlights are blindingly bright on that TV.

My understanding is that the Instant Game Response (ALLM) just turns it to low lag mode automatically on whatever input or picture preset you want rather than switching to the game mode preset. That's at least how it has been on my C9, e.g. I still have it on the ISF dark room preset on the input used by my PS4 Pro.

As for ABL, haven't noticed anything weird on C9 but personally I have not played FF7 Remake, just watched a lot when my girlfriend was playing it so I may have missed the scenes where ABL kicks in.

In most scenarios, this issue does not present itself, so most people will not care. I will maintain that content with super fast motion looks considerably better on OLED. But for heavy HDR content? I think I like the Q90R better, not because of the darks, but because of the brights. The Q90R just punches so hard when it comes to highlights and super bright screens.

Is that in "PC mode"? I recall there is a new setting this year that controls this, Peak Brightness or something similar, but I mostly use PC mode and it's not available there. I actually have a Samsung Q95T on order as well, and the only reason for that is brightness/ABL (I mainly use my 55" GX as a PC monitor and also for work, so white backgrounds are quite common). I don't care too much about burn-in though; if it happens it will happen, but by then the TV will have paid for itself anyway. The problem is that I also hope to use the same monitor for PC gaming, and that's where it gets more complicated.

According to RTings CX review, the CX has aggressive ABL like the C9, E9.

"The CX has decent HDR peak brightness, enough to bring out highlights in HDR. There's quite a bit of variation when displaying different content, and it gets the least bright with large areas, which is caused by the aggressive ABL. "

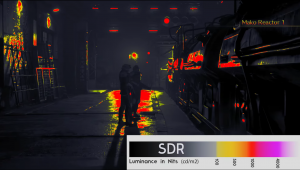

That is how it is with HDR.

With SDR, there is a Peak Brightness Setting. Since it limits the peak brightness it doesn't seem compatible with HDR.

From the Rtings C9 Review, regarding SDR settings concerning ABL:

"If ABL bothers you, setting the contrast to '80' and setting Peak Brightness to 'Off' essentially disables ABL, but the peak brightness is quite a bit lower (246-258 cd/m² in all scenes)."

One more reply since I've been doing a lot of digging at the moment....

Out of curiosity I looked at a few of the flagship LED/QLED FALD LCD tv reviews and they have horrible contrast, even for a FALD VA.

The samsung Q900R's native contrast is only 1630:1 , and only gets 6905:1 with FALD which is horrible. My 43" edge lit samsung and tcl VA screens at my computer get 4800:1 and 6150:1 native respectively. The Q900R is one of the few other TV's with HDMI 2.1.
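As a rough illustration of what those contrast figures mean in practice, the sketch below converts a contrast ratio into a black level for a given white level. The 100 nit white level is just an assumed example value, not something taken from the reviews, and the function name is made up for illustration.

```python
def black_level_nits(white_nits, contrast_ratio):
    """Black luminance implied by a contrast ratio at a given white level."""
    return white_nits / contrast_ratio


if __name__ == "__main__":
    WHITE_NITS = 100  # assumed example white level
    panels = {
        "Q900R native": 1630,
        "Q900R with FALD": 6905,
        "43in Samsung VA (native)": 4800,
        "43in TCL VA (native)": 6150,
    }
    for name, ratio in panels.items():
        black = black_level_nits(WHITE_NITS, ratio)
        print(f"{name}: {ratio}:1 -> black at about {black:.4f} nits")
```

The higher the ratio, the darker the black at the same white level, which is the whole argument for OLED, where the black level is effectively zero.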

The ABL hate and venomous rhetoric is pretty heavy in this video but he probably gets a lot of views from reactions:

--------------------------------

A test apparently designed to exacerbate the issue, in SDR if not using the settings mentioned in the previous reply:

He is talking about HDR content. If you view my reply just before yours I quoted the settings to avoid ABL in SDR mode for SDR content. You using your monitor in SDR mode could certainly avoid ABL using those settings.

Looks like the CX models are getting stellar reviews.

Since I'm recovering from back surgery and am doing most of my gaming through my Nvidia Shield, gamestreaming to my bedroom Sony 65XBR900E, I might as well wait until Black Friday for lower prices and see how all the issues pan out. I really hope the popularity of these TVs gets Nvidia to reimplement 10bit support in their consumer GPUs. They must already be aware of the demand. Has anyone posted this to their user forum?

Well, run over a CX with a steam roller, add a no-gap wall mount, and you have the GX. That's about it.

Hello, I have never seen a test that tells me:

- whether G-Sync works at 120 Hz, and what the input lag is in that mode.

- the impact of HDR on input lag.

- whether HDR and G-Sync can both be on together.

I have a 2080 Ti and I need a summary of what this TV is capable of for PC enthusiasts.

None of the coverage of the 55" CX shows what the TV can really do.

As a PC gamer I am dismayed that there are zero good 4K monitors. That is why all my hopes are on this TV.

Also, C9 vs CX: what are the big differences for a PC gamer?

IMHO as a PC gamer, the 48CX solely as a PC gaming monitor will shine best with a 3080Ti *IF* the 3080Ti has full HDMI 2.1 on it. Right now nobody knows if Nvidia will put HDMI 2.1 in their next gen GPUs. Nvidia refuses to comment one way or another. I would say wait and see what the 3080Ti brings to the table before buying the 48CX. Now that's just my own opinion. Everyone has to decide for themselves.

- gsync works at 120hz @ 1440p or less. input lag is roughly 6-10ms. LFC is supported (even though it's not advertised).

- hdr appears to have little to no impact on input lag as long as game mode is enabled.

- hdr is confirmed working for 1440p/120hz/gsync.

CX vs C9 are almost identical. The only major difference is that the CX supports 4k/120 (no gsync) and BFI 120hz. Outside of that, they're very close to the same.

No issues using my 55" CX as a PC monitor so far. Works great and looks great.

That's because of the "wide viewing angle filter".

It also looks pretty shitty in comparison with WRGB OLEDs at 17:35 :

This guy is a known idiot.

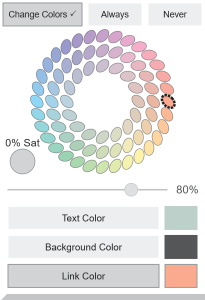

Right now on my big 43" VA screens I use a software ABL-like effect in my browsers, an addon called "Turn off the lights". ToTL is available on both Firefox and Chrome. It's a global setting with a slider rather than kicking in at a threshold like ABL, but sometimes a web page loads for a second before the "Turn off the lights" addon kicks in and cuts the brightness down to my default setting. I much prefer the dimmed ToTL when on white web pages. I also use the "Color Changer" addon for sites I frequent, which will change the background and other colors, using a color wheel if you want to micromanage it or set the defaults differently. Personally I will not be using my OLED for static app windows, but I thought I'd mention these addons, and ToTL might work well for running a youtube tab on the OLED besides. Otherwise I run the AwesomeTube app on Windows 10.

https://www.turnoffthelights.com/

I set turn off the lights' default setting to be a very modest amount of dimming at 20% rather than the dark default settings. It works on all web pages not just youtube, but on youtube and several other sites it preserves the video frame without dimming it. It has other youtube features like disabling auto play, filling the whole tab with the video frame automatically, volume with mouse/wheel movement, etc. While it can leave video frame alone on many sites, It does however dim images along with the rest of the web page, which is a good thing depending. You can always open the picture in a new tab and click the ToTL icon to toggle it off if you want to. You can also set links to be click through too so links still work. Another nice feature is that you can toggle a dimming slider to show on the web page if you want to adjust it on the fly. If you click the dimming slider, it toggles on/off to a dim square instead of the long slider so it stays out of the way. Or you can hide it completely in the settings if you prefer, which is the default setting.

I realize there are dark modes for these sites, plus I could use the color changer addon shown below, but I'm just using these two as examples of how TurnOffTheLights works:

https://addons.mozilla.org/en-US/firefox/addon/site-color-changer/

It's very easy to swap the colors around on the fly or turn the color changer off/on per site with this drop down settings window.

(example picture, not the colors/settings I use personally)

I can't decide between the C9 and CX. I'd love to use the OLED as an ultrawide, for example with a custom resolution of 3840x1600 to get a 21:9-ish experience with black bars on top and bottom. It would be awesome if you could confirm that 3840x1600 @ 120Hz works on your 55" CX.
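For reference, the geometry of the 3840x1600 idea can be sketched like this. It assumes the custom resolution is shown 1:1 and centered on the 3840x2160 panel with no scaling, which depends on the GPU and TV settings and is not confirmed anywhere in this thread. The function name is made up for illustration.

```python
def letterbox(panel_w, panel_h, res_w, res_h):
    """Geometry of a custom resolution centered 1:1 on a larger panel.

    Returns (aspect ratio, height of each black bar in pixels,
    fraction of the panel's pixels actually used).
    """
    aspect = res_w / res_h
    bar_px = (panel_h - res_h) // 2
    used_fraction = (res_w * res_h) / (panel_w * panel_h)
    return aspect, bar_px, used_fraction


if __name__ == "__main__":
    aspect, bar_px, used = letterbox(3840, 2160, 3840, 1600)
    print(f"aspect ratio: {aspect:.2f}:1")
    print(f"black bar height (top and bottom): {bar_px} px each")
    print(f"panel area used: {used:.1%}")
```

It also means the GPU renders only about three quarters of the pixels of full 4K, which should help frame rates at the same settings.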