LG 48CX

- Thread starter gan7114

Testing in game (Doom) - there's definitely an improvement in motion with BFI (use auto or high). It's most noticeable when strafing rather than aiming.

The problem is that it gets thrown off by low frame rates or input lag; I'm not sure which exactly. It worked well at 1080p 120fps regardless of vsync. But when I ran at 4K with lower frame rates, input lag increased (not sure if that's just due to the lower FPS itself) and the BFI got thrown off to the point where it actually made the motion worse. HDR itself didn't seem to affect it. Also, below 120Hz the flicker is terrible.

How are you testing this? Is there a way to enable BFI and GSYNC at the same time? It appears to be one or the other based on my testing.

No, G-Sync has to be off for BFI on LG OLEDs. To use BFI properly, as said above, the game's FPS should be identical to the refresh rate. That's why I prefer the RTSS FPS cap: you can set it to within 1/1000th of a frame per second to match the refresh rate after confirming it here:

https://testufo.com/refreshrate

It's also one of the big negatives of BFI: you need enough GPU headroom (usually wasted resources) that FPS never dips below 120, which at 4K is usually a tall order. In older games, GPU utilization might have stayed within ±10% and frame capping without VRR was easy; in a lot of today's games, GPU utilization can double or more depending on the scene, which makes VRR a necessity.
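To illustrate why the cap needs that 1/1000th precision, here is a simplified sketch, assuming the mismatch between a fixed FPS cap and the refresh rate simply accumulates until one whole frame is repeated or skipped. The 119.982 Hz value is a made-up example reading, not a measurement from this thread, and `hitch_interval_s` is a hypothetical helper.

```python
def hitch_interval_s(refresh_hz: float, fps_cap: float) -> float:
    """Seconds between periodic repeated/skipped frames for a fixed FPS cap.

    Simplified model: the cap and the refresh drift apart by
    |refresh_hz - fps_cap| frames per second, so one whole frame of slip
    accumulates every 1 / |refresh_hz - fps_cap| seconds.
    """
    drift = abs(refresh_hz - fps_cap)
    if drift == 0:
        return float("inf")  # exact match: no periodic slip in this model
    return 1.0 / drift


if __name__ == "__main__":
    measured_hz = 119.982  # hypothetical TestUFO reading
    for cap in (120.0, 119.98, 119.982):
        print(f"cap {cap} fps -> slip every {hitch_interval_s(measured_hz, cap):.1f} s")
```

Under this model, capping at a round 120 fps on a display that actually refreshes at 119.982 Hz would hitch roughly every 55 seconds, while matching to three decimals removes the periodic slip.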

delita

[H]ard|Gawd

- Joined

- Mar 10, 2014

- Messages

- 1,897

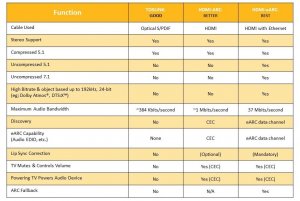

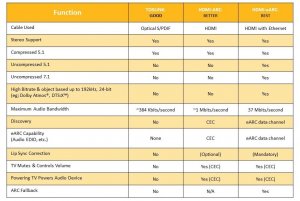

I have a 7.1 surround setup with small Klipsch surround speakers and a different subwoofer in my PC room. I also have some decent headphones (AKG 712s) I can plug into a receiver. I'm looking forward to "full" HDMI 2.1 with eARC support, which would let me avoid the "phantom monitor" trick for getting HDMI audio output from NVIDIA. I find that trick buggy with my window placement software: the phantom monitor disappears any time I turn off the receiver, which shuffles window positions, blinks the monitors, and screws up all my memorized window positions and position hotkeys. It also wastes an HDMI output on my GPU. The C9 has uncompressed audio pass-through via eARC and 48Gbps HDMI 2.1, which is more certain to get 4K 120Hz 4:4:4 at 10-bit to the native 10-bit panel from an HDMI 2.1 GPU, and at least its black levels all work in non-VRR HDR right now.

I'm dedicating the 48" CX (or a 55" C9 if these support issues don't pan out) to being a gaming and media stage, with other monitor(s) in the array for static desktop/app windows. The OLED will be playing games, movies, videos, and streams, and even high-resolution photo and art slideshows/screensavers and perhaps audio visualizations at times. Some of those sources have uncompressed audio: 7.1 DTS-HD, uncompressed PCM 7.1, ATMOS, etc.

Btw Tidal and Amazon also have ATMOS music libraries that they market as "growing". (cordcutters article about Tidal ATMOS music). I'm mainly going to be using uncompressed audio for movies and FLAC audio files, but the Tidal ATMOS music support is intriguing if the library grows a lot in the future.

I want the display to be able to show uncompressed native video, and to pass through uncompressed audio sources untouched.

That means 10-bit over NVIDIA HDMI 2.1 to the native 10-bit 4K 120Hz 4:4:4 panel, uncompressed. No DSC compression, no 8-bit dithering/banding, no being forced to lower chroma, lower Hz, or upscale a lower resolution. No cutting it down from 1:1 source material with available sources.

That also means uncompressed audio formats passed through untouched, uncompressed, uncut from the original source fidelity.

HDMI 2.1 inclusion was supposed to check all the boxes for eventual hdmi 2.1 gpus and other hdmi 2.1 sources. LG decided to take away or omit a bunch of those check-boxes on the CX, at least as it stands right now.

I'm definitely interested in Atmos making headway in the music world. I'm not a fan of Tidal, though. I really wish Spotify had pushed for uncompressed file formats and then eventually jumped on this Atmos thing too as it moves forward. Spotify is king of the hill and Tidal is just... very niche. I doubt Spotify will ever take FLAC or Atmos seriously unless someone else starts pulling subscriptions away from them, but for the AVERAGE Spotify user who doesn't care about this stuff (99% of them)... it'll never happen.

Bummer.

I still definitely prefer GSYNC to BFI, but that's good to know.

For GSYNC on this monitor, I've set the Max FPS to 119 in the Nvidia Control Panel. I don't notice any tearing when I do that. It's also far more forgiving for my 2070 Super (compared to your Titan RTX).

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

For best results, it's recommended to leave a headroom of at least a few FPS, so more like 115 fps.
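A rough way to see why a few FPS of headroom helps: frame limiters are not perfectly precise, so individual frames can arrive a little early. The sketch below, with hypothetical helper names, computes how much earlier than the capped frame time a frame can arrive before it would outrun a 120 Hz panel's maximum refresh (assumed here to be where VRR stops applying).

```python
def frame_time_ms(fps: float) -> float:
    """Time budget per frame in milliseconds."""
    return 1000.0 / fps


def vrr_headroom_ms(max_refresh_hz: float, fps_cap: float) -> float:
    """How much earlier than the capped frame time a frame may arrive
    before it would be faster than the panel's maximum refresh rate."""
    return frame_time_ms(fps_cap) - frame_time_ms(max_refresh_hz)


if __name__ == "__main__":
    for cap in (119, 117, 115):
        print(f"cap {cap} fps at 120 Hz: {vrr_headroom_ms(120, cap):.3f} ms of slack")
```

At 120 Hz, a 119 fps cap leaves only about 0.07 ms of slack per frame, whereas 115 fps leaves about 0.36 ms.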

As far as I know, Tidal's Atmos music isn't real Atmos in the sense of a 7.1 TrueHD mix with object metadata for height channels; it's some kind of surround processing effect for headphones/2-channel. That stuff is only playable on phones, and I haven't seen any plans whatsoever to bring playback to real surround AVRs or any other true multichannel playback device. Maybe they will someday, but I wouldn't count on it anytime soon...

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,527

Is HDMI audio really going to be THAT superior to just using USB or optical audio? Or is it just because your receiver lacks any input besides HDMI? None of the audiophiles I know have ever mentioned HDMI audio; they all use external USB DACs. This is my buddy's setup with Sennheiser HD 800S. I get all my audio advice from him, and he's never once told me that HDMI audio is superior.

N4CR

Supreme [H]ardness

- Joined

- Oct 17, 2011

- Messages

- 4,947

It seems to be for theatre types who basically can't run two cables.

Receivers and the like age poorly; you have to replace them each time the spec changes or one of the seven amps dies (lol). Analogue signals are timeless: I've had the same great hi-fi for 14 years now.

The main reason to use HDMI audio is supported formats. You simply cannot pass Atmos over USB: there is no such thing as a DAC that can decode Atmos, and there is no consumer software for it on PC either.

The benefit of this is somewhat limited if you don't have height speakers, however, as the AVR will just downmix and you end up with a mix that is usually not super different from the native 7.1 mix. Depends on the content, though.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,527

So it's purely for surround sound purposes then, I see. What's currently stopping Atmos from being passed over USB?

IdiotInCharge

NVIDIA SHILL

- Joined

- Jun 13, 2003

- Messages

- 14,675

Nothing really, just that such a device has yet to be brought to market.

Licensing. Dolby wants no part of anything that simplifies piracy, and the Atmos metadata and decoding algorithm are proprietary. It may be reverse engineered eventually like older Dolby formats, but it hasn't been yet.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,527

I see. Great info guys!

I went with the Acer CG437K instead of waiting for this one. I need a desktop monitor, and 48" is just going to be too big for a desk where I'll be sitting about 3 ft away. HDMI 2.1 also doesn't really mean anything for me at this point, as I won't pick up a PS5 until Final Fantasy VII part 2 comes out, and by then I'll be ready to replace my current 65" QLED with an 8K unit. Also, once you're used to getting over 1500 nits, anything under 1000 seems lacking.
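For a sense of scale at the 3 ft distance mentioned above, here is a quick sketch of the horizontal viewing angle a 16:9 screen fills. Flat-screen geometry and the 16:9 aspect ratio are the only assumptions; the function names are hypothetical.

```python
import math


def screen_width_in(diagonal_in: float, aspect_w: int = 16, aspect_h: int = 9) -> float:
    """Physical width of a flat screen from its diagonal and aspect ratio."""
    return diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)


def horizontal_fov_deg(diagonal_in: float, distance_in: float) -> float:
    """Horizontal angle the screen fills when viewed head-on from distance_in."""
    half_width = screen_width_in(diagonal_in) / 2
    return math.degrees(2 * math.atan(half_width / distance_in))


if __name__ == "__main__":
    distance = 36  # "about 3 ft away"
    for diagonal in (43, 48, 55):
        print(f'{diagonal}" at {distance}": {horizontal_fov_deg(diagonal, distance):.1f} degrees')
```

A 48" 16:9 panel at 36 inches fills roughly 60 degrees horizontally; where the comfortable limit sits is a personal call.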

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,314

Dolby Atmos MUSIC was not supposed to be my thesis on why I want eARC uncompressed audio pass-through; I mentioned it as an interesting aside. It's not just a remix of a commercially released track, though: it's supposed to be a remaster from the production studio using the individual master tracks from the separate multitrack recordings. The library of Atmos music available is pitifully small at the moment, though, afaik.

---------------------------------------------------------------------------------------

I posted this before, more than once, but I'll put it here again:

It doesn't just "save a cable". You aren't going to get uncompressed surround sound tracks without eARC. Toslink/optical is way too limited.

"optical connections tend to be used to transmit compressed Dolby Digital and DTS surround sound. "

"Like coaxial, one of the issues with optical is that it doesn’t have enough bandwidth for the lossless audio formats such as Dolby TrueHD or DTS-HD Master Audio soundtracks found on most Blu-rays. An optical connection also can’t support more than two channels of uncompressed PCM audio. "

eARC does save cables too, though. It saves an HDMI port if you were going to use one for the "phantom monitor trick" for audio anyway. ARC and eARC let you send HDMI audio back out from the TV in general, which means that if you want HDMI sound, you don't have to buy a super expensive receiver that passes the video signal through to the TV (which usually adds lag and complicates things anyway). You can plug your PS4/5, PC, etc. into the TV and run one HDMI audio cable out to the receiver.

-------------------------------------------------------------------------

Don't confuse musicians' stereo and analog stereo setups with PCM 7.1 uncompressed surround audio, DTS-HD Master Audio tracks in 7.1 to 9.1, and Atmos.

https://www.whathifi.com/us/advice/hdmi-arc-and-hdmi-earc-everything-you-need-to-know

"The main benefit of eARC is a big boost in bandwidth and speed. This allows you to send higher-quality audio from your TV to a soundbar or AV receiver.

There’s scope for eARC to deliver up to 32 channels of audio, including eight-channel, 24bit/192kHz uncompressed data streams at speeds of up to 38Mbps.

This means all those high bitrate formats currently available on Blu-ray discs, 4K Blu-rays and some streaming services – Dolby TrueHD, DTS-HD Master Audio and object-based formats such as Dolby Atmos and DTS:X – will all be compatible.

But whether manufacturers choose to support them all remains to be seen.

On paper, HDMI eARC should also make the handshake between compatible devices much smoother and negate the need to activate HDMI CEC (which doesn’t always work properly) - so operating multiple products shouldn’t require any extra steps to get things up and running."
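As a back-of-the-envelope check on the figures quoted above, uncompressed LPCM bitrate is just channels × bit depth × sample rate. The sketch below (hypothetical helper names) checks the 8-channel 24-bit/192kHz case against the quoted 38Mbps eARC figure and encodes the quoted two-channel PCM limit of optical. It ignores framing and packet overhead, so treat the results as lower bounds.

```python
# Limits taken from the quotes above; everything else is arithmetic.
EARC_QUOTED_MAX_MBPS = 38.0      # "speeds of up to 38Mbps"
OPTICAL_MAX_PCM_CHANNELS = 2     # "can't support more than two channels of uncompressed PCM"


def lpcm_mbps(channels: int, bit_depth: int, sample_rate_hz: int) -> float:
    """Raw LPCM payload in Mbit/s (no framing or packet overhead)."""
    return channels * bit_depth * sample_rate_hz / 1e6


def carriers(channels: int, bit_depth: int, sample_rate_hz: int) -> dict:
    """Which connection could carry this stream as uncompressed PCM, per the quotes."""
    rate = lpcm_mbps(channels, bit_depth, sample_rate_hz)
    return {
        "eARC": rate <= EARC_QUOTED_MAX_MBPS,
        "optical": channels <= OPTICAL_MAX_PCM_CHANNELS,
    }


if __name__ == "__main__":
    for fmt in [(2, 24, 48_000), (8, 24, 48_000), (8, 24, 192_000)]:
        print(fmt, f"{lpcm_mbps(*fmt):.3f} Mbit/s", carriers(*fmt))
```

Eight channels at 24-bit/192kHz come to about 36.9Mbps, just under the quoted 38Mbps, while anything beyond two PCM channels is out for optical regardless of bitrate.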

-----------------------------------------------------------------------------

"HDMI 2.1 hardware support Enhanced Audio Return Channel (eARC), which increases the digital bandwidth dramatically to support uncompressed and lossless high-resolution multichannel audio, including Dolby TrueHD and Atmos, and DTS-HD Master Audio and DTS: X. All AudioQuest HDMI cables “with Ethernet” have the conductors and bandwidth required for eARC’s enhanced sonic capabilities.

-------------------------------------------------------------------------------

------------------------------------------------

https://arstechnica.com/gaming/2020/03/xbox-series-x-loses-xbox-ones-s-pdif-optical-audio-output/

https://www.gamesradar.com/ps5-audio-tempest/

---------------------------------------

The question is, why are they omitting support for all of these things and releasing them with black level bugs and less bandwidth besides? Especially when most of these features are in the C9, though apparently the C9 has also had the VRR black level bug since the VRR patch was released last October.

I was using the in-game vsync settings.

Were you monitoring your frame rates? Your frame rate has to precisely match your refresh rate to get proper motion on strobing/scanning displays.

Also try using "scanline-sync" from RTSS. It achieves v-sync without the input lag.

And have you tried any refresh rates between 60hz and 120hz with BFI? I'm wondering if all refresh rates are compatible, or just a select few.

I'll have to get RTSS. The input lag came more from running at 4K than anything else. I can try 100Hz.

Also, Club3D's DP 1.4/HDMI 2.1 adapter is set to release in June and will give you access to 4K/120Hz on the CX, but they stated that this first iteration will not support VRR.

That's a shame. I'd be willing to pay $100+ just to see my CX 55" @ 4k/120hz GSYNC at full Chroma Subsampling. Without GSYNC support, though, I can't justify a purchase.

I tried the CG437K, but even at its current $1200 price it's just not worth it. $300 more for this OLED (plus whatever it costs to accommodate it desk/distance-wise) is a bargain for the massive jump in PQ.

TBH the brightness means nothing when the monitor only has 16 zones. I played FF7R on it, swapping between it and my X27 to compare. For the entire game, literally half the screen was blasting 600+ nits because of HUD elements alone. It's super washed out in HDR compared to an actually capable HDR display (X27).

Not only that, but it's too slow for 60Hz, let alone 120Hz.

My suggestion to anyone considering both the CX and the CG437K: pay the minuscule price difference (the Acer was $1500 new just a few months ago!) and do whatever it takes to make the OLED work for you instead of buying that terrible monitor.

I paid under $1K for the Acer, and I'm not going to change my room layout just for a monitor (if I were willing to use a 48"/49", I would have gotten a Q70R or a 900F by now). I already tried a bunch of 4K Blu-rays on it, and the HDR is beautiful (not as good/bright as my Q9, but good enough for computer work) and not washed out. I have a PS4 Pro coming and I'll see how it goes. But what I meant was that I'm not doing console gaming on this. I do my console gaming and movie watching on the Q9 and will upgrade to a Q900T or whatever the current model is when FFVII R part 2 comes out. For a large-screen TV, I'll take the best QLED over OLED any day.

Pretty close to my experience as well. I'm exclusively using a CX 55" as a computer monitor, but I also have a 65" Samsung Q90R for my living room and consoles. The CX is magnificent as a computer monitor. However, the Q90R is big, bright, and beautiful. I also think Samsung does a better job on low-bitrate content (especially sub-4K formats on YouTube). That's not to say the CX does badly, but I can see a lot of macroblocking near black on the CX that just isn't there on the Q90R.

For console gaming, movies, and TV, the Q90R is amazing. I'm looking forward to seeing the Xbox Series X and PS5 in all their glory on the Q90R.

Also, in case anyone is wondering, FFVII Remake looks spectacular in HDR on the Q90R... and that's only on a PS4 slim. I can't imagine how good it would look on a PS4 Pro.

Yea, FFVII R looks great on the Q9 even just using a slim. Will have to run it again on the PS4 pro when it gets here on Weds. How far do you sit from the 55" when you're using your PC?

4 or 5 feet. I had a 27" Dell monitor before this (1440P, 144hz, gsync). In order to accommodate the 4x increase in size, I bought a floor stand VESA mount and moved my desk away from the wall.

Link to floor stand: https://www.amazon.com/gp/product/B01M3XIJRG/ref=ppx_yo_dt_b_asin_title_o03_s00?ie=UTF8&psc=1

As an unintended bonus, the stand has a glass base, so I was able to move my PC out from under my desk and just sit it on top of the base.

Honestly, the entire setup is pretty ghetto, but it works well. Lord knows the picture quality upgrade from a 27" TN to a 55" OLED TV is insane.
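To put the "4x increase in size" into numbers, here's a small sketch comparing screen area and pixel density for the two monitors described (27" 1440p vs. 55" 4K, both assumed to be 16:9). The helper names are hypothetical.

```python
import math


def area_sq_in(diagonal_in: float, aspect_w: int = 16, aspect_h: int = 9) -> float:
    """Screen area from the diagonal, assuming the given aspect ratio."""
    unit = diagonal_in / math.hypot(aspect_w, aspect_h)
    return (aspect_w * unit) * (aspect_h * unit)


def ppi(h_px: int, v_px: int, diagonal_in: float) -> float:
    """Pixels per inch along the diagonal."""
    return math.hypot(h_px, v_px) / diagonal_in


if __name__ == "__main__":
    print(f"area ratio 55in / 27in: {area_sq_in(55) / area_sq_in(27):.2f}")
    print(f'27" 1440p: {ppi(2560, 1440, 27):.1f} PPI')
    print(f'55" 4K:    {ppi(3840, 2160, 55):.1f} PPI')
```

The area ratio works out to (55/27)² ≈ 4.15, so "4x" is about right, while pixel density drops from roughly 109 PPI to roughly 80 PPI, one reason a longer viewing distance helps.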

Cool setup. Currently, my JU7500 (which the CG437K will replace) sits about 20" away from the edge of my 36"-deep desk, since I have a speaker stand behind it holding my center channel speaker above the top of the TV. If I used your stand, I could hang the TV a bit higher, all the way back, and put the center channel on the desk, which means I could probably go to a larger screen size like a 48"/49". But nothing in that range supports VRR at 120Hz, and I really don't want to take everything out of my office desk so I can move it and get your stand in there. Guess I'll stick with the Acer for now.

I personally would not even consider the Acer unless it was $650. Motion clarity is so poor that it's unusable as a gaming display.

People have different standards, and for me an edge-lit BGR monitor that has 16 huge zones, behaves as if globally lit in 98% of HDR content, and has the pixel response of VAs from 2014 is just unacceptable. There's literally no scenario outside of loading screens where its local dimming does anything.

I'm not trying to rain on your parade so don't take it the wrong way but it is objectively terrible in HDR compared to even a $499 TCL R625.

Well, guess we'll have to agree to disagree. Maybe you're more susceptible, or the unit you got is somehow defective; I don't see it mentioned as a problem in any of the reviews online. As I said, I want a PC HDR monitor that I'll do some gaming or video watching on from time to time, I'm limited in size, and there's nothing else available except the ASUS PG43UQ, which uses the same panel. I also won't be doing console gaming on this. The most action-oriented PC game I play is Armored Warfare, which I was able to run at 4K, max detail, at about 90fps on a 2080 with no problem at all, so that's good enough for me. I also don't see the price as a problem, as I paid more than that for the 40JU7500 I'm using now, and I have my 65" Q9 for watching movies and console gaming.

Help a fren out here guys:

I'm about to pull the trigger on the Alienware AW5520QF; they're $3K new on eBay (US shipping) (inb4 no HDMI 2.1, you have to use it in a dark room, and you need a giant desk).

Why is this LG half the price? What's the $1500 difference here??

XoR_

[H]ard|Gawd

- Joined

- Jan 18, 2016

- Messages

- 1,566

Computer monitors are overpriced.

You will lose HDR with the Alienware, but you'll probably gain lower input lag, and DP is better suited for connecting a PC, at least for now.

elvn

Supreme [H]ardness

- Joined

- May 5, 2006

- Messages

- 5,314

XoR_ is right. Computer gaming monitors are massively overpriced, at this point mainly just for the inclusion of a DisplayPort (which is too narrow in bandwidth for native 4K 120Hz).

To answer your question about what the difference is:

Quality HDR (see the Cnet review excerpts below)

HDMI 2.1 bandwidth. The Alienware doesn't have HDMI 2.1 or DP 2.0. It has DP 1.4, so it has to run at 98Hz or drop below 4:4:4 chroma, etc. That is, it can't run a native 4K 10-bit 120Hz 4:4:4 (40Gbps) source without compressing it somehow or lowering the Hz. The HDMI 2.1 ports on the LG OLEDs provide future-proofing for the 7nm GPUs NVIDIA will release later this year, which everyone is assuming will have HDMI 2.1 output(s). AMD will likely follow suit, and both of the new consoles should have HDMI 2.1 and VRR.
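To show where the 98Hz ceiling comes from, here's a rough sketch that computes the data rate of the visible pixels alone and compares it to nominal link payloads after line coding. The link figures are textbook nominal values (DP 1.4 HBR3 with 8b/10b, HDMI 2.1 FRL with 16b/18b, FEC ignored), blanking is deliberately left out, and all names are hypothetical.

```python
"""Rough uncompressed video bandwidth check (active pixels only)."""

# DisplayPort 1.4 HBR3: 4 lanes x 8.1 Gbit/s with 8b/10b coding.
DP14_HBR3_PAYLOAD_GBPS = 4 * 8.1 * 8 / 10       # ~25.92
# HDMI 2.1 FRL: 4 lanes x 10 or 12 Gbit/s with 16b/18b coding (FEC ignored).
HDMI21_40G_PAYLOAD_GBPS = 4 * 10 * 16 / 18      # ~35.56
HDMI21_48G_PAYLOAD_GBPS = 4 * 12 * 16 / 18      # ~42.67

LINKS = {
    "DP 1.4": DP14_HBR3_PAYLOAD_GBPS,
    "HDMI 2.1 40G": HDMI21_40G_PAYLOAD_GBPS,
    "HDMI 2.1 48G": HDMI21_48G_PAYLOAD_GBPS,
}


def active_video_gbps(width, height, refresh_hz, bits_per_channel, channels=3):
    """Data rate of the visible pixels for RGB / 4:4:4, in Gbit/s.

    Blanking intervals add more on top, so this is a lower bound on what
    the link must carry.
    """
    return width * height * refresh_hz * bits_per_channel * channels / 1e9


MODES = {
    "4K 120Hz 10-bit 4:4:4": (3840, 2160, 120, 10),
    "4K 98Hz 10-bit 4:4:4": (3840, 2160, 98, 10),
    "4K 120Hz 8-bit 4:4:4": (3840, 2160, 120, 8),
}

if __name__ == "__main__":
    for mode, (w, h, hz, bpc) in MODES.items():
        need = active_video_gbps(w, h, hz, bpc)
        fits = [name for name, cap in LINKS.items() if need <= cap]
        print(f"{mode}: {need:.2f} Gbit/s active; fits (before blanking): {', '.join(fits)}")
```

By this lower bound, 4K 120Hz 10-bit 4:4:4 needs about 29.9 Gbit/s for the visible pixels alone, already more than DP 1.4's roughly 25.9 Gbit/s payload, while 10-bit at 98Hz (about 24.4) or 8-bit at 120Hz (about 23.9) squeeze under it. Blanking and link overhead push the real requirement higher still, which is why the link-rate figure quoted above is larger.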

-------------------------------------------------------------------------

CNET https://www.cnet.com/reviews/alienware-aw5520qf-monitor-review/

The Good - Games look spectacular and play that way at 120Hz over DisplayPort.

The Bad - It's way too expensive given the tradeoffs, which include no future-proofing with HDMI 2.1 and relatively low brightness.

The Bottom Line - The Alienware 55 OLED Gaming Monitor is a great gaming monitor, but the price premium over a standard OLED TV is hard to get past.

"the monitor has a wide color gamut -- 94% UHDA-P3 in my testing -- but you don't really get HDR. The monitor has such relatively low brightness, that HDR barely looks better. It's rated for a maximum of 400 nits, and that's for a window of about 3% of the screen, where normally the specification is a 10% window. Typically, as the window grows, the brightness drops, and in my testing the peak was closer to 300 nits for a 2% window, and typically about 260 nits for a 10% window. It gets as low as 110 nits at full screen. "

"It just feels like the Alienware 55 has shipped either too late or too early: A year ago, it would have seemed more amazing for the money, and a year from now it would probably have offered more for the money, like a better OLED panel and HDMI 2.1. "

-------------------------------------------------------------------------

Help a fren out here guys:

I'm about to pull the trigger on the Alienware AW5520QF; they're $3K new on eBay (US shipping). (Inb4: no HDMI 2.1, you have to use it in a dark room, and you need a giant desk.)

Why is this LG half the price? What's the $1,500 difference here??

Half the price because they sell way more of them. Seriously, at this point, there is no reason to go with the Alienware display over the LG OLED TVs.

Curious: for those of you who have tried improving text quality on the OLED by changing ClearType settings, have you also tried changing the system font (Segoe UI) in Windows 10? If I remember correctly, that font was designed for ClearType, so it would seem natural that other fonts like Sans Serif and Tahoma would look better without ClearType.

Changing fonts in Windows is not trivial these days, but here is how it's done:

https://www.faqforge.com/windows/windows-10/how-to-change-the-default-font-in-windows-10/

(For obvious reasons, this only applies to Windows and other programs that let you specify fonts.)
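For reference, the registry approach usually described for this boils down to blanking the Segoe UI entries under the `Fonts` key and mapping `Segoe UI` to another family under `FontSubstitutes`. The Python sketch below only generates the text of such a `.reg` file so you can inspect it before merging anything. The exact list of Segoe UI value names, the helper name `build_font_swap_reg`, and Tahoma as the replacement are assumptions, not taken from the linked guide, so compare against the guide rather than trusting this verbatim.

```python
# Hypothetical helper: builds the text of a .reg file that swaps the Windows 10
# UI font. It only produces text; nothing is written to the registry here.
# Assumption: the Segoe UI value names below match your system's Fonts key.

SEGOE_UI_ENTRIES = [
    "Segoe UI (TrueType)",
    "Segoe UI Bold (TrueType)",
    "Segoe UI Bold Italic (TrueType)",
    "Segoe UI Italic (TrueType)",
    "Segoe UI Light (TrueType)",
    "Segoe UI Semibold (TrueType)",
    "Segoe UI Symbol (TrueType)",
]

NT_KEY = r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion"

def build_font_swap_reg(new_font: str) -> str:
    lines = ["Windows Registry Editor Version 5.00", ""]
    # Blank the Segoe UI font-file mappings so Windows stops loading them by name
    lines.append(f"[{NT_KEY}\\Fonts]")
    lines += [f'"{name}"=""' for name in SEGOE_UI_ENTRIES]
    lines.append("")
    # Redirect requests for "Segoe UI" to the replacement family
    lines.append(f"[{NT_KEY}\\FontSubstitutes]")
    lines.append(f'"Segoe UI"="{new_font}"')
    return "\r\n".join(lines) + "\r\n"

print(build_font_swap_reg("Tahoma"))
```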

antonlissone (Weaksauce; joined May 15, 2020; 85 messages)

Interestingly, I don't see any problems with fonts on my CX...

I went with the Acer CG437K instead of waiting for this one. I need a desktop monitor, and 48" is just going to be too big for a desk where I'll be sitting about 3 ft away. HDMI 2.1 also doesn't really mean anything for me at this point, as I won't pick up a PS5 until Final Fantasy VII part 2 is out, and by then I'll be ready to replace my current 65" QLED with an 8K unit. Also, once you're used to getting over 1,500 nits, anything under 1,000 will seem lacking.
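To put "too big at about 3 ft" into numbers, here is a small Python sketch of the horizontal field of view and average pixels per degree for a flat 16:9 4K screen. It assumes your eyes are centered on the screen and uses nominal diagonals (43" for the Acer, 48" for the LG); the function name is made up.

```python
import math

def view_geometry(diagonal_in, distance_in, h_pixels=3840, aspect=(16, 9)):
    """Horizontal field of view (degrees) and average pixels per degree
    for a flat screen viewed head-on from its center."""
    w, h = aspect
    width_in = diagonal_in * w / math.hypot(w, h)   # physical screen width
    fov_deg = math.degrees(2 * math.atan(width_in / 2 / distance_in))
    return fov_deg, h_pixels / fov_deg

for diag in (43, 48):
    fov, ppd = view_geometry(diag, 36)
    print(f'{diag}" at 36": {fov:.1f} deg wide, ~{ppd:.0f} pixels/degree')
```

Under those assumptions, the 48" spans roughly 60 degrees horizontally at 3 ft, versus about 55 degrees for the 43".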

I hope you like it more than I did. I returned mine within a day because of the text rendering problems caused by the VA panel + BGR subpixel layout. As a mostly-gaming monitor, though, it is probably nice. The only thing that matters, of course, is whether you like it.
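Why BGR hurts text: ClearType-style subpixel rendering assumes a particular subpixel order (RGB by default) and treats each pixel's three subpixels as three horizontal samples. The toy Python model below (no filtering or gamma, unlike real ClearType; function names are made up) renders a thin vertical stem for an RGB panel, then shows where the light physically lands if the panel is actually BGR.

```python
def render_for_rgb(coverage):
    """Pack 3x-horizontal-resolution coverage into (R, G, B) pixel tuples,
    assuming the renderer believes the panel order is R, G, B left to right."""
    return [tuple(coverage[i:i + 3]) for i in range(0, len(coverage), 3)]

def physical_light(pixels, panel_order):
    """Subpixel intensities as they appear left to right on a given panel."""
    index = {"R": 0, "G": 1, "B": 2}
    out = []
    for px in pixels:
        out += [px[index[c]] for c in panel_order]
    return out

# A thin vertical stem covering subpixel positions 4-6 across three pixels
stem = [0, 0, 0, 0, 1, 1, 1, 0, 0]
pixels = render_for_rgb(stem)
print(physical_light(pixels, "RGB"))  # -> [0, 0, 0, 0, 1, 1, 1, 0, 0]
print(physical_light(pixels, "BGR"))  # -> [0, 0, 0, 1, 1, 0, 0, 0, 1]
```

On the RGB panel the stem lands where it was drawn; on the BGR panel it gets split and shifted, which shows up as fuzzy, color-fringed text.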

Lateralus (More [H]uman than Human; joined Aug 7, 2004; 18,505 messages)

Interestingly, I don't see any problems with fonts on my CX...

I don't on my B7, either. I know someone posted something above about LG changing the pixel structure but the text on mine has always been perfectly acceptable. And I have owned many, many monitors of all types.

Regarding the lack of brightness compared to QLED sets, I do not run my set at anywhere close to full brightness outside of HDR scenarios, so that's a non-issue for me.

Pretty close to my experience as well. I'm exclusively using a CX 55" as a computer monitor, but I also have a 65" Samsung Q90R for my living room and consoles. The CX is magnificent as a computer monitor. However, the Q90R is big, bright, and beautiful. I also think that Samsung does a better job with low-bitrate content (especially sub-4K formats on YouTube). That's not to say the CX does badly, but I can see a lot of macroblocking near black on the CX that just isn't there on the Q90R.

For console gaming, movies, and TV, the Q90R is amazing. I'm looking forward to seeing the Xbox Series X and PS5 in all their glory on the Q90R.

Also, in case anyone is wondering, FFVII Remake looks spectacular in HDR on the Q90R... and that's only on a PS4 slim. I can't imagine how good it would look on a PS4 Pro.

Would you say that text rendering is better on the Q90R than on the CX when used as a normal PC monitor (i.e. not for gaming)? Being an LCD, it should probably be more suitable as a "text monitor" for Windows, even though VA panels have traditionally not been that great for this compared with IPS. To be honest, OLEDs have not been that great for this either.

I don't on my B7, either. I know someone posted something above about LG changing the pixel structure but the text on mine has always been perfectly acceptable. And I have owned many, many monitors of all types.

Regarding the lack of brightness compared to QLED sets, I do not run my set at anywhere close to full brightness outside of HDR scenarios, so that's a non-issue for me.

Actually, when comparing my 55" C7 with my 55" GX, I think the former looks better (for PC text, that is), which was kind of a bummer as the GX was supposed to replace it. In general, enabling scaling seems to improve text quality but of course decreases usable desktop space (assuming your eyesight is good enough to use a 4K monitor without scaling, that is). I have way too many windows open at the same time for that trade-off.
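The scaling trade-off in numbers: Windows display scaling divides the effective (logical) desktop size by the scale factor, so on a 3840×2160 panel the usable workspace shrinks quickly. A quick Python sketch, rounded to whole pixels; the function name is made up.

```python
def effective_desktop(width, height, scale_percent):
    """Logical desktop size after Windows display scaling."""
    factor = scale_percent / 100
    return round(width / factor), round(height / factor)

for pct in (100, 125, 150, 175, 200):
    w, h = effective_desktop(3840, 2160, pct)
    print(f"{pct:>3}% scaling -> behaves like {w}x{h}")
```

At 150% a 4K panel gives you roughly the workspace of a 2560×1440 screen, and at 200% that of a 1080p one.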

Interestingly, I don't see any problems with fonts on my CX...

Honestly, I think this comes down to eyesight combined with how sensitive you are (even if all settings are optimal). I had the 49" CRG90 for a while, and many people said it looked great even for text, but I could clearly see that it didn't (on the same actual screen, that is). Comparing it with a 27" 4K Acer X27 probably does not help either; I run that at 100% scaling, and text and the like just look amazing.
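Part of that gap is plain pixel density: the same 3840×2160 grid is spread over very different sizes. Pixels per inch is the diagonal pixel count divided by the diagonal size; here is a Python sketch using nominal sizes (27" for the X27, 48" and 55" for the LG sets). The function name is made up.

```python
import math

def ppi(h_pixels, v_pixels, diagonal_in):
    """Pixels per inch from resolution and diagonal size."""
    return math.hypot(h_pixels, v_pixels) / diagonal_in

for diag in (27, 48, 55):
    print(f'{diag}" 4K: {ppi(3840, 2160, diag):.0f} PPI')
```

That works out to roughly 163 PPI on the 27" versus about 92 and 80 PPI on the 48" and 55", so each pixel (and each subpixel fringe) on the big OLEDs is about twice as large.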

(Sorry if that sounded a bit patronizing, not my intention)

Honestly, I can't tell a difference. I've never really sat close enough to the TV to notice fine details in text rendering. If I had to guess, I'd say they're pretty much identical: Q90R = RGB, CX = WRGB. Because they both have all three color subpixels in each pixel, it should be basically identical.

I would tilt towards the CX being ever so slightly better, simply because each pixel is self-lit, and because if the text is "white" on the CX, only the white subpixel is lit, while on the Q90R, white = RGB subpixels.
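The "only the white subpixel" reasoning can be illustrated with the textbook RGB-to-RGBW split, where the shared gray component min(R, G, B) goes to W and the remainder stays on R, G and B. This is a deliberate simplification: LG's actual WRGB processing is proprietary and is not modeled here, and the function name is made up.

```python
def naive_rgb_to_wrgb(r, g, b):
    """Textbook RGB -> (W, R, G, B) split: the common gray part drives W."""
    w = min(r, g, b)
    return w, r - w, g - w, b - w

print(naive_rgb_to_wrgb(1.0, 1.0, 1.0))  # -> (1.0, 0.0, 0.0, 0.0)
print(naive_rgb_to_wrgb(1.0, 0.5, 0.0))  # -> (0.0, 1.0, 0.5, 0.0)
```

Under this model, pure white text lights only W, while any colored pixel (including ClearType's colored edge pixels) still needs the R, G and B subpixels.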

Thanks. The thing is that RGB should, at least in theory, work better with Windows and ClearType. At least that's my impression, but as seen in this thread there is some confusion surrounding this. I have also seen that, due to the new wide-angle filter, the pixels are blurrier on the new Samsungs. This video shows it in more detail (at the end, but the whole video is really interesting, so I think it's worth watching all of it).

Yeah, I remember seeing this video about a Q900 vs C9. Honestly, I think they were more excited about the OLED TV being so thin than about the actual picture quality.

Indeed, but I kind of liked their excitement.

But to summarize, you feel that as a PC monitor, the Q90R (and probably the Q90T) isn't really better suited than an OLED despite being an LCD (aka "QLED"), with the possible exception of burn-in.

MistaSparkul (2[H]4U; joined Jul 5, 2012; 3,527 messages)

Help a fren out here guys:

I'm about to pull the trigger on the Alienware AW5520QF; they're $3K new on eBay (US shipping). (Inb4: no HDMI 2.1, you have to use it in a dark room, and you need a giant desk.)

Why is this LG half the price? What's the $1,500 difference here??

If you get that Alienware, the only thing you will be enjoying is 4K 120Hz at 4:4:4 chroma for a few months, and that's it. Once new GPUs come out with HDMI 2.1, the LG CX will have the same capability, but until then it's limited to 4:2:0 chroma at 4K 120Hz with current cards. I would say the Alienware would've been worth it when it came out last year, but at this point we are so close to new GPUs. Do you really want to drop $3K now just to enjoy 4:4:4 chroma for a few months?
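The reason current cards top out at 4:2:0 for 4K 120Hz on the CX is the HDMI 2.0 link: 18 Gbit/s TMDS with 8b/10b coding leaves about 14.4 Gbit/s for video. A rough Python sketch of the highest 4K refresh rate that fits at 8 bits per component, ignoring blanking (so real limits come out lower); the names are made up.

```python
HDMI20_PAYLOAD_GBPS = 18.0 * 8 / 10   # 3 TMDS channels x 6 Gbit/s, 8b/10b -> 14.4

SAMPLES_PER_PIXEL = {"4:4:4": 3.0, "4:2:0": 1.5}

def max_refresh_hz(width, height, bits_per_component, chroma, payload_gbps):
    """Highest refresh rate whose active-pixel data fits the link (no blanking)."""
    bits_per_frame = width * height * bits_per_component * SAMPLES_PER_PIXEL[chroma]
    return payload_gbps * 1e9 / bits_per_frame

for chroma in ("4:4:4", "4:2:0"):
    hz = max_refresh_hz(3840, 2160, 8, chroma, HDMI20_PAYLOAD_GBPS)
    print(f"4K 8-bit {chroma} over HDMI 2.0: at most ~{hz:.0f} Hz")
```

Even before blanking, 4:4:4 tops out around 72Hz, while 4:2:0 halves the data per pixel and leaves room for 120Hz.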
