N4CR

Supreme [H]ardness

- Joined: Oct 17, 2011

- Messages: 4,947

I was working from quotes saying that was how they got the white array as a base for WRGB color filtration. Many sites detail the yellow and blue emitters. To be clear, they are using a yellow emitter, and a blue emitter. They aren't converting yellow to blue.

-------------------------------------------------------------------

https://www.cnet.com/news/what-is-oled-and-what-can-it-do-for-your-tv/ November 2019

Yellow plus blue makes green (and red and cyan and magenta)

Currently, all OLED TVs are made by LG, and how they've made them is rather unusual. All TVs, to create the images you watch, use red, green, and blue mixed together to create all the colors of the rainbow (well, not quite all colors, but most). To create the colored light, LCDs use RGB color filters, while plasmas used RGB phosphors and Samsung's short-lived OLED TV (and all their OLED-screened phones) use red, green and blue OLED elements.

LG's OLEDs only use two colors: a sandwich of blue and yellow OLED materials. Then, using color filters, the yellow and blue light is filtered to create red, green and blue. To add a bit more brightness, there's also a clear "white" element, too. It's a lot easier if I show you:

The steps to create an image with LG's OLED.


A yellow OLED material creates yellow (i.e. red and green) light. When combined with blue (1), this creates "white" light (2). Using color filters (3) the desired sub-pixel color (including clear/white) is created (4).

Geoffrey Morrison/CNET

Though this seems odd and convoluted, it obviously works since LG is the only company that has successfully marketed large-screen OLED TVs in any numbers. This is because it's more cost-effective to make ("more" being the key word there).

The apparent downsides, such as light output and color accuracy, don't seem to be issues. Sure, they're not as bright as the brightest LCDs, but they are still very bright, and the current models have the same color saturation as the best LCDs.

-----------------------------------------------------------------

https://www.oled-info.com/reports-say-lgd-aims-change-its-woled-tv-structure-yb-rgb Dated article, but it details the tech.

Reports from China suggest that LG Display is considering changing the basic structure of its white OLED panels (WOLED) used in LGD's OLED TVs. LGD is currently using yellow and blue OLED materials to create a white OLED, but now LGD may switch to an RGB based mix.

It's not clear from the Chinese reports (which are not yet verified, of course) - but it's likely that LGD will not switch to a direct-emission RGB structure, but rather use the RGB materials to create a white OLED and remain with a color-filter based design. Switching from Y/B to R/G/B may enable LGD to achieve higher color purity - and so a larger color gamut - and may also be more efficient.

LGD's WRGB architecture - which creates 4 sub pixels using color filters (red, green, blue and non-filtered) to create a colored image from a single white OLED pixel - is less efficient and less color-pure compared to a real RGB sub-pixel architecture, but WOLED displays are much easier to produce as there's less need for subpixel patterning.
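The efficiency point can be illustrated with a toy model in Python. It assumes the white emitter's light splits evenly into three coarse bands and that each colour filter is an ideal band-pass. Real filters leak and the yellow+blue spectrum is not flat, so the numbers are only indicative.

```python
# Toy model of the WRGB colour-filter step: one white OLED emitter per
# subpixel with a filter (or no filter) on top. The three-band spectrum and
# the ideal 0/1 filters are illustrative assumptions, not LG Display data.

# Hypothetical white emission split into three coarse bands (arbitrary units).
WHITE_SPECTRUM = {"blue": 1.0, "green": 1.0, "red": 1.0}

# Ideal filters: each coloured filter passes only its own band; W is unfiltered.
FILTERS = {
    "W": {"blue": 1.0, "green": 1.0, "red": 1.0},
    "R": {"blue": 0.0, "green": 0.0, "red": 1.0},
    "G": {"blue": 0.0, "green": 1.0, "red": 0.0},
    "B": {"blue": 1.0, "green": 0.0, "red": 0.0},
}


def transmitted_fraction(subpixel: str) -> float:
    """Fraction of the emitted white power that survives the subpixel's filter."""
    emitted = sum(WHITE_SPECTRUM.values())
    passed = sum(WHITE_SPECTRUM[band] * t for band, t in FILTERS[subpixel].items())
    return passed / emitted


for sp in "WRGB":
    print(sp, round(transmitted_fraction(sp), 3))
```

Even in this best case, each coloured subpixel discards about two thirds of the emitted light while the unfiltered W subpixel keeps all of it. That is why direct-emission RGB is more efficient, and why the W subpixel helps with brightness.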

------------------------------------------------------------

https://www.oled-info.com/qa-cynoras-ceo-discuss-companys-new-blue-5-emitter March 5, 2020

-------------------------------------------------------------

The main point being that they are all made into a white array with a clear, unfiltered (white) subpixel and R, G and B subpixel filters above it. I wasn't listing it as a negative, except perhaps at the highest HDR brightness levels. Rather, I was showing that they likely use the additional large, clear white spot in the filter as a subpixel to boost effective brightness to our eyes without having to drive the OLED emitters as hard. Cutting down on the actual brightness levels and heat of the OLEDs while still getting effective color brightness is probably one of the reasons burn-in isn't as much of an issue on LG OLEDs, especially given the brightness levels at the % window sizes they are able to hit in HDR now. Before LG developed this method, I believe non-WRGB OLEDs' blue subpixel emitters would wear out unevenly, much earlier than the others. As I said, the output per color probably also had to be higher without the added large white subpixel.
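One simple way to use the white subpixel as described above is to move the grey (achromatic) part of each colour onto W. The Python sketch below is a common textbook min-based RGB-to-RGBW mapping, not LG's own processing, which isn't public. It assumes linear drive levels in [0, 1]. If each colour filter passes only about a third of a white emitter's light, then showing a grey level w on the W subpixel needs roughly a third of the total emitter drive that lighting R, G and B together would need.

```python
# Simple "white extraction" mapping from RGB to RGBW drive levels: the grey
# component shared by all three channels is moved onto the unfiltered W
# subpixel. A textbook illustration only, NOT LG's actual processing.


def rgb_to_rgbw(r: float, g: float, b: float) -> tuple[float, float, float, float]:
    """Map linear RGB levels in [0, 1] to (R, G, B, W) subpixel levels."""
    for c in (r, g, b):
        if not 0.0 <= c <= 1.0:
            raise ValueError("channel values must be in [0, 1]")
    w = min(r, g, b)  # achromatic part common to all three channels
    return (r - w, g - w, b - w, w)


print(rgb_to_rgbw(1.0, 1.0, 1.0))  # full white: only the W subpixel is lit
print(rgb_to_rgbw(0.8, 0.5, 0.2))  # warm colour: W carries the grey part
```

Real panels also have to manage saturation and peak-brightness limits, which this sketch ignores.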

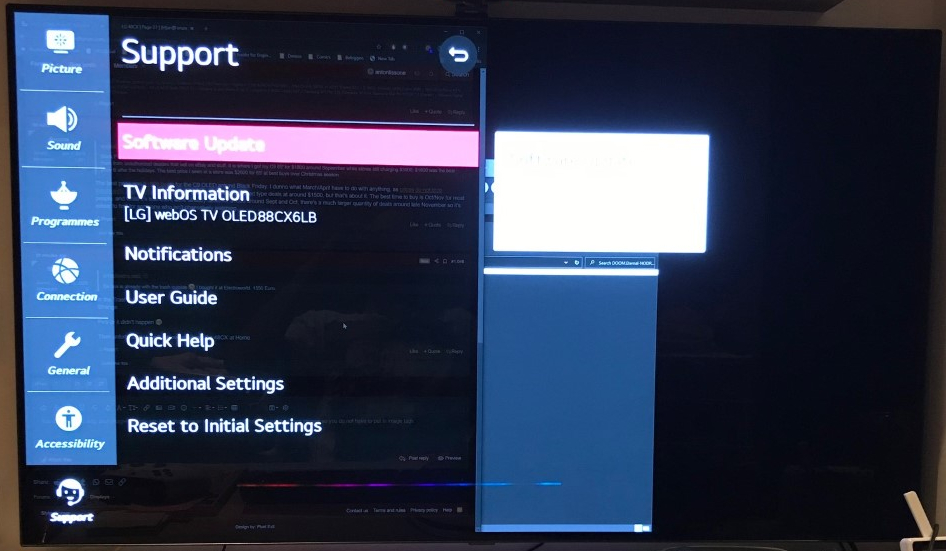

As the reviews I quoted said, LG's multi-layer WRGB tech is one of the reasons LG OLEDs are reliable enough and why LG OLED became dominant, so I wouldn't consider it a negative overall. It's a neat "hack"/workaround technology as the main tool against burn-in danger levels and OLED wear, and it works. (There are further protections too, of course: ABL, % window restrictions, pixel shifting, logo detection/dimming, OLED wear-evening cycles, etc.) However, others in the thread brought up the white subpixel structure as a potential negative for text rendering in regular desktop use, which is why it has been added to the conversation in that respect.

Thanks for clarifying; the way it was arranged made it look like yellow was being converted to blue.

Blue + yellow does make white; it's been done with lasers as well. It's the same principle as a white LED (blue emitter plus yellow phosphor), but here they produce the two separately: each white pixel is a yellow and a blue OLED source mixed together. They probably have too high losses/thermal dissipation for Stokes conversion the traditional way (around 30% with higher-CRI solutions), but unfortunately this blue/yellow approach will be less effective for red. That said, with the right filters they could get some converted emission.
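The Stokes loss mentioned above follows from photon energy E = hc/λ. Down-converting a short-wavelength photon to a longer one keeps at most λ_in/λ_out of the energy, and the rest becomes heat. The Python sketch below assumes a 450 nm blue pump, 570 nm yellow and 630 nm red targets, and a perfect quantum yield. Real converter losses come on top of this bound.

```python
# Best-case energy efficiency of down-conversion (Stokes shift).
# Photon energy E = h*c / wavelength, so converting one pump photon into one
# emitted photon keeps at most pump_nm / emit_nm of the energy.
# The wavelengths used below are assumed example values.


def stokes_efficiency(pump_nm: float, emit_nm: float) -> float:
    """Upper bound on energy efficiency of down-conversion (quantum yield = 1)."""
    if emit_nm < pump_nm:
        raise ValueError("down-conversion emits at a longer wavelength than the pump")
    return pump_nm / emit_nm


for target in (570, 630):  # assumed yellow and red emission peaks
    eff = stokes_efficiency(450, target)
    print(f"450 nm -> {target} nm: {eff:.1%} kept, {1 - eff:.1%} lost as heat")
```

Under these assumptions, converting blue to red loses noticeably more energy than converting blue to yellow (roughly 29% versus 21%) even before any other losses.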

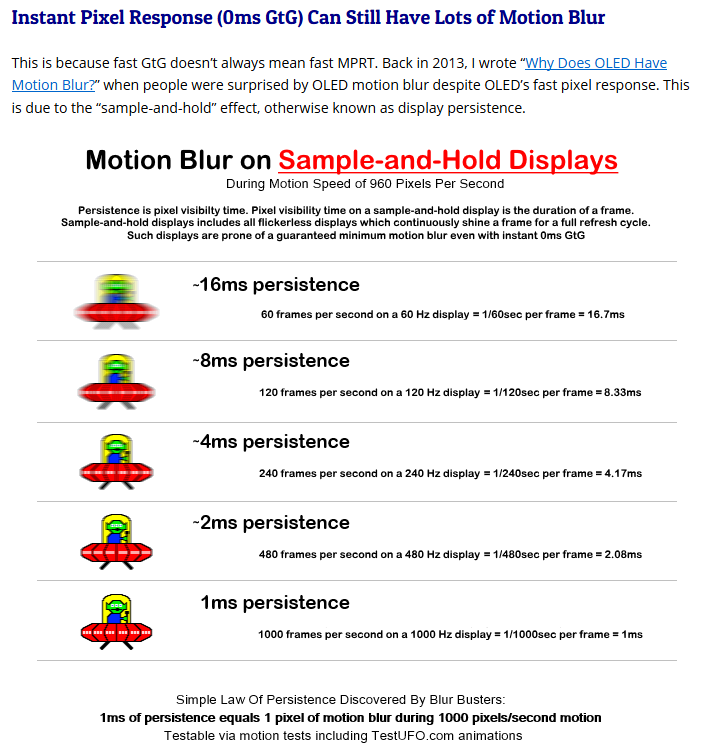

Another reason the CFA (colour filter array) approach will be easiest is the existing infrastructure and know-how from camera CMOS sensors. It is less efficient than direct-emission LEDs, though, except possibly for green, which has rather poor efficiency. However, the fact that green can produce 3-5x the lumens/W of blue and red at the same power level helps mitigate this. But as you said, it is the winning play at this point and will likely continue to be until further improvements are made in direct-emission RGBW OLED and in desktop software that renders text for different subpixel types. This has been an issue with some AUO panels and others before, so I think the time for it to be addressed is rapidly approaching, especially if owners make more noise...
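The green lumens/W advantage comes from the eye's sensitivity curve. For monochromatic light, luminous efficacy is 683 lm/W times the CIE 1924 photopic luminosity function V(λ). The Python sketch below uses a few tabulated V(λ) values and assumes 460 nm, 530 nm and 630 nm as representative blue, green and red. It works per watt of emitted light, not electrical input, so emitter efficiency multiplies on top.

```python
# Luminous efficacy of monochromatic light: lm/W = 683 * V(lambda), where V is
# the CIE 1924 photopic luminosity function. Only a few tabulated V values are
# included, so only these wavelengths are supported. The wavelengths chosen to
# stand for "blue", "green" and "red" are assumptions.

PEAK_EFFICACY_LM_PER_W = 683.0

# CIE 1924 photopic V(lambda) at selected wavelengths (nm).
V_LAMBDA = {460: 0.060, 530: 0.862, 555: 1.000, 630: 0.265}


def lumens_per_optical_watt(wavelength_nm: int) -> float:
    """Lumens produced by 1 W of optical power at a single tabulated wavelength."""
    return PEAK_EFFICACY_LM_PER_W * V_LAMBDA[wavelength_nm]


green = lumens_per_optical_watt(530)
for name, wl in (("blue", 460), ("red", 630)):
    ratio = green / lumens_per_optical_watt(wl)
    print(f"green 530 nm vs {name} {wl} nm: {ratio:.1f}x the lumens per watt")
```

With these assumed wavelengths, green comes out at roughly 3x red, within the 3-5x range mentioned above. Against deep blue the gap is considerably larger.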

Also, I wonder if the Y+B sub-subpixels for white are individually addressable, as that would enable some interesting warm/cold shifts of colour and blue boosting. But you can see why they are moving to RGB sub-subpixels for white; this way you can also work around the subpixel/text issue a little more easily.
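If the yellow and blue parts could be driven separately, changing their ratio would move the white point along the straight line between the two emitters' chromaticities. That is the warm/cold shift speculated about above. The Python sketch below shows standard additive mixing in CIE 1931 xyY: convert each source to XYZ, sum, and convert back. The emitter chromaticities and luminance splits are assumed placeholders, not measured values for LG's materials.

```python
# Additive mixing of light sources in CIE 1931 xyY. Each source is converted
# to XYZ, the XYZ values are summed, and the sum is converted back to (x, y).
# The mix always lies on the line between the source chromaticities.
# BLUE and YELLOW below are assumed placeholder coordinates.


def xyY_to_XYZ(x: float, y: float, lum: float) -> tuple[float, float, float]:
    """Convert chromaticity (x, y) plus luminance Y to tristimulus XYZ."""
    return (x * lum / y, lum, (1.0 - x - y) * lum / y)


def mix_xy(sources: list[tuple[float, float, float]]) -> tuple[float, float]:
    """Chromaticity (x, y) of several sources given as (x, y, luminance)."""
    X = Y = Z = 0.0
    for x, y, lum in sources:
        dX, dY, dZ = xyY_to_XYZ(x, y, lum)
        X, Y, Z = X + dX, Y + dY, Z + dZ
    total = X + Y + Z
    return (X / total, Y / total)


BLUE = (0.14, 0.08)    # assumed deep-blue emitter chromaticity
YELLOW = (0.45, 0.52)  # assumed yellow emitter chromaticity

for blue_lum, yellow_lum in ((10.0, 90.0), (20.0, 80.0)):
    x, y = mix_xy([(*BLUE, blue_lum), (*YELLOW, yellow_lum)])
    print(f"blue {blue_lum:.0f} + yellow {yellow_lum:.0f} -> x={x:.3f}, y={y:.3f}")
```

With these placeholder values, giving blue a larger share of the luminance moves the mix toward a cooler, bluer white.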

It looks like direct RGB (or more) emission from narrow-linewidth sources (the superior method, having seen them all) will mostly be the realm of VR/AR headsets and giant LED video walls at this point, but those semiconductor microLED solutions blow everything else out of the water: 1 kfps and 2 million nits of brightness. There isn't really much of a thermal limit with them compared to flexible printed media; they are closer to CPUs. And there are no text compatibility issues, as you can retain RGB if necessary.

I'd say after a CX48, one of these microLED headsets will be the next future-looking investment. The only limits for them soon will be FPGAs and interfaces (and power on your head, lol).
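Some rough arithmetic shows why interfaces become the bottleneck at 1 kfps. The Python sketch below assumes a hypothetical headset with two 2560 x 2560 panels at 30 bits per pixel (10 bits per RGB channel) and 1000 Hz. It ignores blanking and link-coding overhead.

```python
# Back-of-envelope uncompressed video bandwidth for a high-refresh headset.
# Resolution, bit depth and refresh rate are assumed example values;
# blanking intervals and link-coding overhead are ignored.


def uncompressed_gbps(width: int, height: int, bits_per_pixel: int,
                      refresh_hz: int, displays: int = 1) -> float:
    """Raw pixel data rate in gigabits per second."""
    return width * height * bits_per_pixel * refresh_hz * displays / 1e9


rate = uncompressed_gbps(2560, 2560, 30, 1000, displays=2)
print(f"{rate:.1f} Gbit/s uncompressed")
```

That comes to roughly 393 Gbit/s. For comparison, DisplayPort 2.0's fastest UHBR20 mode is 80 Gbit/s raw, so without compression this would need about five such links.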
