Blackstone

2[H]4U · Joined Mar 8, 2007 · Messages: 3,584

The display doesn't become special until there is a 2.1 HDMI GPU.

Ampere?



I was talking about scaling on monitors.

Nvidia's GPU scaling supports integer scaling, so you can just use that if you want to play at 1080p. To me, 1080p looks far worse than regular scaled 1440p paired with image sharpening. I find that 1440p to 4K is a much smaller difference than 1080p to 1440p. I tried games like RDR2, with lots of fine, small detail, and a lot of it is lost running at 1080p.

AI frame interpolation can look really good.

It might sound too good to be true, but if it works, I'm hoping they could eventually develop better interpolation to multiply raw frame rates on top of that. For example, say they use DLSS 2.0 on 1080p to produce a 4K display signal with learned fidelity, and it gets a solid 100fps; if a better form of interpolation were developed, they could then multiply that 100fps many times over to feed higher-Hz monitors. If DLSS is as good as they say it is, regular upscaling will be obsolete. Anything higher than 120Hz is really outside the scope of this thread, though, so that extreme-Hz talk is probably getting off topic.

It appears to me that they are promoting DLSS to counteract the massive frame rate hit that RTX ray tracing can cause, but if DLSS lets more people feed the 4K 120Hz this display is capable of with enough frame rate to stay above 100fps even without RTX, it could be a great thing. I'll reserve judgement until I see it in action in titles myself, but the videos look promising.

Mostly, but tests of processing features are of interest, and you can still drive it at 120Hz.

Size is something that can be fixed with a custom resolution, though; I already use that today. What I'm mainly looking for here are details about the actual difference in input lag, as I have seen both claims made here: that the difference is now so small that not even a pro would notice it (and I am far from being a pro), and that there is still a noticeable difference for fast-paced games.

They've quoted input lag as low as 6ms. Unless your common frame rate LOW is 120fps at 120Hz, you aren't even getting 8.3ms per frame, so that is pretty negligible input lag. Most people use VRR (G-Sync, FreeSync, HDMI Variable Refresh Rate) in order to push the graphics settings a bit higher and ride a frame rate that fluctuates plus or minus 15, 20, even 30fps around "my framerate", i.e. their frame rate average. That is, unless you are playing CS:GO or L4D2 or something that gets around 300fps to begin with.

----------------------------------

5 to 6ms is well under the frame times of the fluctuating frame rates most people are going to be running at 4K resolution with VRR and a moderate frame rate average, where even the lower range of their actual frame rates isn't above 100fps-Hz (10ms per frame).

I'll be happy with ~6ms on a monitor with such great per-pixel OLED visuals and other features (HDMI 2.1, VRR, 120Hz 4K 4:4:4, etc.), while also not being limited to a slim "belt" model only ~13" tall.

120Hz is 8.3ms per frame, and that is only if you are getting a solid 120fps all of the time as your common frame rate low (even ignoring a few frame rate "potholes" in your graph), which most people aren't in demanding games at settings near or at 4K resolution.

-------------------------------------------------------------------------------------------------------

120fps-Hz (solid, not average):

- 8.3ms per frame
- 50% reduction in sample-and-hold blur, down to a "soften blur" compared to the baseline 60fps-Hz smearing blur (~8.3ms persistence vs ~16.7ms persistence)
- double the motion definition, 2:1 ("# of unique pages/action states in a flip book flipping twice as fast"), incl. viewport movement and path articulation
- 10 frames shown to every 5 at 60fps-Hz solid (2:1)
- 10 frames shown to every 8.3 at 100fps-Hz solid (1.2:1)
- 10 frames to every 10 refreshes on a 120Hz screen at 120fps solid (1:1)

115fps-Hz rate cap (solid frame rate, not average):

- 8.7ms per frame
- ~48% reduction in sample-and-hold blur (8.7ms image persistence)

100fps-Hz (solid, not average):

- 10ms per frame
- ~40% reduction in sample-and-hold blur (10ms image persistence)
- 10 frames to every 6 shown at 60fps-Hz solid (~1.67:1)
- 10 frames to every 10 shown at 100fps-Hz solid (1:1)
- 10 frames to every 12 shown at 120fps-Hz solid (1.2:1, 20fps-Hz short of 120fps-Hz)

75fps-Hz (solid, not average):

- 13.3ms per frame
- ~20% reduction in sample-and-hold blur (13.3ms persistence); still smearing blur, only slightly moderated
- 10 frames to every 8 shown at 60fps-Hz solid (1.25:1)
- 10 frames to every 13.3 shown at 100fps-Hz solid (1.33:1)
- 10 frames to every 16 shown at 120fps-Hz solid (1.6:1)

60fps-Hz (solid, not average):

- 16.7ms per frame
- baseline "100%" smearing blur "outside of the lines" of individual objects, as well as of viewport movement of the whole game world at speed
- 1:1 motion definition (this is the 60fps-Hz baseline)
- 10 frames at 60fps-Hz to every 16.7 frames shown at 100fps-Hz [6 frames to every 10 shown at 100fps-Hz solid (5:3)]
- 10 frames at 60fps-Hz to every 20 frames shown at 120fps-Hz [5 frames to every 10 shown at 120fps-Hz solid (2:1, half)]

40fps-Hz (solid, not average):

- 25ms per frame
- baseline smearing blur or worse (25ms persistence is 1.5x the 60fps-Hz baseline); page-y, choppy, sluggish motion
- 10 frames to every 15 shown at 60fps-Hz solid (1.5:1)
- 10 frames to every 25 shown at 100fps-Hz solid (2.5:1)
- 10 frames to every 30 shown at 120fps-Hz solid (3:1)

30fps-Hz (solid, not average):

- 33.3ms per frame
- smearing blur; page-y/choppy animations; "molasses"-like movement, including FoV (viewport) movement, with low motion definition
- 10 frames to every 20 shown at 60fps-Hz solid (2:1)
- 10 frames to every 33.3 shown at 100fps-Hz solid (3.33:1)
- 10 frames to every 40 shown at 120fps-Hz solid (4:1)
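The per-rate figures above follow from a few lines of arithmetic. The sketch below recomputes them, assuming (our simplification, not the poster's wording) that sample-and-hold persistence equals the whole frame time and that the frame rate is locked to the refresh rate; the function names are illustrative only.

```python
"""Recompute frame time, sample-and-hold blur, and frame-count ratios.

Assumptions (illustrative): no BFI/strobing, so persistence equals the
full frame time, and fps is locked to Hz ("solid, not average").
"""

BASELINE_FPS = 60.0


def frame_time_ms(fps: float) -> float:
    """Milliseconds each frame stays on screen at a solid fps-Hz."""
    return 1000.0 / fps


def blur_reduction_vs_60(fps: float) -> float:
    """Fractional drop in sample-and-hold blur relative to 60fps-Hz.

    Blur scales with persistence, so this is 1 - 60/fps; a negative
    result means more blur than the 60fps-Hz baseline.
    """
    return 1.0 - BASELINE_FPS / fps


def frames_shown_at(fps: float, other_fps: float, frames: int = 10) -> float:
    """Frames a display at other_fps shows while this one shows `frames`."""
    return frames * other_fps / fps


if __name__ == "__main__":
    for fps in (120, 115, 100, 75, 60, 40, 30):
        others = ", ".join(
            f"{frames_shown_at(fps, o):.1f} at {o}" for o in (60, 100, 120)
        )
        print(
            f"{fps:>3}fps-Hz: {frame_time_ms(fps):4.1f}ms per frame, "
            f"blur vs 60fps-Hz {blur_reduction_vs_60(fps):+.0%} reduction, "
            f"10 frames = {others}"
        )
```

For example, the 30fps-Hz row works out to 33.3 frames at 100fps-Hz for every 10 of its own, and the 115fps-Hz cap to roughly a 48% blur reduction.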

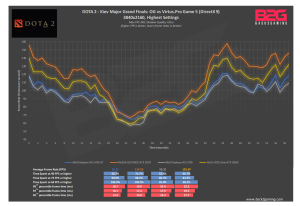

(source of image below: back2gaming.com)

When we talk about gaming OLED monitors, we won't use HDMI 2.1 nonsense with them but DisplayPort 2.0, which has a stupendous amount of bandwidth (80Gbit/s) and bandwidth-saving techniques such as DSC (Display Stream Compression), which offers 3:1 compression with little to no visual quality impact. With a 2160p gaming monitor you would not really need to drop resolution to save bandwidth. You might want to do so to get better frame rates from your GPU, but with all the fancy AI upscaling techniques that we already have and that are being actively developed, it might just be needed. If, however, you really wanted integer-scaled 1080p (or e.g. 1440p on a 2880p monitor), you could easily use the GPU's own integer scaling without even remotely worrying about connection bandwidth.
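As a rough check on the bandwidth argument, the sketch below estimates the uncompressed data rate of a hypothetical 3840x2160, 240Hz, 10-bit RGB signal and compares it, with and without 3:1 DSC, to the 80Gbit/s quoted above. It counts active pixels only (no blanking) and treats 80Gbit/s as usable, although that is the raw link rate and the real payload is somewhat lower, so treat the numbers as optimistic approximations.

```python
"""Back-of-envelope display bandwidth check (active pixels only, no blanking)."""

DP20_LINK_GBPS = 80.0  # raw DisplayPort 2.0 figure quoted in the post
DSC_RATIO = 3.0        # "3:1" DSC compression quoted in the post


def video_gbps(width: int, height: int, hz: float, bits_per_pixel: int) -> float:
    """Uncompressed active-pixel data rate in Gbit/s."""
    return width * height * hz * bits_per_pixel / 1e9


if __name__ == "__main__":
    # Hypothetical 2160p 240Hz gaming OLED, 10 bits per channel RGB = 30 bpp.
    raw = video_gbps(3840, 2160, 240, 30)
    compressed = raw / DSC_RATIO
    print(f"uncompressed {raw:.1f} Gbit/s, with 3:1 DSC {compressed:.1f} Gbit/s")
    # Ignores blanking and link-coding overhead, so this is optimistic.
    print("under the raw link rate:", raw <= DP20_LINK_GBPS)
```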

I also think that gaming OLEDs won't be 120Hz but more like 240Hz.

OLED gaming "monitors"? 240Hz? Not trying to be a smart alec, serious question: is there any evidence something like this exists or is in development? I've heard there are a couple of OLED "monitors" on the market for thousands of dollars, but are there some "semi-mainstream" ones targeted at gaming being developed? Links?

None that I know of. Mostly, there's nothing stopping such a product once panels are produced in volume at those sizes. 240Hz is small potatoes for OLED, while it's stretching LCD to its limits.

Sooner or later we will have gaming OLEDs, and by that time I guess they will be 240Hz, and of course no one will even want to run them with current GPUs and current bandwidth limitations.

OK, I just got my LG 48CX connected to my 2080 Ti, and here are the options for running the display. But there are limitations:

1 - 4:2:0, 8-bit, 3840 x 2160 @ 60Hz max / with G-Sync / with HDR

2 - 4:4:4/RGB, 8-bit, 3840 x 2160 @ 60Hz max / with G-Sync / with HDR

3 - 4:2:0, 8-bit, 3840 x 2160 @ 120Hz max / without G-Sync / without HDR

4 - 4:2:0, 8-bit, 2560 x 1440 @ 120Hz max / with G-Sync / with HDR

I've also tried custom resolutions of 3840x1600 and 3840x1440. The test works fine (the image is stretched, but I'm guessing that's a TV setting issue), but enabling them doesn't work.

Am I missing something? I'd like to run option 3, but with G-Sync and HDR... the difference between 60Hz and 120Hz on the desktop is VERY noticeable.

Is this a G-Sync limitation of the display? Or a bandwidth limitation? I'd assume the latter would be resolved by upgrading to a 3080 Ti, IF that supports HDMI 2.1.
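On the bandwidth side of that question, a quick calculation suggests why the 4K modes listed above top out where they do over the 2080 Ti's HDMI 2.0 output. The sketch assumes the standard CTA-861 4K timing (4400 x 2250 total pixels per frame, including blanking) and about 14.4Gbit/s of usable HDMI 2.0 payload (18Gbit/s TMDS minus 8b/10b coding); it says nothing about G-Sync, which is a separate question, and the function names are ours.

```python
"""Does a 3840x2160 mode fit in HDMI 2.0? (rough check, assumptions above)"""

HDMI20_PAYLOAD_GBPS = 14.4     # 18 Gbit/s TMDS with 8b/10b coding
TOTAL_W, TOTAL_H = 4400, 2250  # CTA-861 4K timing incl. blanking

# Average colour samples per pixel: RGB/4:4:4 sends 3, 4:2:0 sends 1.5.
SAMPLES_PER_PIXEL = {"444": 3.0, "420": 1.5}


def required_gbps(hz: int, chroma: str, bits_per_channel: int) -> float:
    """Approximate link payload needed for a 4K mode, in Gbit/s."""
    bpp = SAMPLES_PER_PIXEL[chroma] * bits_per_channel
    return TOTAL_W * TOTAL_H * hz * bpp / 1e9


def fits_hdmi20(hz: int, chroma: str, bits_per_channel: int) -> bool:
    """True if the mode's payload is within the assumed HDMI 2.0 budget."""
    return required_gbps(hz, chroma, bits_per_channel) <= HDMI20_PAYLOAD_GBPS


if __name__ == "__main__":
    for hz, chroma, bpc in [(60, "444", 8), (60, "444", 10),
                            (120, "420", 8), (120, "420", 10), (120, "444", 8)]:
        need = required_gbps(hz, chroma, bpc)
        verdict = "fits" if fits_hdmi20(hz, chroma, bpc) else "too much"
        print(f"4K {hz}Hz {chroma} {bpc}-bit: {need:.2f} Gbit/s -> {verdict}")
```

Under these assumptions, 4K 120Hz only squeezes in as 8-bit 4:2:0 (about 14.26Gbit/s), while 10-bit 4:2:0 at 120Hz would need about 17.8Gbit/s, which may explain why HDR drops out in that mode; a faster HDMI 2.1 link is what would lift this ceiling.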

snip

Question for you, since you are the first 48CX owner I've seen online: how is the motion clarity at 4K/120Hz 4:2:0 with the TruMotion BFI settings turned on? And how bad are the brightness drops between settings?

I'll try later today. But I think I prefer G-Sync...

Well, that is not good news. What about using different input icons? I assume you are using "PC input" at the moment? TruMotion isn't working even at 60Hz 4K? Something seems off. Try turning off Auto Low Latency Mode and see what happens.

Thanks. Killing 'Instant Game Response' did the trick.

I'd almost say I like motion less sharp in Doom... At 60Hz on High, it almost looks like stuttering when moving the crosshair vertically, like movies panning a camera at 24p... At 120Hz it kills HDR. I'll do another test at 1440p with HDR at 120Hz and see if that yields different results for me.

That's a shame. My understanding is that "Instant Game Response" basically enables low latency mode for whatever preset you are using, so it disables some processing to give you lower input lag. For the C9, Rtings.com reports 50.6ms of input lag outside game mode, which is going to be annoying to play with. My previous Samsung KS8000 TV was about 20ms in SDR and 35ms in HDR with game mode on, and the difference was noticeable, whereas the 20ms did not bother me at all and I played plenty of input-precise games through just fine.

So I guess BFI is not going to be an option for gaming but might give you slightly better motion on movies and TV. The C9 only has an on/off toggle for this and personally I did not notice any relevant difference when watching some HDR TV series.

If you are trying to spot BFI on vs. off, it's best to look at how a game looks when it runs at a high frame rate and you are moving faster. Everything will just stay in focus better.

OK, here's the result. I'm not sure what to think, or maybe it's just my bad eyes.

@60 Hz:

Off vs Low: I don't notice a difference. Slight drop in brightness, but let's call that irrelevant. Images (UFO) not crisp.

Low vs Medium: No noticeable difference in motion blur. Further drop in brightness; not too bad close up to the screen.

Medium vs High: The images are now crisp. Massive difference. BUT the screen flicker is noticeable to the eye, especially for brighter colors and white.

@120Hz:

Off vs Low: Slight motion blur. Better than Medium at 60Hz, but not as crisp as High at 60Hz.

Low vs Medium: Good. Sweet spot. Drop in brightness not too bad. Looks like High at 60Hz.

Medium vs High: No difference from Medium other than the brightness drop. No flicker like at 60Hz.

OK, now playing Doom:

* 120Hz is much smoother than 60Hz... but I prefer HDR in DOOM over a higher frame rate. I think...

* Playing at 60Hz with TruMotion set to High reduces brightness to a degree where overall it still looks good, but ceiling lights, neon signs, distant flames, and other things that really pop in HDR are now boring. I don't think this is an option. Also, I don't notice a sharper image when moving, and the flicker is less visible in darker areas, but when larger areas are bright it is noticeable in game.

* At 120Hz, the brightness on Medium (High makes no difference in blur) is not that much lower than Off/Low, although in this case none of it is HDR because of the bandwidth limitations, and as I said, HDR looks good in DOOM. For me, I just don't see less motion blur when moving my crosshair. Fast or slow doesn't really matter, and neither does flying debris, enemy rockets, etc.

So I'll stick to G-Sync HDR at 60Hz for now, until 3080 Tis become available. Then I'll do 120Hz HDR. I don't see any gaming value in BFI.


I know you see a difference in the UFO test... but in game I'm not so sure.

I just did 2560x1440 DOOM HDR/G-Sync with TruMotion at High. You do notice things stay sharper at higher movement speeds and when things get 'busy'... but the dimmer display is also visible in HDR mode. I don't know what I prefer at this point.

G-Sync is the strong suit. The CX having 120Hz BFI is, however, undoubtedly superior for ye olde 24Hz content with proper 5:5 pulldown. The C9's advantage of 12-bit 120Hz in 4:4:4 probably won't materialize, given that the most recent 120Hz 4K 3D film, Gemini Man, was an utter flop, so there is no want or demand at all for any Dolby Vision releases in that format for likely the next decade, along with the movie industry being utterly destroyed. I also can't imagine that Nvidia would give LG the coveted G-Sync certification just to screw them over by relegating their freshest displays to 8-bit. So await driver/firmware updates, which will most likely give you full use of the display.
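The "5:5 pulldown" point comes down to cadence: 120 is an exact multiple of 24, so every film frame can be held for the same five refreshes, whereas 60Hz forces the uneven 3:2 cadence that causes judder. A minimal sketch (the function name is ours, not any player's API) that derives the cadence for a given source rate and refresh rate:

```python
"""Derive a pulldown cadence: refreshes each source frame is held for."""


def pulldown_cadence(content_fps: int, refresh_hz: int, frames: int = 4) -> list[int]:
    """Return how many refreshes each of the first `frames` source frames gets.

    Frame i ends at refresh floor(i * refresh_hz / content_fps), so the
    cadence averages exactly refresh_hz / content_fps refreshes per frame.
    """
    cadence: list[int] = []
    shown = 0
    for i in range(1, frames + 1):
        end = (i * refresh_hz) // content_fps
        cadence.append(end - shown)
        shown = end
    return cadence


if __name__ == "__main__":
    print("24fps on 120Hz:", pulldown_cadence(24, 120))  # even 5:5 cadence
    print("24fps on 60Hz: ", pulldown_cadence(24, 60))   # uneven 2-3 (3:2) cadence
```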


At 4K 120Hz 4:2:0, G-Sync will still show as on in the NVIDIA Control Panel. However, if you run the NVIDIA Pendulum demo, the G-Sync checkbox is off and you cannot enable it. Also, the pendulum movement isn't as smooth.

Sorry, it looks like I got in the way of your reply to Vega above...

Are you seeing any of the same issues this guy is seeing?

[.....]


Interesting. High TruMotion at 4K/120Hz should be quite a noticeable increase in motion clarity. From what I've read in the past, I believe that is 50% black frame insertion, so motion clarity should be doubled and, in theory, you lose half the brightness. 60Hz BFI shouldn't really be tested IMO, as 60Hz is always too low a frequency for non-irritating viewing. Remember: to properly test BFI, your FPS should be locked to the refresh rate with V-Sync ON; otherwise you will get visual artifacts. So for 120Hz BFI mode, make sure your DOOM FPS is locked at 120 with V-Sync ON.

Assuming you rule out 75% outright, the 318 nits they use as a basis with BFI off (presumably with ABL enabled, i.e. not avoided) gets:

- cut by 40% at the Medium BFI setting, which mathematically results in 190.8 nits, close to the 187 nits they measured.

- cut by 25% at Low BFI, which mathematically gives 238.5 nits. That is lower than the 273 nits they quoted as measured, so perhaps Low is not as aggressive as a 25% brightness reduction; perhaps interpolation is on too, which would make sense if the input lag is higher. I'm not sure the tradeoffs would be worth running Low BFI rather than no BFI with a raw fps hovering around a 120fps+120Hz graph, considering the PWM effect on your eyes and the input lag increase from BFI.


Neither of those quotes shows the after-ABL values. ABL at regular SDR brightness cuts it down to around 140 nits on a C9 before the BFI brightness reduction is considered, so if you used Medium (40%) you could end up with 84 nits* of scene color brightness/highlights/detail in areas of color, post-ABL.


If you run at around a 250-nit color brightness/color detail ceiling in SDR, with contrast at 80 and peak brightness set to "Off" to avoid ABL kicking in, Medium BFI cuts 40% of 250, for a result of around 150 nits of color detail/brightness (coincidentally close to the ~140 nits you get in the default ABL scenario after ABL kicks in, before any BFI brightness reduction).

--------------------------------------------------------------------

So would you want to run SDR on the screen:

- normally, at ~320 nits of color detail, but seeing 140-nit ABL kick in intermittently
- normally + Medium BFI: ~190 nits of color detail (320 nits minus 40%) until ABL kicks in, then down to as low as *84 nits (the 40% strobe effect subtracted from the 140-nit ABL level). 40% blur reduction (compared to 60fps+60Hz but not 120fps+120Hz), PWM, input lag.
- ABL-avoiding (contrast 80, peak brightness "Off"): ~250 nits, with no ABL triggering
- ABL-avoiding (contrast 80, peak brightness "Off") + BFI active: 250 nits minus 40%, lowering it to ~150 nits average screen brightness (and detail limit in fields of color) throughout. 40% blur reduction compared to 60fps+60Hz, perhaps 20% vs 120fps+120Hz, plus PWM and an input lag increase.

*That is because, as I understand it, BFI's dimming effect is on the way our eyes perceive the screen, meaning LG's ABL burn-in safety tech would not see the after-BFI effective brightness and would still operate normally, as if BFI weren't a factor.
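The nit figures in this post are simple multiplications. The sketch below reproduces them under the post's own assumptions: Low and Medium BFI remove a flat 25% and 40% of perceived brightness (the measured 273 nits for Low suggests the real Low cut is smaller), and ABL acts on the pre-BFI level, so the two reductions multiply. With those inputs, 250 nits minus 40% comes to 150 nits. The dictionary and function names are illustrative, not LG terminology.

```python
"""Perceived brightness with BFI, using the percentages assumed in the post."""

# Fraction of perceived brightness removed by each BFI setting (post's assumption).
BFI_CUT = {"off": 0.0, "low": 0.25, "medium": 0.40}


def perceived_nits(panel_nits: float, bfi: str) -> float:
    """Brightness after BFI, given the panel's (post-ABL) output in nits."""
    return panel_nits * (1.0 - BFI_CUT[bfi])


if __name__ == "__main__":
    scenarios = {
        "measurement basis, BFI off": 318,
        "normal SDR": 320,
        "normal SDR after ABL kicks in": 140,
        "ABL-avoiding (contrast 80, peak brightness Off)": 250,
    }
    for name, nits in scenarios.items():
        print(f"{name}: {nits} nits -> low {perceived_nits(nits, 'low'):.1f}, "
              f"medium {perceived_nits(nits, 'medium'):.1f}")
```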

Does BFI work with any refresh rate, like 75Hz, 90Hz, 101Hz, etc.? Or do you have to choose either 60 or 120Hz?

I wanted to know the details of how it works as well, especially with VRR activated and working fully, because your frame rate and Hz would be varying and, for most people, dropping considerably at the low end of a game's graph. A 60-90-130 graph didn't seem like it would play nice with BFI.

It seems like "Chief Blur Buster" Mark R. is saying that Hz/fps is unlinked from the black frame, since it's a per-pixel strobe.

"Hz and persistence can be unlinked/unrelated thanks to strobe duty cycle adjustment."

"Does not matter if full strobe or rolling scan, as it is per-pixel duty cycle.

Note: That said, non-global illumination can cause artifacts (e.g. skewing during scan of any CRT, OLED (including strobed and nonstrobed rolling scans) or nonstrobed LCD"

"rolling strobe on OLED can be fractional refreshes, so OLED BFI can actually be arbitrary lengths unrelated to refresh cycle length. Since the off-pass can chase behind the on-pass simultaneously on the same screen at an arbitrary distance"

from blurbusters.com:

Strobing on OLEDs sometimes has to behave differently, because there's no independent light source separate from the pixel refresh, as there is on LCDs.

As a result, strobing on most OLEDs is almost always rolling-scan strobe (some exceptions apply, as some panels are designed differently: OLED transistors can be preconfigured during the scanout refresh, and then an illumination voltage does a global illumination at the end).

However, most large OLED panels have to do a rolling-scan strobe for reducing persistence. Also, rolling strobe on OLED can be fractional refreshes, so OLED BFI can actually be arbitrary lengths unrelated to refresh cycle length. Since the off-pass can chase behind the on-pass simultaneously on the same screen at an arbitrary distance, much like it did on the Dell UP3017Q monitor. Like changing the phosphor of a CRT to a shorter or medium persistence (except it’s a squarewave rather than a fade wave), CRT phosphor persistence length being unrelated to refresh cycle length. So BFI isn’t necessarily integer divisors here, and the meaning of “strobing” vs “BFI” is blurred into one meaning.

- An OLED with a 50%:50% on BFI will reduce motion blur by 50% (half original motion blur)

- An OLED with a 25%:75% on BFI will reduce motion blur by 75% (quarter original motion blur)

Typically, most OLED BFI is only in 50% granularity (8ms persistence steps), though the new 2019 LG OLEDs can do BFI in 25% granularity at 60Hz and 50% granularity at 120Hz (4ms persistence steps).

Except for the virtual reality OLEDs (Oculus Rift, 2ms persistence), no OLEDs can currently match the short pulse length of a strobe backlight, though I'd expect a 2020 or 2021 LG OLED to be able to do so.

[Edit by elvn: they dropped BFI from the 2019 models, but it seems the numbers apply to the 2020 OLEDs as 15% and 40%.]
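The duty-cycle rules quoted above reduce to two one-line formulas: persistence = frame time x lit fraction, and remaining blur = lit fraction. A minimal sketch, assuming ideal square-wave (on/off) strobing; the function names are ours.

```python
"""Persistence and blur from BFI duty cycle (ideal square-wave strobe assumed)."""


def persistence_ms(refresh_hz: float, black_fraction: float) -> float:
    """How long each refresh is actually lit, in milliseconds."""
    return (1000.0 / refresh_hz) * (1.0 - black_fraction)


def remaining_blur(black_fraction: float) -> float:
    """Motion blur relative to plain sample-and-hold at the same refresh rate."""
    return 1.0 - black_fraction


if __name__ == "__main__":
    # 50%:50% BFI halves blur; 25%:75% (on:off) leaves a quarter of it.
    print(remaining_blur(0.50), remaining_blur(0.75))
    # A 25% step of a 60Hz refresh and a 50% step of a 120Hz refresh
    # are the same length, roughly 4.2ms.
    print(f"{persistence_ms(60, 0.75):.2f}ms vs {persistence_ms(120, 0.50):.2f}ms")
```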

Black duty cycle is independent of refresh rate. However, percentage of black duty cycle is directly proportional to blur reduction (at the same (any) refresh rate). i.e. 75% of the time black = 75% blur reduction. Or from the visible frame perspective: Twice as long frame visibility translates to twice the motion blur.

Does not matter if full strobe or rolling scan, as it is per-pixel duty cycle.

Note: That said, non-global illumination can cause artifacts (e.g. skewing during scan of any CRT, OLED (including strobed and nonstrobed rolling scans) or nonstrobed LCD -- when viewing http://www.testufo.com/blurtrail will skew in nonlightboost at 32pps -- but stops skewing in lightboost or global strobe. Also, if viewing animation on iPad, rotate display until you see line skew)

Bell curve strobe rather than squarewave strobe can be useful and may look better for some applications other than VR, or for slower motion/unsynchronized (VSYNC OFF) motion, as a slight persistence softening can reduce the harshness of microstutters from non-perfect refreshrate-framerate synchronization. But for ultrafast refresh-rate-synchronized motion, minimum motion blur dictates point strobing (as short a persistence as possible, which is electronically easier with squarewave...just turn off the pixel automatically mid-refresh...independent of the exact refresh interval...aka Rolling Scan).

There is no such thing as "180Hz internal operation" as Oculus is already 80-90% black duty cycle (2ms persistence, despite 1/90sec = ~11ms refresh cycles) -- it is just a pixel-turnoff time delay, in a rolling scan algorithm. You adjust persistence simply by chasing the off-scan closer to the on-scan. See high speed videos. Screen gets darker the more you shorten a rolling scan OLED persistence, and you may get into color nonlinearities as active matrix transistors turning on/off OLED pixels aren't perfectly squarewave at the sub-millisecond level.

Good VR makes perfect framerate-refreshrate vsyncing mandatory, unfortunately for Holodeck immersion. No ifs, no buts, no protest; it is just the way the cookie crumbles for VR. In this case, striving for CRT-style curve strobing (instead of square wave strobing) is rather useless UNLESS you need it to hide imperfections (e.g. flaws in the display) or reduce eyestrain at lower strobe rates.

1ms of persistence translates to 1 pixel of motion blur for every 1000 pixels/second of motion. (We call this the "BlurBusters Law".) For motion on a 4K display going one screenwidth per second, at 1ms strobe flashes, all 1-pixel fine details get motion-blurred to 4 pixels from the act of eyetracking against the visible part of the refresh cycle.

This can also be observed in action by the motion-induced optical illusion that only appears during eye tracking: http://www.testufo.com/eyetracking

If you have an adjustable-persistence monitor:

You can even see the relationship between persistence and motion blur, then use your persistence adjustment (strobe duty cycle adjustment, e.g. ULMB strobe adjustment, BENQ Blur Reduction strobe length, etc) while watching http://www.testufo.com/blurtrail ... The line blurring goes exactly twice the blurring (no matter the refresh rate) for double the persistence, and follows BlurBusters Law (1ms strobe length = 1 pixel added motion blur during 1000 pixel/sec motion, but becomes 2 pixels of blurring at 2ms persistence)

Hz and persistence can be unlinked/unrelated thanks to strobe duty cycle adjustment. But if you wanted to completely eliminate black periods, a 2ms persistence display with no strobing would need to run 500fps@500Hz flicker-free to match LightBoost 2ms, or Oculus flicker rolling scan 2ms. This is because 1 second divided by 2ms equals 500 visible distinct frames needed to fill all 2ms slots of 1 second to avoid any blackness, while keeping the motion of each frame perfectly in sync with eye movements. Even a 2000fps@2000Hz display (needed for 0.5ms full persistence with zero black cycle) would still have human-visible motion blur in extreme conditions (e.g. fast head turning with 4K or 8K VR while trying to read fine text on a wall). Oculus knows this. Michael Abrash confirms the relationship between persistence and motion blur.
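The BlurBusters Law and the 500fps@500Hz figure in the quote above reduce to a multiplication and a reciprocal. A small sketch, with function names of our own choosing:

```python
"""BlurBusters Law: 1ms of persistence = 1px of blur per 1000px/s of motion."""


def motion_blur_px(persistence_ms: float, speed_px_per_s: float) -> float:
    """Eye-tracking motion blur width in pixels."""
    return persistence_ms * speed_px_per_s / 1000.0


def flickerfree_fps_for(persistence_ms: float) -> float:
    """fps (= Hz) a non-strobed display needs for frames lasting persistence_ms."""
    return 1000.0 / persistence_ms


if __name__ == "__main__":
    speed = 3840  # one 4K screen width per second
    for ms in (1.0, 2.0, 1000.0 / 120, 1000.0 / 60):
        print(f"{ms:5.2f}ms persistence -> {motion_blur_px(ms, speed):5.1f}px blur")
    print(flickerfree_fps_for(2.0), flickerfree_fps_for(0.5))  # 500Hz and 2000Hz
```

At that speed, 1ms of persistence gives about 3.8px of blur (the "4 pixels" above), while plain 120Hz and 60Hz sample-and-hold give about 32px and 64px.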

Are you seeing any of the same issues this guy is seeing?

Can confirm the Dolby Vision Black Crush. It's really bad.

Unable to test the other items.

(Owner of a CX 55")

Has any manufacturer actually managed to make 2020 models that are a clear improvement over the 2019 ones? I'm guessing we can't really blame this on COVID-19 either, since these sets should have been finalized well before that (although it could affect manufacturing and delivery, of course).

I must say that I am also feeling more and more doubtful about replacing my gaming monitors with current TVs; they just do not feel ready, even though they should be more than capable at the "panel level".

He reports 3 things:

* eARC issues. Cannot test; not using this in my setup.

* Black levels in Dolby Vision broken. I can test tonight when it's dark outside, just running Nightflyers or something on Netflix.

* Black levels in VRR are broken. I'll test this tonight as well, in Doom or any other game.

Other suggestions? I've looked at http://www.lagom.nl/lcd-test/black.php#blacktest.png, but that seems to work perfectly (although that is on the desktop).

To those of you planning on using the CX as a PC display... why do you care about eARC? I use it on my 65" C9 because it's my living room TV, routing my speaker setup through the receiver, which works perfectly... but for my PC application I use studio monitors routed through my Schiit DAC/amp combo and couldn't care less about eARC on that one.

Testing in game (Doom) - there's definitely an improvement in motion with BFI (use auto or high). It's most noticeable when strafing rather than aiming.

The problem is it gets thrown off by low frame rates or input lag; I'm not sure which exactly. It worked well at 1080p 120fps regardless of V-Sync. But when I ran at 4K with lower frame rates, input lag increased (not sure if just due to the lower FPS itself) and the BFI got thrown off to the point where it actually made the motion worse. HDR itself didn't seem to affect it. Also, below 120Hz the flicker is terrible.