elvn

I never said it was all about maximum image smoothness. I merely mentioned the fact that IPS still has less motion blur, as does the VA.

Higher Hz screens do, but only when the game's frame rate approaches their peak Hz: 240Hz at 220fps+, 360Hz at 220fps - 330fps+, etc. 175fpsHz is marginal, and even that is only when actually running at 175fps.

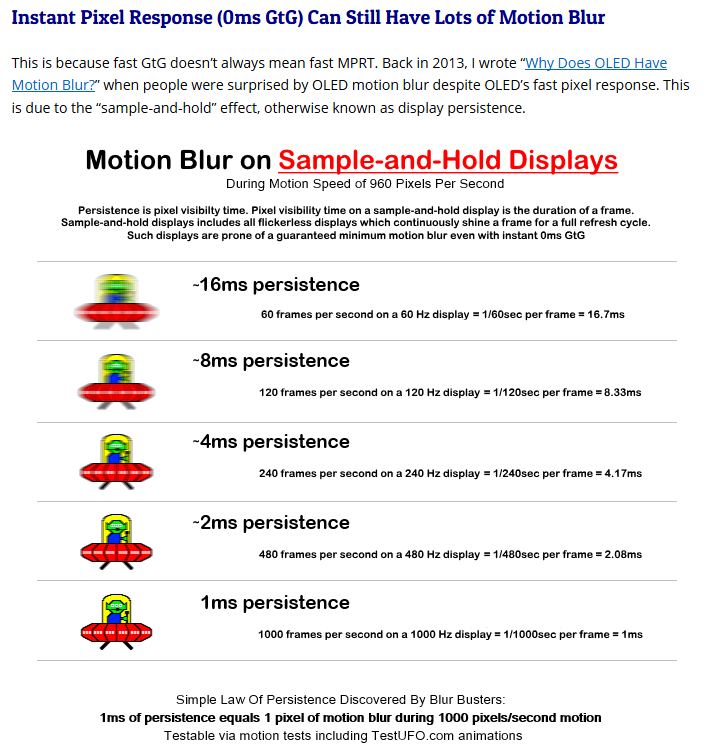

At the same FPS ranges, all of the screens would have nearly the same sample-and-hold blur. That is the case where the top 2/3 of the frame rate range is hovering near 120fpsHz on demanding games at 4k resolution, or more commonly lower than that on average, given how demanding 4k is and how high the graphics settings ceilings go at very high plus to ultra settings. The one exception is that the OLED would probably look slightly tighter due to its response time.

------------------------------

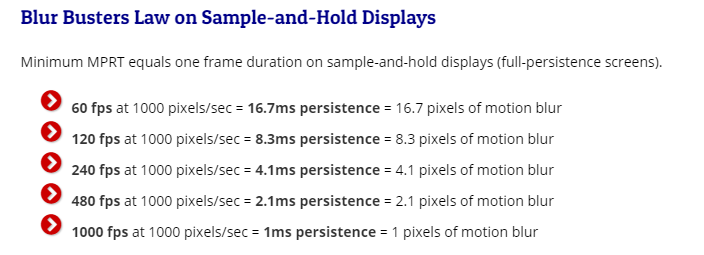

- 60 fps at 1000 pixels/sec = 16.7ms persistence = 16.7 pixels of motion blur

- 120 fps at 1000 pixels/sec = 8.3ms persistence = 8.3 pixels of motion blur

- 240 fps at 1000 pixels/sec = 4.2ms persistence = 4.2 pixels of motion blur

- 480 fps at 1000 pixels/sec = 2.1ms persistence = 2.1 pixels of motion blur

- 1000 fps at 1000 pixels/sec = 1ms persistence = 1 pixel of motion blur

Assumptions: The exact Blur Busters Law minimum is achieved only if pixel transitions are fully square-wave (0ms GtG) on fully-sharp sources (e.g. VR, computer graphics). Actual MPRT numbers can be higher. Slow GtG pixel response will increase numbers above the Blur Busters Law guaranteed minimum motion blur.
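If anyone wants to check the numbers above for their own frame rates, here is a rough Python sketch of the same arithmetic. The function names are my own, not from Blur Busters. It assumes ideal sample-and-hold with 0ms GtG, so it only gives the minimum blur, and it assumes VRR so that each frame is held for 1/fps whenever the frame rate is below the refresh rate. Values are rounded to one decimal place.

```python
def persistence_ms(fps: float) -> float:
    """Time each frame stays on screen for ideal sample-and-hold, in ms."""
    return 1000.0 / fps


def blur_px(fps: float, speed_px_per_s: float = 1000.0) -> float:
    """Minimum motion blur in pixels: distance moved during one frame hold."""
    return speed_px_per_s / fps


def effective_blur_px(refresh_hz: float, fps: float,
                      speed_px_per_s: float = 1000.0) -> float:
    """Blur on a given panel at a given game frame rate.

    Assumption: the panel cannot show more unique frames than its refresh
    rate, so the effective rate is whichever of the two is lower.
    """
    return blur_px(min(refresh_hz, fps), speed_px_per_s)


if __name__ == "__main__":
    for fps in (60, 120, 240, 480, 1000):
        print(f"{fps} fps: {persistence_ms(fps):.1f} ms persistence, "
              f"{blur_px(fps):.1f} px of blur at 1000 px/sec")

    # Same frame rate on different panels gives the same minimum blur.
    for hz in (120, 175, 240, 360):
        print(f"{hz}Hz panel at 120 fps: {effective_blur_px(hz, 120):.1f} px")
```

The second loop is the point about frame rate ranges: at 120 fps, the 120Hz, 175Hz, 240Hz and 360Hz panels all come out to the same minimum blur, and any remaining difference is down to pixel response time, which this sketch ignores.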
