OFaceSIG

2[H]4U

- Joined

- Aug 31, 2009

- Messages

- 4,034

I don't know who this processor is for.

Someone who likes building water cooling setups.

It's for people like me. Which is why I have two 10900k's.

Nice. Any luck with overclocking?

Yes, the ES chip can do 5.3 ghz all cores at around 1.310v-1.320v load or something. But I'm having some weird issue where it seems (at least from a few quick tests) that using a cache ratio of x50 makes the system MORE stable than a cache ratio of x49! I was getting CPU Cache L0 errors in Cinebench R20 at x53/x49 at 1.305v load. ES CPU bug? Or am I just cursed? idk...Maybe everything's going to hell because the load temps are above 90C...and we don't talk about fight club when your load temps are above 90C! But when I set cache to x50...it was stable? What...

My retail chip seems to need absurd volts for 5.3 all cores (like 1.4v at full load), but the 4.7, 4.8, 4.9 and 5 ghz voltages seem to be exactly the same load voltage requirement as my ES.

basically, the retail chip falls off at 5.2 ghz, and falls off HARD at 5.3 ghz.

Interesting. Perhaps your retail chip was a 'C' batch.

https://www.techpowerup.com/266741/msi-shares-fascinating-insights-into-comet-lake-binning

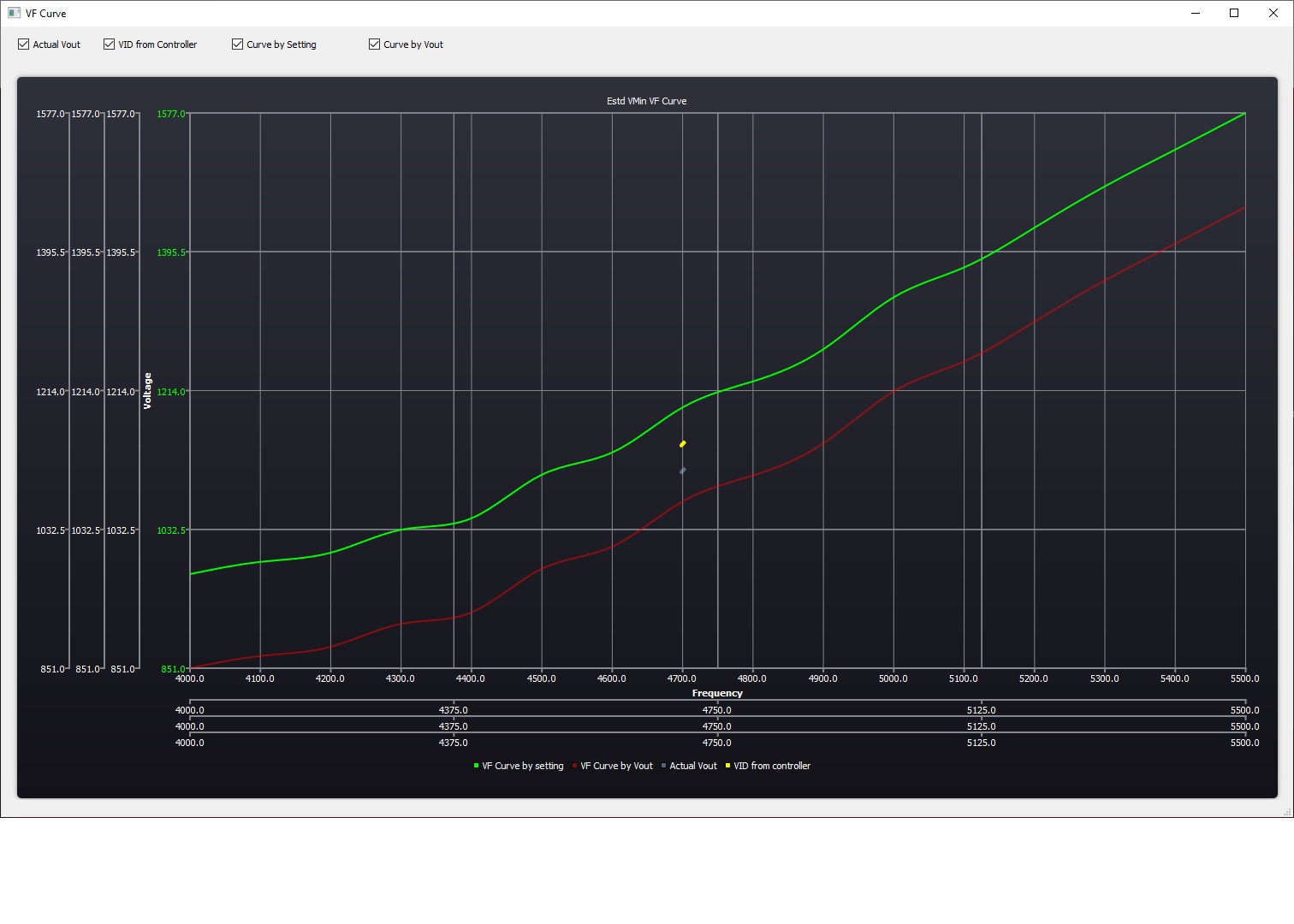

Here is the non-AVX V/F curve for the ES chip, the 94 quality one (this is in the top 10%, high A tier).

The red line is the predicted VR VOUT value at 100C needed for non-AVX Prime95 small FFT. (100C means as if Thermal Velocity Boost Voltage Optimizations are disabled; with this setting enabled, vCPU is reduced by 1.55 mV for every 1C, starting at 100C.)

TVB Voltage optimizations have been here since Kaby Lake. This isn't anything new.

"vCPU" is CPU VID when AC Loadline and DC Loadline are both at 0.01 mOhms.
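As a rough sketch of the TVB arithmetic described above, the snippet below estimates the voltage reduction at a given core temperature. It takes the 1.55 mV per 1C figure from the post and assumes the reduction grows linearly as the chip runs cooler than 100C. The function name and the clamp at 100C are illustrative assumptions, not Intel's or Asus's actual implementation.

```python
def tvb_voltage_reduction_mv(temp_c, slope_mv_per_c=1.55, reference_c=100.0):
    """Estimate the TVB voltage reduction in millivolts at temp_c.

    Assumption: the reduction is zero at reference_c and grows linearly
    as the core gets cooler than that. At or above reference_c it is zero.
    """
    degrees_below = max(0.0, reference_c - temp_c)
    return slope_mv_per_c * degrees_below


# Print the estimated reduction at a few example temperatures.
for temp in (100, 90, 70):
    print(f"{temp}C: about {tvb_voltage_reduction_mv(temp):.1f} mV lower")
```

Under these assumptions a chip sitting at 90C would be asked for roughly 15.5 mV less than the same chip at 100C.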

The blue line is the predicted Bios setting at loadline calibration level 4, that Asus guesses that you need for that VR OUT.

If your CPU can do a lower VR OUT than the red line predicts (if it is at 100C), then there is a guardband of voltage leeway.

In my case, for example, at 5.2 ghz, I can do AVX small FFT disabled Prime95 with a load VOUT of 1.235v, at 90C (200 amps), while the red prediction says I need 100C.

For 5.3 ghz, I have no cooling headroom to test that. But Battlefield 5 and Minecraft (Java, loading to main menu loop test) seems to need about 1.325v load, although AIDA64 Stress FPU needs less. This might be due to violent transients that BF5 and Minecraft loading causes. Notice the prediction says I need about 1.356v.
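To put a number on the guardband idea, here is a minimal sketch that subtracts the observed load voltage from the predicted one. The two voltages are the 5.3 GHz figures quoted above; the helper name is made up for illustration. Treat the result as a ballpark only, since the prediction is for small FFT at 100C while the observed value comes from game loads.

```python
def guardband_mv(predicted_v, measured_v):
    """Return predicted minus measured load voltage, in millivolts.

    A positive result means the chip ran stable below the prediction.
    """
    return round((predicted_v - measured_v) * 1000.0, 1)


# 5.3 GHz: chart predicts about 1.356 V, games needed about 1.325 V under load.
print(guardband_mv(1.356, 1.325))
```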

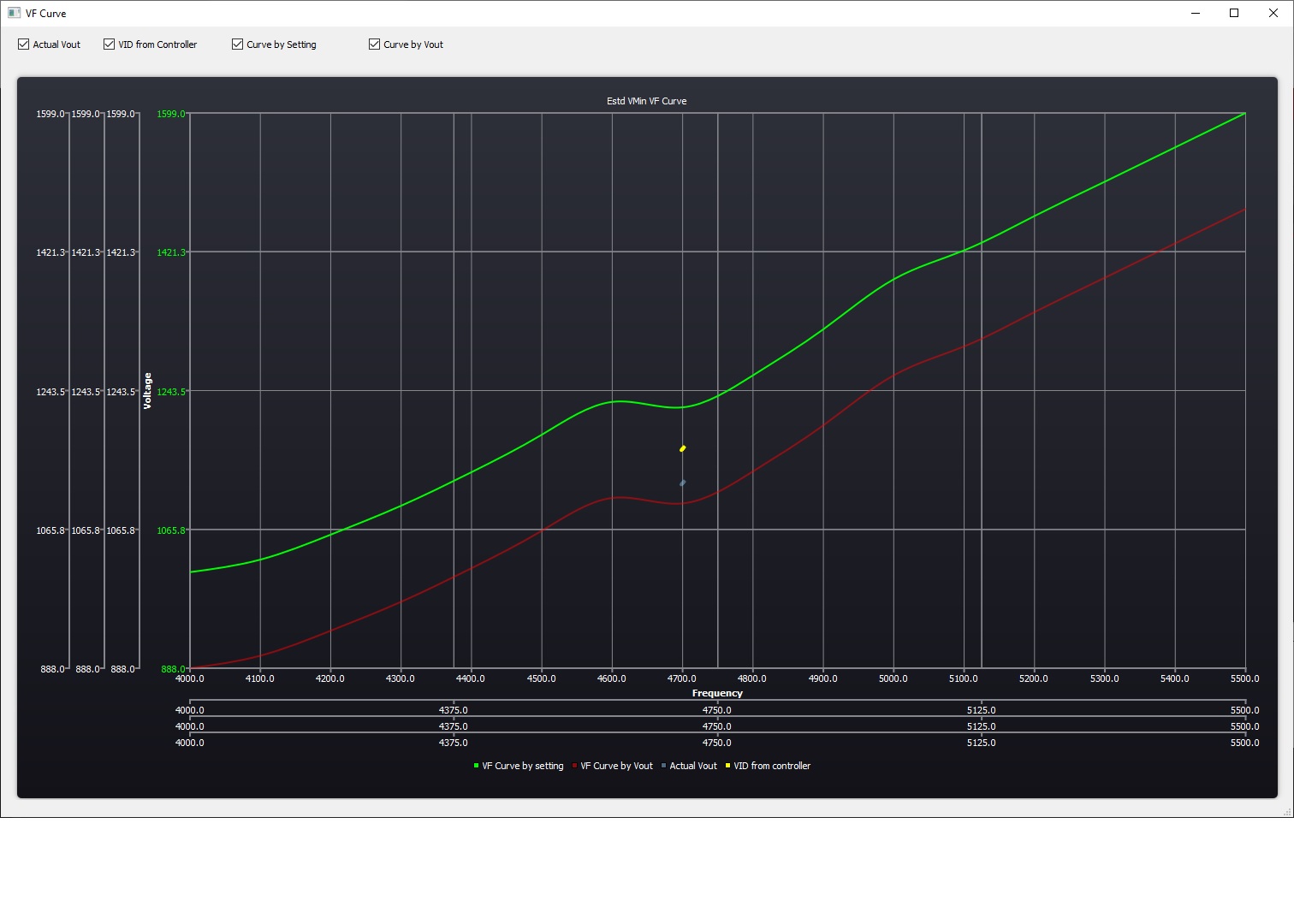

Here is the AVX prediction chart. (Predicted based on AVX small FFT prime95).

Where do you get the tuner software?

I don't know who this processor is for.

Yeah I returned my Ryzen 3950x ..... I mean, the productivity benches were kinda cool I guess, at the end of the day I'm a gamer so I'm not sure who that CPU is really for. I guess kids that stream and like to run render benchmarks over and over again. I also had a shit ton of issues with memory. I had to return 2 sets of 16GB because they just refused to work. Then, I found out you had to buy "Ryzen Approved" DDR4 ... I mean, what the hell is up with that. Also, why couldn't I overclock the CPU to 5 GHz like Intel? AMD is 7nm, Intel is 14nm? That makes NO SENSE to me. And under load, the heat was insane, it was hitting the high 80's. So I returned it. Like I said, not sure who the Ryzen 3950x is for. Lots and lots of cons vs the ONE pro I could find, and that was you get a few more cores. But, aren't most of us gamers?

ok

- 7nm is not as mature a process as 14+nm

- 7nm means that the size of the transistors is smaller, therefore they produce less heat but are more densely packed thus more heat is produced in a smaller area which means higher temperatures under load

- It also means that it is harder to get heat away from the source.

- Temperature and clock speed work against each other: the faster you go, the hotter you get, and you can't go as fast when you are hot.

- 12-16 cores are not necessary for the majority of use cases at this time

- 12-16 threads is optimal for gaming at the high end of the scale, 8 is sufficient, 12 is very good, 16 is .. probably overkill in general.

- 8-10 threads is adequate for the majority of games these days (StarCraft 2 uses up to 4 reasonably well, AC: Origins/Odyssey use 10-12, Doom Eternal uses 14-15, albeit not putting all of them under load most of the time)

- More cores is better for: Video rendering/compression, 3d rendering, compiling software, running benchmarks, deep learning/ai, Microsoft excel

- For the vast majority of users these days 6 cores is enough, heck most can get by happily on 4.

- Idling on the desktop and web browsing can be done happily on 2 cores or one core with 2 threads

- Streaming and gaming can be done with QSV or Nvenc

"The thought of 200 amps is worrying in itself. 200 A at 1.25 V = 250 W so it makes sense, but delivering 200 A across PCB traces is non-trivial."

Delivering it through a plate of copper and 500-600 pins is easy. The fact that those 200 amps are running through a piece of glass not much bigger than the end of my thumb in a controlled, predictable, and useful manner instead of melting is what's awe inspiring to me.
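For anyone who wants to check the 200 amp figure, a quick sketch of the arithmetic follows. Power is current times voltage, so 200 A at 1.25 V works out to 250 W. The function names are just for illustration.

```python
def package_power_w(current_a, voltage_v):
    """Electrical power in watts: P = I * V."""
    return current_a * voltage_v


def current_for_power_a(power_w, voltage_v):
    """Current in amps needed to deliver power_w at voltage_v: I = P / V."""
    return power_w / voltage_v


print(package_power_w(200, 1.25))      # watts at 200 A and 1.25 V
print(current_for_power_a(250, 1.25))  # amps needed for 250 W at 1.25 V
```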

Thank you.

Also, after doing some research, it turns out that one of the major reasons Intel can outperform AMD at a higher nm process is their CPU tech. Apparently, Intel has 1000's and 1000's of patents pertaining to the inside of their CPUs, tech that AMD simply does not have. I've been reading that Intel at 10nm will still outperform AMD at 5nm, let alone their 7nm. Branch prediction, data gates, a lot of way over my head crazy stuff that Intel has. This is what gives them the ability to overclock, etc. Very interesting stuff if you start digging in and reading.

Come to think of it, I think I understand who the 10900K is for, it's for people who are serious about gaming and won't accept anything but the fastest gaming experience you can buy.

No, the key reason that intel is “still in the game” is because most software is compiled with their chips in mind first.

Thank you.

I guess another plus is AMD is affordable to people with a lot less money which would mean ... a lot of people. So I am glad kids with a lot less money can at least have AMD in their corner. Also, after doing some research, it turns out that one of the major reasons Intel can outperform AMD at a higher nm process is their CPU tech. Apparently, Intel has 1000's and 1000's of patents pertaining to the inside of their CPUs, tech that AMD simply does not have. I've been reading that Intel at 10nm will still outperform AMD at 5nm, let alone their 7nm. Branch prediction, data gates, a lot of way over my head crazy stuff that Intel has. This is what gives them the ability to overclock, etc. Very interesting stuff if you start digging in and reading.

I am so happy that AMD woke Intel up. This is going to be an amazing ride in the coming years for all of us.

I picked up an Intel 10900K today at Microcenter and an Asus Z490 ROG Maximus Hero Wifi along with Samsung B-Die 4400MHz DDR4 memory. Second place has never ever really been something I've ever wanted to settle for. I anticipate having one of the world's fastest gaming PCs once I am done.

Come to think of it, I think I understand who the 10900K is for, it's for people who are serious about gaming and won't accept anything but the fastest gaming experience you can buy.

You must be trolling, as there is way too much arrogance in that post.

It's like you are bitter that 'kids with less money' can get within 10% gaming of those with unlimited resources ... using a 2080ti at 1080p.

Then the node stuff. First off, nobody except you was surprised that AMD did not shoot up to 5 GHz with a node shrink. A smaller node does NOT usually mean higher clocks.

Glad you have been doing a lot of reading, but nobody believes that nonsense about 10nm Intel.

Your entire post does not seem genuine. "serious about gaming and will not accept second best":

Bro, tone it down a bit. Even the biggest nerds on here had to cringe some when they read that.

No real person talks like this.

Not sure why you thought the 3950x was for you if your primary goal is gaming. That said, if you found 80C hot and you bought a 10900k and plan to overclock... You either have some substantial cooling (which should have had no problems with a 3950x) or you are likely going to see temps as high or higher. The 3950x is for someone like me who plays games once in a while on a very GPU-limited machine. I mostly do development work (compiling, databases), some transcoding, and just screwing with machine learning and misc other stuff. It can be used by those that do other things besides just game... Some people leave their encodes running while gaming. With 4/8 it's really noticeable, with 16/32 you won't even notice. It's for sure not for everyone and I don't pretend like it is. 3600x and 10600k(f) are the chips to look at for most use cases. 10900k and 3950x are for different niches.

"If you're buying a 10900k for gaming, you're missing the boat and wasting a lot of money. For the vast majority of all-arounders out there, the 3900x/10700k is plenty, with the 10700k for the gaming first, productivity second crowd and the 3900x for the productivity first, gaming second crowd."

Heh, but if you're buying a 10900 for other workloads it's even worse. My point was the use cases for the 3950x and 10900k are different, but do have some overlap (aka, the 3950x can game just a little slower, the 10900k can do threaded workloads well, just a little slower in general). I also said the 3600x/10600k fits the bill for a broader base than the 10900k/3950x. If you need more or have the $, you're not missing anything by going bigger. It's just: know what you're getting and why.

"It's for people like me. Which is why I have two 10900k's."

I don’t know if that’s a bragging point.

No, that really isn't it.

The real reason is that Intel has better inter-process and cache latency. Games don't have completely independent threads. They frequently need to pass information between them, and they get preempted more, causing cache misses. The clock speed boost is just icing on the cake.

I’d like to agree with you, but with the 10x series that isn’t the case

https://www.anandtech.com/show/15785/the-intel-comet-lake-review-skylake-we-go-again/4

I was in the EXACT same boat... I fell into all the "hype" created around that CPU upon launch and thought it would make a great gaming CPU along with a "do anything" PC, and it was great at multitasking, not knocking that at all, but gaming... I'll take a hard pass. I OC'd my 3950 to 4.6 on CCD0 and 4.4 on CCD1 and this 10900k at complete stock (for games) blows it away. Glad I went back to Intel since for me, games are my priority, not having 67 Chrome tabs open.

Has anyone run the 10900k with HT disabled on the two or four best cores and they're at a higher frequency? Then a game has the screaming fast cores (Far Cry 5?) simultaneous with so many threads but maybe with less heat.

I'm not going to get "old technology" 14 nm components ...

Buying based on process technology is among the most irrelevant of reasons there is.

If AMD was selling 7nm Dozer derived parts, how much would 7nm vs 14nm matter to you?
