
Dell Alienware AW3423DW 34″ QD-OLED 175Hz (3440 x 1440)

- Thread starter Blade-Runner

- Start date

ziocomposite

Limp Gawd

- Joined

- Sep 20, 2011

- Messages

- 192

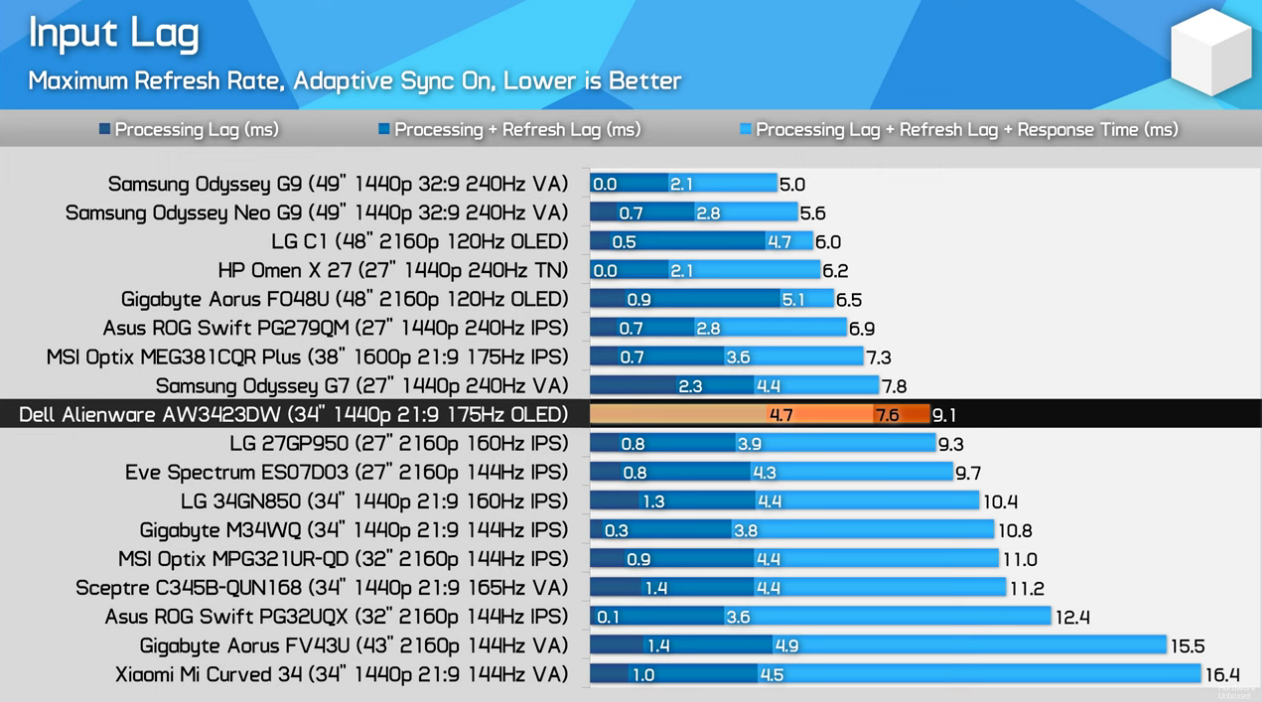

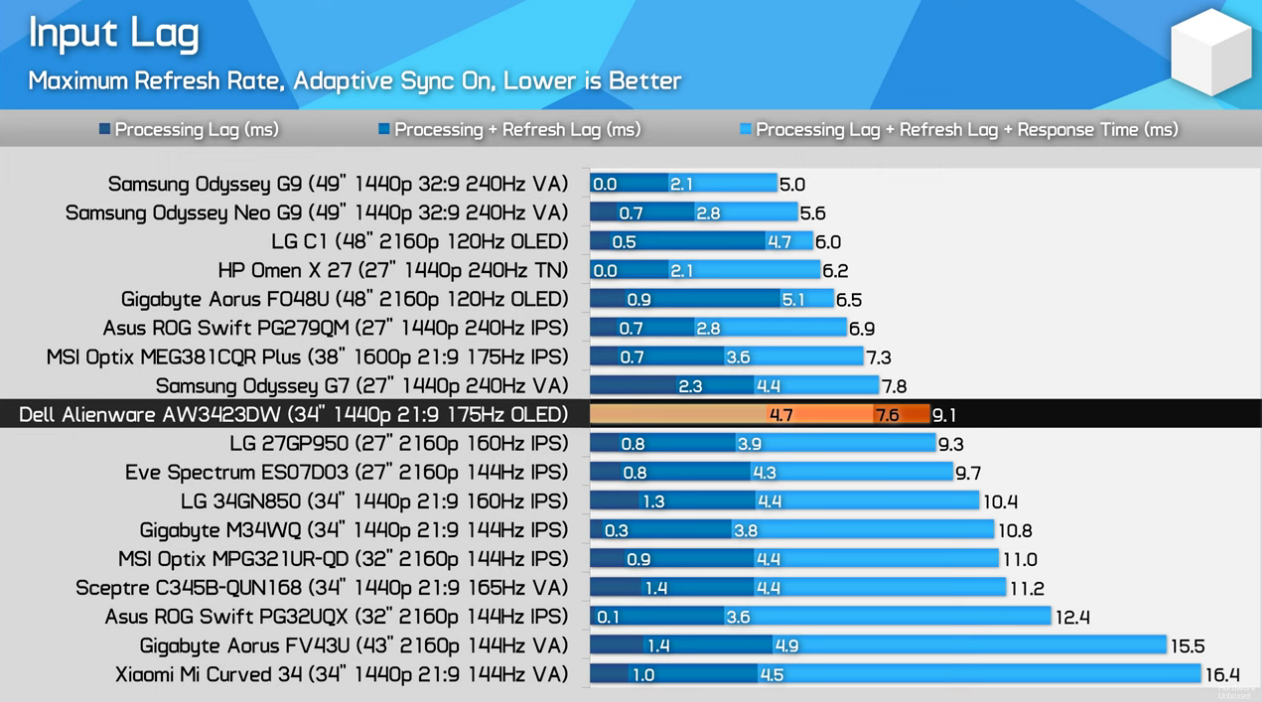

Matches my initial impression of the monitor as well. Works fine for my "competitive" needs though I assume most would be using a 16:9 for said scenarios. Curious if Dell can fine tune the processing delay and how the G8 will handle this. That being said, have basically swapped over gaming/FPS duties on the AW and the added 1ms hasn't been an issue personally.

So far haven't seen any real numbers taken with Native display resolution and Max hz. Waiting on Rtings, HUB, TFT, Prad, etc.

The panel is fast and without the weird ghosting/smearing issues.

Have placed a G7 240hz 1440p and AW side by side and the G7 is a smidge faster in response while AW has better clarity/smoother. This was using "Duplicate Display" and unfortunately can't send a native/active signal resolution of 2560x1440p to the AW.

> Matches my initial impression of the monitor as well. Works fine for my "competitive" needs though I assume most would be using a 16:9 for said scenarios. Curious if Dell can fine tune the processing delay and how the G8 will handle this. That being said, have basically swapped over gaming/FPS duties on the AW and the added 1ms hasn't been an issue personally.
>
> View attachment 461937

Another reviewer that didn't test properly! Where are the results with no gsync or adaptive sync? Where are the input lag tests with Gsync (Hardware) instead of Adaptive sync? These are big oversights IMHO. A LOT of us never use that nonsense because it increases input lag. Back to waiting for TFTcentral and Rtings.


ziocomposite

Limp Gawd

- Joined

- Sep 20, 2011

- Messages

- 192

> Another reviewer that didn't test properly! Where are the results with no gsync or adaptive sync? A LOT of us never use that nonsense because it increases input lag. Back to waiting for TFTcentral and Rtings.

The test is valid and "proper". There are a lot of us who use Gsync/Adaptive Sync.

That being said, hopefully you get your non-gsync results as well since that is also valid.

> It doesn't offer that high a bitrate. It caps out at 80Gbps raw (77.4Gbps after overhead) so a little less than twice HDMI 2.1 (48/42Gbps). It also should be noted that is the max rate, there are actually 3 new bitrates for 2.0: 40Gbps, 54Gbps, and 80Gbps so just because something supports 2.0 doesn't mean it'll support the highest data rate.
>
> Anything that would require data rate beyond 77Gbps of effective throughput, is going to either use DSC, chroma subsampling, or both.

I'm aware. The 16K spec is with DSC, but we don't know exactly how efficient DSC is, so I calculated how much bandwidth that spec would require without and then matched it with my hypothetical 4K spec assuming an equal level of compression.
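As a rough sanity check on the bandwidth figures in this exchange, here is a minimal Python sketch that estimates the uncompressed data rate of a display mode and how much it would have to be compressed to fit a link. It assumes RGB/4:4:4 pixels and ignores blanking intervals, so real requirements are somewhat higher. The function names and the 16K 60 Hz 10-bit example mode are illustrative choices, and the 77.4 and 42 Gbps effective rates are simply the ones quoted above.

```python
# Effective link rates in Gbps, taken from the figures quoted in the thread.
DP20_MAX_EFFECTIVE_GBPS = 77.4   # DisplayPort 2.0 at its highest (80 Gbps raw) rate
HDMI21_EFFECTIVE_GBPS = 42.0     # HDMI 2.1 (48 Gbps raw)


def uncompressed_gbps(width, height, refresh_hz, bits_per_channel, channels=3):
    """Active-pixel data rate in Gbps; blanking overhead is ignored (assumption)."""
    bits_per_second = width * height * refresh_hz * bits_per_channel * channels
    return bits_per_second / 1e9


def required_compression(required_gbps, link_gbps):
    """Ratio by which the stream must shrink to fit the link (<= 1.0 means it fits)."""
    return required_gbps / link_gbps


if __name__ == "__main__":
    modes = {
        "3440x1440 @ 175 Hz, 10-bit": (3440, 1440, 175, 10),
        "15360x8640 @ 60 Hz, 10-bit": (15360, 8640, 60, 10),  # illustrative 16K mode
    }
    for name, mode in modes.items():
        rate = uncompressed_gbps(*mode)
        ratio = required_compression(rate, DP20_MAX_EFFECTIVE_GBPS)
        print(f"{name}: {rate:.1f} Gbps uncompressed, {ratio:.2f}x of DP 2.0 max")
```

Any mode whose ratio comes out above 1.0 would need DSC, chroma subsampling, or both on that link, which is the point made in the quoted post.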

> Then Google just another site with specs about DP 2.0, it’s not that difficult mate.

It's almost like I'm asking here because I already did that and didn't find anything. The resolutions you find in every article or thread about DP 2.0, like what you linked, are just quoted from the official display modes, nothing interesting like what I asked.

> Another reviewer that didn't test properly! Where are the results with no gsync or adaptive sync? Where are the input lag tests with Gsync (Hardware) instead of Adaptive sync? These are big oversights IMHO. A LOT of us never use that nonsense because it increases input lag. Back to waiting for TFTcentral and Rtings.

G-Sync doesn't add appreciable input lag unless you're playing a game where you can get several hundred FPS more than your G-Sync limit, and even then it's like 4-5 ms (142 fps cap gsync vs 1000+ fps no-sync).
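For scale, the frame-time arithmetic behind the 142 fps versus 1000+ fps comparison can be sketched as follows. This only converts frame rates to per-frame intervals. It is not a model of total input lag, which also depends on the display's processing, the game engine, and the input chain. The function name is hypothetical.

```python
def frame_time_ms(fps):
    """Time between consecutive frames, in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    capped = frame_time_ms(142)     # G-Sync with a 142 fps cap
    uncapped = frame_time_ms(1000)  # no sync at 1000 fps
    print(f"142 fps:  {capped:.2f} ms per frame")
    print(f"1000 fps: {uncapped:.2f} ms per frame")
    print(f"difference: {capped - uncapped:.2f} ms")
```

The gap in frame interval works out to about 6 ms, the same order of magnitude as the 4-5 ms figure mentioned above.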


> The test is valid and "proper". There are a lot of us who use Gsync/Adaptive Sync.
>
> That being said, hopefully you get your non-gsync results as well since that is also valid.

But he never tested Gsync, only adaptive sync and we know for a fact that input lag consistently measures lower on Gsync displays. Personally, I won't be using either gsync, freesync or HDR because all I play is Warzone.

> But he never tested Gsync, only adaptive sync and we know for a fact that input lag consistently measures lower on Gsync displays. Personally, I won't be using either gsync, freesync or HDR because all I play is Warzone.

I feel bad for anyone who wastes this display solely for Warzone.

> G-Sync doesn't add appreciable input lag unless you're playing a game where you can get several hundred FPS more than your G-Sync limit, and even then it's like 4-5 ms (142 fps cap gsync vs 1000+ fps no-sync).

That varies from display to display but if your argument is that this monitor with a hardware gsync module and gsync enabled has comparable input lag to this monitor without any form of adaptive sync enabled we have ZERO data. He didn't test gsync because Tim used an AMD graphics card. He didn't test with no adaptive sync because Techspot and Tim think all gamers give a shit about HDR. Half of us don't care AT ALL.

ziocomposite

Limp Gawd

- Joined

- Sep 20, 2011

- Messages

- 192

You are absolutely right, Tim did it on purpose to spite you

> I feel bad for anyone who wastes this display solely for Warzone.

Sometimes I play Apex Legends and Rocket League.... if I'm not sweating I'm not having fun.

> That varies from display to display but if your argument is that this monitor with a hardware gsync module and gsync enabled has comparable input lag to this monitor without any form of adaptive sync enabled we have ZERO data. He didn't test gsync because Tim used an AMD graphics card. He didn't test with no adaptive sync because Techspot and Tim think all gamers give a shit about HDR. Half of us don't care AT ALL.

I bet more gamers would care about HDR than single digit millisecond differences in input lag, especially after experiencing in person what good HDR can add to the experience of playing a game. Double especially on a console port like Warzone where the TTK is like a minute and a half

The vast majority of PC gamers don't play competitively

> I bet more gamers would care about HDR than single digit millisecond differences in input lag, especially after experiencing in person what good HDR can add to the experience of playing a game. Double especially on a console port like Warzone where the TTK is like a minute and a half
>
> The vast majority of PC gamers don't play competitively

The ttk in Warzone is 700ms, the ttk in Apex is a minute and a half. Battle Royale games still account for over 700 million gamers worldwide. Just because you don’t enjoy them doesn’t mean millions don’t play them.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> Battle Royale games still account for over 700 million gamers worldwide.

Accounts* not players

> The ttk in Warzone is 700ms, the ttk in Apex is a minute and a half. Battle Royale games still account for over 700 million gamers worldwide. Just because you don’t enjoy them doesn’t mean millions don’t play them.

I dunno if you realize this but the majority of people here are in favor of HDR and consider it a transformative experience. The idea of catering a display to a single demographic of users that you happen to be in rather than making it great for all purposes is baffling to me.

It's great that you're an esports whore and can take advantage of the motion clarity benefits this monitor provides but we can also have great contrast, HDR, etc. Your posts make it sound like everyone on the planet spends 16 hours a day shitting/pissing in their computer chair on Warzone.

> The ttk in Warzone is 700ms, the ttk in Apex is a minute and a half. Battle Royale games still account for over 700 million gamers worldwide. Just because you don’t enjoy them doesn’t mean millions don’t play them.

What percent of those 700 million people are playing on a cell phone (which probably has better HDR support than most PCs)? 70?

I said most PC gamers don't play competitively. BRs being popular kind of supports that claim, actually. A lot of the sweatier gamers avoid BRs because of the RNG factor which allows more casual people to beat better players due to luck. They are not purely skill based like other more competitive games.

MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,519

> I dunno if you realize this but the majority of people here are in favor of HDR and consider it a transformative experience. The idea of catering a display to a single demographic of users that you happen to be in rather than making it great for all purposes is baffling to me.
>
> It's great that you're an esports whore and can take advantage of the motion clarity benefits this monitor provides but we can also have great contrast, HDR, etc. Your posts make it sound like everyone on the planet spends 16 hours a day shitting/pissing in their computer chair on Warzone.

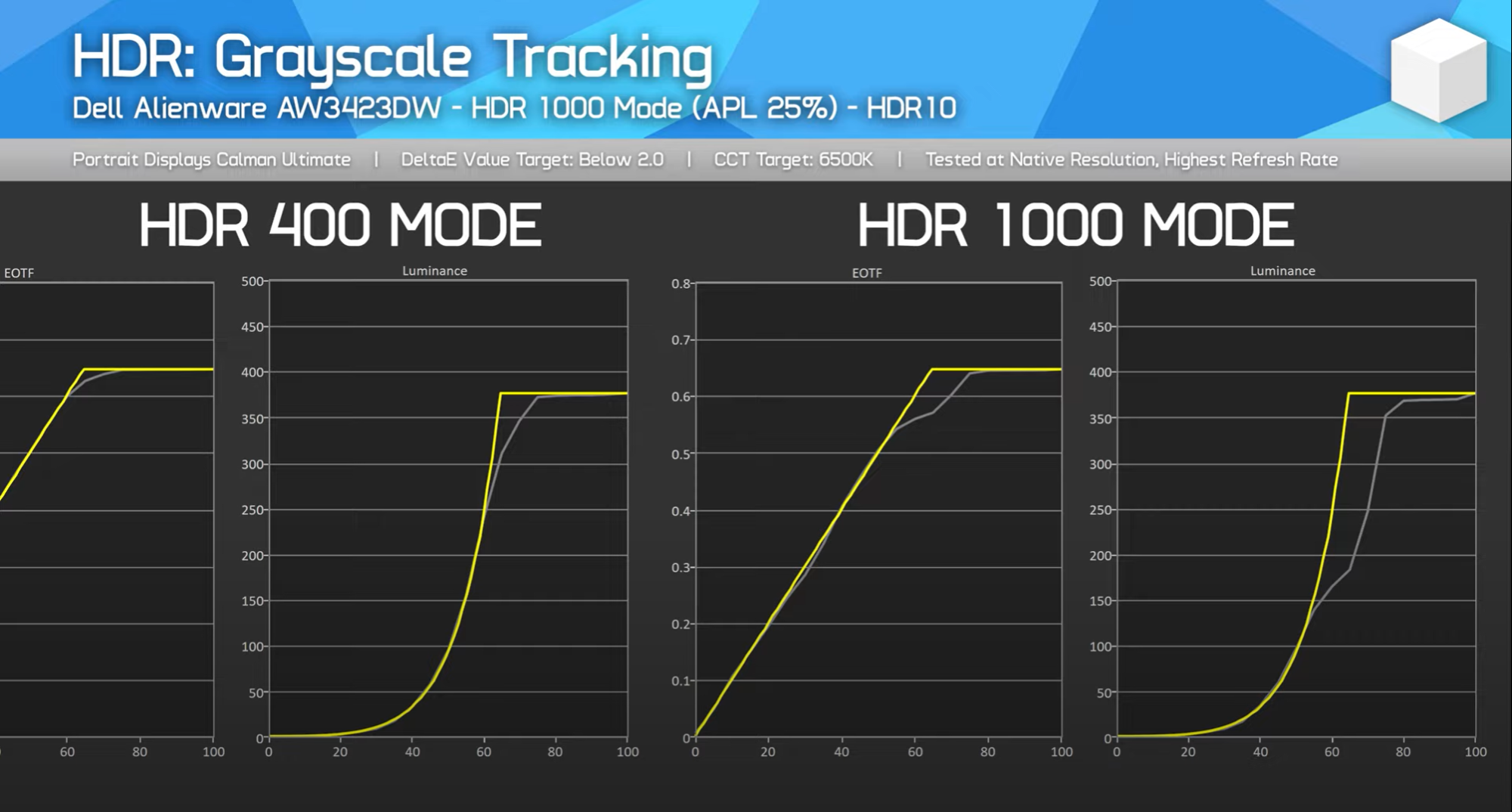

Except the HDR on this monitor seems to have a few issues though.

I'm sure it's still miles better than any LCD, but it seems like it could use some work.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

Game-bugs and server issues are more likely to fail you, than 4-5ms of display input lag. Especially in Apex and Warzone

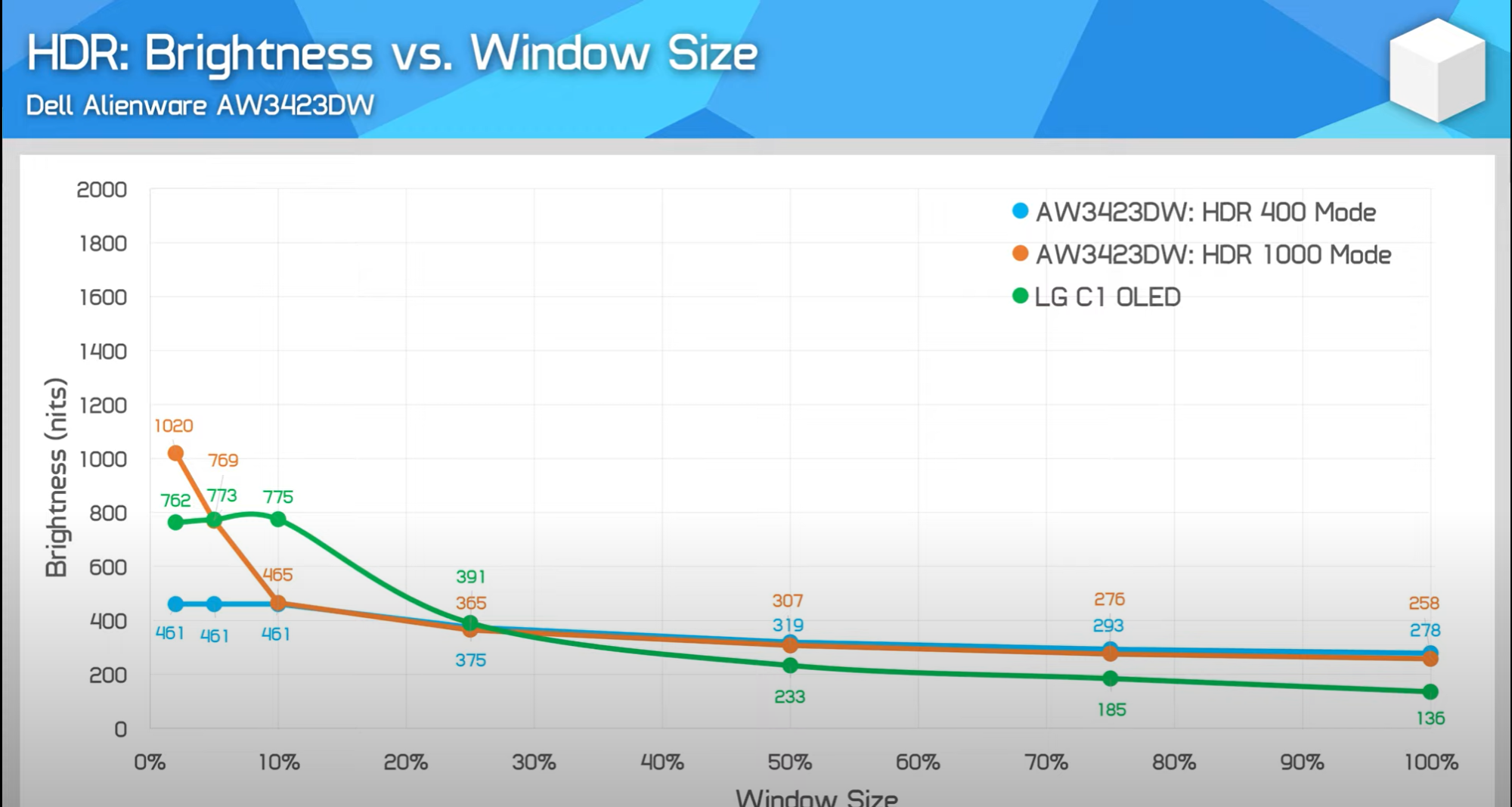

> Except the HDR on this monitor seems to have a few issues though.
>
> I'm sure it's still miles better than any LCD, but it seems like it could use some work.

Yeah I noted way back that HDR looks more impressive on a C1. The fact that he recommends HDR1000 mode confirms his eyes don't work or he didn't test enough because the ABL makes it unusable. Didn't know about the EOTF issue but that also explains why 1000 mode looks poor.

Real world use this is a 450nit peak brightness monitor.

> I dunno if you realize this but the majority of people here are in favor of HDR and consider it a transformative experience. The idea of catering a display to a single demographic of users that you happen to be in rather than making it great for all purposes is baffling to me.
>
> It's great that you're an esports whore and can take advantage of the motion clarity benefits this monitor provides but we can also have great contrast, HDR, etc. Your posts make it sound like everyone on the planet spends 16 hours a day shitting/pissing in their computer chair on Warzone.

I'm not asking for this display to cater to me, all I'm saying is that testing input lag with Adaptive sync instead of Gsync or neither makes zero sense on a $1400 monitor with a built-in hardware Gsync module.

TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

> Yeah I noted way back that HDR looks more impressive on a C1. The fact that he recommends HDR1000 mode confirms his eyes don't work or he didn't test enough because the ABL makes it unusable. Didn't know about the EOTF issue but that also explains why 1000 mode looks poor.
>
> Real world use this is a 450nit peak brightness monitor.

Which isn't a big deal. It's certified as HDR400 True black. So it being able to actually punch above that at all is kind of a nice trend.

stonewalker

Gawd

- Joined

- May 26, 2007

- Messages

- 765

I'm curious to see the input lag results with no gsync or adaptive sync.

Stryker7314

Gawd

- Joined

- Apr 22, 2011

- Messages

- 867

> Matches my initial impression of the monitor as well. Works fine for my "competitive" needs though I assume most would be using a 16:9 for said scenarios. Curious if Dell can fine tune the processing delay and how the G8 will handle this. That being said, have basically swapped over gaming/FPS duties on the AW and the added 1ms hasn't been an issue personally.
>
> View attachment 461937

Yuck.

undertaker2k8

[H]ard|Gawd

- Joined

- Jul 25, 2012

- Messages

- 1,990

Enhanced Interrogator

[H]ard|Gawd

- Joined

- Mar 23, 2013

- Messages

- 1,429

I wonder if the processing lag is a result of the pixel shifting.

Curious to see how Samsung's numbers come out when they release their monitor with this panel.


robbiekhan

Gawd

- Joined

- Apr 13, 2004

- Messages

- 764

Anyone got any experience with the LG 38WN95C-W?

If I start to get too annoyed by the fan noise on this Alienware then I may consider switching to the LG. It appears to have an internal LUT, so the direct-to-monitor colour calibration I am used to from my old LG is retained, as I don't really like calibrating via an icc profile and GFX card's lut combo. Plus, 38" 144Hz so would be rather good on that front.

Just throwing around an idea should the fan noise start to get on my nerves, since my PC is almost as silent in use as it is when off so I can hear every whisper from the monitor's two fans.


undertaker2k8

[H]ard|Gawd

- Joined

- Jul 25, 2012

- Messages

- 1,990

> Anyone got any experience with the LG 38WN95C-W?
>
> If I start to get too annoyed by the fan noise on this Alienware then I may consider switching to the LG. It appears to have an internal LUT, so the direct-to-monitor colour calibration I am used to from my old LG is retained, as I don't really like calibrating via an icc profile and GFX card's lut combo. Plus, 38" 144Hz so would be rather good on that front.
>
> Just throwing around an idea should the fan noise start to get on my nerves, since my PC is almost as silent in use as it is when off so I can hear every whisper from the monitor's two fans.

Edge-lit IPS and HDR will never work well, period.

robbiekhan

Gawd

- Joined

- Apr 13, 2004

- Messages

- 764

Hmm figured that might be the case. Ultimately I will likely just have to accept the fan noise and hope a future 38" model will come with FreeSync Premium instead of Gsync so there will be no fan. There's no denying that OLED is unbeatable really.

sharknice

2[H]4U

- Joined

- Nov 12, 2012

- Messages

- 3,758

> Hmm figured that might be the case. Ultimately I will likely just have to accept the fan noise and hope a future 38" model will come with FreeSync Premium instead of Gsync so there will be no fan. There's no denying that OLED is unbeatable really.

This is hardforum. Liquid cool your monitor.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,671

> This is hardforum. Liquid cool your monitor.

Yeah, seriously! CNC a block and run tubing from your PC to the monitor.

My goodness, people have gotten soft!

robbiekhan

Gawd

- Joined

- Apr 13, 2004

- Messages

- 764

Sounds ridiculous.

You son of a bitch I'm in!


> Problem is hitting those kind of frame rates though. I have a 3080 Ti and 6900 XT and neither GPU is really capable of pushing 3440x1440 at 175fps in the latest games, at least not at ultra details or even high. But if next gen GPU's are as powerful as they are rumored to be then that will all change.

Hey! New here, but I agree that it's really hard to hit 175 FPS at 1440p ultrawide with high settings. I ordered this monitor about 3 weeks ago and won't receive it until June 9th. I'm holding off on playing games that I already own like CP2077 and Red Dead until I replace my (apparently now lowly) 1080Ti with a new GPU. Prices are falling back to Earth, but I'll wait for the RTX 4000 series since they are less than six months away. Maybe the RTX 4080 (or whatever they label it) will get me to 175Hz with eye candy. We'll see...

> The Alienware (top) is clearly flickering. I thought it was supposed to be flicker free?
>
> https://www.certipedia.com/quality_marks/1111246519?locale=en
>
> This is the third or so clip of it I've seen where flicker is visible.

Really? You can see this from 10 feet away on a blurry video of a video?

ssj3rd

Limp Gawd

- Joined

- Aug 30, 2021

- Messages

- 184

> Been looking around for deals or coupons - anybody here know of one?

They have all been deactivated for this monitor…

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,554

Yeah, pretty lame. I'll be waiting on this because of that. Typical corporate/government discounts of 10% aren't working on this.

ssj3rd

Limp Gawd

- Joined

- Aug 30, 2021

- Messages

- 184

I guess you can wait a very long time for it to happen…

Mchart

Supreme [H]ardness

- Joined

- Aug 7, 2004

- Messages

- 6,554

> I guess you can wait a very long time for it to happen…

Already have an Acer X27, I want to move to this panel, but I can wait.

> Yeah, pretty lame. I'll be waiting on this because of that. Typical corporate/government discounts of 10% aren't working on this.

I've honestly been waiting for them to just flat out cancel my 5-6 week old order one of these days because I used a bunch of the working coupons on day 1.

I also don't have the "advanced exchange warranty" on the invoice, since it was bugged for the majority of the first day of orders as well, which is a little worrisome.

Anyone know if there are any discounts for this? I asked Dell as I am part of the Membership Discount Program but they are telling me this particular monitor will not be eligible for the program (even though all other monitors pretty much are). I am thinking it will someday be part of the program but it might be a long time.

Zepher

[H]ipster Replacement

- Joined

- Sep 29, 2001

- Messages

- 20,939

Someone was asking about ambient lighting making the screen grey on another forum so I took these two photos earlier.

I have my really bright LED shop light on behind me and screen still looks fine,

