Shintai

Supreme [H]ardness

- Joined: Jul 1, 2016

- Messages: 5,678

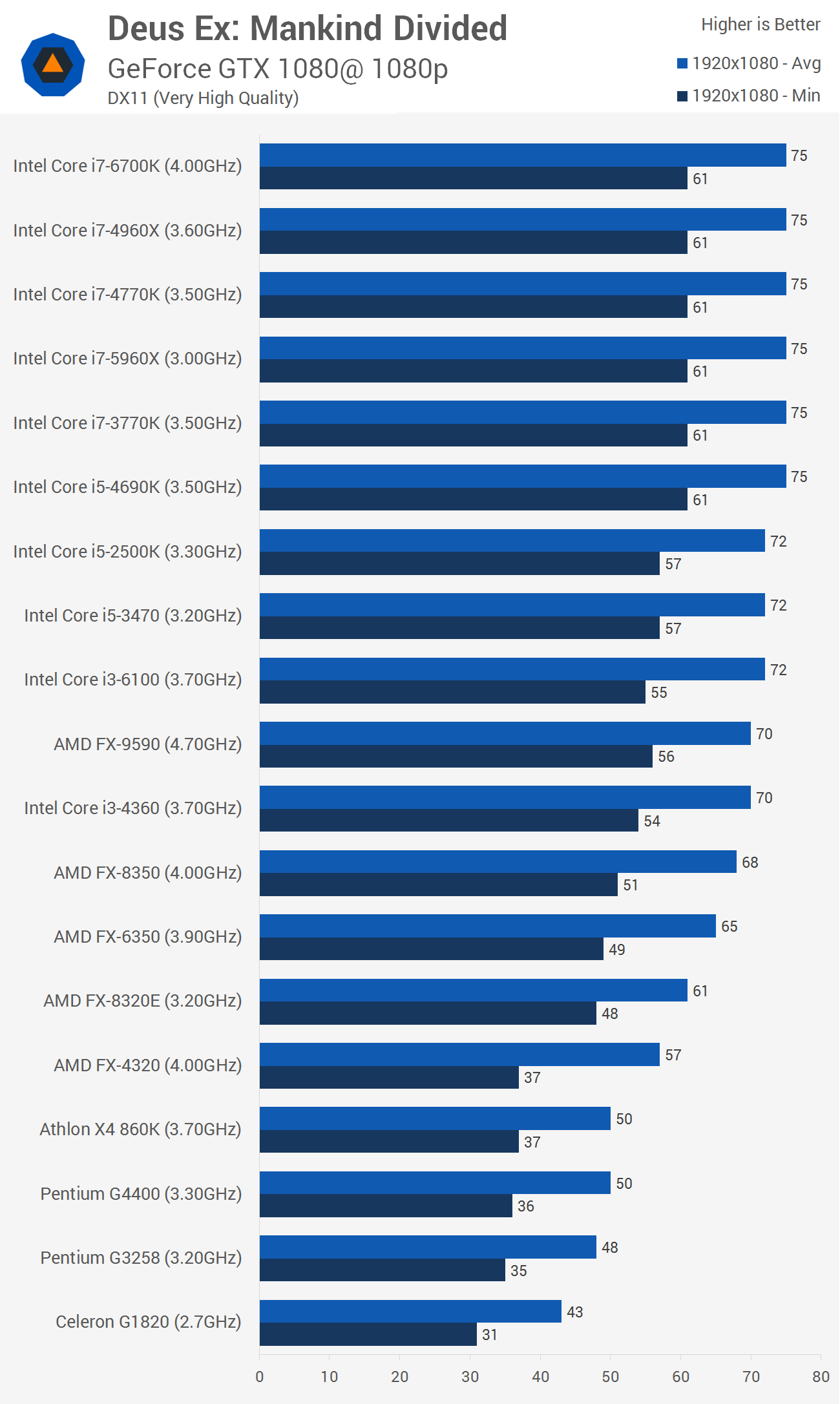

Case in point:

The GTX 1080 can barely max the game at 1080p, but the FX-4230? No issues whatsoever. CPU performance certainly helps, but games have been GPU limited for ages now. Upgrading CPUs for gaming purposes is a thing of the past; they're fast enough to get all the necessary work done, and performance is almost entirely dominated by the GPU's ability to pump out frames.

Be careful: that may be a pre-scripted benchmark. Those show artificially low CPU usage compared with actual gameplay.
