NVIDIA GPU Generational Performance Part 1

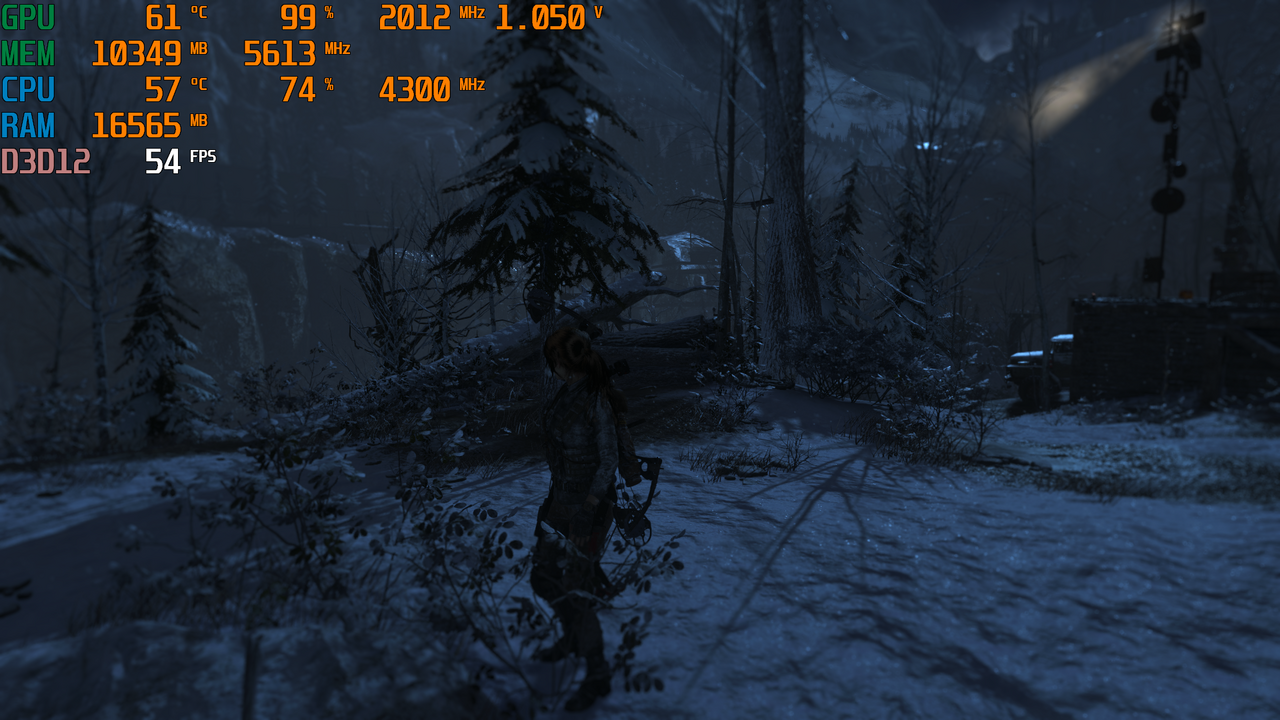

Ever wonder how much performance you are really getting from each GPU upgrade in games? What if we took GPUs from NVIDIA and AMD and compared the performance gained from 2013 to 2018? We are starting that process today in Part 1, focusing on the GeForce GTX 780, GeForce GTX 980, and GeForce GTX 1080 across 14 games.

If you like our content, please support HardOCP on Patreon.
