Have you tried 1.45V? You can safely go up to 1.5V with DDR4, but personally I like to keep it no higher than 1.45V.

Not yet, but that's the next step.

Well, I had no luck with 3466 tonight. I tried magictoaster's timings and noko's tip, but unfortunately neither made any difference.

I've also figured out the ratio by which the tRFC values are determined. Possibly useful for Titanium folks, since on Auto the board uses the same value for all entries.

tRFC2 = tRFC / 1.34

tRFC4 = tRFC2 / 1.625

Might be useful to those of us whose boards just plop tRFC's value into all of them. (Granted, it doesn't seem to be hurting performance, and it seems to require less voltage on the IMC, but... there it is, at least.)
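The two ratios above can be applied mechanically. Here's a minimal Python sketch, assuming the ratios hold exactly and that each result is rounded to the nearest whole clock (a guess; the post doesn't say how the board rounds). The function name `derive_trfc_values` and the starting value of 560 are illustrative only.

```python
def derive_trfc_values(trfc: int) -> tuple:
    """Return (tRFC, tRFC2, tRFC4) using the ratios reported in this thread.

    Assumption: tRFC2 = tRFC / 1.34 and tRFC4 = tRFC2 / 1.625, each rounded
    to the nearest whole clock. Boards may round differently.
    """
    trfc2 = round(trfc / 1.34)
    trfc4 = round(trfc2 / 1.625)
    return trfc, trfc2, trfc4


if __name__ == "__main__":
    # 560 is only an example starting value, not a recommendation.
    trfc, trfc2, trfc4 = derive_trfc_values(560)
    print(f"tRFC={trfc} tRFC2={trfc2} tRFC4={trfc4}")  # tRFC=560 tRFC2=418 tRFC4=257
```

Whatever the exact rounding, the derived values still have to be tested for stability like any other manual timing.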

Yeah, I've found DRAM voltage actually helps a good bit (to a point), but SoC is only marginally helpful, if at all (though I only have offset and no LLC setting on my board for now). SoC is mostly useful if you change BCLK, though I'm not sure which specific areas it helps stabilize.

Over at overclock.net someone suggested the following adjustments to get a stable system @3466:

- SOC: 1.5

- PROC_ODT: 60

- DRAM: 1.4 (same for boot)

Using those settings I'm able to get everything stable at 3466 @ 14-14-14-14-34 (T1). Might be worth a try. (Actually, looks like I can keep SOC and PROC_ODT on Auto and only set DRAM to 1.4 and get everything stable).

On a side note: I'm not in any way a pro at this. I'm mostly just looking at what is working for other users with similar configurations and trying things, so YMMV.

SoC 1.5V is way too high; ASUS recommends no more than 1.2V, and AMD recommends 1.1V.

Seeing as you have the same board and basically the same RAM as I do... I'm surprised you need that much voltage, or CR2. If your kit is a 14-14-14 kit, then yours should technically be binned better than mine as well, as mine are 15-15-15.

One of the more important settings that few people mention: Bank Group Swap should be disabled if you have only 2 DIMMs and they are single rank. It helped me reach 3333MHz on DDR4-3200 memory; before, I could only reach 3200MHz. My timings at 3333MHz are 14-14-14-14-35 CR2. My SoC is at 1.075V, DRAM voltage at 1.39V.

Indeed! I meant 1.15, not 1.5, sorry!

lol, yeah, I figured it might have been a mistype; didn't want anyone to plug that in and watch their board go pufff.

I thought AGESA 1.0.0.6 was promising up to 4000MHz on the memory. All these memory issues are why I've waited this long for Skylake-X.

I doubt anyone was promising anything.

Just found the "training voltage" setting. It's CLDO_VDDP on the Gigabyte Gaming K5.

I could run 3200+ with my 2800 Hynix M-die chips if I waited around 30 minutes for memory training (voltage set to 1.4V manually), and it was stable in Memtest86, but my board has no LLC adjustment and I don't see a startup voltage setting, so (unless I want to wait that long every time and forget about suspend/sleep) it's not a realistic setting. :/

The highest I've seen tried successfully so far was 3800; I don't think I've seen any posts from people with DDR4-4000 actually trying it.

Tried the new AGESA 1.0.0.6 for the Prime X370. Unfortunately it seems 3333 C14 1T is the best I can do with my 3600 RAM and that required pumping 1.4V into the RAM and 1.1V into the SOC. I haven't had time to run a proper multi-hour memtest yet so I don't even know if that's going to be 100% stable.

3466 fails memtest after 2 - 5 seconds, and 3600 won't boot (Windows starts complaining about corrupted files etc.). Went as high as 1.15V on the SOC and tried CAS16 and 18, but it still wouldn't work.

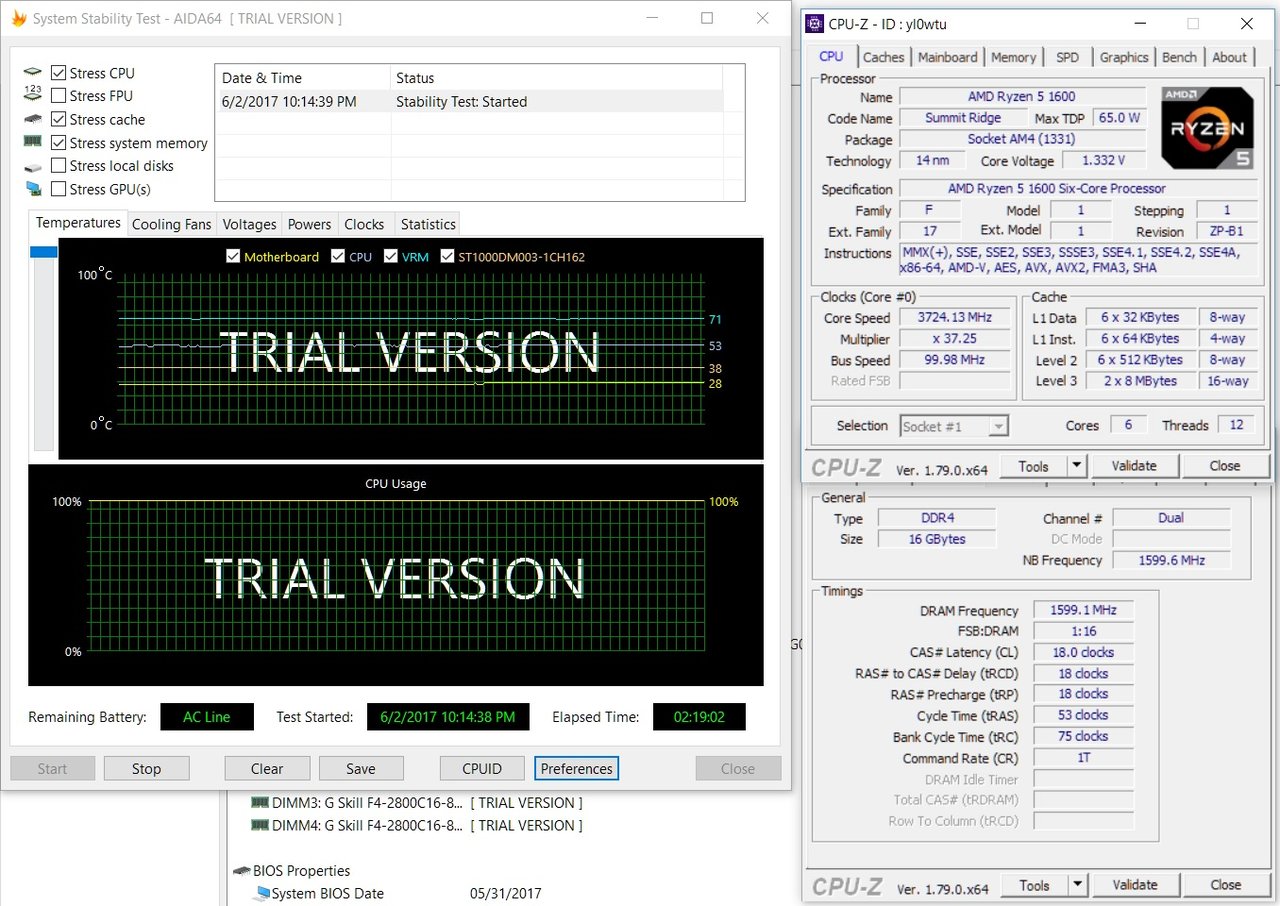

Well, I'm back to 3200 C14. It's really odd how it behaves at anything above this speed.

For example, at 3333 C14, it can run Memtest86+ for at least a couple of hours (until I got bored and canceled it), but it would fail AIDA64 "Stress system memory" after anything from 8 minutes to 1 hour.

So I reduced it to 3333 C16 and this seemed stable. Ran AIDA64 for 2 hours yesterday. But today, it failed after 8 minutes. It never actually crashes, it just displays "Hardware error detected" or something.

So why is 3333 C14 Memtest86+ stable, but not even 3333 C16 works reliably in AIDA64? Either AIDA64 is just way more demanding on the memory than Memtest86+, or there's some bug or glitch with the new dividers that causes it to think there's a hardware failure when there isn't. I'd guess it's simply more demanding and uses a more stressful algorithm.

It's also weird that 3200 C14 only requires 1.0V SoC and 1.35V RAM, whereas 3333 C16 apparently isn't stable even with 1.15V SoC and 1.4V RAM. Why such a huge difference for 133 MHz and looser timings?

I'd suggest anyone who wants to make sure they have stable memory run AIDA64 memory test for over 2 hours, maybe as long as 12 - 24 hours... Memtest86+ doesn't seem to be enough.

A little more RAM voltage did the trick, now running 2933, so pretty pleased with that!

Great!

Memtest86 shifts the test pattern for each pass, so the more passes you run the better. AIDA64 may be stressing (other parts of) the SoC in addition to the memory controller, so if it's unstable that could cause a fail, too. You might try bumping the SoC a few millivolts and see if it still fails.

Yeah, well, I already went to 1.15V, which is 0.05V over the max recommended for 24/7 use. I could try 2T command rate, I guess, but that would just increase the latency even more compared to 3200 C14 1T. Anyway, I'm guessing 3200 C14 is more or less just as fast as 3333 C16 because of the lower latency.

Just a bit surprised that 3200 C14 is so effortless while another 133 MHz makes the system totally crap itself no matter how much voltage I apply.
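The latency argument can be checked with a bit of arithmetic. DDR transfers twice per clock, so at a data rate of R MT/s the memory clock is R/2 MHz and one clock lasts 2000/R ns; multiplying by the CAS latency gives the first-word latency. This is only a rough sketch: it ignores all the other timings and, on first-gen Ryzen, the fact that the Infinity Fabric clock also rises with memory speed, so it can't settle which setting is faster overall. The function name `cas_latency_ns` is hypothetical.

```python
def cas_latency_ns(data_rate_mts: float, cas_cycles: int) -> float:
    """First-word CAS latency in nanoseconds.

    DDR transfers twice per clock, so the memory clock in MHz is half the
    data rate, and one clock lasts 1000 / (data_rate / 2) = 2000 / data_rate ns.
    """
    return cas_cycles * 2000.0 / data_rate_mts


if __name__ == "__main__":
    for rate, cl in [(3200, 14), (3333, 14), (3333, 16)]:
        print(f"DDR4-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
    # DDR4-3200 CL14: 8.75 ns
    # DDR4-3333 CL14: 8.40 ns
    # DDR4-3333 CL16: 9.60 ns
```

By this measure 3200 C14 does come in under 3333 C16, while 3333 C14 would edge ahead of both, which fits the guess above as far as CAS latency alone goes.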

I'm not 100% sure that's correct. My Titanium has (hidden, but I changed that heh) a setting called DRAM Voltage(Training), as well as CLDO_VDDR. The "(Training)" voltage, at the higher RAM divisors, applies 1.4V or 1.5V, which is way more than what CLDO uses.

Ah, that'd make more sense. So, then, if I had access to the training setting you have, I could probably boot more reliably. I have got it to the point that it boots every second or third attempt now, and it's rock solid at 3200c18-18-18. Sleep functions as expected (it doesn't choke like during boot).

According to google searching, CLDO_VDDR is the "DDR PHY" voltage.

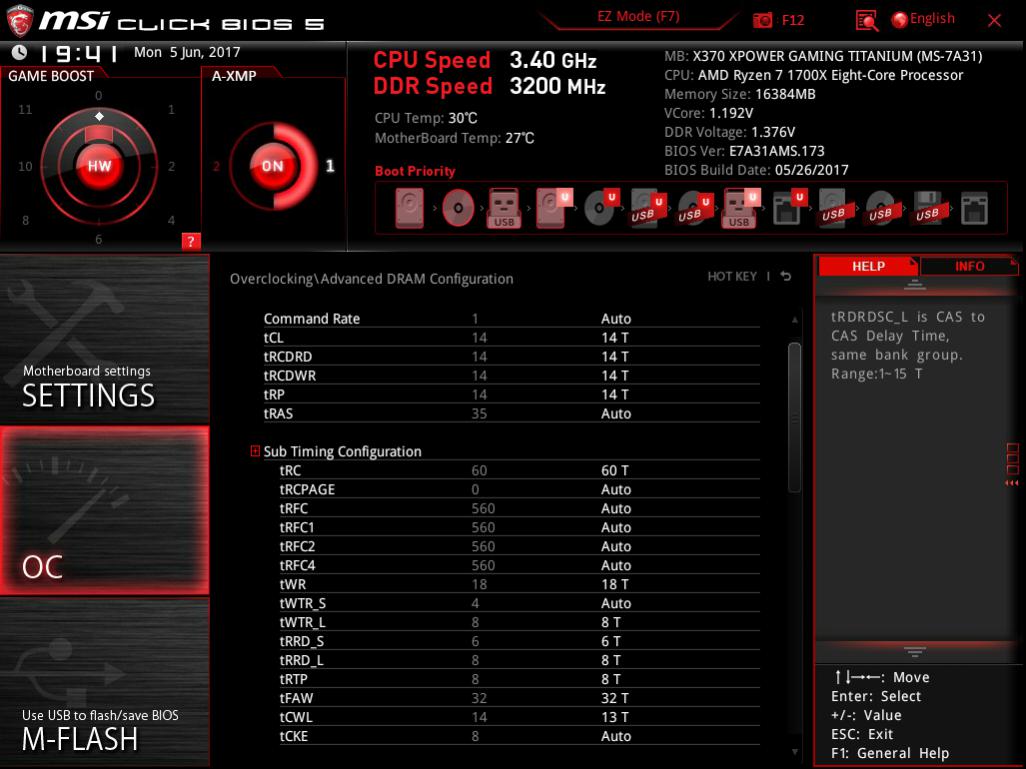

Those are some rather good numbers on the AIDA64 Memory and Cache benchmark!

ALRIGHT! Time for an update from me, finally. I haven't been able to get 3466 to POST ever again, though my reply to Nobu at the end of this post may change that, as I wasn't aware that's what the setting was for.

Nevertheless, while 3333 was working just fine, I had been using all Auto settings. Since the only tighter-timings reference I came across was for 3200, I tried to see how close I could get to that site's "moderate" sub-timings. Theirs didn't quite work, so instead of trying to nail down which one was causing instability, I loosened a couple just a tiny bit, and that did the trick. Or at least enough to keep Minecraft stable for ~10 hours of playing, whereas prior to my adjustments it would crash randomly.

ANYWAYS, here are those settings (unless mentioned, all other settings are on Auto).

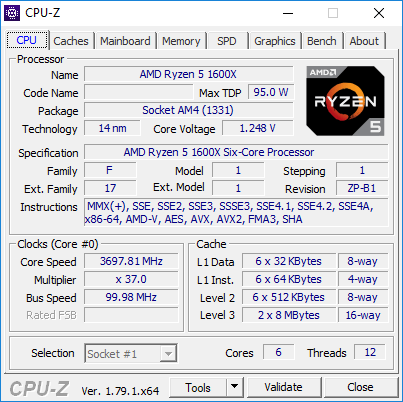

CPU P0 @ 38x

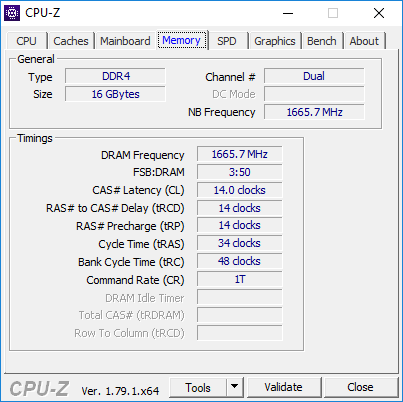

DDR Speed @ 3333

CPU NB Voltage 1.05V

CPU NB Power Duty Control "Current Balance" (favors amp output over thermal control)

DRAM Voltage 1.36V (1.376V actual)

DRAM Voltage(Training) 1.5V.

Below, an image of the Primary and SubTimings; everything else is on Auto except ProcODT which is at 48 Ohms.

(NOTE: I don't have A-XMP turned on, ignore that, as well as the DDR Speed, as I just used my 3200's timings)

Performance looks something like this:

[Attachment 26883]

Ran AIDA's Stress Test on Cache and System Memory, and it went exactly 30 minutes before failing. As such, I suspect that'll end up being "Game Stable". I just need to get FO4 on this system to actually find out :|

I'm not 100% sure that's correct. My Titanium has (hidden, but I changed that heh) a setting called DRAM Voltage(Training), as well as CLDO_VDDR. The "(Training)" voltage, at the higher RAM divisors, applies 1.4V or 1.5V, which is way more than what CLDO uses.

According to google searching, CLDO_VDDR is the "DDR PHY" voltage.

Originally Posted by The Stilt

tRC, tWR, tRDRDSCL, tWRWRSCL and tRFC are basically the only critical subtimings (for the time being).

Setting the SCL values to 2 CLKs basically makes no difference to the stability, but results in a nice performance boost.

Minimum tRC, tWR and tRFC depend on ICs and their quality.

tCWL adjustment is broken in AGESA 1.0.0.6 beta, but it makes pretty much no difference either.

http://www.overclock.net/t/1624603/rog-crosshair-vi-overclocking-thread/18350#post_26138948

What board do you have?

Ah, that'd make more sense. So, then, if I had access to the training setting you have, I could probably boot more reliably. I have got it to the point that it boots every second or third attempt now, and it's rock solid at 3200c18-18-18. Sleep functions as expected (it doesn't choke like during boot).

Thinking about returning this board and getting an asrock fatal1ty k4, though. Have until June 16th to decide...

I haven't paid close attention to what others have been getting in the AIDA Cache & Memory benchmark, but I'm quite surprised to see I'm beating yours, given you're running just as much RAM as I am, low timings at 1T, and faster everything, heh. Have you tried running 16-16-16-36, just to see if that 14 is dragging things down by being a bit too quick (causing some sort of stall)?

And to address the last sentence while I'm at it... man, that tCWL is way higher than I'd have expected O_O On my Titanium it is always 1 or 2T below tCL. For example, if everything is on Auto, it'll set primary timings to 16-15-15-36, and tCWL will be 14. Though I just realized I had set it to 14, so it's at parity with tCL. I know he says it's 'broken', but I think we can both agree that changing it has an impact on POSTing; I know it has for me. However, I can't say for certain that MSI has added the true 1.0.0.6 AGESA, or if it's a cobbled one based on 1.0.0.4a. Software that reads the string says .4a, but the hex value is 8001127 (or 24? regardless...), and that's a fair bit higher than before. So it wouldn't be too surprising if, at least for MSI, the string doesn't get updated even when the AGESA has been. heh

For those who want to play around with Sub-Timings, The Stilt said this which I've found to be very useful:

The_Stilt said:

tRC, tWR, tRDRDSCL, tWRWRSCL and tRFC are basically the only critical subtimings (for the time being).

Setting the SCL values to 2 CLKs basically makes no difference to the stability, but results in a nice performance boost.

Minimum tRC, tWR and tRFC depend on ICs and their quality.

tCWL adjustment is broken in AGESA 1.0.0.6 beta, but it makes pretty much no difference either.

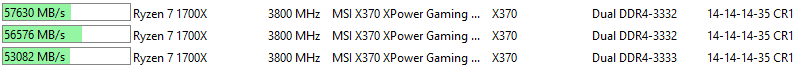

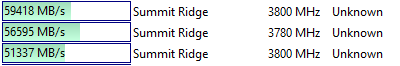

Setting the two SCL values to 2 made a good difference in Copy speeds and overall RAM speed. The first image is with tRDRDSCL and tWRWRSCL on Auto (6 each), and the second is with both set to 2.

tRC going from 75 to 65 helped as well. tCWL on Auto was 24, but it wasn't stable going any lower, so I left it alone. Anyway, I found that to be useful information.

Formula.350: I have a Gigabyte X370 K5. It's CRC-protected, but (after a lot of searching) I found the tools I needed to mod/flash the BIOS (sort of; a lot of the features in the tools didn't work or were disabled, depending on the version I got).

I was just trying to replace the AGESA, so I never looked at any of the hidden features, and then they finally released a beta with the new AGESA, so I don't know if the modded DOS flashing utility I downloaded works or not.

I think this would be a good reference as I'm reading stuff all over the map, so post your config and ram speed so we can see what the reality is.

After some experiments, the best I can get out of my corsair vengeance lpx 16gb (2x8gb) 3000mhz cas15 1.35v kit is:

Ryzen 1700

Asus b350m-a

Bios 0502

2400mhz, cas15, 1.35v

edit: The memory sticks are in the A2/B2 slots (the second slot of each channel), per the ASUS manual's recommendation for 2 sticks. I haven't tried A1/B1.

The kit name is

CMK16GX4M2B3000C15B

I couldn't get it to POST over 2133 with the BIOS it shipped with. Interestingly, the original BIOS had a ton more RAM parameters to tweak, but they didn't help. I tried up to CAS18 and 1.375V, but it wouldn't POST over 2400MHz. According to the ASUS site, this kit is double-sided and rated at 2133 on their board, so at least I got it a little higher.

Edit 4/17:

Bios 0604

2666MHz, CAS16, 1.35V. The BIOS is set to CAS15, but CPU-Z shows 16; not sure why.

Getting there.

Conspiracy theory time! lol

Found an old AIDA on my computer, one I had initially been using, which couldn't recognize Ryzen as anything beyond a 16-core processor at its clock speed. It didn't even know it was HT; it just thought it was all native cores. The L2 and L3 cache speeds were also horribly inaccurate, but there is one curiosity of the older benchmark code that I think is worth bringing up...

Here are my Read/Write/Copy scores when run by a recent version (I don't have the latest):

[Attachment 26972]

And when run on the older version...

[Attachment 26979]

Sure, it's a give and take... Take from the older result's Read, give to the newer result's Write, but still. You'd expect results as non-complex as memory speeds to only increase. IMO, at least.

[/conspiracy]

Well, besides code and algorithm changes, compiler changes and the build system can make a difference. Pretty sure it says somewhere (either on the website or in the program) that results aren't comparable between versions.

Right right, and that's totally understandable. I made sure to draw comparisons between the two as well; I just didn't want to bloat the screen with them.

Yeah, damn Gigabyte. They started doing that a number of years ago, as far back as my Llano FM1 system. Prior to that I had an 890GX which I was able to mod quite easily (both are Award), but while the same tool opened the FM1 board's BIOS and could mod it just fine, the BIOS wouldn't flash due to a CRC mismatch :\ It was a huge bummer. And I don't think there was an "Engineering Version" of the flasher, like with AMI, so I don't think I can force it to flash. Oh well, I made do lol

Well, from what I've read about nabbing the AGESA from one BIOS and adding it to another, it's not as easy as it might seem, particularly on AMI systems (definitely not as easy as with Award-based systems, or with how Intel microcode is packaged). The post I read was referring to Bulldozer systems, but it looks like the AGESA is still packaged the same way, spanning multiple modules. It was said that these BIOSes aren't really compiled as separate pieces the way it might look: they are still modules, but the BIOS as a whole is coded around them, in a sense. So pulling them out and mix-and-matching won't yield the results we'd anticipate. Even so, I can't imagine that the memory timings or other features would become available unless you were able to hex edit them in. Based on their findings, hex editing seemed to be the most successful way of modding in AGESA changes. I had the exact same idea you had... lol

EDIT: Just finished testing The Stilt's "-SCL @ 2" tweak, and... well I also changed tCWL to 12 (it was set to 13 but wouldn't apply, so ran at 14)... I have a feeling one of them has impacted my L3 performance. >_>

Memory speed has increased nicely, and the latency dropped 2ns

[Attachment 26960]

Never got those numbers. I tried 3466 and some BCLK, and it looks like I corrupted Windows. My memory hole is right around 3333MHz, so that strap won't work unless I adjust the CPU_SOC (not sure of the exact name), which on the CH6 defaults to 950mV. It's just a bear setting it (having to turn off the machine, if memory fails it goes back to default, etc.). Anyway, thanks for sharing. AIDA64, if accurate, would be showing MSI kicking ASUS's ass in memory speeds. Well, at least mine, but I can't recall anyone else getting those kinds of results from ASUS.

I'm 98.738% sure that CPU_SoC is the alternate name for CPU NB, as that's the correct voltage for it by default (actually, I believe 0.900V is AMD's default). To be fair, I don't even know if I need mine set to 1.05V like I have it. It's just that previously, when trying out speeds and timings, I seemed to need a smidge more to get it to POST. Using 1.05V wasn't really based on anything but a gut reaction to the Titanium (due to AGESA .0.6?) applying 1.15V when on Auto. I had been just fine with 0.900V at 3200, so I couldn't imagine why it'd need to be higher, so I just picked a voltage in the middle of those lol

THAT being said, yes, my results are curiously higher than most people's that I'm coming across. I may have to update it to see if it's a quirk of my version or what... Then again, looking at your screenshots, we do in fact have the same BenchDLL, v4.3.741. Regardless, the other thing I find very curious is that you're running 3.9GHz, I'm running 3.8GHz, and yet my L1 speeds are quite a lot faster than yours as well; I broke 1TB/s, and it reliably gets that result between runs and reboots.

IF you feel up for it, I'm curious whether it has anything to do with my approach to running 3.8GHz, or with the 38x multiplier. One thing I've seen mentioned once or twice on other sites is that W10 doesn't like the 1/4 multipliers. Whether or not that's true, or if that's somehow at play here, no idea. I presume running at 36x isn't stable for you? If it's stable enough to get a run of benchmarks in, maybe see if that has anythi........ you know what..... o_0 This just smacked me out of nowhere... OK, so "my approach for 3.8GHz" is that I use the K17TK program (I made a thread about it, prolly on page 2 of this subforum), as I've not taken to BIOS clock adjustment yet. That being said, my early testing was at 36.25 and 36.5 multi. There have been a couple of times where I've adjusted it to that and suddenly my AIDA scores have plummeted. As in my CPU Queen, for example, lower than even my default runs. I had been chalking it up to me screwing around with other settings, but now I'm wondering if that's not it at all, and Ryzen has some sort of hiccup with them? So we know that the caches run at full speed, but what if they are somehow unable to run at the same 1/4 multi steps that the CPU can, and it's causing cache misses? I'm going to check right now and see what happens if I use 37.75x instead of 38x. After that, I'll see what setting 38x in the BIOS does, instead of using the utility from Windows.

[Side Note: Computer failed to wake up from sleep with the SCL set to 2, but unfortunately I had changed tCWL to 12 (from 14) as well, so it could be that. I'll put it back where I had it and leave SCL where it is. Was having restart issues, too... Figured what the hell, dropped the Training voltage from 1.5V to 1.45V and PRESTO! Booted no problem. I might have to try that for 3466 as well... Speaking of which, why aren't you using 3466 instead of 3200+109BClk??]
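On the 3466 vs. 3200 + 109 BCLK question, here's a rough Python sketch for comparing the two. It assumes the memory data rate and the core clock both scale linearly with BCLK from a 100 MHz base, which is how BCLK overclocking on first-gen Ryzen boards is generally described; the constant and function names are hypothetical.

```python
BASE_BCLK_MHZ = 100.0  # assumed stock reference clock


def effective_data_rate(strap_mts: float, bclk_mhz: float) -> float:
    """Memory data rate after a BCLK change, assuming linear scaling with BCLK."""
    return strap_mts * bclk_mhz / BASE_BCLK_MHZ


def core_clock_mhz(multiplier: float, bclk_mhz: float) -> float:
    """Core clock is the CPU multiplier times BCLK."""
    return multiplier * bclk_mhz


if __name__ == "__main__":
    # 3200 strap at 109 MHz BCLK vs. the 3466 strap at stock BCLK.
    print(f"{effective_data_rate(3200, 109):.0f} MT/s")  # 3488 MT/s
    # The CPU multiplier is scaled by the same BCLK.
    print(f"38x @ 109 MHz: {core_clock_mhz(38, 109):.0f} MHz")  # 4142 MHz
    print(f"35x @ 109 MHz: {core_clock_mhz(35, 109):.0f} MHz")  # 3815 MHz
```

Under those assumptions, the 3200 strap at 109 MHz lands slightly above the 3466 strap, but the multiplier has to come down (e.g. to 35x) to keep the cores near 3.8GHz.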