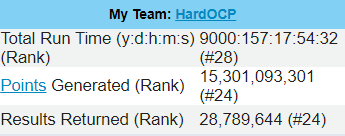

In Windows the power usage didn't change whether I was running one WU or two. The only difference was that the GPU had very little downtime. While a work unit was in its GPU phase the GPU was at 100% utilization, and I was only running more than one work unit so that the GPU wasn't idle, or at least not very often, while another was doing its CPU processing. There are two possible explanations for this: either the Polaris architecture is more efficient at running these, or it's simply not powerful enough to run these work units at anything less than 100% utilization. It's exactly the same under Manjaro, which is why I haven't bothered to set it up to run a third work unit simultaneously. When crunching a work unit the GPU is at 100% use, and two work units are set to run so that GPU downtime during CPU crunching is kept to a minimum.

Lol, a bird in the hand is worth two in the bush. Also, have you tried to run more than two in Windows? I would keep increasing the number of tasks till you reach 100% GPU usage in Afterburner, and you should get into that 150 range you're talking about and get some good points. I mean, I assume good points, since I don't have an RX 570 lol. Best utilization, maybe.

That said, the points look good enough. The GPU points are blowing away what my 5800X and 2600X have been doing combined on CPU. It will take a few days for the points output to stabilize, but so far today I've seen a 400k+ point increase at the halfway mark of the day's stats, and the GPU wasn't crunching anything for several hours of that half day. Not bad for an extra 50-55 W of power over an idle GPU (idle is usually around 32-33 W, and it's currently using 85-89 W while crunching).
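For anyone who wants to plug in their own readings, here is a rough sanity check on the power numbers above. It is only a sketch: the idle and load ranges are the ones quoted in this post, while the function names and the 24-hours-a-day assumption are made up for illustration. Taking the extremes of the two ranges gives a slightly wider spread than the ballpark figure quoted above.

```python
# Wattage ranges quoted in the post (low, high). Everything else here is
# an illustrative assumption, not a measurement.
IDLE_W = (32, 33)
LOAD_W = (85, 89)


def extra_power_range(idle, load):
    """Return the smallest and largest possible increase over idle, in watts."""
    return load[0] - idle[1], load[1] - idle[0]


def daily_kwh(watts, hours=24.0):
    """Energy used per day in kWh, assuming the card crunches for `hours` hours."""
    return watts * hours / 1000.0


if __name__ == "__main__":
    low, high = extra_power_range(IDLE_W, LOAD_W)
    print(f"Extra draw while crunching: {low}-{high} W")
    print(f"Extra energy per day: {daily_kwh(low):.2f}-{daily_kwh(high):.2f} kWh")
```

Multiply the kWh figure by your own electricity rate to get a daily cost; the card won't actually be loaded every hour of the day, so treat it as an upper bound.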


