A lot of the leaks have been at 4K too. Even the 3DMark stuff. I wonder if that’s because Navi’s uber cache isn’t as helpful at lower resolutions.

As others mentioned, 1440p should benefit more, not less. I see AMD doing better @ 1440p, but I'm sure the same crowd that screams Intel is the only thing to buy if you game because it wins @ low resolutions will write off any 1080/1440 wins and focus only on 4K for the GPU.


[WCCF] [IgorsLab] Alleged performance benchmarks for the AMD Radeon RX 6800 XT "Big Navi" graphics card have been leaked out.

Also there’s no indication that the consoles have any special caching in place. This could be a PC only play.

What? There is an indication... It was in RDNA1 and modified/updated as part of the RDNA2 architecture... Why on earth would it not be part of RDNA2 in Xbox/PS5? I would hazard a guess that this is where it originated (console design) and then was modified to work for desktop GPUs.

If you read up on the architecture for Xbox, it matches up with the same for RDNA2 PCIe cards. I'm not going to research enough to see if it's the same amount of cache, but it's the same design and should have the same benefits.

https://www.google.com/amp/s/www.te...2-architecture-and-zen-2-cpu-enhancements?amp

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,004

I really hope the Lemmings won't really care about the RX6000 launch, because they think Nvidia is king, but this was said about Ryzen too, everyone thought Intel is King and look where they are now.

The same could happen to Nvidia if they don't pull their heads out of their butts.

Intel still outsell AMD 10 to 1 on everything except DIY.

Why? Because mindshare. It also helps that Intel is still faster in some workloads, so if you're gaming or using Adobe products, even a well-educated enthusiast is going to say 'buy Intel'.

Even though most enthusiasts will recommend AMD, and the data shows that they win in the majority of workloads, Intel have been winning because they are the de facto standard. The status quo. The Frosted Flakes™. If Intel win in a SINGLE workload, they win everything. AMD is still considered the 'Flakes that are Frosted'. And the only way they are going to be able to break out of their budget-alternative image is to literally make it so that they are the ONLY choice.

Intel still outsell AMD 10 to 1 on everything except DIY.

Why? Because mindshare. It also helps that Intel is still faster in some workloads, so if you're gaming or using Adobe products, even a well-educated enthusiast is going to say 'buy Intel'.

Even though most enthusiasts will recommend AMD, and the data shows that they win in the majority of workloads, Intel have been winning because they are the de facto standard. The status quo. The Frosted Flakes™. If Intel win in a SINGLE workload, they win everything. AMD is still considered the 'Flakes that are Frosted'. And the only way they are going to be able to break out of their budget-alternative image is to literally make it so that they are the ONLY choice.

Well that and supply, Intel also out-produces AMD 10:1.

Smoked Brisket

Gawd

- Joined

- Feb 6, 2013

- Messages

- 786

remember, if big Navi beats an overclocked 3090 in 99 out of 100 scenarios, but is within margin of error of a SINGLE ONE scenario, Nvidia has the faster card.

This is how Mindshare works. The only way for AMD to claw back mindshare is to be exclusively faster in. every. game. If Nvidia wins in a SINGLE GAME, it's status quo preserved.

"In the ballpark" only solidifies Radeon as a "Budget-Alternative" brand. Like the knock-off breakfast cereal brands that are what you buy when you can't afford real cocoa pops.

I think you are going too far, but I get the overall point. There is one big caveat this time. Nvidia had a horrific launch. Not many regular enthusiasts have cards in hand still. If AMD competes to the extent that it effectively ties Nvidia at the top tiers and is able to get these cards in the hands of people, they can grab market share at an increased rate and be a generation ahead of expectations.

What? There is an indication... It was in RDNA1 and modified/updated as part of the RDNA2 architecture... Why on earth would it not be part of RDNA2 in Xbox/PS5? I would hazard a guess that this is where it originated (console design) and then was modified to work for desktop GPUs.

If you read up on the architecture for Xbox, it matches up with the same for RDNA2 PCIe cards. I'm not going to research enough to see if it's the same amount of cache, but it's the same design and should have the same benefits.

https://www.google.com/amp/s/www.techpowerup.com/271115/microsoft-details-xbox-series-x-soc-drops-more-details-on-rdna2-architecture-and-zen-2-cpu-enhancements?amp

Can you quote the specific bit that talks about a large on die cache? It’s certainly not there on Navi 10.

What? There is an indication... It was in RDNA1 and modified/updated as part of the RDNA2 architecture... Why on earth would it not be part of RDNA2 in Xbox/PS5? I would hazard a guess that this is where it originated (console design) and then was modified to work for desktop GPUs.

If you read up on the architecture for Xbox, it matches up with the same for RDNA2 PCIe cards. I'm not going to research enough to see if it's the same amount of cache, but it's the same design and should have the same benefits.

https://www.google.com/amp/s/www.te...2-architecture-and-zen-2-cpu-enhancements?amp

The PC cards have 32 MB of continuous cache; the Xbox has 4 sets of 8 MB that use interconnects. One of the many little differences.

As others mentioned, 1440p should benefit more, not less. I see AMD doing better @ 1440p, but I'm sure the same crowd that screams Intel is the only thing to buy if you game because it wins @ low resolutions will write off any 1080/1440 wins and focus only on 4K for the GPU. It's just that people will find ways to justify their purchases and biases whether they make sense or not. Anyways, looks like it could be a really interesting holiday season.

Really, this gen at 1440p and below the rule will be: buy what you can afford, within reason*. Intel, AMD, and Nvidia seem to have products lined up that will work well at their given price points.

* By "within reason" I mean: if you have $700 for your GPU & CPU, don’t pair a $600 GPU with an $80 CPU and expect the chart performance for the card**.

** You would expect that to be common sense, but if you look at half the systems that some of the high school and elementary students in a few of my buildings have asked me to look over, you would collectively cringe. Like a 2060 Super paired with an 8-year-old i3 kind of deal.

Can you quote the specific bit that talks about a large on die cache? It’s certainly not there on Navi 10.

https://amp.hothardware.com/reviews/amd-navi-radeon-rx-5700-architecture

"Navi features a new cache hierarchy as well. AMD added a new L1 cache and doubled the load bandwidth for the L0 cache to ALU. The new cache hierarchy reduces cache latency at each level and improved effective bandwidth as well."

This was RDNA1 and was 100% included in navi 10. This is why if you cut the memory clocks by 50% you only lose 20% performance on average. This was improved/extended for rdna2.
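For anyone who wants to sanity-check that 50%/20% figure, here is a rough back-of-the-envelope sketch. It assumes a deliberately simple model that is my own simplification, not anything AMD has published: frame time splits into a part that stretches when the memory clock drops and a part that doesn't, with no overlap between the two. The function names are made up for illustration.

```python
def perf_retained(mem_bound_fraction: float, mem_clock_scale: float) -> float:
    """Fraction of original performance kept after scaling the memory clock.

    Assumed model (a simplification): frame time = compute part + memory part,
    and only the memory part grows when the memory clock is lowered.
    """
    if not 0.0 <= mem_bound_fraction <= 1.0:
        raise ValueError("mem_bound_fraction must be between 0 and 1")
    if mem_clock_scale <= 0.0:
        raise ValueError("mem_clock_scale must be positive")
    compute_part = 1.0 - mem_bound_fraction
    memory_part = mem_bound_fraction / mem_clock_scale
    return 1.0 / (compute_part + memory_part)


def implied_mem_bound_fraction(perf_kept: float, mem_clock_scale: float) -> float:
    """Invert the model: which memory-bound share explains an observed result?"""
    if mem_clock_scale == 1.0:
        raise ValueError("memory clock must actually change to infer anything")
    # 1/perf = (1 - f) + f/s  =>  f = (1/perf - 1) / (1/s - 1)
    return (1.0 / perf_kept - 1.0) / (1.0 / mem_clock_scale - 1.0)


if __name__ == "__main__":
    # Numbers from the post above: memory clock cut by 50%, 20% performance lost.
    f = implied_mem_bound_fraction(perf_kept=0.80, mem_clock_scale=0.50)
    print(f"implied memory-bound share of frame time: {f:.2f}")
    print(f"check, performance kept at half clock: {perf_retained(f, 0.50):.2f}")
```

Under that model, losing 20% at half memory clock would mean only about a quarter of frame time is waiting on VRAM, which is at least consistent with the caches absorbing a lot of traffic. Real GPUs overlap compute and memory access, so treat it as a ballpark only.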

Also there’s no indication that the consoles have any special caching in place. This could be a PC only play.

25:20 could be an indication:


AI is just another overused technology buzzword, it seems like everything is an AI nowadays.

yes a buzzword for what does NOT exist

an if then else / switch tree is NOT ai

https://amp.hothardware.com/reviews/amd-navi-radeon-rx-5700-architecture

"Navi features a new cache hierarchy as well. AMD added a new L1 cache and doubled the load bandwidth for the L0 cache to ALU. The new cache hierarchy reduces cache latency at each level and improved effective bandwidth as well."

This was RDNA1 and was 100% included in navi 10. This is why if you cut the memory clocks by 50% you only lose 20% performance on average. This was improved/extended for rdna2.

Oh I thought you were saying the rumored infinity cache was already present in Navi 10. The shared L1 cache in each shader array on RDNA is well documented. Those L1 caches are still very small at 128KB each. The infinity cache is rumored to be something different altogether, essentially another cache level (L3?) with much higher capacity.

yes a buzzword for what does NOT exist

an if then else / switch tree is NOT ai

Isn't that basically what our brains are? A big nested switch statement tied to memory.

Oh I thought you were saying the rumored infinity cache was already present in Navi 10. The shared L1 cache in each shader array on RDNA is well documented. The infinity cache is rumored to be something different altogether, essentially another cache level (L3?) with much higher capacity.

I just meant they started adding/changing the cache hierarchy for RDNA1 and extended/upgraded it for RDNA2. This was evident from testing performance vs. memory speeds in RDNA1. This was when they introduced an extra level of cache. RDNA2 extends this by doubling L2 cache and making L0 cache available between two CUs instead of one. It's not as if this is magical and came out of nowhere. It's an advancement on the existing hierarchy. This means twice as much L0 available to each CU without actually having to double L0 cache size, so this is a good compromise between die size/cache size. Without having access to more white papers and technical details I'm not sure if/what else has changed, but they started down this trajectory with RDNA1, and this should be a better implementation.

I just meant they started adding/changing the cache hierarchy for RDNA1 and extended/upgraded it for RDNA2. This was evident from testing performance vs. memory speeds in RDNA1. This was when they introduced an extra level of cache. RDNA2 extends this by doubling L2 cache and making L0 cache available between two CUs instead of one. It's not as if this is magical and came out of nowhere. It's an advancement on the existing hierarchy. This means twice as much L0 available to each CU without actually having to double L0 cache size, so this is a good compromise between die size/cache size. Without having access to more white papers and technical details I'm not sure if/what else has changed, but they started down this trajectory with RDNA1, and this should be a better implementation.

So you’re buying into the shared L0 rumor? I’m putting my money on the 128MB big cache rumor. Maybe they’ll do both.

So you’re buying into the shared L0 rumor? I’m putting my money on the 128MB big cache rumor. Maybe they’ll do both.

Buying in? Microsoft already released details which show 2 CUs per L0... They showed it during their Hot Chips 32 presentation, where it shows 26 "dual CUs", which make up the total 52. Each dual CU shares L0.

Zarathustra[H]

Extremely [H]

- Joined

- Oct 29, 2000

- Messages

- 38,877

What you don't see is that 3DMark translates almost 1:1 to real-world gaming performance because they utilize the same graphics techniques that major developers use. That is why 3DMark is the gold standard. I'm guessing you bought a 3080? I understand you might be upset - I knew I was going to be if I purchased a card - that's why I waited, and boy am I glad I did. I guarantee if you own a 3080 you could flip that card for what you paid or more in 2 days tops and get a Navi 21, or keep the card and just enjoy it, because the 3080 is a beast of a card.

I haven't bought anything yet. I was hoping big Navi would do the trick, but I am not exactly thrilled with those 4k numbers.

To be clear, I criticize benchmarks when they are bad benchmarks. I have no brand based fandoms, and I have no interest in twisting benchmarks to make my choice seem better.

Only a fucking moron does that.

I just miss the proper tests that the H used to do.

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,820

This sounds right up my alley since I tend to prefer "knock-off" brand cereals, not just because of price but because they tend to taste better anyway.

This. The existing brands of anything that’s being copied have the issue that people expect them not to change. New entrants to the market don’t have this issue and can innovate on the Cocoa Puffs flavors and make them better.

ZeroBarrier

Gawd

- Joined

- Mar 19, 2011

- Messages

- 1,011

Going to agree with this to an extent. 99-1 is an exaggerated number, hopefully to emphasize their point. But I do agree that if AMD cards flat out win let’s say 60% of games, or win in 80% of games but only by a couple percent in most of them, then that still may not be enough to sway many people from Nvidia.

Anecdotally, amongst all my friends that PC game, 5 of them have only bought Nvidia cards for every upgrade over the past decade and 2 have bought an AMD card at some point. Those 5 don’t even research AMD’s offerings whenever they upgrade because they’ve always been happy with Nvidia. Those are the difficult customers to convert.

Some of us won't touch an AMD that only wins in 60% of games by 5% each game. Some of us remember how terrible AMD drivers are and how much a fucking headache every driver release is. Some of us just want to stick a GPU into our system and start playing an hour later.

Buying in? Microsoft already released details which show 2 CUs per L0... They showed it during their Hot Chips 32 presentation, where it shows 26 "dual CUs", which make up the total 52. Each dual CU shares L0.

And you think that’s the basis of the infinity cache rumor? A dual-CU already shares L0 in Navi 10; that’s not new.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,004

I haven't bought anything yet. I was hoping big Navi would do the trick, but I am not exactly thrilled with those 4k numbers.

To be clear, I criticize benchmarks when they are bad benchmarks. I have no brand based fandoms, and I have no interest in twisting benchmarks to make my choice seem better.

Only a fucking moron does that.

I just miss the proper tests that the H used to do.

Gamers Nexus and Digital Foundry do some amazing, in-depth reviews and they nearly always have old-fashioned text article versions of the videos for those more inclined to read.

Take for instance, some people don't like to watch the reviews. They would prefer to read an article in peace, and they complain about how 'nowadays' there are no in-depth text-based reviews. This is wrong, as all the popular reviewers provide all major reviews in both video and text forms.

This is the GN 3080 FE review in video form

This is the review in text form

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,558

Some of us won't touch an AMD that only wins in 60% of games by 5% each game. Some of us remember how terrible AMD drivers are and how much a fucking headache every driver release is. Some of us just want to stick a GPU into our system and start playing an hour later.

So what brand is that, because I have used them all and they all had driver issues? Tho I think Matrox gave me the least trouble..

Some of us won't touch an AMD that only wins in 60% of games by 5% each game. Some of us remember how terrible AMD drivers are and how much a fucking headache every driver release is. Some of us just want to stick a GPU into our system and start playing an hour later.

Remember to consider the source folks.

Zero here doesn't understand how calendars work, and proclaimed last month that big navi would be slower than a 3080 and use way more power.

Big navi could win in every metric and he'd be telling you that it was a bad buy. I encourage anyone who thinks he's a neutral commenter to look at his post history.

In before biggest navi lands between 3070 and 3080 using more power than a 3080 priced at $549.99, just to have NV release a 3070Ti that sits just above biggest navi while sipping power in comparison for $599.99.

Just my prediction, I could be totally wrong; but just how many times does history have to back hand slap people before we learn the lesson?

https://hardforum.com/threads/intel...han-the-ryzen-9-3900x.1995105/post-1044555422

BrotherMichigan

Limp Gawd

- Joined

- Apr 8, 2016

- Messages

- 376

I agree. They have to stay there to become relevant in that market. IIRC last time they were there was during the HD 7000 series vs 600 series.

Try the 290X, which competed with the contemporary Titan.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,004

Remember to consider the source folks.

Zero here doesn't understand how calendars work, and proclaimed last month that big navi would be slower than a 3080 and use way more power.

Big navi could win in every metric and he'd be telling you that it was a bad buy. I encourage anyone who thinks he's a neutral commenter to look at his post history.

https://hardforum.com/threads/intel...han-the-ryzen-9-3900x.1995105/post-1044555422

But to be fair, when was the last time AMD didn't disappoint everyone with a GPU launch?

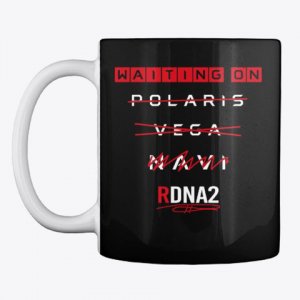

Why do you think stuff like this exists:

cybereality

[H]F Junkie

- Joined

- Mar 22, 2008

- Messages

- 8,789

Radeon VII was pretty decent, even though it was a small limited run.

But to be fair, when was the last time AMD didn't disappoint everyone with a GPU launch?

Why do you think stuff like this exists:

View attachment 292946

I guess it's all about perspective and your performance point, I've been PC gaming for a long time. They were competitive for most of that time, so maybe I'm looking at it on a macro scale.

In the last decade, 7970 was a good product, same with the 290. After that, if mid range was your thing they had/have decent offerings, especially the 5700.

If they had a high end gpu in 2017, I might have had that instead of a 1080ti.

But with all of that, I'm not foolish enough to think that they're incapable, or that nvidia is infallible.

whateverer

[H]ard|Gawd

- Joined

- Nov 2, 2016

- Messages

- 1,815

Try the 290X, which competed with the contemporary Titan.

And was just as impossible to find as the RTX 3080 for its first 6 months of existence.

https://www.extremetech.com/gaming/...urrency-mining-could-kill-amds-gaming-efforts

And from far before that:

https://www.anandtech.com/show/3587

You people act like this is some new fucking thing, but AMD has experienced this Gold Rush for the 5870 and then the 290x launch. It is just a new experience for Nvidia buyers.

Three generations of cards is enough to establish a consumer perception that "Nvidia=faster". That's why I think AMD still needs to hit Nvidia on price this generation. Being a better value WITH better performance is going to be the quickest way to win back mind share. Then AMD can charge more, like they have announced with Zen 3.

Mindshare in this case is a silly fallacy.

Trust me, I understand what you're saying but... Nvidia isn't at that level. They are just another part supplier; there is no major mind share win. If what you're suggesting were true, Nvidia would have outsold the 570/80/90 class hardware. It takes 2 seconds to pull up Steam's hardware survey to see that AMD in that market segment owns Nvidia. (And yes, they have been on the market longer... but for a long time they were next to impossible for gamers to get their hands on till mining crashed.) Yes, no doubt everything up from there is Nvidia territory by a mile... 'cause they have had the superior higher-end product for some time. In the 5700 market segment sales are actually pretty close; Nvidia does have more market share there, but I imagine that could have something to do with Nvidia's feature set even if the 5700s hang in pure raster. According to Steam, the 2060's market share is only slightly ahead.

If Nvidia's name alone sold cards, AMD wouldn't be outselling the 1660s. Clearly having a slightly faster card in that price range matters to the majority of purchasers.

Radeon VII was pretty decent, even though it was a small limited run.

Needed to be $100 cheaper. Good performance but later, hotter, buggier, louder and no next gen features vs the similar priced 2080.

If Nvidia's name alone sold cards, AMD wouldn't be outselling the 1660s. Clearly having a slightly faster card in that price range matters to the majority of purchasers.

In what alternate universe is AMD outselling the 1660? I hope you’re not referring to the rx 580 which launched 2 years earlier.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,004

Mindshare in this case is a silly fallacy.

Trust me, I understand what you're saying but... Nvidia isn't at that level. They are just another part supplier; there is no major mind share win. If what you're suggesting were true, Nvidia would have outsold the 570/80/90 class hardware. It takes 2 seconds to pull up Steam's hardware survey to see that AMD in that market segment owns Nvidia. (And yes, they have been on the market longer... but for a long time they were next to impossible for gamers to get their hands on till mining crashed.) Yes, no doubt everything up from there is Nvidia territory by a mile... 'cause they have had the superior higher-end product for some time. In the 5700 market segment sales are actually pretty close; Nvidia does have more market share there, but I imagine that could have something to do with Nvidia's feature set even if the 5700s hang in pure raster. According to Steam, the 2060's market share is only slightly ahead.

If Nvidia's name alone sold cards, AMD wouldn't be outselling the 1660s. Clearly having a slightly faster card in that price range matters to the majority of purchasers.

I work for a retail shop building, designing and repairing PCs. I only see a narrow slice of the market, to be sure, but it's quite an informative slice.

I wholeheartedly recommend RX570s and RX580s in certain price brackets. But once the budget allows for it, it's NVidia all the way. The 1660 (where I live) is just too good of a deal to pass up at its price. And the 5600/5700s are no longer stocked on our shelves because of all the returns we had to process due to black screen issues. We lost money when time is taken into account with the 5700 and 5600 series. Fool me once... So it's pretty much "if you can't afford a 1660, buy an RX580 or RX570; anything lower than that, save up more money and return to buy an RX580 or 570".

So you have this perception of AMD being the poor man's champion. Great. But that's not how to make your product sexy. It makes future cards seem cheap and plastic, even if they aren't, simply because AMD's name is attached to it.

I work for a retail shop building, designing and repairing PCs. I only see a narrow slice of the market, to be sure, but it's quite an informative slice.

I wholeheartedly recommend RX570s and RX580s in certain price brackets. But once the budget allows for it, it's NVidia all the way. The 1660 (where I live) is just too good of a deal to pass up at its price. And the 5600/5700s are no longer stocked on our shelves because of all the returns we had to process due to black screen issues. We lost money when time is taken into account with the 5700 and 5600 series. Fool me once... So it's pretty much "if you can't afford a 1660, buy an RX580 or RX570; anything lower than that, save up more money and return to buy an RX580 or 570".

So you have this perception of AMD being the poor man's champion. Great. But that's not how to make your product sexy. It makes future cards seem cheap and plastic, even if they aren't, simply because AMD's name is attached to it.

Sort of proves the point though, doesn't it... If X or Y company has the fastest card for that amount of $$$, you go with that card. You don't say skip the 580 'cause, yeah, it's faster than what Nvidia sells, but still buy Nvidia. If you said you told people that, I would cede the point. But you didn't; you just said you recommend the best card for the market bracket.

I agree AMD has NOT given Nvidia good competition at any other price bracket for a while now. My point is Nvidia doesn't have some magic "just spend 'cause it's Nvidia" power. Clueless gamers buy whatever is in their pre-built system... semi-educated ones listen to salespeople like yourself. Educated ones find the best values at their price point... and whoever has the fastest card gets the sale, UNLESS the other guy's card is a better value. If you have the fastest card, by what logic do you still need to undercut the competition?

The idea that 2 product cycles gives Nvidia a mulligan and people will just buy their cards even if they're slower for the same money is silly. IF (and we all agree these performance numbers are rumor until proven otherwise) Nvidia has the slower card... it's they who are going to have to compete on price.

PS... as an aside, you guys were missing out on 5600/5700 sales for no reason. The black screen driver issue was just that: a driver issue that is long corrected. Nvidia has had their share of those as well... Not to excuse AMD's issue; having a black screen issue, even if it only affected a handful of customers around a product launch, isn't fantastic. Love my 5700... but yeah, here is hoping this Navi2 generation has none of the stupid driver bugs or firmware games. I get how that crap can be a huge PITA for retailers, not just customers.


I work for a retail shop building, designing and repairing PCs. I only see a narrow slice of the market, to be sure, but it's quite an informative slice.

I wholeheartedly recommend RX570s and RX580s in certain price brackets. But once the budget allows for it, it's NVidia all the way. The 1660 (where I live) is just too good of a deal to pass up at its price. And the 5600/5700s are no longer stocked on our shelves because of all the returns we had to process due to black screen issues. We lost money when time is taken into account with the 5700 and 5600 series. Fool me once... So it's pretty much "if you can't afford a 1660, buy an RX580 or RX570; anything lower than that, save up more money and return to buy an RX580 or 570".

So you have this perception of AMD being the poor man's champion. Great. But that's not how to make your product sexy. It makes future cards seem cheap and plastic, even if they aren't, simply because AMD's name is attached to it.

Friend of mine who owns a shop had the same issues with the 5600/5700. Would have been nice if AMD admitted they had a problem with them like NVidia did with the 2080 Tis. It’s all caused by that bad memory batch that made its rounds. My bud was left holding the bag with a few dozen bum 5700s; he got them all returned to his supplier, but they fought him on the returns. The amount of drinking he got done that month... hurts thinking about it. He’s way bigger than me and I could not keep up.

Manny Calavera

2[H]4U

- Joined

- Jun 10, 2004

- Messages

- 3,986

no it doesn't work that way unless everyone is simply stupid.

There is THE answer. KazeoHin is right, as we all know, 99.99% of CONsumers are stupid beyond belief. nVIDIA's marketing dept will do the rest, along with confirmation bias ingrained in most folks.

There is THE answer. KazeoHin is right, as we all know, 99.99% of CONsumers are stupid beyond belief. nVIDIA's marketing dept will do the rest, along with confirmation bias ingrained in most folks.

Very possible... marketing people get paid for a reason. No one needs a marketing dept when they're a mile ahead of the competition. This might be the first time in a long while that Nvidia's marketing dept will have to earn their keep.

Of course all the marketing in the world won't help if AMD launches with tons of stock and Nvidia is still struggling.

Manny Calavera

2[H]4U

- Joined

- Jun 10, 2004

- Messages

- 3,986

Very possible... marketing people get paid for a reason. No one needs a marketing dept when they're a mile ahead of the competition. This might be the first time in a long while that Nvidia's marketing dept will have to earn their keep.

Of course all the marketing in the world won't help if AMD launches with tons of stock and Nvidia is still struggling.

Agree !

So what brand is that, because I have used them all and they all had driver issues? Tho I think Matrox gave me the least trouble..

Least trouble I have ever had with a GPU, period, is simple Intel CPU graphics. No frills, no horsepower at all, no high fps, but Intel's embedded GPU is the most stable thing I have literally ever used.

Least trouble I have ever had with a GPU, period, is simple Intel CPU graphics. No frills, no horsepower at all, no high fps, but Intel's embedded GPU is the most stable thing I have literally ever used.

I’m old and I’m tired, and sometimes it’s not about the best or the fastest. It’s about the least number of hassles when I turn it on.
