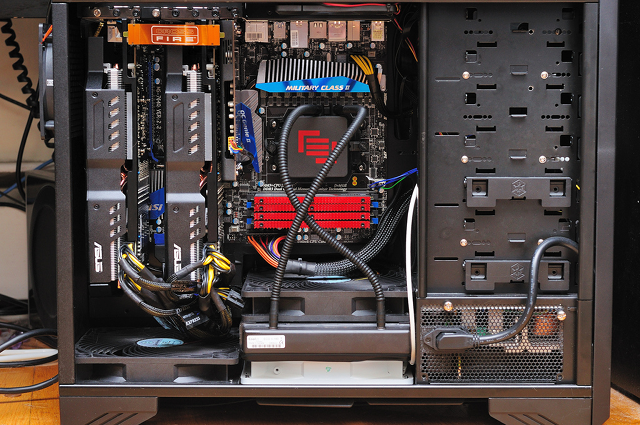

Multi-GPU setups have fallen out of favor in the past few years. Games rarely advertise support for it anymore, and AMD has even deprecated the "Crossfire" brand. TechSpot noted that the last multi-GPU test they ran was in 2016, so they grabbed a pair of RX 590s to see what the experience is like today. The results were mixed. Battlefield V, for example, doesn't seem to support the second 590 at all, while a second card boosted Battlefield 1 performance by 46%. Scaling was about as close to perfect as it gets in Strange Brigade's DX12 mode, while Battlefront II performance was significantly worse with two GPUs.

> As for the RX 590s in Crossfire, we'd much rather have a single Vega 64 graphics card. It's extremely rare that two 590s will provide higher frame rates than a single Vega 64 while also offering stutter-free gaming. If you're only ever going to play a game like F1 2018 that supports Crossfire really well, then getting two RX 570s for $300 will be a hard combo to beat. But who buys a graphics card to only ever play one or two games? Other drawbacks that are also part of this conversation include heat and power consumption. Those two RX 590s were dumping so much heat into the Corsair Crystal 570X case that you could justify spending more money on case fans, and even then you'll still be running hotter because of the way the cards are stacked. You'll also pay more for the power supply. The RTX 2070 works without an issue with a 500W unit, and 600W would be more than enough. The Crossfire 590s, though, will need an 800W unit; 750W would be the minimum.
