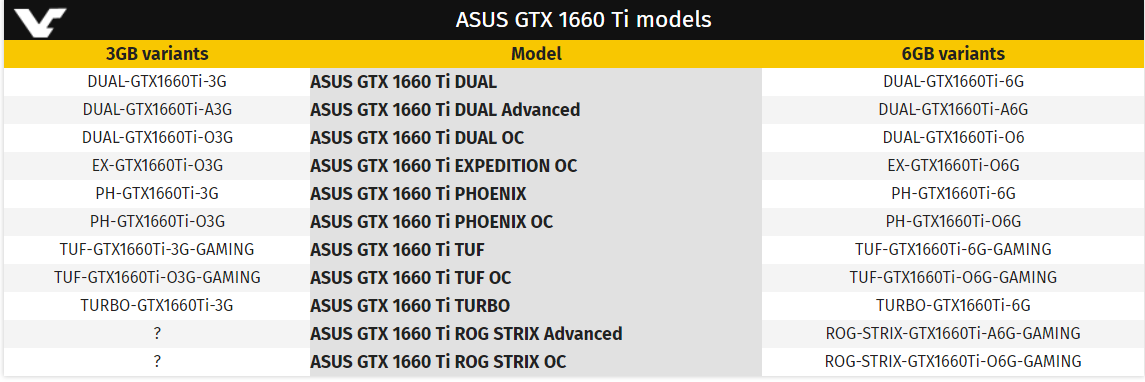

As the 1660 Ti launch nears, more and more designs from various manufacturers are popping up around the web. Videocardz posted designs from MSI, Asus, Galax, Palit, and EVGA, and even managed to get a die shot of what they claim is the TU116 GPU, as well as a shot of the MSI GeForce GTX 1660 Ti Ventus XT's PCB. On top of the pictures, Videocardz spotted yet another potential leak on the Eurasian Economic Commission's website. This one suggests that Asus could launch several 1660 Ti SKUs with 3GB of memory instead of 6GB.

The 6GB variant may be exclusive to the ROG STRIX series though, as there is no such product with 3GB memory listed. ASUS will launch DUAL, Expedition, Phoenix, TUF (the new series), Turbo and ROG STRIX series of GeForce GTX 1660 Ti graphics cards. Models are, as always, divided into Advanced, OC and non-OC variants (which basically feature different clock speeds). The GTX 1660 Ti graphics cards will be announced on February 22nd. We expect a large list of custom models to be available at launch, as the GTX 1660 Ti basically replaces the GTX 1060, the most popular SKU in NVIDIA's lineup.
