
Shopping for a card again (3090, 4080, 7900 XT/XTX)

- Thread starter pavel

- Start date

LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,651

Using Radeon Chill helped a LOT with this.

recommend tinkering with it.

Except it doesn't work in more than half of my games, which is a bummer. Even forcing global FRTC on doesn't always work.

Basing your card purchase decision on a shitty game like Hogwarts seems like a sound plan.

I liked the game, it was pretty fun until they broke the main mission with a patch and I never went back to it. I might try again at some point, it honestly was pretty entertaining.

Basing your card purchase decision on a shitty game like Hogwarts seems like a sound plan.

Lol

Starrbuck

2[H]4U

- Joined

- Jun 12, 2005

- Messages

- 2,981

I sold my 3080 too. I was starting to hit that 10GB limit with 1440p. Went with AMD this gen as I think NV's prices are just insane. Enjoying the 24GB on my ASUS TUF OC RX 7900 XTX. Good luck.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,749

Basing your card purchase decision on a shitty game like Hogwarts seems like a sound plan.

The game is good, the optimization etc. are not.

Like, on paper the wattage is similar, but the AMD card can't reduce its power draw. Say you're playing an indie game that maxes out your refresh rate but only uses 40% GPU: you're still pulling down 350-400W while the 4080 is at like 100W. I noticed right away that my whole case was getting hot and ended up having to turn my case fans way up to compensate.

Pls somebody tell me that it was just a bug and it's already fixed on AMD cards. This is fucking crazy.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567

Pls somebody tell me that it was just a bug and it's already fixed on AMD cards. This is fucking crazy.

I have no idea what he is talking about; the only issue I have heard of is dual monitors making the idle wattage higher. My 6900XT has no issues using less power in a non-demanding game, and I don't remember any reviews saying the 7900XTX had constant high power draw, just that it could draw quite a bit of power at times.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

I have no idea what he is talking about; the only issue I have heard of is dual monitors making the idle wattage higher. My 6900XT has no issues using less power in a non-demanding game, and I don't remember any reviews saying the 7900XTX had constant high power draw, just that it could draw quite a bit of power at times.

They probably had something pegging the card or something. Trying to say "AMD cards can't run at less than 100% power" is dumb and misleading.

LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,651

They probably had something pegging the card or something. Trying to say "AMD cards can't run at less than 100% power" is dumb and misleading.

That's not exactly what I said, but hey, anything against AMD these days is called "dumb and misleading". The Red Gods will surely favor you with high performance next gen... oh wait, no they won't.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

That's not exactly what I said, but hey, anything against AMD these days is called "dumb and misleading". The Red Gods will surely favor you with high performance next gen... oh wait, no they won't.

You literally said AMD can't reduce power draw.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567

That's not exactly what I said, but hey, anything against AMD these days is called "dumb and misleading". The Red Gods will surely favor you with high performance next gen... oh wait, no they won't.

Then don't say things that make no sense. I've used both companies and never seen an issue like you're describing; my guess is you're using some monitoring software that doesn't support the 7900XTX or needs an update for it. It literally makes no sense that it could draw massive wattage while not using the card at 100%; the GPU has to be doing something to cause the power draw.

LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,651

Then don't say things that make no sense. I've used both companies and never seen an issue like you're describing; my guess is you're using some monitoring software that doesn't support the 7900XTX or needs an update for it. It literally makes no sense that it could draw massive wattage while not using the card at 100%; the GPU has to be doing something to cause the power draw.

I mean, you can just go look at AMD's own website. They dropped a driver to try and fix the idle power usage being through the roof, which was triggered by using 4K or multi-monitor: the card would jump up to 3D clocks on the desktop, which causes this even though it is indeed "doing nothing", which you say can't make sense. Low-usage games have the same problem, but most people don't notice it because they aren't playing at 4K on a card like that while running a game that isn't pushing the hardware to the limit.

There is probably a reason why they dropped out of the race for the high end next gen; their process seems to need refinement. There's nothing wrong with this, it's just reality. I own 4 AMD cards right now; the only Nvidia one I have in the house is an 8800GT. All I want is for them to fix their stuff so we can have some competition, but pretending flawed products don't exist isn't going to get them there.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

I mean, you can just go look at AMD's own website. They dropped a driver to try and fix the idle power usage being through the roof, which was triggered by using 4K or multi-monitor: the card would jump up to 3D clocks on the desktop, which causes this even though it is indeed "doing nothing", which you say can't make sense. Low-usage games have the same problem, but most people don't notice it because they aren't playing at 4K on a card like that while running a game that isn't pushing the hardware to the limit.

So now we're falling back to the idle power issue?

Dude, lol. You claimed an AMD card will blow 400w playing an indie game at 40% load.

Eshelmen

Supreme [H]ardness

- Joined

- Feb 3, 2004

- Messages

- 6,663

Pls somebody tell me that it was just a bug and it's already fixed on AMD cards. This is fucking crazy.

I don't see that issue at all. In some games I'm seeing 200 watts drawn, in others 300-330.


LigTasm

Supreme [H]ardness

- Joined

- Jul 29, 2011

- Messages

- 6,651

So now we're falling back to the idle power issue?

Dude, lol. You claimed an AMD card will blow 400w playing an indie game at 40% load.

That is what I observed with both of my 7900XTXs, yes. I'm ballparking the wattage because I'm looking at the draw on my UPS, not using a monitoring overlay, but it was somewhere around the card's normal gaming power usage. I had two different models with different power targets: one was a 355W card and the other was 420W.

I've seen various posts about this in other places too, but I don't think many people notice it because there was never a negative effect on gameplay. I just happened to notice that my entire case was getting ridiculously hot after I swapped out the 4090 so I was trying to figure out what was going on.

I have also complained about the exact opposite on Nvidia cards. At one point there was a game I was playing that would trigger desktop idle mode and I would get single-digit fps. That particular bug still exists, but because the game engine is no longer used in new stuff, it likely won't ever be fixed with driver patches.

I don't see that issue at all. In some games I'm seeing 200 watts drawn, in others 300-330.

View: https://www.youtube.com/watch?v=HznATcpWldo&t=281s

Watch this video.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567

Not 1 soul is arguing that AMD is more efficient this generation, but you can tweak it, just like you can with an Nvidia card, to draw less and still perform. Anyone who read any reviews should know this and be unsurprised.

Not 1 soul is arguing that AMD is more efficient this generation, but you can tweak it, just like you can with an Nvidia card, to draw less and still perform. Anyone who read any reviews should know this and be unsurprised.

You can only do so much though - it's the architecture/tech. And also, the other complaint is with the drivers - I read a lot of complaints with AMD cards - ppl give up. Sure, I know you can blame user error, but it's rarely the other way around, i.e. ppl who complain about Nvidia (not heat; not power) and switch to AMD. The main complaints with Nvidia seem to be the price of the cards and the crippling of them.

So, the concern about heat/temps and the power consumption seem to be legitimate.

That guy probably has legit complaints about the power (drivers, too, perhaps) - and even if there's some monitoring issue, then that's yet another peculiar add-on to the problem list, no?

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

the other complaint is with the drivers - I read a lot of complaints with amd cards

To this day I still find it amusing that seemingly everyone has an opinion on AMD drivers despite AMD having far, far fewer sales/market share than Nvidia. Everyone "has a friend" who just had nothing but issues with AMD. That guy must have a lot of friends.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567


Not really. I've used both, and both companies have had bad drivers. Most of the time the issue gets fixed fairly quickly, but Nvidia had some bad ones that ate cards at one time. Haters love to label AMD drivers bad and give next to no specifics, or they latch on to 1 known issue that affects a few users. Probably the biggest issue AMD had that took a while to fix was for VR users. Both companies' cards get hot and use wattage when being pushed, and the actual temps of the card are meaningless unless they are throttling, but high-end cards have always been power hogs for the most part.

He may have a legit issue, but when one is vague and generalizes things, I tend to have more doubt that there is an issue. If that were a common issue, reviewers would have caught it and many other users would be seeing it. It could just be something else in his machine causing issues; he had a water leak inside his case, so god knows what issue that could have caused.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

It could just be something else in his machine causing issues; he had a water leak inside his case, so god knows what issue that could have caused.

Confirmation bias. Someone happens to have an issue with a Radeon card installed, so it must be Radeon. Internet said so. Many people just assume without trying to troubleshoot any other variables.

*Edit* Keep waiting for my 6800xt to run into one of the many crippling issues I'm supposed to have. Keeping my fingers crossed

Not 1 soul is arguing that AMD is more efficient this generation, but you can tweak it, just like you can with an Nvidia card, to draw less and still perform. Anyone who read any reviews should know this and be unsurprised.

Would like to see somebody here with a 7900XTX testing CS:GO while power limiting it to 86 watts.

Eshelmen

Supreme [H]ardness

- Joined

- Feb 3, 2004

- Messages

- 6,663

Would like to see somebody here with a 7900XTX testing CS:GO while power limiting it to 86 watts.

Why would you even want that?

Why would you even want that?

"you can tweak it just like you can with a Nvidia card to draw less and still perform"...

I'm curious to see what performance actually remains at the end if you lock it to the same level of power consumption. I wonder if it's even possible; it would probably just crash. If this video is 100% legit and that's how the cards actually perform, it's just incredibly embarrassing how much worse AMD's power efficiency is in less performance-heavy games.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

Being less efficient is a far cry from "always maxed at 400w no matter the game"

Being less efficient is a far cry from "always maxed at 400w no matter the game"

Yeah, sure, but the video above is still shocking. I wouldn't even think twice about paying $300 more for a completely silent and cool card when I'd be playing CS:GO etc. If this is real and not a driver bug, it's fucking nuts in my eyes.

To this day I still find it amusing that seemingly everyone has an opinion on AMD drivers despite AMD having far, far fewer sales/market share than Nvidia. Everyone "has a friend" who just had nothing but issues with AMD. That guy must have a lot of friends.

AMD has a rep for driver problems more than Nvidia does. I really don't want to go to the trouble of proving that, so I hope you're not asking me to. Or are you just trying to troll? Which is it?

This debate I find annoying and time-consuming. You can say what you want but I'm not changing my mind on it. It's pretty obvious to me.

In fact, it makes it worse that the market share is so low - if they want higher market share, they should devote more time to the drivers and experience. Also, the lack of features or how long it takes for features to progress - especially, when it's not related to gaming.

Being less efficient is a far cry from "always maxed at 400w no matter the game"

It's not just 'being less efficient' - at least, I don't think the YouTuber was only saying that. It's HOW much less efficient it is than a comparable competitor card: when the performance is neck and neck, or even if one is surpassing the other, the AMD card is way more power hungry and inefficient. It's relative, not just an examination of the AMD card on its own.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,843

I really don't want to go to the trouble of proving that, so I hope you're not asking me to. Or are you just trying to troll? Which is it?

Not asking you to prove anything, nor trolling. You never said your decision was already made, or that any dissenting opinions would be considered trolling.

Enjoy your purchase.

Not asking you to prove anything, nor trolling. You never said your decision was already made, or that any dissenting opinions would be considered trolling.

Enjoy your purchase.

I haven't decided on a GPU yet. Even if I think AMD drivers are more problematic, there are a lot of ppl who are satisfied - so, I agreed with your post in part - and I am prepared for any risk. At least with drivers there is a chance of a fix, unlike hardware-related issues. Sorry if I sounded aggressive or whatever. I just think AMD probably has more driver complaints; whether that is warranted or not, it's the case regardless, and I don't see how one can argue otherwise - that's just my perception.

Not really. I've used both, and both companies have had bad drivers. Most of the time the issue gets fixed fairly quickly, but Nvidia had some bad ones that ate cards at one time. Haters love to label AMD drivers bad and give next to no specifics, or they latch on to 1 known issue that affects a few users. Probably the biggest issue AMD had that took a while to fix was for VR users. Both companies' cards get hot and use wattage when being pushed, and the actual temps of the card are meaningless unless they are throttling, but high-end cards have always been power hogs for the most part.

He may have a legit issue, but when one is vague and generalizes things, I tend to have more doubt that there is an issue. If that were a common issue, reviewers would have caught it and many other users would be seeing it. It could just be something else in his machine causing issues; he had a water leak inside his case, so god knows what issue that could have caused.

Perhaps, but I think it can be argued that AMD card owners have more driver complaints, in general/overall, than Nvidia card owners. There's more 'card switching' from AMD to Nvidia than the other way around - that might be a guess, but I can find lots of 'ughhhh amd drivers' complaints even on the AMD sites, and there are many on reddit and forums as well. Obviously there are Nvidia ones as well, but I'm talking sheer numbers, volume, etc.

I'm also talking about reputation: whether or not it's justified, ppl have the perception that AMD drivers are more problematic. Would there be no reason for that? I doubt it.

OnceSetThisCannotChange

Limp Gawd

- Joined

- Sep 15, 2017

- Messages

- 250

If I was buying with focus on VR or productivity, I'd pay a little more and get a 4080, but as I got a reasonable deal on 7900XTX this is what I finally bought + my OS will be Linux, so an even easier choice. I waited since 1080ti to finally upgrade. Did not have an AMD/ATI card in ages, X850XT was the last one.

Not exactly concerned with drivers, will see how it goes. Just reading it seems to be similar, there are always issues, it does not appear that AMD is significantly worse at the moment - which was the case during the initial launch of RDNA, right now it appears to be similar. Issues I expect are high power draw with multiple monitors, but planning to drive a single 4k display and we will see if there is more once I put it together next week.

edit: did not have a desktop card, but I did buy a 6800M laptop in the meantime, no major issues so I guess this is where confidence is coming from


If I was buying with focus on VR or productivity, I'd pay a little more and get a 4080, but as I got a reasonable deal on 7900XTX this is what I finally bought + my OS will be Linux, so an even easier choice. I waited since 1080ti to finally upgrade. Did not have an AMD/ATI card in ages, X850XT was the last one.

Not exactly concerned with drivers, will see how it goes. Just reading it seems to be similar, there are always issues, it does not appear that AMD is significantly worse at the moment - which was the case during the initial launch of RDNA, right now it appears to be similar. Issues I expect are high power draw with multiple monitors, but planning to drive a single 4k display and we will see if there is more once I put it together next week.

edit: did not have a desktop card, but I did buy a 6800M laptop in the meantime, no major issues so I guess this is where confidence is coming from

How is the Linux experience so far? I plan on dual booting - I'll have an extra SSD to boot Linux. I had issues with DR (DaVinci Resolve) last time I tried to install it on Ubuntu and Fedora, but it was an easy install on Windows. I want to try it again, but I'll mostly use Windows on that.

One of the reasons (besides price) for considering the 7900 XTX was Windows use. Also, I have reason to believe that AMD cards are improving for productivity, at least for the software I'll be using: it's not too bad in DaVinci Resolve. I have doubts about the 6000 series of cards, but the 7900 XTX is apparently close to the 4080 in performance. The 24GB of VRAM is good if needed. The higher power consumption is the main/biggest negative, imho.

I am interested in getting into Blender too - I used it a long time ago. AMD is pretty awful in Blender, except that the introduction of HIP-RT is at least potentially making it a lot better by increasing render speeds. Unfortunately, that feature doesn't work in Linux yet; my impression is that there are licensing issues and it might need proprietary software, which makes it a bit more complicated to use in Linux.

I'll only be using one display (a 4K TV), so, yeah, the multiple-monitor issues don't concern me. Those will probably be fixed eventually, but, yeah, AMD seems to take its time to iron out issues and bring support to newer hardware and systems, at least when it's not gaming-related.

OnceSetThisCannotChange

Limp Gawd

- Joined

- Sep 15, 2017

- Messages

- 250

Don't know yet. Got the card, but I'm working & travelling this week and weekend; hope to put it together sometime next week. I can check in at a later point.

Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,567


It's going to have higher power draw with multiple monitors. They got it down quite a bit with the last update, but it's going to have higher draw than a similar Nvidia card. Part of the reason, from what I hear, is the newer DisplayPort version they used; apparently it requires more juice.

It's going to have higher power draw with multiple monitors. They got it down quite a bit with the last update, but it's going to have higher draw than a similar Nvidia card. Part of the reason, from what I hear, is the newer DisplayPort version they used; apparently it requires more juice.

Oh, really? I haven't heard of that reason yet.

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,123

It's not just 'being less efficient' - at least, I don't think the YouTuber was only saying that. It's HOW much less efficient it is than a comparable competitor card: when the performance is neck and neck, or even if one is surpassing the other, the AMD card is way more power hungry and inefficient. It's relative, not just an examination of the AMD card on its own.

This might be true in this generation. Nvidia has a new VRM design where they claim a 10x improvement in power management response time. That might allow more refined voltage curves, needing less voltage overhead for stability:

From TechPowerUp:

In the chart above, NVIDIA shows how current spikes get mitigated by their new VRM design, which uses a PID controller feedback loop. While the RTX 3090 bounces up and down, the RTX 4090 stays relatively stable, and just follows the general trend of the current loading pattern thanks to a 10x improvement in power management response time. It's also worth pointing out that the peak power current spike on the RTX 3090 is higher than the RTX 4090, even though the RTX 3090 is rated 100 W lower than the RTX 4090 (350 W vs 450 W).

On previous generation AMD 6XXX/Nvidia 3XXX, AMD cards were more power efficient.
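The TechPowerUp excerpt mentions a VRM that uses a "PID controller feedback loop". As a rough illustration of what that term means (not NVIDIA's actual controller, whose design and gains aren't public), here is a minimal discrete PID loop driving a toy first-order "supply" toward a target voltage. The plant model, the 5 ms time constant, the gains and the 1 ms step are all hypothetical values chosen so the loop settles cleanly; `kd` is left at 0, so this is effectively a PI loop.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (hypothetical gains, not any vendor's)."""
    kp: float
    ki: float
    kd: float
    dt: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measured: float) -> float:
        error = setpoint - measured
        self.integral += error * self.dt                    # I term: accumulated error
        derivative = (error - self.prev_error) / self.dt    # D term: rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(steps: int = 200, dt: float = 1e-3, target: float = 1.0) -> list[float]:
    """Close the loop around a toy first-order plant and return its output per step."""
    pid = PID(kp=1.0, ki=200.0, kd=0.0, dt=dt)
    tau = 5e-3   # hypothetical plant time constant in seconds
    v = 0.0      # plant output starts at zero
    trace = []
    for _ in range(steps):
        u = pid.update(target, v)   # controller reacts to the measured error
        v += (u - v) * dt / tau     # plant relaxes toward the control input (Euler step)
        trace.append(v)
    return trace


if __name__ == "__main__":
    out = simulate()
    print(f"after {len(out)} ms: {out[-1]:.4f}")
```

With these toy numbers the output climbs to the 1.0 target without overshooting; pushing `ki` much higher makes the loop ring around the target before settling. Nothing here models the "10x response time" claim, which is about how fast the real hardware controller reacts.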

Tamlin_WSGF

2[H]4U

- Joined

- Aug 1, 2006

- Messages

- 3,123

Are you going to produce content of value (in $$$, not sentimental value) or just tinker a bit on a hobby basis/for educational purposes? If it's actually money-generating work, build a dedicated system for it without any crap you don't actually use for work. Don't dual boot if you can avoid it. Unless you have no choice due to budget constraints, I would not overthink all the other things you can use the system for, and would plan the build for professional use if you are actually going to produce content that generates money.

Same goes if you want to do some money-generating gaming streams or similar. Build a smaller computer for streaming, with capture from the gaming computer. This way, stream and chat stay up if you need to restart the gaming computer for some reason, or while trying out the newest, hottest and buggiest game for your viewers.

If it's not serious work, both AMD and Nvidia cards can do the job. Nvidia has better support in some programs. For Linux, AMD is less of a headache, especially if you do some distro hopping or want to use gamescope for gaming. Both AMD and Nvidia can give you stable systems for games in Windows. Both have some isolated issues (read the release notes for drivers), but in general, both are stable.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,661

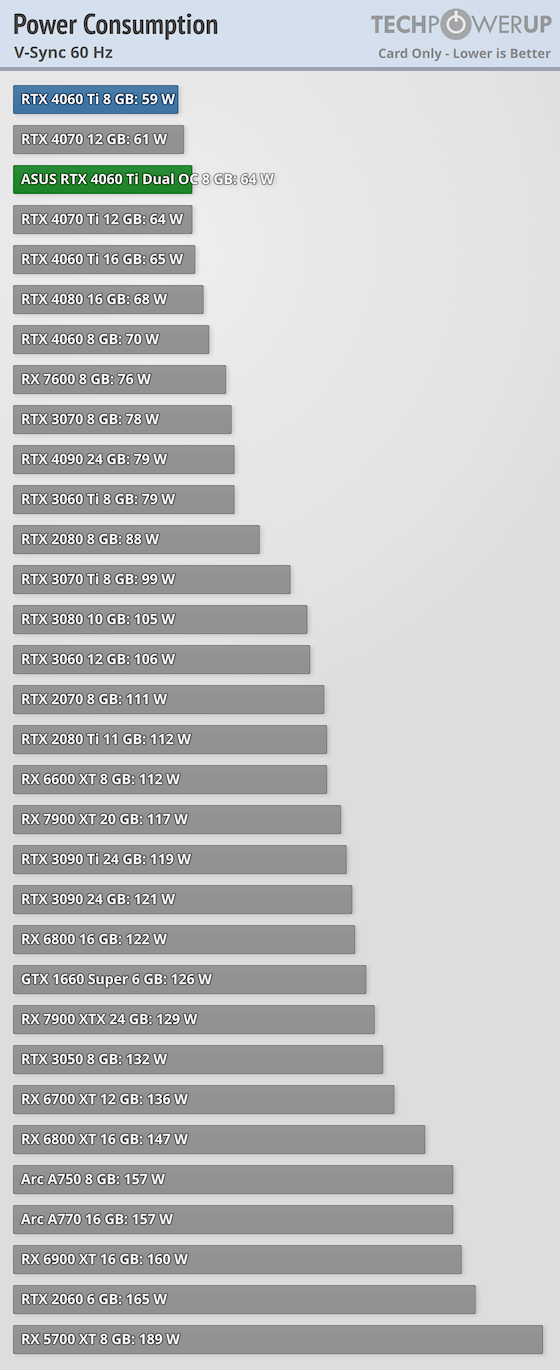

- V-Sync: If you don't need the highest framerate and want to conserve power, running at 60 FPS is a good option. In this test, we run Cyberpunk 2077 at 1920x1080, capped to 60 FPS. This test is also useful in testing a graphic card's ability to react to situations with only low power requirements. For graphics cards that can't reach 60 FPS at 1080p, we report the power draw at the highest achievable frame rate.
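The V-Sync test above caps Cyberpunk 2077 at 60 FPS. The reason a cap can save power is simple arithmetic: if the GPU finishes a frame faster than the frame budget (1000 / 60, about 16.7 ms), it sits idle for the rest of that interval. Below is a simplified sketch under loud assumptions: a fixed, hypothetical render time per frame, and the idea that idle time only saves power if the card actually drops clocks/voltage while waiting, which is exactly the behavior being argued about for the 7900 XTX. `effective_fps` and `gpu_busy_fraction` are illustrative helpers, not part of any tool.

```python
from typing import Optional


def effective_fps(render_ms: float, fps_cap: Optional[float]) -> float:
    """Frame rate actually delivered: the cap, unless the GPU can't reach it."""
    uncapped = 1000.0 / render_ms
    return uncapped if fps_cap is None else min(uncapped, fps_cap)


def gpu_busy_fraction(render_ms: float, fps_cap: Optional[float]) -> float:
    """Share of wall-clock time spent rendering; the rest is idle waiting for the cap."""
    return min(1.0, effective_fps(render_ms, fps_cap) * render_ms / 1000.0)


if __name__ == "__main__":
    # Hypothetical card that needs 5 ms per frame at full clocks.
    for cap in (None, 144.0, 60.0):
        fps = effective_fps(5.0, cap)
        busy = gpu_busy_fraction(5.0, cap)
        print(f"cap={cap}: {fps:.0f} FPS, GPU busy {busy:.0%}")
```

In this model an uncapped easy game keeps the GPU 100% busy, while a 60 FPS cap leaves it rendering only about 30% of the time. A card that can't reach the cap (e.g. 20 ms per frame) stays fully busy, which lines up with TechPowerUp reporting such cards at their highest achievable frame rate.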

Also, the XTX isn't exactly inefficient. It blows the pants off a 3090 Ti, with a lot less power. Nvidia's Ada Lovelace cards were certainly killing it on performance per watt, but AMD still made some shit happen. I'm interested to see how efficient the 7700 and 7800 are. Last gen, their 6800 was really efficient; the RTX 4070 is only a little better.

As for why the 7900 XTX uses a fair chunk more power when V-synced or when running a really easy game engine: who knows? It could be a fundamental limitation of the architecture. It could be something they overlooked when designing the clock generators etc. (there seems to be chatter around the net about RDNA3 having some weirdness above 2300 MHz and/or if there is too much difference between max and min clocks, sort of underlined by how it only hits 3 GHz at pretty low gaming temps).

Based on recent driver releases, it seems they still have some internal learning to do about their own architecture. The Last of Us and Forza Horizon weren't performing correctly. Now they are, and even a little better than we might have thought. The 1% lows they get in a few games are pretty remarkable. I wonder if that may be related to the higher average power usage.


Huh? The 6800 and 6900 are two of the worst cards in the chart.


The 4080 and 4090 sip power. It's your chart.

The 3090 is close to the 7900 XTX - I guess that's bad compared to the uplift in performance for the 7900 XTX. The 4080 is almost 1/2 the power of the 7900 XTX; put the other way, the 7900 XTX draws almost double. That pretty much matches the video I posted in this thread.

I'm just observing out loud - I'd definitely want to undervolt this (7900 XTX) card - if only to lower temps and possible/potential noise.

Edit: I'm not criticizing you - just questioning, or challenging, your conclusion about the 6000 series, since I don't see how you (and the other guy) reached virtually the same conclusion. I must be missing something, because my eyes tell me something different.

I appreciate the post - your explanation of the other 'stuff' is interesting - and I never thought of using vsync and limiting frames. But many gamers don't like to use vsync, though?

It's an interesting topic in itself. I don't know much about it; I just know it's often used to eliminate/avoid tearing, and now there's G-Sync for Nvidia and FreeSync for AMD cards. Is one 'better' than the other? FreeSync is/was an open standard, most monitors have it, and Nvidia cards can use it, too. But G-Sync was closed, i.e. Nvidia cards can use variable refresh rate on displays with G-Sync, but AMD cards might not?

It's worth clarifying, because I will probably stick to a TV for my display, and I probably want FreeSync regardless of whether I get an AMD or Nvidia card?
