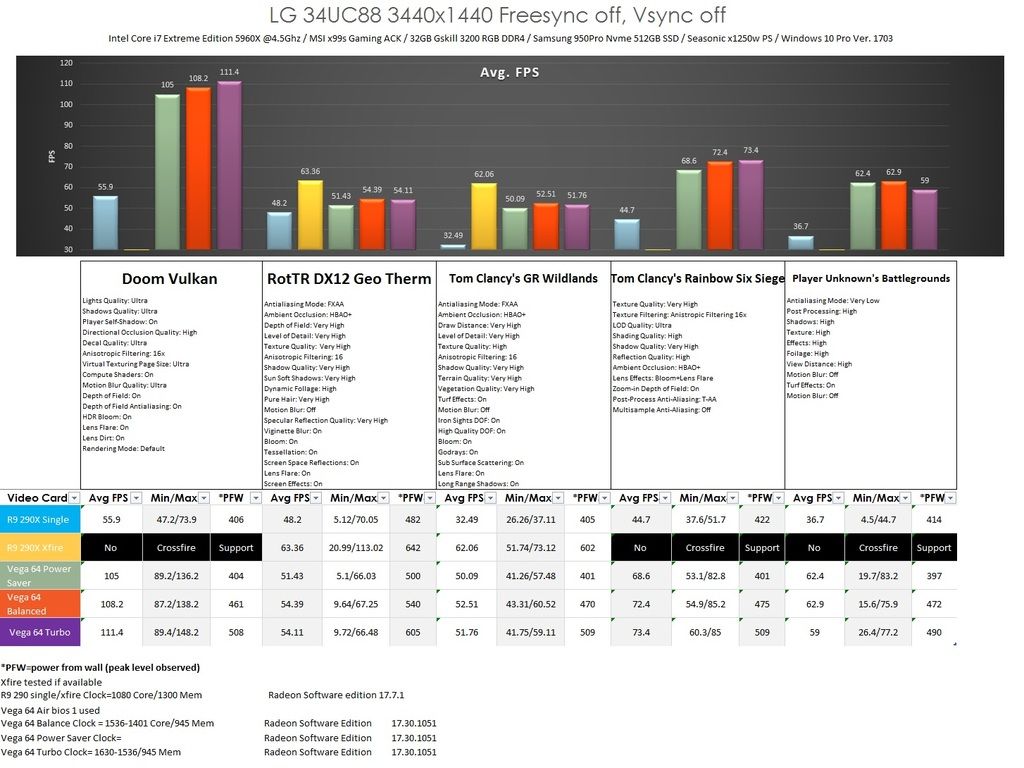

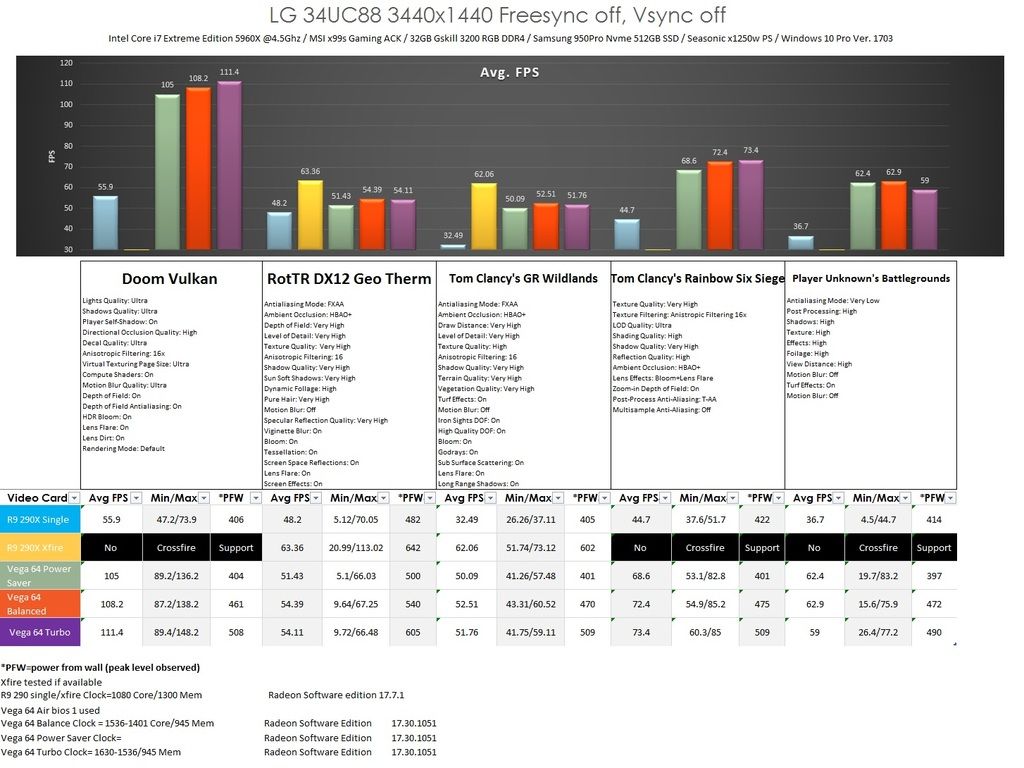

I finally installed the card tonight, after benchmarking my old 290Xs in crossfire so I could see what I got myself into. I also wanted to share my results on an Ultrawide, since there aren't many results posted for those of us who own one. This should help anyone who is curious what Vega 64 can do in a few titles at the moment. Sorry for the crude chart, but it should give an idea of the frame rates and the power draw I measured at the wall.

What have I learned from this? Well, it looks like it's true that balanced mode is the best setting to use for the most part. And for the record, I did not fuss with power limits or anything like that. I kept everything at the default settings to show the performance without any tweaks. These aren't the best drivers; they're not called beta for nothing. I can see they concentrated on a few games, and others, such as Tomb Raider, really need work.

This card is in dire need of a water block. Heat wasn't that bad really, it reached a max temp of 82°C, but I'd rather keep the clock speed at the ceiling for the setting I chose, not have it bounce all over the place. As expected, the power draw is not spectacular. AMD hasn't been great in this regard ever since power usage became part of the GPU discussion some time ago. It was nice when it was all about brute force and who gives a shit how much power it sucks. Well, since my 290X was another power-hungry GPU, it's good to see that Vega 64 at least doesn't top the power draw I got with the 290Xs in crossfire. Speaking of crossfire, damn, I wish recent games would support the damn option. Looking at the graph, I could have kept going with my almost 4-year-old cards.

Now, I did come across coil whine. I can happily say it only happened during the loading intro screen for Doom, as it was running at 5400 fps. Besides that, I never experienced coil whine, and it's not even close to what the 290Xs had. Fan noise is a big improvement for me compared to the 290X OEM fan. It wasn't a distraction at all. Thank god I watercooled the 290Xs soon after installing them. Even though Vega's fan doesn't bother me, I still want it watercooled.

So am I happy with the purchase? Yes and no. The plus is that I can game on one card with great average frame rates and minimum frame rates that stay within the LG's Freesync range, with everything cranked to boot. PUBG looks so good on high, and it's now thankfully playable instead of needing all low settings. That's why I really wanted to upgrade: to stay within the monitor's Freesync range, even after modding it to a range of 35-75 instead of 55-75 without flicker. Tomb Raider has a deceptively low recorded minimum because there is a one-second freeze during the benchmark that of course gets recorded. If I had recorded the score from a different one of the benchmark's 3 scenes it would have looked better, but that scene seems to be the norm for testing. With that said, oh boy did I miss Freesync during the testing. Watching the benchmarks in between watching the Kill A Watt meter, I couldn't stand the tearing and perceived stuttering. I can't live without Freesync. I've even debated upgrading from the 75Hz LG to one of those Korean 100Hz panels. It looks like Vega can make use of some frames above 75Hz, but not by much in some games. Hopefully that improves soon.
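For anyone wondering what the modded range actually buys you, here is a rough sketch in Python. The two ranges (55-75 and 35-75) are the ones mentioned above; the function name and the sample frame rates are made up purely for illustration and are not results from my charts.

```python
# Hypothetical helper: the two ranges come from the post, everything else is illustrative.
UNMODDED_RANGE = (55, 75)  # Freesync range before the mod, in Hz
MODDED_RANGE = (35, 75)    # Freesync range after the mod, in Hz


def classify(fps, freesync_range):
    """Say whether a frame rate lands below, inside, or above a Freesync range."""
    low, high = freesync_range
    if fps < low:
        return "below"
    if fps > high:
        return "above"
    return "inside"


# Placeholder minimum frame rates, NOT measured values.
sample_minimums = [30, 42, 60, 80]

for fps in sample_minimums:
    print(fps, classify(fps, UNMODDED_RANGE), classify(fps, MODDED_RANGE))
```

The point is just that a minimum in the 35-54 fps band drops out of the 55-75 range but still lands inside the modded 35-75 one.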

The no part of the equation is that I wish crossfire was properly supported. With Freesync and the results of the benchmarks, I can clearly see that two 290Xs can beat a single Vega 64. That saddens me. It's great that I get better performance with a single Vega, but come on! I really thought Vega would beat a crossfired 290X setup, especially when I paid the same for a pair of 290s as for just one Vega. I guess that's a no for the moment on an Ultrawide. The other downside is no DVI for my Korean 1440p secondary monitor. Sure, I don't overclock it to 120Hz anymore, but now it's dark on the wall above my ultrawide monitor. Guess I'll have to hunt down a dual-link DVI to HDMI cable.

It's a damn shame that more people can't own one, with all the BS going on with price gouging and miners taking most of the limited stock again. Whether or not Vega 64 gets mocked for not beating a 1080 Ti and for going back and forth with a 1080 while using more power, we should all have the right to at least take a chance on buying one and discuss what we bought, instead of bitching about availability and rising prices.

Now just waiting for a waterblock and better drivers.

