Amazing. They really have to dangle DLSS 3 over your head like jingling keys, because why would you buy this over any other card that can be had for $400 right now?

It's really not all that much better than a 3060, and you can get something like a 6800 for that price. (Edit: not quite. Still in the high $400s.)

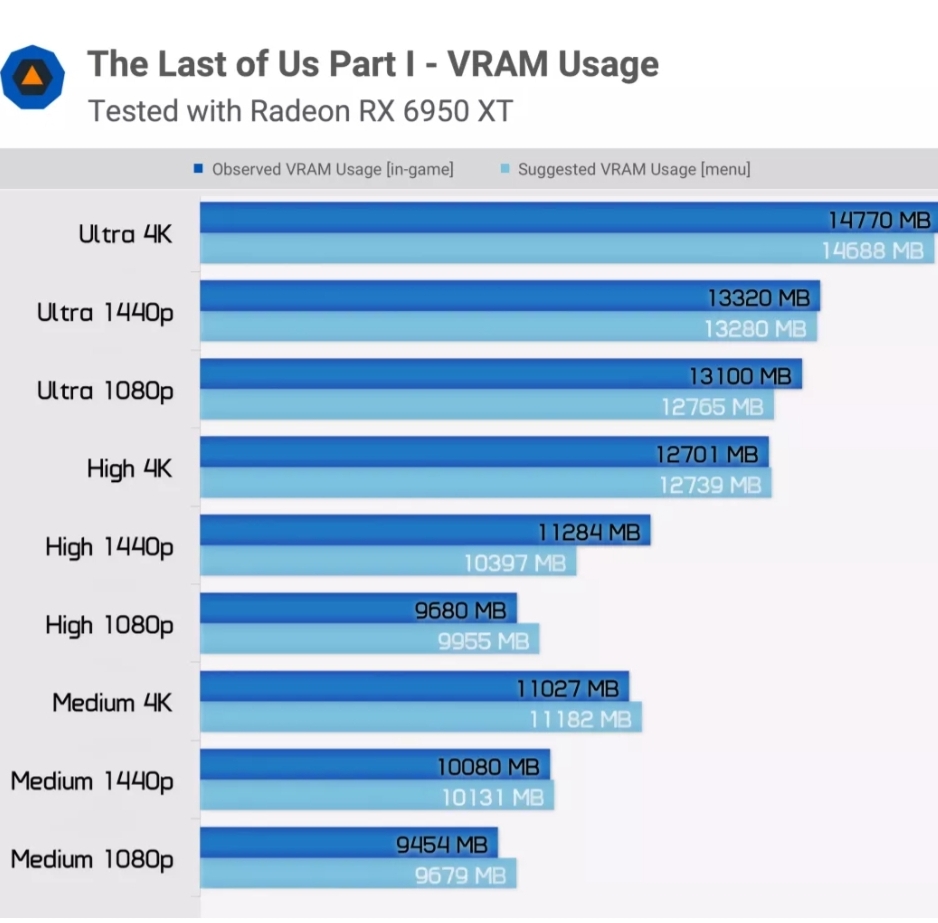

Yeah, the slides Nvidia put out are rather telling. The proof will be in the pudding, aka test results, but we have to wait until July for the 4060 Ti 16GB vs 8GB vs 4070 12GB comparisons. $500 for a 16GB 4060 Ti is just silly. But hey, it only uses 160W of power! Efficiency!

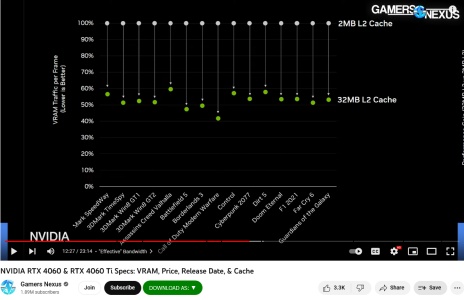

GN has a decent video on this news. Starting about 7 minutes in, Steve talks about memory bus, cache, and bandwidth. Around 11min30s he covers Nvidia's "effective bandwidth" claims. This Nvidia slide, via GN's video, is their case for why a 128-bit bus isn't a big deal: the 32MB L2 cache.

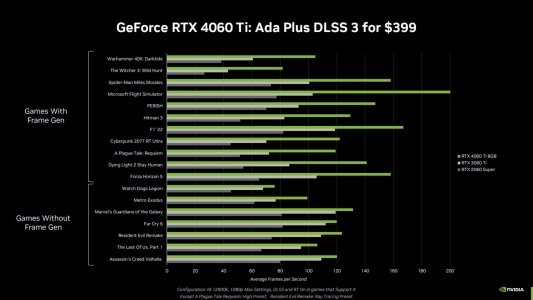
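
For anyone who wants to sanity-check the bus-width math themselves, here's a rough Python sketch. The function names are made up for illustration. The 18 Gbps per-pin data rate is my assumption, not something from the slide, and the "effective bandwidth" formula is a deliberately simplified model (every L2 hit is treated as traffic that never touches VRAM), not necessarily how Nvidia derives its number.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Raw memory bandwidth in GB/s: (bus width in bytes) * (per-pin data rate in Gbps)."""
    return bus_width_bits / 8 * data_rate_gbps


def effective_bandwidth_gbs(raw_gbs: float, l2_hit_rate: float) -> float:
    """Simplified model: if a fraction of requests is served from L2,
    only the remaining fraction has to go out over the memory bus."""
    if not 0.0 <= l2_hit_rate < 1.0:
        raise ValueError("l2_hit_rate must be in [0, 1)")
    return raw_gbs / (1.0 - l2_hit_rate)


if __name__ == "__main__":
    # Assumed figures: 128-bit bus, 18 Gbps GDDR6 (hypothetical input, not from the slide).
    raw = peak_bandwidth_gbs(128, 18.0)
    print(f"raw: {raw} GB/s")
    # Hypothetical 50% L2 hit rate, purely to show how the multiplier works.
    print(f"effective at 50% hits: {effective_bandwidth_gbs(raw, 0.5)} GB/s")
```

The takeaway is that the "effective" number depends entirely on the hit rate you plug in, and that will vary by game and resolution, which is why the real test results matter more than the slide.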

This slide (via VideoCardz) is pure marketing to make you ignore that, without frame generation, this ain't much of an upgrade over a 3060 or 2060 Super, certainly not any 8GB versions, for the same price 2-4 years later.
