IdiotInCharge

NVIDIA SHILL

- Joined: Jun 13, 2003
- Messages: 14,675

Any GPU will be 'hot' without proper cooling.

Interesting, a 3-slot card because it is really "hot"?

NVIDIA Ampere with hot memory and even the board partners are still in the dark


Good thing I went with an 850 watt PSU for my recent build. I almost went lower.

For me, I would use it because I don't like adapters. Weird, I know, but I'll make a custom cable before using one.

According to VCZ, it's FE only, and Nvidia is including an adapter for existing cables.

Why even bother using the 12-pin...


If you are able to hold off on a purchase, do so; you will be happier waiting to see how the launches play out. An additional bonus is that you could wait until there is actual supply and go with the cards that rock the benchmarks in the games that interest you. You won't be saying "I wish I'd bought day 1" again like with the 1080 Ti; that anomaly happens once in your lifetime.

Damn, that 3090 really looks juicy. I usually wait to see what the requirements for the next Battlefield are. Maybe GTA 6 will be a killer app for this?

I'm sort of worried if I buy into this card now, in a year or two there will be games built for RTX,

Three times in my lifetime: 9700 Pro, 8800 GTX, and the 1080 Ti.

I can see that. I remember them showing it without ray tracing, running just fine on a 1080 Ti, for the initial play demo. With the most recent play sessions described as struggling with ray tracing on a 2080 Ti, it's clear it will need some serious power, even with optimization. I look forward to seeing how far behind Ampere AMD's ray tracing implementation is. Common sense says they are at a deficit; the question is just how far. I wouldn't be surprised if they were a step behind Turing.

I suspect that Cyberpunk 2077 will be used as the "game" to promote Ampere's performance.

An 850W minimum would certainly axe the majority of SFF builds.

They probably do this for people who buy crappy 850-watt PSUs for $50.

Why would you need an 850W minimum? Let's say 400W worst case for the GPU, 200W for the CPU, and maybe 50W at most for everything else at peak usage. Unless you run applications that stress an overclocked Intel CPU and the GPU to 100% at the same time, I don't see a reason to require 850W.
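The arithmetic in the post above can be checked quickly. This is a minimal Python sketch, assuming the poster's own worst-case estimates (400 W GPU, 200 W CPU, 50 W for everything else) as sustained draw; it does not model short transient spikes or differences in PSU quality, which a vendor's minimum recommendation presumably has to cover.

```python
# Rough PSU headroom check using the worst-case figures from the post above.
# These wattages are the poster's estimates, not measured values.
COMPONENT_WATTS = {
    "gpu": 400,   # worst-case GPU draw (poster's estimate)
    "cpu": 200,   # heavily loaded, overclocked CPU (poster's estimate)
    "rest": 50,   # drives, fans, RAM, motherboard (poster's estimate)
}


def psu_load_fraction(psu_watts: int, components: dict[str, int]) -> float:
    """Return total sustained draw as a fraction of the PSU's rated output."""
    total = sum(components.values())
    return total / psu_watts


if __name__ == "__main__":
    total = sum(COMPONENT_WATTS.values())
    for psu in (650, 750, 850):
        frac = psu_load_fraction(psu, COMPONENT_WATTS)
        print(f"{psu} W PSU: {total} W draw = {frac:.0%} of rating")
```

At the poster's worst case of 650 W, an 850 W unit would sit at roughly 76% of its rating.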


Theoretical - yes.

Any GPU will be 'hot' without proper cooling.

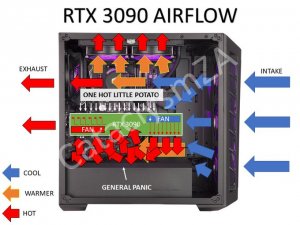

Which way does the back fan blow? Toward the RAM on the motherboard, or through the fins, blowing hot air into the GPU fan side? This design is not making much sense, at least to me. If this cooler costs $150 and removes 350W+, what can AIBs improve upon cooling-wise? They can't really make it bigger; water cooling is the only way I see it being improved upon. My first thought is that everything else from AIBs may be less than or equal to it, but not better. As for custom boards with more traditional cooling: if that were better, I don't think Nvidia would have wasted their time on this design. Only a few more days before we get some better answers.

It looks like the fans blow into the heatsink from each side, and the ‘X’ pattern of the fins channels the heat away from the GPU into the surroundings, meaning 400W of heat dumped into your case. Toaster time.

I could be wrong of course.

I just thought of something with the new adapter. My PSU uses cables with capacitors. Would using a cable or adapter without capacitors potentially cause any issues with my PSU?

GamesBeat: Is it a good guess that you’re going to reveal Ampere chips for games and desktops on September 1?

Huang: Well, on September 1, I’ll have some really exciting news to tell you. But I don’t want to ruin it for you. I have to have some surprises for you. You’re hard to surprise. I’ve gotta surprise you.

GamesBeat: That must feel good because it’s a validation of the AI strategy, the data strategy.

Huang: The AI strategy and the datacenter strategy are working out well. We were right that AI processing is going to require acceleration, and the Ampere architecture is the biggest generational leap we’ve ever had. It was a home run.

I'm going with an expansion of DLSS. They may not call it 3.0, but it's probably more about it being available all the time.

Biggest generational leap in AI processing? What does that mean to us?


Jesus fuck, no they won't. The only people who believe that are the jealous fucks who can't afford one themselves. This is brought up every time a refresh comes out, and I don't see the outrage. People are perfectly fine buying the top-tier card at release. People would never upgrade if they worried about what is coming next.

Big Boi: https://videocardz.com/newz/nvidia-geforce-rtx-3090-graphics-card-pictured

Kind of seems like Samsung 8nm is going to produce some Fermi-like wattages and temps. At least the performance will likely be insane, but I guarantee everyone who buys one is going to be pissed about a 7nm TSMC refresh next year.


These furnace TDPs worry me. I mean, is the 3080 actually faster than a 2080 Ti in the same power envelope? Or is it just a 2080 Ti with faster RAM? And don't even get me started on the 3090. For people in temperature-limited ITX setups, I really wonder what we are looking at once you account for reducing the power limit to accommodate weaker cooling. Maybe 10-15% faster than a 2080 Ti?
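One rough way to frame the "same power envelope" question is to compare performance per watt. This is a minimal Python sketch, assuming performance is expressed relative to a baseline card (baseline = 1.0). The 1.3x figure in the usage is a hypothetical placeholder, not a benchmark; 250 W stands in for the 2080 Ti's reference board power, and 320 W is the speculated figure quoted in this thread.

```python
# Back-of-envelope perf-per-watt comparison. Inputs are placeholders:
# relative performance is normalized so the baseline card = 1.0.


def relative_efficiency(perf_new: float, watts_new: float,
                        perf_old: float, watts_old: float) -> float:
    """Performance-per-watt of the new card divided by that of the old card.

    A result above 1.0 means the new card does more work per watt.
    """
    return (perf_new / watts_new) / (perf_old / watts_old)


def perf_at_power_cap(perf: float, rated_watts: float, cap_watts: float) -> float:
    """Pessimistic estimate of performance after lowering the power limit.

    Assumes performance scales linearly with board power. Real GPUs usually
    lose less than this, because lower clocks also allow lower voltage and
    dynamic power falls faster than clock speed.
    """
    return perf * min(1.0, cap_watts / rated_watts)


if __name__ == "__main__":
    # Hypothetical: a card 30% faster than a 250 W baseline, rated at 320 W.
    eff = relative_efficiency(1.3, 320, 1.0, 250)
    capped = perf_at_power_cap(1.3, 320, 250)
    print(f"perf/W vs baseline: {eff:.3f}x")
    print(f"capped to 250 W, linear-scaling floor: {capped:.3f}x baseline")
```

Under the linear-scaling floor, a card rated at 320 W would need to beat a 250 W baseline by more than 320/250 = 1.28x at stock just to stay ahead once power-limited to 250 W.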

Keep buying 225W+ GPUs and you will have heat. If it bothers you that much, liquid cool or downgrade your GPUs in the future.

Same for me. I'm tired of the space heater under my desk, solely because of the GPU.

Went from a 220W GTX 570 to a 250W GTX 780, then to dual 780s in SLI, and now a single 250W 980 Ti.

I was hoping that the upper-range offerings of this new 3000 series would peak around 200-220W TDP for the 3080 Ti/3090, so I'm a bit shocked to see them weighing in at a speculated 320-350W.

If the TDP ratings turn out to be true, then I may just forgo Nvidia and get an AMD next-gen card, if their Navi2 thermals and TDP are substantially better.

You might as well just go with a less expensive Nvidia product then. If AMD's TDPs are that much lower, their performance will be even worse.

It should be said that unless the tubing for the liquid cooling goes into another room, it's going to dump the same heat into the same room, just more efficiently.
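The point about liquid cooling follows from energy conservation: at steady state, the heat has to go somewhere. This is a minimal Python sketch, assuming essentially all power drawn ends up as heat in the room; the wattages are figures quoted in this thread, and the BTU/h conversion is included only because room cooling is often sized in that unit.

```python
# Steady-state heat output of a PC in BTU/h, the unit room air
# conditioners are commonly rated in.
WATTS_TO_BTU_PER_HOUR = 3.412142  # 1 W is about 3.412 BTU/h


def room_heat_btu_per_hour(system_watts: float) -> float:
    """Heat released into the room for a given sustained power draw.

    Essentially all electrical power a PC draws ends up as heat in the room.
    Air vs. liquid cooling changes how well heat is pulled off the components,
    not how much heat reaches the room (unless the radiator is elsewhere).
    """
    return system_watts * WATTS_TO_BTU_PER_HOUR


if __name__ == "__main__":
    # GPU board power figures mentioned in this thread.
    for gpu_watts in (250, 320, 350):
        print(f"{gpu_watts} W GPU -> {room_heat_btu_per_hour(gpu_watts):.0f} BTU/h")
```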