WARNING: armchair, paper-based analysis ahead

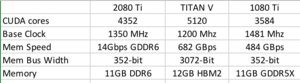

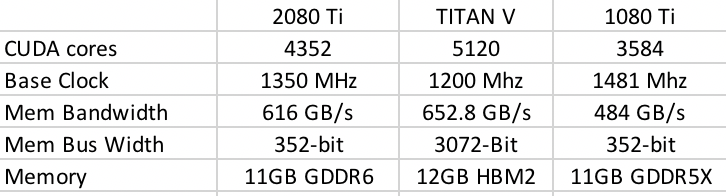

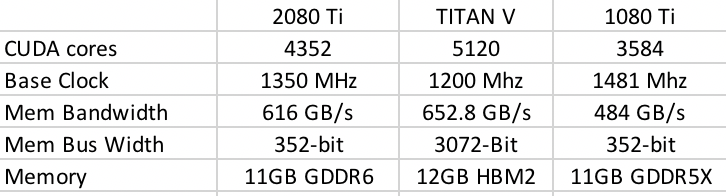

Since there are no gaming benchmarks yet, I was curious whether the TITAN V is a good approximation of the gaming performance we can expect from the 2080 Ti... I was somewhat shocked that, on paper, the TITAN V bests the 2080 Ti in a few pretty substantial ways:

My ghetto chart below:

(source1, source2)

So yeah, going from $3000 to $1200 you "save" a whole $1800, but if the TITAN V gives us any indication, gaming performance over the 1080 Ti will be in the 25% range (if we're lucky).
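To put the "savings" math in numbers, here's a quick back-of-the-envelope sketch in Python. It only uses the prices and the ~25% estimate from above, and it assumes (big if) that the TITAN V and the 2080 Ti end up at roughly the same gaming performance, with a 1080 Ti normalized to 1.0. The function names are made up purely for illustration.

```python
# Back-of-the-envelope sketch using only the figures quoted in the post.
# Assumption (hypothetical): TITAN V gaming performance approximates the 2080 Ti,
# and both land ~25% above a GTX 1080 Ti baseline normalized to 1.0.

TITAN_V_PRICE = 3000        # USD, as quoted in the post
RTX_2080_TI_PRICE = 1200    # USD, as quoted in the post
UPLIFT_OVER_1080_TI = 0.25  # optimistic estimate from the post


def savings(expensive: float, cheap: float) -> float:
    """Dollar difference between two cards."""
    return expensive - cheap


def dollars_per_perf(price: float, relative_perf: float) -> float:
    """Price divided by performance relative to a 1080 Ti (1.0 = 1080 Ti)."""
    return price / relative_perf


relative_perf = 1.0 + UPLIFT_OVER_1080_TI  # 1.25x a 1080 Ti under the assumption above

print(f"Savings: ${savings(TITAN_V_PRICE, RTX_2080_TI_PRICE):.0f}")
print(f"TITAN V: ${dollars_per_perf(TITAN_V_PRICE, relative_perf):.0f} per 1080 Ti-equivalent")
print(f"2080 Ti: ${dollars_per_perf(RTX_2080_TI_PRICE, relative_perf):.0f} per 1080 Ti-equivalent")
```

If both cards really do perform about the same in games, the 2080 Ti works out to $960 per "1080 Ti worth" of performance versus $2400 for the TITAN V, which is the whole "save $1800" point in one line.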

We are missing some data on ROPs and texture units for the 2080 Ti...

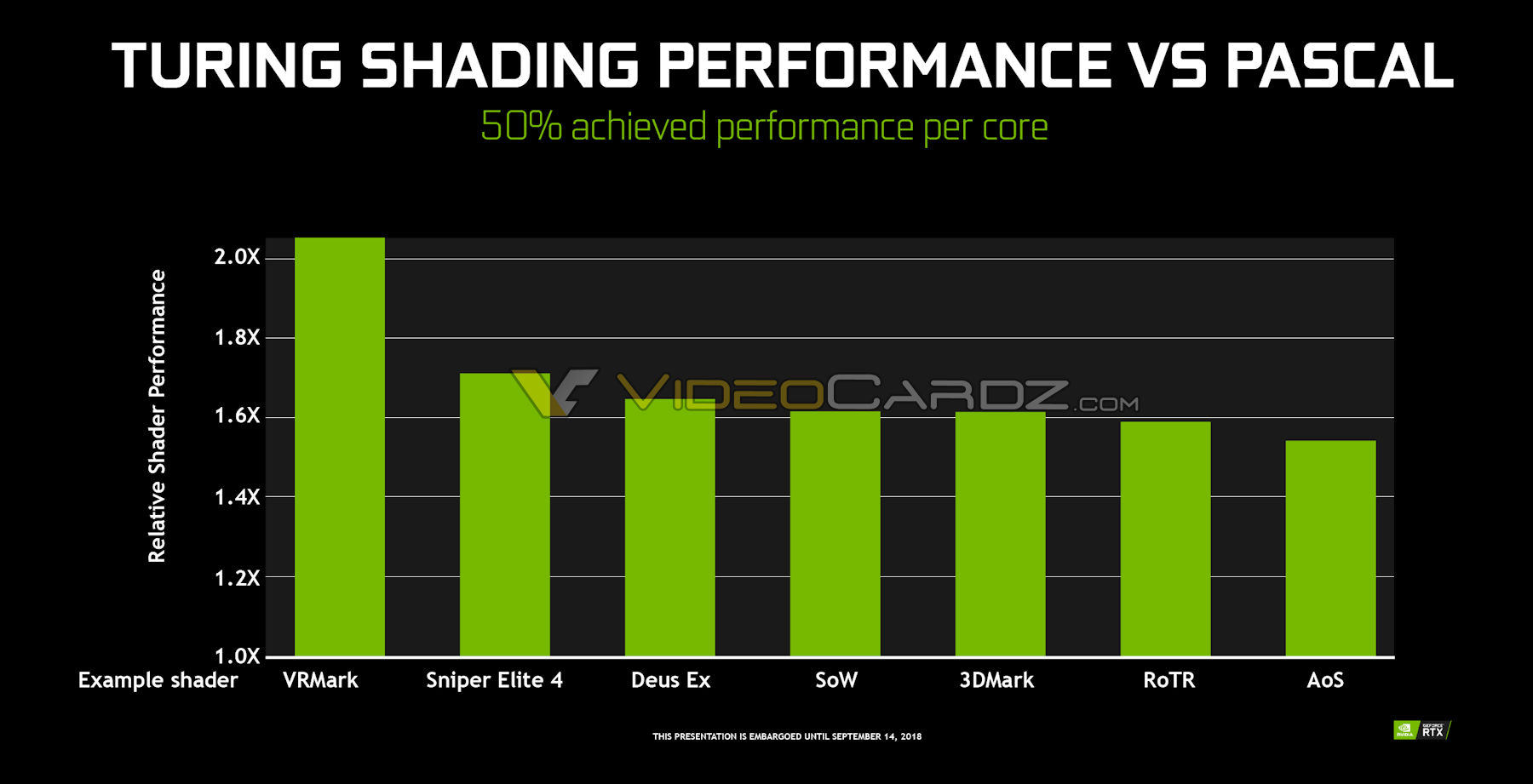

Thoughts? Is it all about the "RTX-enabled" games for now?

![[H]ard|Forum](/styles/hardforum/xenforo/logo_dark.png)