DX12 runs far better than 11 here: it's smoother, with fewer hiccups. VRAM usage is outrageous, though.

How much do options such as HBAO+/VXAO/etc. affect memory?

Cheers

I bought the UWP version. I don't think it ever got the VXAO patch; I tried looking for patch notes for it, but there was nothing to be found, lol. I haven't actually found anyone else with the UWP version to ask.

Even benchmarking is a pain in the ass: the game uses double buffering by default, so unless I set my refresh rate to something high, it doesn't even stress the GPU.
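The double-buffering point can be illustrated with a simplified model. It assumes vsync is on, every frame takes the same render time, and the GPU cannot start the next frame until the buffers flip. Under those assumptions each finished frame waits for the next refresh, so the frame rate snaps to the refresh rate divided by a whole number, and the GPU sits idle for the rest of each frame period. The function names are hypothetical and only for illustration. This is a rough sketch, not how the game actually schedules frames.

```python
import math


def double_buffered_fps(render_ms: float, refresh_hz: float) -> float:
    """Hypothetical helper: effective fps with vsync + double buffering.

    Simplified model: constant render time, and a finished frame is only
    shown at the next vblank, so the frame period is rounded up to a whole
    number of refresh intervals.
    """
    interval_ms = 1000.0 / refresh_hz
    intervals = max(1, math.ceil(render_ms / interval_ms))
    return refresh_hz / intervals


def gpu_busy_fraction(render_ms: float, refresh_hz: float) -> float:
    """Hypothetical helper: share of each frame period the GPU spends rendering.

    In this model the GPU idles for the rest of the period while it waits
    for the buffer flip.
    """
    period_ms = 1000.0 / double_buffered_fps(render_ms, refresh_hz)
    return render_ms / period_ms


if __name__ == "__main__":
    # Compare a 60 Hz and a 144 Hz display for a few fixed render times.
    for hz in (60, 144):
        for ms in (10.0, 20.0):
            fps = double_buffered_fps(ms, hz)
            busy = gpu_busy_fraction(ms, hz)
            print(f"{hz} Hz, {ms} ms/frame -> {fps:.0f} fps, GPU busy {busy:.0%}")
```

In this model, a 10 ms frame is capped at 60 fps on a 60 Hz display, with the GPU busy about 60% of the time. At 144 Hz the same frame runs at 72 fps and the GPU is busy about 72% of the time. That is consistent with needing a higher refresh rate before the benchmark loads the GPU.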

You must be 1 of about 10 who bought that on UWP

Cheers

I have the UWP version. If you run it with all of the graphics settings at max on 3x 1080p, it'll stress any GPU. There's no way my old GTX 680s could handle this; a GTX 1080 does pretty nicely. Everything maxed in DX12 using FXAA at 5760x1080, it's pretty amazing.

Sorry for necroing this sticky, but a quick question about DX12 vs DX11 (I don't own any of the DX12 games currently available).

At the exact same settings, is there any difference between DX12 and DX11 in terms of visual quality?

Nope.

SLI/CFX for DX12 support. Async compute added in new patch.

[07-08-2016] PC Patch notes for patch 1.0.668.0 (Patch #7) :: Rise of the Tomb Raider General Discussions

As a note, using Very High textures with the 1070 didn't seem to affect performance at all; for the Nano, it significantly decreased performance in these benchmarks.

now swap the cards and rerun everything

VRAM being the culprit?

Yes, very much so; Very High textures eat a lot of VRAM. The 290X choked using Very High textures, while the Nano just slows down but is still smooth for the most part. On the 1070, it just improves the quality a little with no noticeable performance loss.

Those systems are pretty different and might show different results... And it might show that the 9590 is actually comparable to the i7, despite what others think.

Nope, too much of a pain for that. The benchmark results between the two machines mostly show it, plus DX12 is not helping AMD but doing the opposite.

Well, for that game and on those systems, that is the result. You can compare one system to the other. What it shows is that in this game DX12 improves performance somewhat over DX11 for Pascal, but not for GCN 1.2. Yes, I acknowledge that maybe with DX12 the CPU was less of a bottleneck for the 1070, which still proves DX12 is improving performance. If I downclocked my FX 9590, that might prove it or not; the same goes for the i7-6700K. Better yet, let Brent and Kyle plow through this and clearly review the results when they get to it.

It's funny how someone can post two different Fire Strike systems and everyone is all "but they are different systems!!", but this is cool...

I'm not so sure.

Running the benchmark on DX11 at 1080p with a 6600K @ 4.7GHz and an overclocked 980 Ti, there were a few short moments when all cores would max out and the framerate would stutter.

After changing to a 6700K @ 4.6GHz, all cores + HT cores still maxed out in the same place, but only for a fraction of the time, and the framerate looked smooth throughout.

DX11 can make use of 8 cores already.

Changing to DX12 will surely not help.

Well, this is not true. You might see some activity, but it does not work like that. There is no DX11 engine that can use more than 4-5 cores without a negative scaling impact...

Due to technical reasons, DX12 can use all the cores all the time and send data from every core to the GPU; this is the difference between the two APIs.

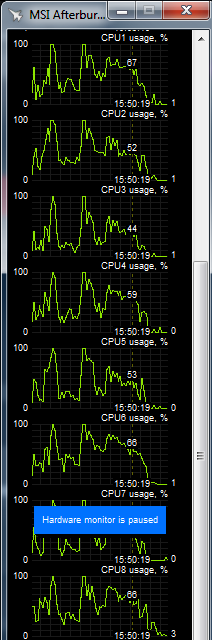

Verify for yourself. All 8 cores of my 6700K get used almost identically.

If you don't manage it, I'll post a CPU usage graph. Let me know.

Yeah, there are several games I have tested that peg an i5 in spots, including Rise of the Tomb Raider, Batman: Arkham Knight, Witcher 3, AC Syndicate and AC Unity.

Decided to post it anyway to save deviation

GPU use increased and it became smoother moving from a 6600K to a 6700K.

No negative scaling.

All "8" cores used optimally.

View attachment 5128

P.S. On Win7-64.

Corrected the image; the first one was with vsync on.

I changed from a 4-core to a 4-core + 4 HT CPU and saw a reduction in stutters, no negative scaling, and all 8 cores were well utilised when needed.

It is too technical to explain, but the DX11 limitations and your screenshot have nothing to do with each other. Unless you are running it on an 8-core AMD CPU or an 8-core/16-thread Intel CPU, you are not going to see the difference...

He stated his criteria; I showed that TR under DX11 can fulfil them.

To me, you are trying to show the difference but are not doing it right and cannot be bothered to do it right, so your examples are useless.

You should really just stop talking. There are DX11 games that will peg an i5, and that is a fact. And when the i5 is pegged, it can cause some hitching and slowdowns that are not there when using an i7.

Both my test systems were identical, except that the 6700K was 100MHz slower, which should have made it worse if only 4 cores mattered. But it was better.

Why are you talking about i5s? Maybe you should stop. My point was that the base systems are very different, and he's trying to show the difference but won't take the hour (at most) to swap video cards to make the testing more "scientific", for lack of a better term. I swapped CPUs to show differences (or lack thereof) in my AOTS test...

idk wtf you're talking about, but I'm giving up.

Drop the obtuse act. You said that there is no DX11 engine that can scale beyond 4-5 cores, and that is BS. There are some games that peg an i5 and will perform better with an i7. I see 70-80% CPU usage in parts of some games on my 4770K.
