Thanks for the detailed response!


```perl
###############
sub zfslib_val2kb {   #hide:
###############
    # Convert a zfs size value such as "23,2G" to KB (decimal multipliers)
    my $w1 = $_[0];

    $w1 =~ s/,/./;    # added: use a decimal point instead of a comma

    if ($w1 =~ /K/) { $w1 =~ s/K//; }
    if ($w1 =~ /M/) { $w1 =~ s/M//; $w1 = $w1 * 1000; }
    if ($w1 =~ /G/) { $w1 =~ s/G//; $w1 = $w1 * 1000000; }
    if ($w1 =~ /T/) { $w1 =~ s/T//; $w1 = $w1 * 1000000000; }
    if ($w1 =~ /P/) { $w1 =~ s/P//; $w1 = $w1 * 1000000000000; }

    return ($w1);
}
```

Before the fix:

```
NAME     USED   AVAIL  MOUNTPOINT  %
rpool    23,2G  15,3G  /rpool      39%
storage  5,62T  5,61T  /storage    50%
tank     17,3T  3,95T  /tank       15%!
vmstore  304G   107G   /vmstore    26%
```

After the fix:

```
NAME     USED   AVAIL  MOUNTPOINT  %
rpool    23,2G  15,3G  /rpool      39.7%
storage  5,62T  5,61T  /storage    50.0%
tank     17,3T  3,95T  /tank       18.6%
vmstore  304G   107G   /vmstore    26.0%
```

```
chmod -fvR A=everyone@:modify_set:file_inherit/dir_inherit:allow folder/name/path/
chmod -fvR A=user:root:full_set:file_inherit/dir_inherit:allow folder/name/path/
```

The added `s/,/./` line in `zfslib_val2kb` is needed because 'zfs list' outputs numbers with a _comma_, but Perl requires a _point_ to handle calculations correctly.
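Why the fix matters: Perl's numeric conversion stops at the comma, so "17,3" is read as 17 and "3,95" as 3. That is how tank's 18.6% turned into 15% (3 / (17 + 3)). Below is a minimal sketch of the corrected calculation. The helper names `size_to_kb` and `free_pct` are hypothetical. The formula for the % column, AVAIL / (USED + AVAIL), is inferred from the numbers in the tables above, not taken from napp-it's source.

```perl
use strict;
use warnings;

# Decimal multipliers to KB, as in zfslib_val2kb.
my %MULT = (K => 1, M => 1e3, G => 1e6, T => 1e9, P => 1e12);

# Hypothetical helper: "23,2G" or "23.2G" -> KB.
sub size_to_kb {
    my ($size) = @_;
    (my $v = $size) =~ s/,/./;    # locale decimal comma -> point
    die "unrecognised size: $size\n"
        unless $v =~ /^(\d+(?:\.\d+)?)([KMGTP]?)$/;
    my ($num, $unit) = ($1, $2);
    return $unit eq '' ? $num : $num * $MULT{$unit};
}

# Hypothetical helper: free space as a percentage of USED + AVAIL, one decimal.
sub free_pct {
    my ($used, $avail) = @_;
    my ($u, $f) = (size_to_kb($used), size_to_kb($avail));
    return sprintf '%.1f', 100 * $f / ($u + $f);
}

for my $row (['rpool', '23,2G', '15,3G'], ['tank', '17,3T', '3,95T']) {
    my ($name, $used, $avail) = @$row;
    printf "%-8s %s%%\n", $name, free_pct($used, $avail);
}
```

Normalising the separator before matching means the same code accepts both comma and point locales.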

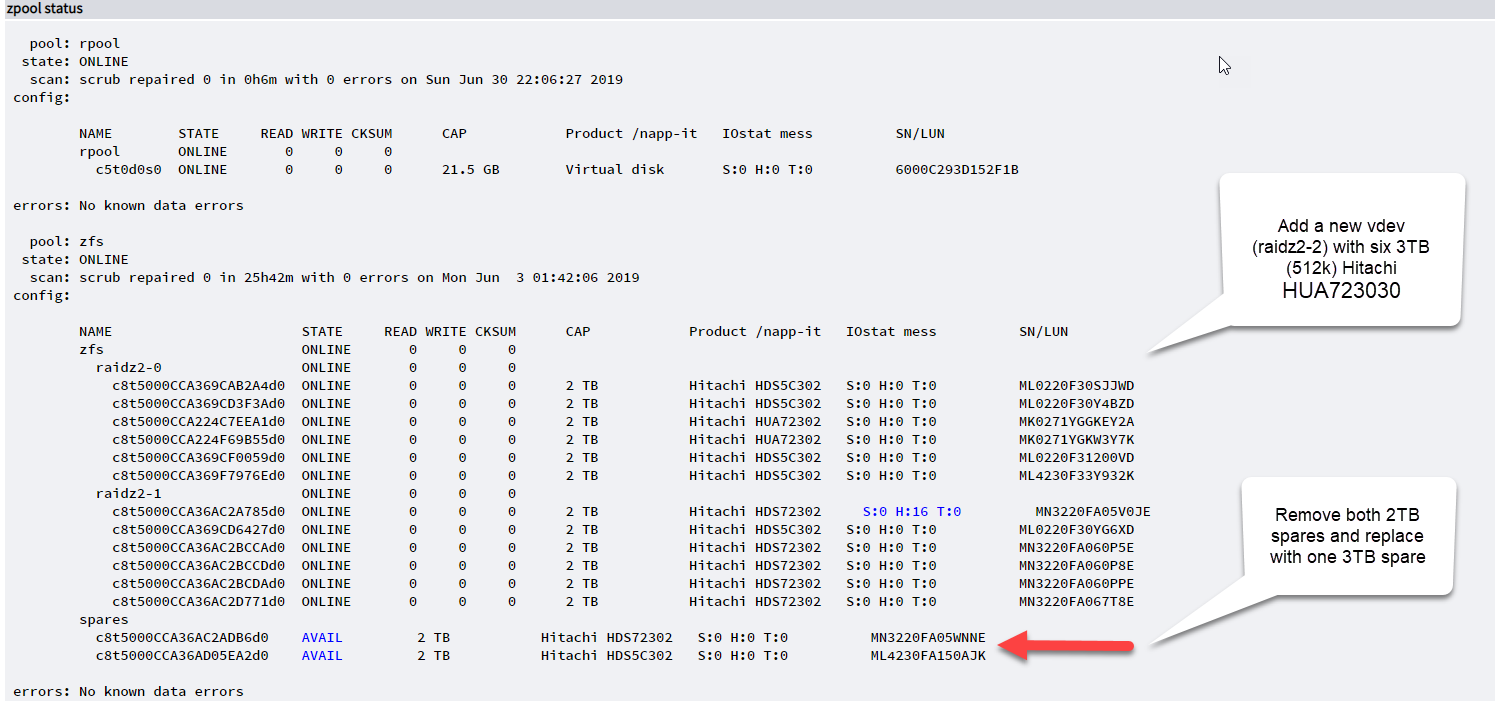

You can of course remove the hot spares and create a new vdev, but all modern disks are 4k disks. If you force 512B sectors (ashift=9), you will see performance degradation, and you will not be able to replace a faulted disk with a 4k one.

If you want to create such a pool, I would create a new pool from a vdev of 3TB disks (ashift=12), then copy the data over and add the 2TB vdevs, where you also force ashift=12 (ashift is a vdev property).
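ashift is the base-2 logarithm of the sector size ZFS uses for a vdev: 512 B gives ashift=9 and 4096 B gives ashift=12. The sketch below encodes the advice in this post. It never goes below ashift=12, even for real 512B disks, so that a 4k or 512e disk can later replace a faulted one. The helper names are hypothetical.

```perl
use strict;
use warnings;
use List::Util qw(max);

# ashift = log2(sector size): 512 -> 9, 4096 -> 12.
sub ashift_for {
    my ($sector_bytes) = @_;
    my $shift = 0;
    $shift++ while (1 << $shift) < $sector_bytes;
    die "not a power of two: $sector_bytes\n" unless (1 << $shift) == $sector_bytes;
    return $shift;
}

# Hypothetical policy from the post: at least ashift=12 for every vdev,
# higher only if a disk has larger physical sectors.
sub recommended_ashift {
    my @physical_sector_sizes = @_;
    return max(12, map { ashift_for($_) } @physical_sector_sizes);
}

print recommended_ashift(512, 512, 4096), "\n";
```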

Your pool will then have 28TB usable from 18 disks and need more than 100W of power.
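The 28TB figure matches one possible layout: three 6-disk RAID-Z2 vdevs, one of 3TB disks and two of 2TB disks. The thread does not state the actual layout, so treat this as an assumption. The sketch counts raw data capacity only, (disks - parity) x disk size, and ignores ZFS metadata and reserved space. `raidz_usable_tb` is a hypothetical helper.

```perl
use strict;
use warnings;

# Raw data capacity of RAID-Z vdevs: (disks - parity) * disk size.
# Ignores ZFS metadata, allocation padding and slop-space reservation.
sub raidz_usable_tb {
    my @vdevs = @_;    # each vdev: [number of disks, parity level, disk size in TB]
    my $total = 0;
    for my $vdev (@vdevs) {
        my ($disks, $parity, $size_tb) = @$vdev;
        die "a vdev needs more disks than parity\n" if $disks <= $parity;
        $total += ($disks - $parity) * $size_tb;
    }
    return $total;
}

# Hypothetical layout (not stated in the thread): 18 disks as three 6-disk RAID-Z2 vdevs.
my @layout = ([6, 2, 3], [6, 2, 2], [6, 2, 2]);
printf "%s TB usable\n", raidz_usable_tb(@layout);
```

For comparison, `raidz_usable_tb([6, 2, 8])` gives 32. A hypothetical single RAID-Z2 of six 8TB disks would therefore exceed that capacity with a third of the disks.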

If you intend to buy the disks new, I would probably avoid that many small disks and create a whole new pool from larger disks (6TB or probably 8TB) in a single RAID-Z2.

Performance degrades with 4k disks (512e disks are also 4k) and ashift=9.

There is no problem with real 512B disks and ashift=12, so try to create a new pool with all vdevs at ashift=12.

Nowadays I would not buy real 512B disks, only 512e (or 4k), and I would not buy small 3TB disks.
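The degradation with 512e disks and ashift=9 comes from partial-sector writes. A 512e disk reports 512-byte logical sectors but stores 4096-byte physical sectors. Any write that does not start and end on a 4096-byte boundary therefore forces the drive into a read-modify-write cycle. A minimal sketch of that alignment check, with hypothetical helper names:

```perl
use strict;
use warnings;

# Does a write of $length bytes at byte $offset need read-modify-write
# on a disk with $physical-byte physical sectors?
sub needs_rmw {
    my ($offset, $length, $physical) = @_;
    my $misaligned = $offset % $physical != 0
        || ($offset + $length) % $physical != 0;
    return $misaligned ? 1 : 0;
}

# Number of physical sectors a write touches.
sub physical_sectors_touched {
    my ($offset, $length, $physical) = @_;
    my $first = int($offset / $physical);
    my $last  = int(($offset + $length - 1) / $physical);
    return $last - $first + 1;
}

# A 512-byte block (possible with ashift=9) versus a 4096-byte block (ashift=12).
for my $w ([512, 512], [4096, 4096]) {
    my ($off, $len) = @$w;
    printf "offset %5d len %5d: rmw=%d sectors=%d\n",
        $off, $len, needs_rmw($off, $len, 4096), physical_sectors_touched($off, $len, 4096);
}
```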

Has anyone here ever built an SSD pool? With the much lower expected failure rate and higher scrub/resilver speeds, is it still worth going with raidz2 over raidz1?

Are the disks detected?

Have you enabled pass-through in 018 and not in 030?

Funnily, the Shield runs on a Linux kernel but doesn't support a Linux protocol like NFS, only the Windows one. Lol, makes total sense.

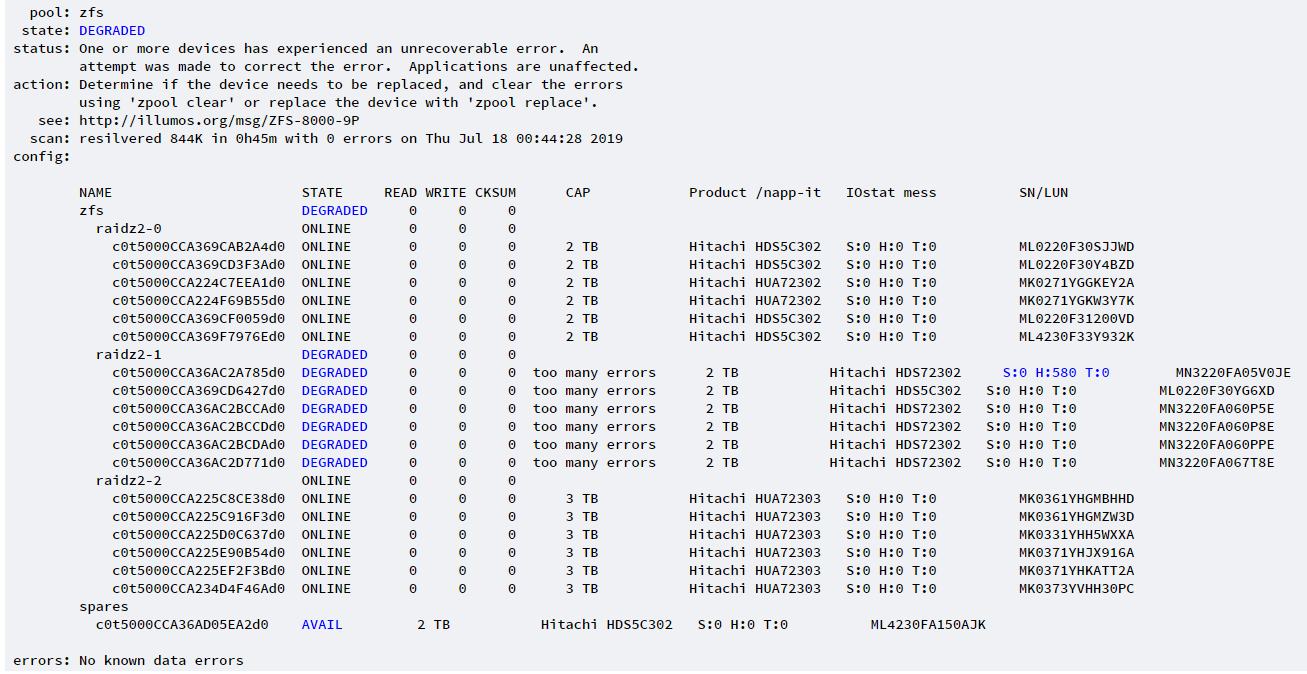

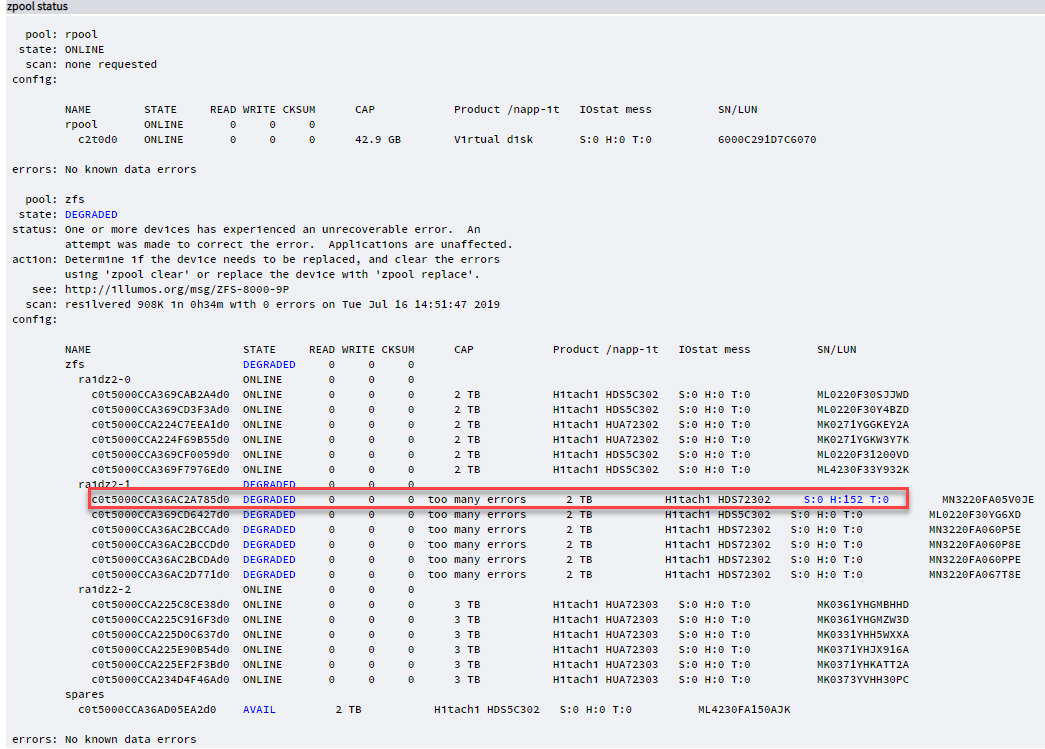

So basically I want to remove the hot spare and use it to replace the bad disk.

Use Pool > Clear to clear the "too many errors" state.

To finally remove the hot spare from the pool, use menu Disk > Remove.

Then replace: use menu Disk > Replace.
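From the shell, these steps roughly correspond to `zpool clear`, `zpool remove` (which can remove an unused hot spare) and `zpool replace`. That mapping onto napp-it's menus is an assumption, not taken from napp-it's source. The sketch only builds the command strings and does not run them. The helper name and the pool and disk names are hypothetical placeholders.

```perl
use strict;
use warnings;

# Hypothetical helper: build (not run) zpool commands roughly matching the
# menu steps. Assumes the hot spare is currently unused (AVAIL).
sub spare_swap_commands {
    my ($pool, $faulted, $spare) = @_;
    return (
        "zpool clear $pool",                     # Pool > Clear: reset error counters
        "zpool remove $pool $spare",             # Disk > Remove: drop it from the spare list
        "zpool replace $pool $faulted $spare",   # Disk > Replace: resilver onto the former spare
    );
}

# Pool and device names are placeholders.
print "$_\n" for spare_swap_commands('tank', 'c0t5d0', 'c0t9d0');
```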

Are the problem disks on the same power supply, backplane or HBA? Maybe that is the reason.

After you have replaced the disk with the hard errors, run a low-level/intensive test on it, e.g. with WD Data Lifeguard.

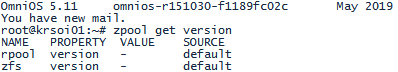

Pool version "-" is OK.

It is a synonym for OpenZFS pool v5000 with feature flags. There, individual features determine the differences, no longer a pool version number. The internal number 5000 was chosen mainly to make sure that Oracle's genuine ZFS will never come close to it with its own version numbers.
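A small sketch of how a script might interpret the pool version value, assuming it is read from something like `zpool get version <pool>`, where feature-flag pools show "-". The helper name `describe_pool_version` is hypothetical.

```perl
use strict;
use warnings;

# Hypothetical helper: interpret the value of the pool "version" property.
# Feature-flag pools show "-" (internally version 5000); legacy pools show a number.
sub describe_pool_version {
    my ($value) = @_;
    return 'feature flags (v5000)' if $value eq '-' || $value eq '5000';
    return "legacy pool version $value" if $value =~ /^\d+$/;
    die "unexpected version value: $value\n";
}

print describe_pool_version($_), "\n" for ('-', '28');
```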

What was the problem with replacing faulted <-> spare?

Usually a spare can replace a faulted disk but remains a spare.

The idea behind this is that you replace the faulted disk, then replace spare > new, and the spare remains a spare.

If you want to remove the spare property, first remove it, then replace.
