Dell 27 in IPS has sold like hot cakes

Doubt that their sales numbers can rival those of low cost 39"-40" VA 4k TVs

I actually wasn't telling you anything; I was asking you to back up your claim that they were produced in low numbers... which, based on your reply, I'm guessing you can't.

Prove me wrong. Show me the numbers of GPU sales per vendor from 1995-2000. Show me the market was 5 times larger than today, because we had about 7 different manufacturers back then (give or take, depending on the period).

2 vendors vs. roughly 7 means global unit sales would have had to be ~3 times today's to claim the economies of scale were the same. The GPU market moved more units in 2005 than it does today, but definitely not in 1995. Gaming was extremely small back then and there were 3x more vendors.
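The scale argument above comes down to simple division. A rough sketch, assuming an even split of sales between vendors (a simplification) and using only the vendor counts mentioned in the post, not real sales figures:

```python
def units_per_vendor(total_units: float, vendors: int) -> float:
    """Average units per vendor, assuming sales are split evenly."""
    if vendors <= 0:
        raise ValueError("vendors must be positive")
    return total_units / vendors


def market_multiple_needed(vendors_then: int, vendors_now: int) -> float:
    """How many times larger the old market would need to be for each
    vendor to ship the same volume as a vendor does today."""
    if vendors_then <= 0 or vendors_now <= 0:
        raise ValueError("vendor counts must be positive")
    return vendors_then / vendors_now


# 7 vendors then vs. 2 now (counts taken from the post, not verified data)
print(market_multiple_needed(7, 2))
```

With exactly 7 and 2 vendors the ratio works out to 3.5, in the same ballpark as the "~3 times" quoted above.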

http://www.techspot.com/article/650-history-of-the-gpu/

You can do the calculations from this source and get an idea of how much lower the sales per company were compared to 2005 and today. Anandtech has an article showing global sales from 2005 to 2013 if you want to educate yourself. Granted, this doesn't give you the needed data for the highest-tier cards.

I just posted the numbers, jesus. You have to extract them and use several different sources, which I referenced. Granted, the per-die/chip data doesn't appear to exist, but you can look at the market-wide data, see the difference in units, and some basic math shows that 1995 numbers were substantially lower and 2005 was higher. Large die vs. large die data doesn't exist (to the public), as I stated... not sure why they hide 20-year-old data.

I'm not really sure you understand how this works... when you make a claim, it's not my responsibility to prove you wrong.

I honestly don't know the numbers, which is why I asked for your source. So either you can back up your claim, or admit you're talking out your ass... either way is fine by me.

Dang, the NVIDIA store switched from "Buy it Now" to "Out of Stock" on me as I was browsing around

https://www.nvidia.com/en-us/geforce/products/10series/geforce-gtx-1080-ti/

LOL. They are selling quick, it seems... Newegg had a few also and poof... all gone.

I am forcing myself to wait for the AIBs, which I am hoping will offer better cooling solutions.

Anyone else experiencing a shortage of supply in their area like I am? Both my local (within driving distance) Micro Centers got like 5-6 each.

Are there any reviews/guides with OVing done? I am curious what the real max is for this card. Any OVing TXP reviews?

new GPUs are always out of stock for a few weeks

You can set your watch to people being surprised whenever new Nvidia cards are out of stock.

It's like the people that are shocked they can't get a reservation when a popular new restaurant opens up.

This will replace my 980ti later this year. Once the market settles. Unbelievable performance increase for a single year of new tech.

Nvidia blew everyone away with the 1080 and did it again without any competition with the 1080ti.... Impressive.

Thankfully we have VR to keep Nvidia pushing forward otherwise they might have pulled an Intel and phoned this one in.

Guru3D overvolts their 1080 Ti in their review. Though I think they just add +50mv to every card they test.

I like it and would like to see higher power limits via BIOS or software. Gigabyte's software allows 130%, which is super hard to push with a 980 Ti; I hit it once or twice with max software voltage on my 980 Ti at 1570 clocks.

This, IMO, is a mistake. If you overvolt the GPU, you end up pushing the card to its maximum TDP level quicker, and in the end that can hurt your overclock or keep it from reaching the highest it could be due to power throttling. The best way to overclock is to first overclock with no voltage modification, find out how high you can get it without voltage, and look at the TDP level it's at. If there is room, or just by trial and error, you can then begin bumping up voltage slowly, in increments, and see if you can eke the clock higher. But every card is going to be overclockable to different levels of voltage; there isn't just one voltage increase that applies to all GPUs. One card could cap out at 20% voltage, another at 30%, so you have to do it manually each and every time for the card in question. Sometimes you'll find the highest and most stable overclock without throttling is done without voltage at all.

GPU Boost is variable, but if you try to push it too far and hit that power cap, the clock is going to throttle down after long periods of gaming. This is also why it's important to test the clock speed in gaming for about 30 minutes to make sure it doesn't drop down, or to see what it drops down to. This is how we do our testing. Memory overclocking also affects the power draw of the card and can also cause the clock to throttle down if pushed too hard. There are a lot of variables to consider.

When you look at our overclocking results and final stable overclock, you can be assured the clock speed is maintaining that frequency consistently during long periods of gaming. We even show it in our clock-speed-over-time graph to prove the data. The last thing you want is to overclock a card and think you are running a certain frequency when, in reality, it isn't holding that after 10 minutes of gaming. A benchmark run, remember, is very short; something like 3DMark doesn't run long enough to show the real result of gaming for 30 minutes.
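The procedure described above (raise the clock with no added voltage first, then add voltage in small increments, and accept a clock only if it holds through a long gaming run) could be sketched roughly as follows. `sustained_ok` and `toy_card` are hypothetical stand-ins for real testing, not an actual tool or API, and every number in the toy model is invented for illustration.

```python
from typing import Callable, Tuple


def find_overclock(
    sustained_ok: Callable[[int, int], bool],
    base_clock_mhz: int,
    clock_step_mhz: int = 25,
    voltage_steps_pct: Tuple[int, ...] = (0, 10, 20, 30),
    max_clock_mhz: int = 2200,
) -> Tuple[int, int]:
    """Return (clock_mhz, voltage_pct) for the highest clock that passes.

    sustained_ok(clock, voltage) is a hypothetical callback standing in for
    a long (about 30 minute) gaming run that confirms the clock holds
    without throttling at that voltage offset.
    """
    best = (base_clock_mhz, 0)
    for voltage in voltage_steps_pct:  # first pass uses no added voltage
        clock = best[0]
        # Step the clock up for as long as the sustained test keeps passing.
        while (clock + clock_step_mhz <= max_clock_mhz
               and sustained_ok(clock + clock_step_mhz, voltage)):
            clock += clock_step_mhz
        # Keep a voltage bump only if it actually bought a higher clock.
        if clock > best[0]:
            best = (clock, voltage)
    return best


def toy_card(clock_mhz: int, voltage_pct: int) -> bool:
    """Invented model: voltage adds stability headroom but lowers the
    clock at which the power cap starts to throttle."""
    stable_limit = 1950 + 2 * voltage_pct
    power_limit = 2050 - 3 * voltage_pct
    return clock_mhz <= min(stable_limit, power_limit)


print(find_overclock(toy_card, base_clock_mhz=1800))
```

In this toy model a moderate voltage bump helps a little and the largest one does not, which mirrors the point that more voltage can run into the power cap sooner. A real card would need the manual, per-card testing described in the post.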

That is in the overclocking section, not the normal benchmarking section, of his reviews: http://www.guru3d.com/articles_pages/geforce_gtx_1080_ti_review,32.html

Custom BIOSes are available, but only for the most expensive high-end models that are usually also used for LN2. The only 2 custom BIOSes I know of are for the GTX 1080 HoF and the top GTX 1080 Strix, and they don't do much on other models.

I doubt we'll see cards with a custom BIOS. Don't think we ever got them with the regular 1080. Didn't really look into it myself, but no one else has been able to hack Nvidia's security on the BIOS. Hell, I'm not sure you can even flash the BIOS on Pascal cards.

AND CSI_PC: Yeah, I know. Guru3D reviews the card's performance in games and then he overclocks.

Not sure why there wouldn't be. 980 Tis had custom BIOSes. I never bothered because my 980 Ti had more software controls than standard and it was sufficient. 30 or 40 mV and 130% power was all I needed to get my desired results for the 980 Ti.

Oh yeah. If they managed to ~double the performance of the x70, x80, and x80 Ti cards from the previous gen this time, I wonder what Volta will bring. If Intel brings 6 cores to mainstream, I might do a complete upgrade and move my current hardware to an ITX box console-killer in the next year.

As a GTX 1080 owner since release, I am quite happy with the current performance of my card, but the GTX 1080 Ti has me hyped for what we can expect from Volta!

I am more interested in HBM for the reason of having smaller cards.

I have a specific question about the 1080 Ti. My Maxwell Titan X will not run slow - and thus quiet - in 2D mode if I have three monitors attached. Nor indeed if I have only the two 4K monitors attached. If I were to attach three 4K monitors to the 1080 Ti, will it run slow and quiet?

Wasn't this a bug in a driver a while back? Somehow multi-mon setups cause the cards to run at full clocks?

Here are my thoughts....

The 1080Ti card, or any video card for that matter, is only as good as the monitor it is hooked up to and how well optimized the graphics are. When I built my computer in September 2014, I did splurge on the 980 card, even having to wait two months until the model I wanted came in stock. With some minor exceptions, I got capped out at 60FPS on my then-current Acer H6 H276HLbmid. That meant that when the 980Ti, and then the 1080, were introduced, I would see almost no performance benefit because the limiting factor was my monitor. That changed in December when I got my Viewsonic G-Sync.

Looking at the numbers, I am drooling over the 2560x1440 numbers for framerate. They aren't hitting 165FPS, which is the maximum framerate for monitors, but they are more consistently hitting 60FPS averages and sometimes exceeding them. This is a good thing. It will be interesting when we get away from Founders Edition cards and go into the AIB overclocked versions with the custom heatsinks. And this is why I'm going to be waiting a few months, until the introductory shortages work themselves out and we see what type of reaction we get from Team Red.

One thing that I did notice, at least on the Founders Edition cards, is that the connectors are only Displayport and HDMI, with DVI finally being ditched (although you can use a Displayport to DVI cable). HDMI connectors were on cards as early as the 480Ti, while Displayport was included on the 680Ti. (This, by the way, was doing a Google image search, so please correct me). I think some of the AIB will still include a DVI connector.

That is the most ridiculous thing I've ever heard.

Maybe if you run on one of those tiny 4k screens at 28", but at that point you are wasting 4k anyway.

4K really comes into its own at over 40", and at that point the DPI is ~100 just like any typical monitor, meaning aliasing is just as significant.

Don't make the mistake of assuming that your implementation is the only one that is used. Personally I'd argue that 4k makes no sense under 40"
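For anyone who wants to check the DPI figures being thrown around here, pixel density follows from the resolution and the diagonal size. A small sketch, assuming a 3840x2160 panel and a handful of example sizes (the function name is made up for illustration):

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density from resolution and diagonal size in inches."""
    return math.hypot(width_px, height_px) / diagonal_in


# Example sizes only; 3840x2160 is the usual "4K" monitor resolution.
for size in (24, 27, 28, 32, 40):
    print(f'{size}" 4K: {pixels_per_inch(3840, 2160, size):.0f} PPI')
```

By this calculation a 40" 4K panel lands at roughly 110 PPI, close to the ~100 figure above, while a 28" panel is closer to 157 PPI.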

I beg to differ. People don't only game on their PCs and a high-DPI monitor - I have a 28" 4K monitor and a 24" 4K monitor - makes text usage much nicer.

“If the average reading distance is 1 foot (12 inches = 305 mm), p @0.4 arc minute is 35.5 microns or about 720 ppi/dpi. p @1 arc minute is 89 microns or about 300 dpi/ppi. This is why magazines are printed at 300 dpi – it’s good enough for most people. Fine art printers aim for 720, and that’s the best it need be. Very few people stick their heads closer than 1 foot away from a painting or photograph.”
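The figures in that quote can be reproduced with basic trigonometry: the smallest resolvable pitch is the viewing distance times the tangent of the angular resolution. A minimal sketch, assuming the 305 mm reading distance from the quote (function names are made up for illustration):

```python
import math

MM_PER_INCH = 25.4


def pixel_pitch_mm(distance_mm: float, arc_minutes: float) -> float:
    """Size of a feature that subtends the given angle at the given distance."""
    angle_rad = math.radians(arc_minutes / 60.0)
    return distance_mm * math.tan(angle_rad)


def ppi_for_acuity(distance_mm: float, arc_minutes: float) -> float:
    """Pixel density at which one pixel subtends the given angle."""
    return MM_PER_INCH / pixel_pitch_mm(distance_mm, arc_minutes)


for acuity in (1.0, 0.4):
    pitch_um = pixel_pitch_mm(305, acuity) * 1000
    ppi = ppi_for_acuity(305, acuity)
    print(f"{acuity} arc minute at 305 mm: {pitch_um:.1f} microns, {ppi:.0f} ppi")
```

This gives about 89 microns (roughly 286 ppi) at 1 arc minute and about 35.5 microns (roughly 716 ppi) at 0.4 arc minute, matching the rounded 300 and 720 in the quote. The distance is a parameter, so other viewing distances can be tried the same way.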

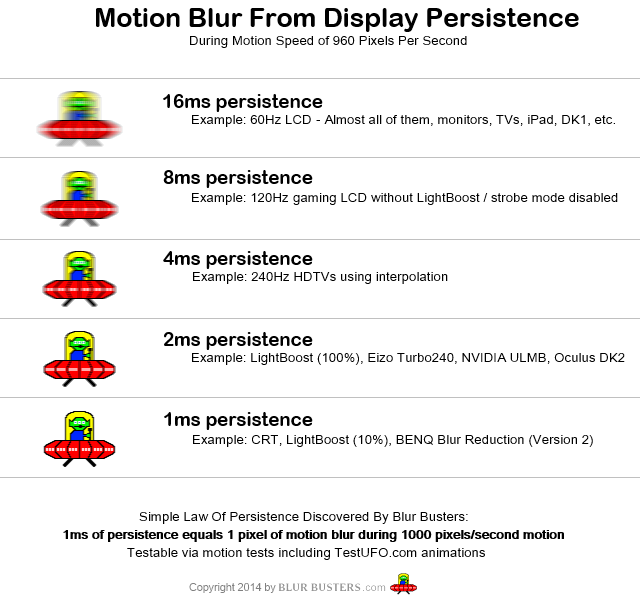

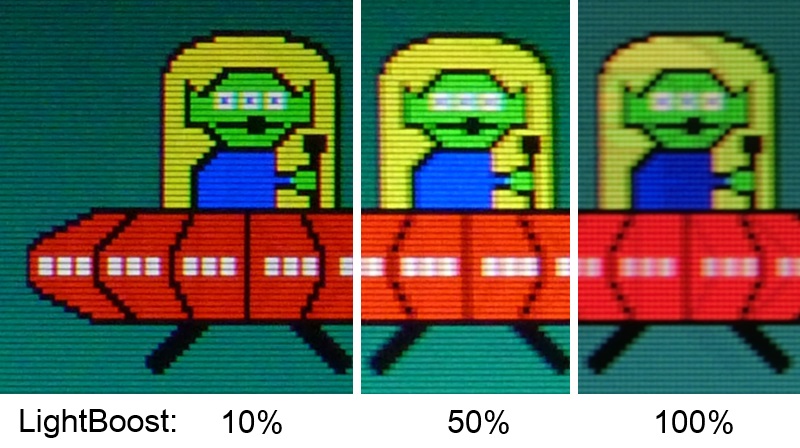

165 Hz is trash, plus it's always CPU-limited in single-threaded games and even multi-threaded games. Single-threaded games struggle to hold 120 Hz on my rig below. Additionally, if you go Nvidia, 120 Hz ULMB is multiple times better than 165 Hz (go to blurbusters.com and educate yourself, for the love of anything), but realize minimum frame rate is critical with ULMB or the stuttering is horrific. Even on my rig below, single-threaded games suck with stutter, and GPU-intensive games are an issue too. Can't wait for my 1080 Ti... waiting for the AIB ones.

I have 4K on a 27 in screen, the Dell 4K 27 in IPS, and I do Photoshop (used to) and game on it, and 4K is not wasted on 27 in. Anyone who says it is... is flat-out ignorant or an idiot. 4K on 32 in is gold, but 27 works. I just didn't have 2 grand for the 32 in, so 500 for the 27 in was a wise choice, and not a wasted one. Plus those 28 in TN panels are utter fucking trash. Really sick of stupid people who keep saying this dumbass shit.

And, your point, as it relates to my post, is... what again?

Per the tests conducted by [H], the highest average framerate for a game using the 1080Ti at 2560x1440 resolution was 140FPS from Sniper Elite 4 and 159FPS using Doom. Those were the numbers that I was looking at and what concerned me under my current setup. I didn't say "2K" or "4k".

I also stated that the performance of the card is monitor dependent. Where am I wrong on this?

And, what is the actual resolution you are talking about when you say "4K"? Because, when I hear "4K", I think of those "4K" television sets where the actual resolution is more like 2Kish.
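The point about the monitor being the limiting factor can be put as a one-line model. This is only a sketch: it assumes v-sync (or a frame cap) holds the displayed rate to the panel's refresh rate, and the function name is made up for illustration. The 140 and 159 FPS figures are the ones quoted above.

```python
def displayed_fps(rendered_fps: float, refresh_hz: float) -> float:
    """Frames actually shown per second when output is capped at refresh."""
    return min(rendered_fps, refresh_hz)


for game, fps in (("Sniper Elite 4", 140), ("Doom", 159)):
    for refresh in (60, 144, 165):
        shown = displayed_fps(fps, refresh)
        print(f"{game}: {fps} FPS rendered, {shown} shown at {refresh} Hz")
```

On a 60 Hz panel both games show 60 FPS no matter how fast the card renders, which is the situation described with the older monitor.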

EDIT: edited my post above, adding the zara quote, and baby-fed you guys by googling human eye DPI...

~300DPI at 2 feet.

The 4K part was referring to zara... forgot to quote him too. Sorry for the confusion, but...

4K refers to 4000x____. FHD is 2K; 2560x1440 is 2K/2.5K. The _K goes off the first number. A 4K monitor is usually 3840x2160.

https://en.wikipedia.org/wiki/4K_resolution

And if you don't understand my post, then I won't waste any more time typing, because I will simply be wasting my time on you. *facepalm*

Since you edited your reply to apply some clarification... I'm going to reply as well from *MY* viewpoint.

The maximum framerate of the panel in my current monitor is 144Hz, but it can be overclocked to 165Hz. It says so in the specification. That, to me, means a maximum theoretical framerate of 165 frames per second. The higher the framerate, the smoother the animation. Isn't it safe to say that the 1080Ti card achieves higher framerates than my current 980 card? [H] and multiple other sites say "YES!". Yes, some of the graphics performance is dependent on the CPU, which is why [H] and other sites test several games, not just one or two.

Now, in looking at Newegg, if you want a 5K (5120 x 2880) or a 4K (3840 x 2160) monitor, then the highest refresh rate is 60Hz. Drop down to 2K (2560 x 1440), and the max refresh rate goes to 144Hz. Since I prefer IPS monitors, it's safe to say that the DPI is higher on a monitor whose maximum resolution is 2560x1440 than it is under 1920x1080. I do notice the smoothness of the fonts and the additional screen real estate under the higher resolution, not to mention the importance when editing photos.

Now, don't G-Sync and the associated adaptive sync technology, which includes Lightboost, help with the "blur" factor? I've noticed the difference since I upgraded my monitor.

If anything, your post just confirms my initial point.... which is that the graphics card performance is dependent on the monitor that is hooked up.

Look at the photos above. 165 Hz is like 6 ms, while 120 Hz with 100% ULMB (strobe) is 2 ms! I see so many gamers using 144 Hz or 165 Hz on their ULMB monitors, which is idiotic because 120 Hz ULMB is magnitudes better, as the pictures above show. That's why 165 Hz is 100% pointless if you have ULMB: 165 Hz is worse, and it's impossible to get that many frames consistently. So it's a pointless feature at the end of the day.

Gsync and ULMB can't be used at once which sucks. I hope that gets changed some day :/
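The "6 ms vs. 2 ms" comparison above is about how long each frame stays lit. A rough sketch, assuming a sample-and-hold panel keeps each frame visible for the whole refresh interval and a strobed (ULMB) panel shows it only for the strobe pulse. The 2 ms pulse width is the poster's figure, not a measured value, and the function names are made up for illustration.

```python
from typing import Optional


def frame_time_ms(refresh_hz: float) -> float:
    """Duration of one refresh interval in milliseconds."""
    return 1000.0 / refresh_hz


def persistence_ms(refresh_hz: float, strobe_ms: Optional[float] = None) -> float:
    """Time each frame is visible: the full interval on sample-and-hold,
    or the strobe pulse width when a strobe length is given."""
    interval = frame_time_ms(refresh_hz)
    if strobe_ms is None:
        return interval
    return min(strobe_ms, interval)


for hz in (120, 144, 165):
    print(f"{hz} Hz sample-and-hold: {persistence_ms(hz):.2f} ms")
print(f"120 Hz strobed (assumed 2 ms pulse): {persistence_ms(120, 2.0):.2f} ms")
```

165 Hz works out to about 6.06 ms per frame, which is where the "like 6 ms" figure comes from.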