chameleoneel


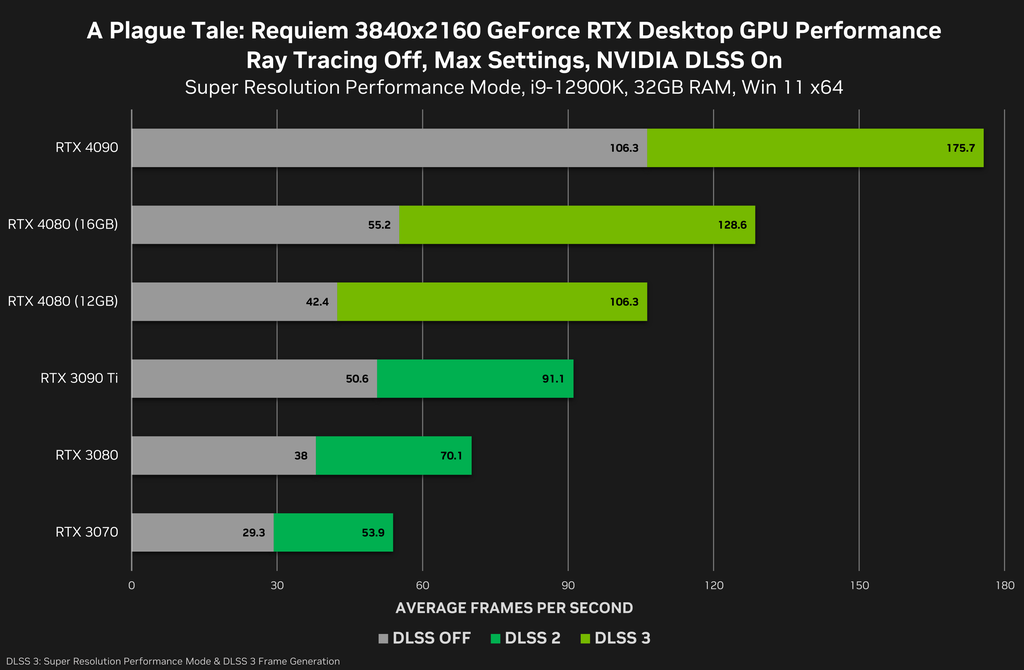

Nvidia had to put all of those tensor cores into the 4090. In TechPowerUp's review, it got 68 fps at 4K in Control with full RT effects. It takes a 4090 to just now do that.

It's not really internal SLI as I understand it... I guess we'll get more details soon.

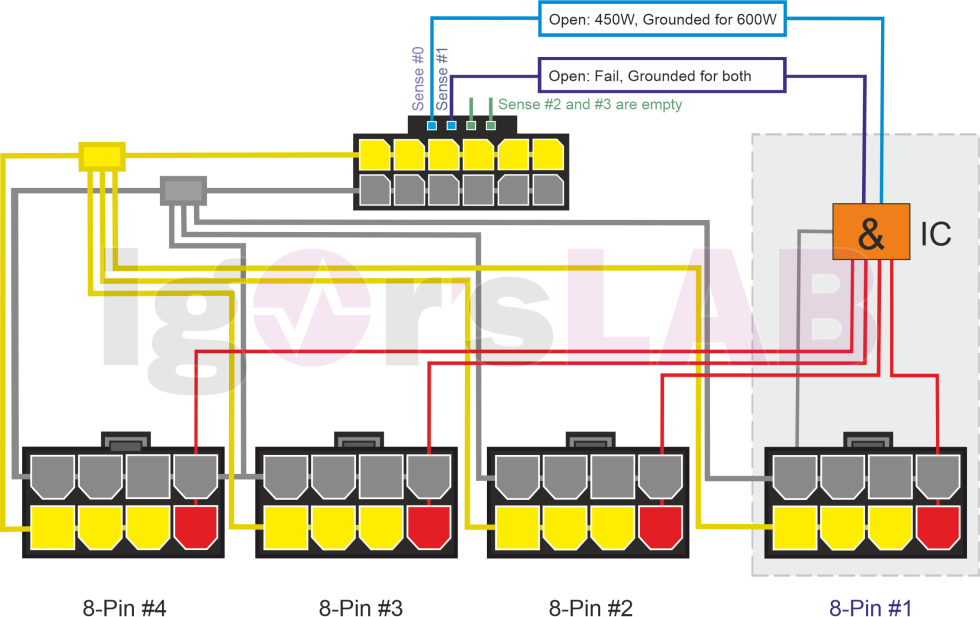

It sounds like it's just like Ryzen, where the CPU and all the bits are in one hunk of silicon. It's the supporting things that don't need to be on the latest fab process that are offloaded. On the CPU side that means memory controllers. On the GPU side, we'll see what they offload.

Chiplets get more exciting when you think about how AMD can better tailor products. They can take the same core raster chiplets and use them for consumer gaming, workstation parts, and AI... I assume they could potentially also have more compute-heavy chiplets that they leave out of consumer gaming parts (reducing cost) and double up in data center packages, greatly increasing yields on compute monsters. All speculation right now. I don't think we'll have to worry about SLI-like issues though... we worried about the same stuff with the first Zens.

PS... looking more at what the 4090 is, though, it's looking more like a data center chip with its insane level of tensor hardware. I believe in past generations Nvidia would never have dropped that chip in a consumer card (at least one that isn't an official Titan). I suspect they believe AMD is going to have some god-level RT bits in chiplet form or something. And yes, I'll come back to this post and laugh at myself in a few weeks if AMD's cards are underwhelming. lol

4K TVs have been mainstream for a while, and PC gamers have started turning to TVs for the image quality. PC graphics hardware has been lagging when it comes to running 4K comfortably.
