> The Hard in me is starting to gripe for some unique useful OCing, something one can use 24/7. No need for gaming performance or some other BS reason some folks believe in, just sheer pushing something beyond anything expected successfully

Still, there should be some point in it. Otherwise, you could save time and effort by just setting fire to piles of cash.


NVIDIA CEO Jensen Huang hints at ‘exciting’ next-generation GPU update on September 20th Tuesday

- Thread starter Comixbooks

- Start date

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,691

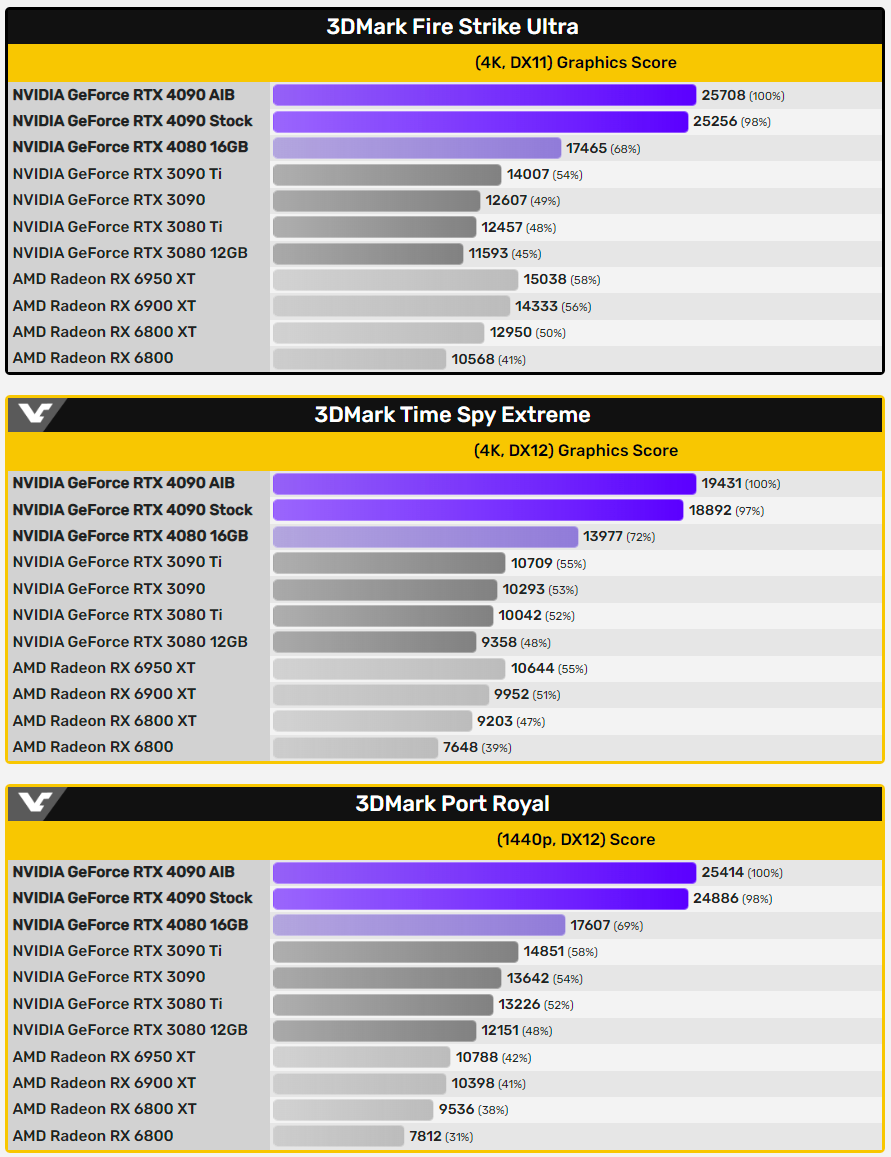

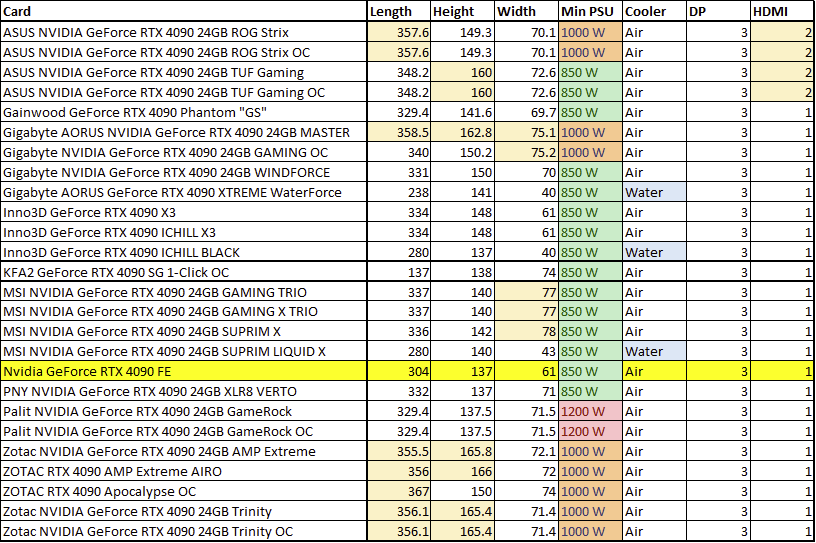

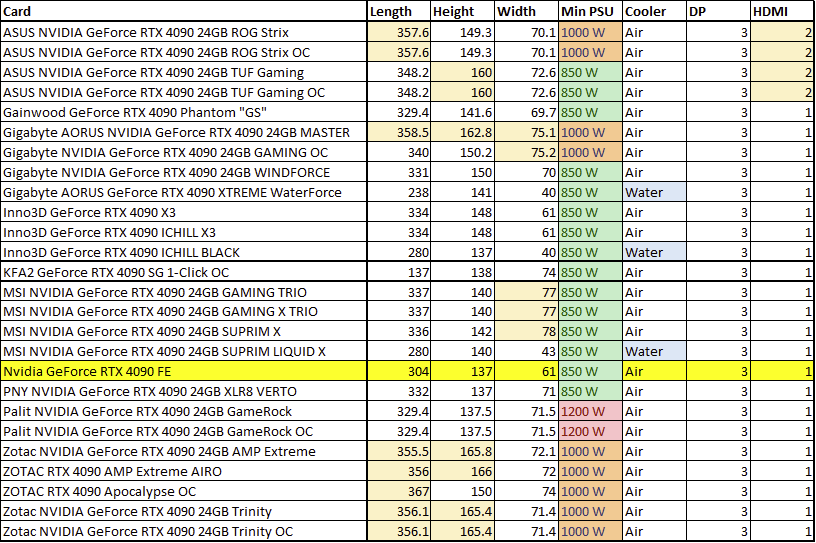

Someone on Reddit made a 4090 comparison chart:

> Still, there should be some point in it. Otherwise, you could save time and effort by just setting fire to piles of cash.

Pushing the envelope, going beyond what others believe is possible: in a nutshell, a fun competitive drive that gets others to push things too can in itself be a reason for folks to get involved. As for usefulness, even competitive gamers, who turn down settings and resolution anyway, are more CPU and monitor-refresh limited than GPU limited at this time. The one game/simulation scenario where I see a 4090 and beyond as viable is VR with very high resolution, high refresh rate headsets, where folks also play regular games in them (something not as common). As for the new tricks of DLSS 3, I definitely have my doubts about the viability of every other frame being AI generated. I don't see how fine-grained or high-frequency textures (hope I am wrong) are not totally messed up or dramatically changed from frame to frame.

On the practical side, game settings that hit performance hard but give trivial or no noticeable improvement can be turned down to get more out of current-generation cards without needing to spend $1700 plus. For me, game play is always paramount over visuals in a game. Visuals are nice extras, but if a game has the best visuals and the game play sucks, it doesn't matter what the FPS is or how good it looks -> the game still sucks. At this time there is no practical reason for a 4090 for me. A high end game monitor is a much better choice; unfortunately most high end game monitors are lacking something significant for the cost at this time. AMD is going to have DP 2 with RDNA 3, well, at least that has been rumoured, and since I normally keep graphics cards 5 years+ that is also a consideration. Speaking of RDNA 3, it seems wise to see what AMD has to offer; last generation RDNA 2 actually kicked some ASS for the $.

> Someone on Reddit made a 4090 comparison chart:

This is great. Decent pickings for the Lian Li O11D if going with horizontal mounting (I was worried I'd be limited to the FE). Max height clearance for the O11D Evo is 169mm, so I'm thinking max card height is around 145mm, plus about 20-25 mm for cable clearance (using the PSU adapter for non ATX 3.0 PSUs).
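The clearance arithmetic above can be sketched in a few lines of Python. This is only a rough check using the post's own figures (169 mm of clearance in the O11D Evo, a card around 145 mm tall, 20-25 mm for the cable bend). The function names `fits_case` and `max_card_height` are made up for illustration, and real cards and adapters may need more or less room.

```python
# Figures taken from the post; everything else here is a hypothetical helper.
O11D_EVO_CLEARANCE_MM = 169


def fits_case(card_height_mm, cable_clearance_mm, max_clearance_mm=O11D_EVO_CLEARANCE_MM):
    """Return (fits, spare_mm) for a card plus its power-cable allowance."""
    total = card_height_mm + cable_clearance_mm
    return total <= max_clearance_mm, max_clearance_mm - total


def max_card_height(cable_clearance_mm, max_clearance_mm=O11D_EVO_CLEARANCE_MM):
    """Tallest card that still leaves room for the given cable allowance."""
    return max_clearance_mm - cable_clearance_mm


for cable_mm in (20, 25):
    ok, spare = fits_case(145, cable_mm)
    print(f"145 mm card + {cable_mm} mm cable: fits={ok}, spare={spare} mm, "
          f"tallest card that fits={max_card_height(cable_mm)} mm")
```

With a 20 mm allowance a 145 mm card leaves 4 mm to spare; at 25 mm it comes out 1 mm over, so the cable bend is the number that decides it.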

erek

[H]F Junkie

- Joined

- Dec 19, 2005

- Messages

- 10,890

GotNoRice

[H]F Junkie

- Joined

- Jul 11, 2001

- Messages

- 12,003

Well if that is accurate then it means the FE is not the only true 3-slot solution. The air-cooled Inno3D cards are longer and taller than the FE (which is probably good because that should equate to a larger heatsink) yet still maintain the same width as the FE. It will be interesting to see if they sell those for $1600 or tack on a couple hundred extra like some other cards.

GoldenTiger

Fully [H]

- Joined

- Dec 2, 2004

- Messages

- 29,669

> If the coming week's reviews back up this leak, 4090 is looking like 2 generations worth of performance leap (usually it's around 40-45% if I recall).

Yep... Not to mention the potential oc'ability of them or the potential for dlss 3 to rock. We'll see soon...


Comixbooks

Fully [H]

- Joined

- Jun 7, 2008

- Messages

- 22,014

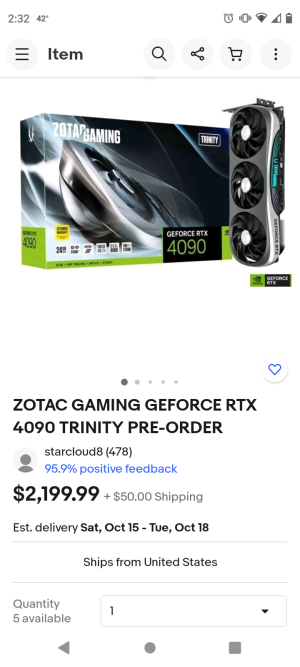

Don't worry about the price, scalpers got 'em.


MavericK

Zero Cool

- Joined

- Sep 2, 2004

- Messages

- 31,897

If it's long enough to touch the bottom of the case, then you don't need support!

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

If people are dumb enough to pay those prices to those third party sellers then so be it.

> Don't worry about the price, scalpers got 'em.

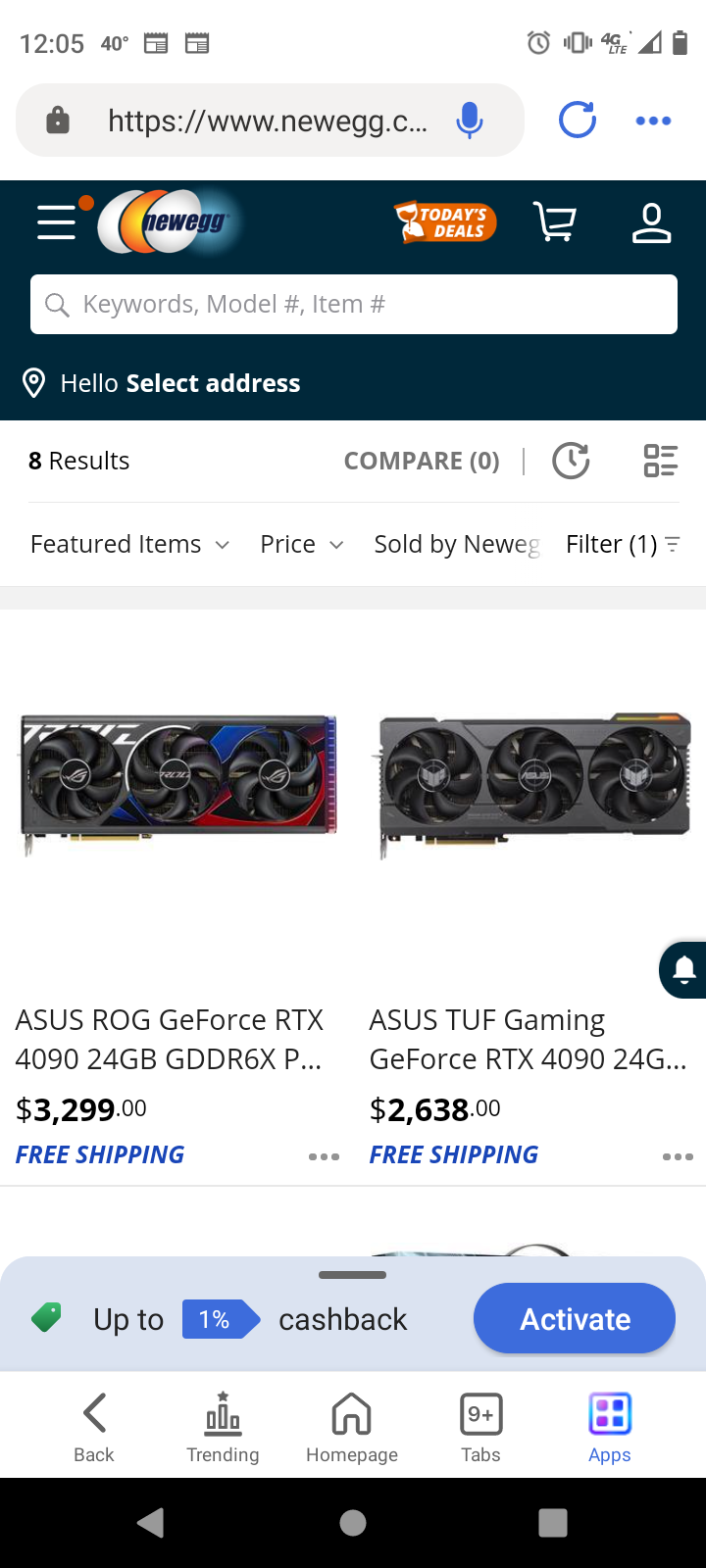

Dear God... $2600 to $3200.

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

> Dear God... $2600 to $3200.

Those are nothing more than third party sellers trying to take advantage of morons before the launch.

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,691

Review embargo should be lifting at 9 AM EST today.

Comixbooks

Fully [H]

- Joined

- Jun 7, 2008

- Messages

- 22,014

> Those are nothing more than third party sellers trying to take advantage of morons before the launch.

I hope you're right, but I don't want a repeat of 2020.


TheHig

[H]ard|Gawd

- Joined

- Apr 9, 2016

- Messages

- 1,345

Comixbooks

Fully [H]

- Joined

- Jun 7, 2008

- Messages

- 22,014

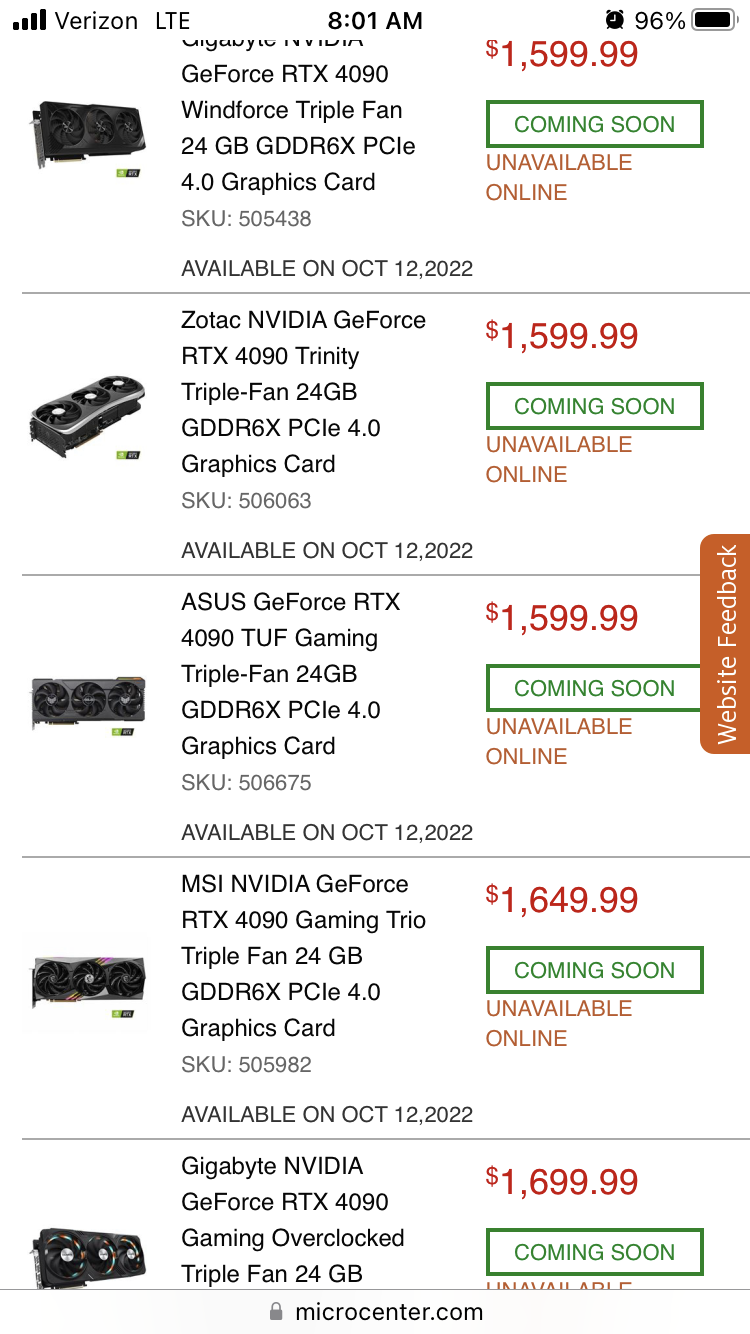

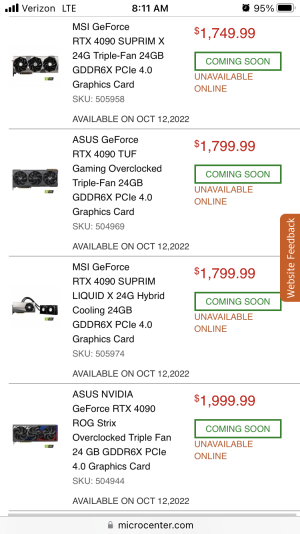

I'm thinking these cards are going to be more scarce than toilet paper in early 2020, especially with EVGA now gone, which basically sold the most cards.


Gideon

2[H]4U

- Joined

- Apr 13, 2006

- Messages

- 3,554

> I'm thinking these cards are going to be more scarce than toilet paper in early 2020, especially with EVGA gone now, which basically sold the most cards.

Highly doubtful. They should be pretty easy to find at the prices they are going to launch at; only a few that have to have the best will buy them. People are not as flush with cash right now.

hititnquitit

2[H]4U

- Joined

- Apr 16, 2018

- Messages

- 2,570

Agreed, I doubt we'll ever see that level of buying again. Once the impulse wave gets theirs, these are far too price prohibitive to sell well.

The numbers are impressive...

It's like looking at a supercar though. Sure the performance is insane... BUT it's still not for you. lol

4090 has launched a new mid life crisis class of GPU. Only instead of the vette that screams... hey I'm up for another divorce. This mid life buy says hey I don't even bother with the comb over. All the more power to folks that actually buy these, but I'm still going to laugh at them.

> It's like looking at a supercar though. Sure the performance is insane... BUT it's still not for you. lol
>
> 4090 has launched a new mid life crisis class of GPU. Only instead of the vette that screams... hey I'm up for another divorce. This mid life buy says hey I don't even bother with the comb over. All the more power to folks that actually buy these, but I'm still going to laugh at them.

I bet nvidia will be launching their next gpu series as a full tower case with room for an ATX motherboard and peripherals, so gaming PCs will start with a video card then. It is also gonna require a dedicated 240v power outlet and an exclusive circuit breaker; otherwise, we'll need to upgrade our houses' entire power grid to be able to run their stuff.

Comixbooks

Fully [H]

- Joined

- Jun 7, 2008

- Messages

- 22,014

https://videocardz.com/140065/nvidia-geforce-rtx-4090-founders-edition-review-roundup

Reviews list benchmarks are all over the place but in general they are up.



Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

I was wrong, those are some impressive gains. But that power draw, good lord…. I don’t expect the 3080 class stuff to be as impressive, but I’ll happily be surprised.

> I was wrong, those are some impressive gains. But that power draw, good lord…. I don’t expect the 3080 class stuff to be as impressive, but I’ll happily be surprised.

Considering the 4090 has 60% more cores compared to the 4080 16GB, I tend to agree.

Unlike the 3090 vs 3080... this isn't going to be even close. The 4090 will be significantly faster at 4K. HOWEVER, at 1440p, they will probably look about the same.

Numbers like these make me wonder what could have been if Nvidia hadn't used Samsung for the 3000 series.

The architecture is good, but I really wonder how much of these impressive gains are from the architecture and how much from the dramatically better TSMC process.


> Numbers like these make me wonder what could have been if Nvidia hadn't used Samsung for the 3000 series.
>
> The architecture is good, but I really wonder how much of these impressive gains are from the architecture and how much from the dramatically better TSMC process.

I don't know, that is a lot more cores vs 3000. I mean usable RT is impressive... it takes 40% more tensor cores to do it.

I'm looking forward to reviews of the 4080. The reduction in shaders might not make a massive difference at 1440... and I am sure 4k raster will still be up on the 3090. The reduction in tensor core count to basically 3090 numbers though... it will be interesting to see if the impressive RT performance carries over. I believe the 4080 tensor count is actually just a bit lower than the 3090... guess we'll see how much RT performance is generational and how much is brute force with the insane 512 tensors on the 4090.

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,589

> I don't know, that is a lot more cores vs 3000. I mean usable RT is impressive... it takes 40% more tensor cores to do it.
>
> I'm looking forward to reviews of the 4080. The reduction in shaders might not make a massive difference at 1440... and I am sure 4k raster will still be up on the 3090. The reduction in tensor core count to basically 3090 numbers though... it will be interesting to see if the impressive RT performance carries over. I believe the 4080 tensor count is actually just a bit lower than the 3090... guess we'll see how much RT performance is generational and how much is brute force with the insane 512 tensors on the 4090.

4080 will be about the same RT performance as the 3090.

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

> Numbers like these make me wonder what could have been if Nvidia hadn't used Samsung for the 3000 series.
>
> The architecture is good, but I really wonder how much of these impressive gains are from the architecture and how much from the dramatically better TSMC process.

I feel like most of the gains are just the move to TSMC. You couldn’t push the transistor count to this on Samsung. I don’t care what the Intel folks say or think, imho, TSMC is the premier fab for these sorts of products. That’s bad for us. Stuff is going to get a lot more expensive, as Apple just found out.

> I don't know, that is a lot more cores vs 3000. I mean usable RT is impressive... it takes 40% more tensor cores to do it.
>
> I'm looking forward to reviews of the 4080. The reduction in shaders might not make a massive difference at 1440... and I am sure 4k raster will still be up on the 3090. The reduction in tensor core count to basically 3090 numbers though... it will be interesting to see if the impressive RT performance carries over. I believe the 4080 tensor count is actually just a bit lower than the 3090... guess we'll see how much RT performance is generational and how much is brute force with the insane 512 tensors on the 4090.

It's just that it turned out that Samsung 8nm wasn't even as good as TSMC 12/10nm (with a much higher failure rate), let alone the 7nm. I get that Samsung had offered Nvidia one hell of a sweet price to move them over from TSMC, and I just wonder what the 3000 series could have been had Nvidia not taken that "deal".

> I feel like most of the gains are just the move to TSMC. You couldn’t push the transistor count to this on Samsung. I don’t care what the Intel folks say or think, imho, TSMC is the premier fab for these sorts of products. That’s bad for us. Stuff is going to get a lot more expensive, as Apple just found out.

Makes me really wonder what AMD is going to bring to the table. The node jump from TSMC 7nm to TSMC 4nm isn't nearly as big a jump as going from Samsung 8nm to TSMC 4nm. AMD had a distinct node advantage for the last 2 years, and I wonder how their cards will match up now that they no longer have that advantage.

> Makes me really wonder what AMD is going to bring to the table. The node jump from TSMC 7nm to TSMC 4nm isn't nearly as big a jump as going from Samsung 8nm to TSMC 4nm. AMD had a distinct node advantage for the last 2 years, and I wonder how their cards will match up now that they no longer have that advantage.

With RDNA 3 being the first chiplet based GPU line, I'd be more concerned about first gen teething issues like any microstuttering or weirdness caused by the effective "internal-SLI". Chiplets are a nice cost reduction multiplier, but it remains to be seen at what performance cost.

kamikazi

[H]ard|Gawd

- Joined

- Jan 19, 2006

- Messages

- 1,607

> Makes me really wonder what AMD is going to bring to the table. The node jump from TSMC 7nm to TSMC 4nm isn't nearly as big a jump as going from Samsung 8nm to TSMC 4nm. AMD had a distinct node advantage for the last 2 years, and I wonder how their cards will match up now that they no longer have that advantage.

It's all about the chiplets this time around.

> 4080 will be about the same RT performance as the 3090.

We'll see. If that is true, it means Gen4 RT cores are no better than Gen3.

4080 304 tensors

3090 328 tensors

The number of hardware units is basically identical... if all 4080 can do is = 3090 in RT that means basically zero RT uplift this generation outside the one SKU that super stacks the Tensor bits.

I suspect there is some generation improvement. I think it will be telling for RT how much of an improvement. It needs to be substantial imo or 4060s will be basically as useless for RT as 3060 class hardware. For RT to take off it needs to be usable in the regular human price segments.
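For a rough sense of the numbers in the post above, here is a small Python sketch. It uses the tensor core counts quoted in the thread (304 for the 4080, 328 for the 3090, 512 for the 4090) as the proxy the poster uses, and it assumes performance scales linearly with unit count, which is a simplification that ignores clocks, memory bandwidth, and the dedicated RT cores. The helper names are hypothetical.

```python
# Unit counts as quoted in the thread.
TENSOR_CORES = {"RTX 3090": 328, "RTX 4080": 304, "RTX 4090": 512}


def relative_change(new, old):
    """Percentage change going from `old` to `new`."""
    return (new - old) / old * 100


def implied_per_unit_uplift(perf_ratio, new_units, old_units):
    """Per-unit speedup implied by an overall performance ratio.

    Assumes performance scales linearly with unit count (a simplification).
    """
    return perf_ratio * old_units / new_units


base = TENSOR_CORES["RTX 3090"]
for card in ("RTX 4080", "RTX 4090"):
    delta = relative_change(TENSOR_CORES[card], base)
    print(f"{card}: {delta:+.1f}% units vs RTX 3090")

# If the 4080 only ties the 3090 (perf_ratio = 1.0), what does each unit deliver?
tie = implied_per_unit_uplift(1.0, TENSOR_CORES["RTX 4080"], base)
print(f"4080 == 3090 would imply {tie:.3f}x per unit")
```

Under that linear assumption, a 4080 that merely ties the 3090 would still imply roughly 8% more work per unit, since it has about 7% fewer of them. Small, but not quite zero.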

> I'm thinking these cards are going to be more scarce than toilet paper in early 2020, especially with EVGA now gone, which basically sold the most cards.

Highly doubtful. 3090s were often passed on in the Microcenter queues and you could often get one during the middle of the day. There's no mining now, and with the economy being the way it is I bet there is a lot less demand.

> With RDNA 3 being the first chiplet based GPU line, I'd be more concerned about first gen teething issues like any microstuttering or weirdness caused by the effective "internal-SLI". Chiplets are a nice cost reduction multiplier, but it remains to be seen at what performance cost.

It's not really internal SLI as I understand it... I guess we'll get more details soon.

It sounds like, just like Ryzen, the CPU and all the bits are in one hunk of silicon. It's the supporting things that don't need to be on the latest fab that are offloaded. CPU side that means memory controllers. GPU side we'll see what they offload.

Chiplets get more exciting when you think about how AMD can better tailor products. They can take the same core raster chiplets and use them for consumer gaming, workstation parts, and AI... potentially I assume they can also have more compute heavy chiplets they could leave out of consumer gaming parts (reducing cost) and double up in data center packages, greatly increasing yields on compute monsters. All speculation right now. I don't think we'll have to worry about SLI-like issues though... we worried about the same stuff with the first Zens.

PS... looking more at what the 4090 is, though, it looks more like a data center chip with an insane level of tensor hardware. I believe in past generations Nvidia would never have dropped that chip in a consumer card (at least one that isn't an official Titan). I suspect they believe AMD is going to have some god level RT bits in chiplet form or something. And yes, I'll come back to this post and laugh at myself in a few weeks if AMD's cards are underwhelming. lol

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

> It's all about the chiplets this time around.

I hope so. I bought a $900 gpu once, I’m not doing it again.

Randall Stephens

[H]ard|Gawd

- Joined

- Mar 3, 2017

- Messages

- 1,819

> It's not really internal SLI as I understand it... I guess we'll get more details soon.
>
> It sounds like, just like Ryzen, the CPU and all the bits are in one hunk of silicon. It's the supporting things that don't need to be on the latest fab that are offloaded. CPU side that means memory controllers. GPU side we'll see what they offload.
>
> Chiplets get more exciting when you think about how AMD can better tailor products. They can take the same core raster chiplets and use them for consumer gaming, workstation parts, and AI... potentially I assume they can also have more compute heavy chiplets they could leave out of consumer gaming parts (reducing cost) and double up in data center packages, greatly increasing yields on compute monsters. All speculation right now. I don't think we'll have to worry about SLI-like issues though... we worried about the same stuff with the first Zens.

First stop, cpu chiplet. Second stop, gpu chiplet. Third stop, hopefully, cpu and gpu chiplet together on a 100fps 1080p capable APU. First company to do that will do very well.

> With RDNA 3 being the first chiplet based GPU line, I'd be more concerned about first gen teething issues like any microstuttering or weirdness caused by the effective "internal-SLI". Chiplets are a nice cost reduction multiplier, but it remains to be seen at what performance cost.

That's what I am afraid of, sort of... TSMC and Apple developed a really good interposer for the M1 Max that could more or less seamlessly link the two GPUs in there, and that seems to work well (~95% scaling). It's all about how well AMD manages to present and manage the resources internally that will matter, so drivers and firmware...

But RDNA 2 managed to perform more or less evenly with the Ampere architecture while having a 2-generation lead in the manufacturing node. Now that they are on an even footing, is RDNA 3 going to be that much of a leap over RDNA 2?

The benchmarks from the Instinct series would indicate that there isn't that huge of a leap there, but the MI250 is on 6nm, not 4nm even though it is their first chiplet design for a GPU accelerator.

I just want to see what AMD puts out so we can view the 40xx parts with some degree of context because currently, the 4090 exists in a vacuum and while an impressive leap over the 30xx parts how does it fare overall?

chameleoneel

Supreme [H]ardness

- Joined

- Aug 15, 2005

- Messages

- 7,589

> We'll see. If that is true, it means Gen4 RT cores are no better than Gen3.
>
> 4080 304 tensors
>
> 3090 328 tensors
>
> The number of hardware units is basically identical... if all 4080 can do is = 3090 in RT that means basically zero RT uplift this generation outside the one SKU that super stacks the Tensor bits.
>
> I suspect there is some generation improvement. I think it will be telling for RT how much of an improvement. It needs to be substantial imo or 4060s will be basically as useless for RT as 3060 class hardware. For RT to take off it needs to be usable in the regular human price segments.

They did the same thing with Ampere. The tensor cores were improved, but only the 3080 and 3090 included enough of them to do better than Turing.

> It's all about the chiplets this time around.

Not sure if having a chiplet for the IO and cache will be that big of a deal. At least according to leaked rumors, it is far from the GPU chiplet design talked about around 2020.

It is still a mono compute die with the cache-IO chiplets around it. Possibly a nice price saving from using older nodes for the cache part, especially if yield would have been bad otherwise, but I doubt it will be all about them. On the other hand, I would not worry about that design causing much in the way of SLI-like issues.
