
More DLSS...

- Thread starter Auer


Moral of the story: if you're buying a 4090, it's still an excellent generational uplift sans DLSS 3, which really isn't necessary for that particular card. DLSS 3 is still in an early technical phase rather than a finished product, and in most use cases you're better off not enabling it (stuff like Flight Simulator potentially being an exception). When is the 4080 series going to be released? I suspect that, after all of the good press for the 4090, those SKUs will be a letdown compared to the previous gen. Nvidia seems to be pushing DLSS 3 hard in its performance comparisons, and as it stands, I'm not sure that's a valid comparison.

Certain games absolutely require DLSS at 4K with RT maxed out... Flight Simulator is one, and the upcoming Cyberpunk 2077 uber ray tracing patch is going to be another extreme case.

Can 40 series users downgrade to DLSS 2?

RJ1892

[H]ard|Gawd

- Joined

- Apr 3, 2014

- Messages

- 1,347

Yeah, frame generation is optional.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,788

There were a lot of people playing the early RT games... it was worth covering... lots of other sites did.

Gonna need a citation on "a lot". Top-played games on Steam and hardware surveys would suggest the opposite.


Battlefield V and Shadow of the Tomb Raider were two of the earliest RT games.

So if you don't want to use DLSS 3, you just replace the DLL file with an earlier DLSS version?

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

What? Frame generation is just another option you pick. If you don't choose that option, then you're just using DLSS 2.

TaintedSquirrel

[H]F Junkie

- Joined

- Aug 5, 2013

- Messages

- 12,688

Shown here (from Spider-Man Cyberpunk)


Cool, I didn't realize that disabling Frame Generation meant you are reverting back to DLSS 2... so Nvidia seemed to realize that DLSS 3 was not this amazing new tech and put that in as a backup... otherwise, why put Frame Generation on a separate toggle? Usually DLSS is just enabled or disabled universally, and you aren't able to revert to previous versions.

No matter how good it would have been at first (and it would always cause issues if it created a frame rate higher than your monitor's refresh rate), forcing it would have caused quite the backlash, and for what benefit?

jobert

[H]ard|Gawd

- Joined

- Dec 13, 2020

- Messages

- 1,575

I'm pretty sure that is Cyberpunk 2077...

3DMark has officially announced support for NVIDIA's DLSS 3 Frame Generation technology within its DLSS Feature Test...

https://steamcommunity.com/games/223850/announcements/detail/3399680664220209537

Just played the first part of Spider-Man at 4K maxed using Frame Generation and... wow. Just wow.

- 120 FPS locked.

- Super smooth.

- RTX 4090 is whisper quiet.

- Only uses around 250 watts.

- Temperature: 55-58°C.

Rev. Night

[H]ard|Gawd

- Joined

- Mar 30, 2004

- Messages

- 1,489

Can I have some frames? More, please, sir.

Chief Blur Buster

Owner of BlurBusters

- Joined

- Aug 18, 2017

- Messages

- 430

Part 1 of 4

It's frankly neat that frame rate amplification technologies are starting to merge spatial (super-resolution) & temporal (generation) techniques.

That being said, DLSS "2.0" is superior on a 4090 compared to the 3000 series, so it's not really "2.0" when you disable Frame Generation. From what I know, NVIDIA always planned to let you disable Frame Generation.

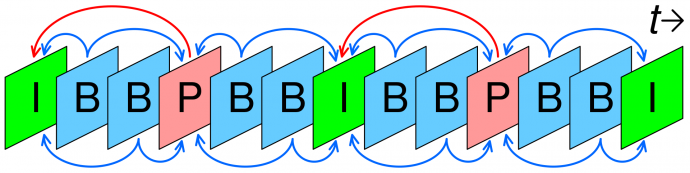

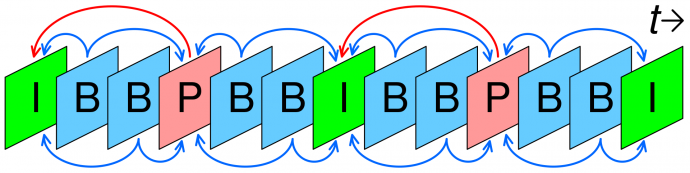

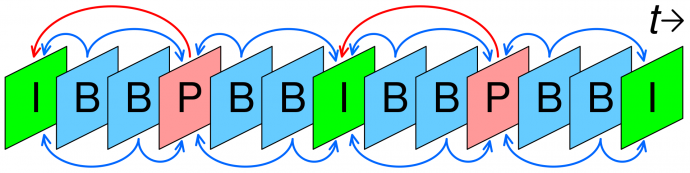

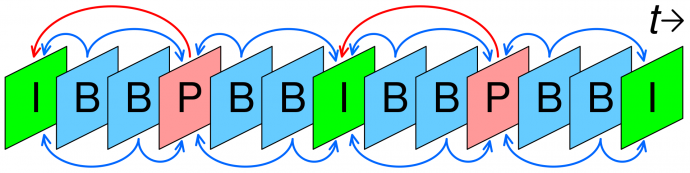

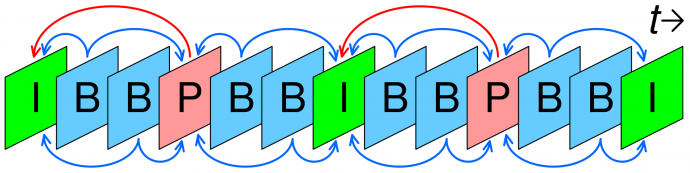



It's neat how GPU pipelines are slowly headed in the direction of the metaphor of video (I-Frames, B-Frames, P-Frames).

(Credit: Wikipedia on interpolation techniques built into common video compression formats)

H.264 video streams (e.g. Netflix) send only about 1 full frame per second, with the frames in between being "interpolated" / "estimated". Yet we don't notice the 23 interpolated frames between each standalone frame.

Ten to twenty years ago we saw "pulsing artifacts" in video compression, but video compression has since become so good that we rarely notice them.

I expect frame rate amplification technologies to become massively better over the long term with various tricks to reduce rendering workload without any visual artifacts, and to reduce latency significantly.

Eventually, I expect the quality to be just as good as non-DLSS, once Vulkan adds frame-rate-amplification helper APIs (see below for why).

However...

I wish reprojection were made available in non-VR games for low-lag Frame Generation algorithms (under a 10ms penalty).

Oculus Rift uses ASW 2.0 to convert 45fps to 90fps. It's simply a pixel shifting technology, to smooth pans/turns/scrolls.

It requires the frame rate amplification technology (frame generation) to have access to the original high-Hz controller data, such as 1000Hz mouse or 1000Hz head tracker. That way, frame generation is low-latency to your spatial movements (scrolling / pans / turns / etc).
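A minimal Python sketch of the pixel-shifting idea, assuming a pan can be approximated as a whole-frame 2D shift computed from fresh mouse input. This is a deliberate simplification: real VR reprojection (e.g. Oculus ATW/ASW) also uses head rotation, depth and motion vectors. The function names and the `pixels_per_count` scale factor are hypothetical.

```python
from typing import List, Optional, Tuple

Frame = List[List[Optional[int]]]


def mouse_to_shift(mouse_dx_counts: int, pixels_per_count: float) -> int:
    """Convert a horizontal mouse delta into a screen-space pixel shift.

    Turning right moves scene content left on screen, hence the minus sign.
    `pixels_per_count` is a hypothetical sensitivity factor.
    """
    return -round(mouse_dx_counts * pixels_per_count)


def reproject_pan(frame: Frame, dx: int, dy: int) -> Tuple[Frame, int]:
    """Shift the last rendered frame by (dx, dy) pixels to follow a pan.

    Pixels with no source data (newly revealed at the edge) are left as None;
    those would need optical flow, a fill, or the next real render.
    Returns the shifted frame and the number of such holes.
    """
    h, w = len(frame), len(frame[0])
    out: Frame = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy  # source pixel that lands at (x, y)
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = frame[sy][sx]
    holes = sum(1 for row in out for v in row if v is None)
    return out, holes


# A 1-count mouse turn to the right at 1 pixel per count shifts content left by 1.
frame = [[1, 2, 3, 4], [5, 6, 7, 8]]
shifted, holes = reproject_pan(frame, mouse_to_shift(1, 1.0), 0)
print(shifted, holes)  # prints [[2, 3, 4, None], [6, 7, 8, None]] 2
```

The shift costs one memory copy per pixel, which is why reprojection is so much cheaper than rendering or optical flow; only the holes need extra work.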

Currently, NVIDIA's Frame Generation is a bit of a black box between original renders: it doesn't seem to take intermediate controller positions into account, which would make Frame Generation feel less laggy.

I expect that DLSS 4.0 (hopefully backported by NVIDIA to the 4000 series) will include low-lag Frame Generation by accepting additional data (e.g. 1000Hz mouse movements) between original rendered frames, and perhaps allow 8:1 frame rate amplification.

I suggest NVIDIA do the following for lower-latency 4:1 frame rate amplification on an RTX 4090 (it might take a year to develop a game API, in collaboration with a vendor such as Epic for Unreal Engine 5):

LOOP

- Render low-resolution frame + Super resolution algorithm (as it already does)

- Render Reprojected frame (with fresh input positional data from T+0.25)

- Render Frame Generation frame (with fresh input positional data from T+0.50)

- Render Reprojected frame (with fresh input positional data from T+0.75)
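To make the timing of that LOOP concrete, here is a Python sketch that expands the 4:1 cycle into a display timeline. It assumes a 100fps base render rate and treats "T+0.25" etc. as fractions of one render period; the `Subframe` type and `CYCLE` labels are illustrative, and nothing here performs actual rendering.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Subframe:
    kind: str          # technique that produces this displayed frame
    input_time: float  # seconds; when fresh input/positional data is sampled


# The proposed 4:1 cycle, in the order listed above.
CYCLE = ["render + super resolution", "reprojection", "frame generation", "reprojection"]


def expand_loop(base_fps: float, cycles: int) -> List[Subframe]:
    """List every displayed subframe for `cycles` full render periods."""
    period = 1.0 / base_fps
    step = period / len(CYCLE)  # T+0.25, T+0.50, T+0.75 of each period
    timeline = []
    for c in range(cycles):
        for i, kind in enumerate(CYCLE):
            timeline.append(Subframe(kind, c * period + i * step))
    return timeline


timeline = expand_loop(base_fps=100, cycles=2)
for sub in timeline:
    print(f"{sub.input_time * 1000:5.2f} ms  {sub.kind}")
print("displayed fps:", 100 * len(CYCLE))
```

Each real render is followed by three cheaper synthesized frames, each built from input sampled a quarter period after the previous one, which is where the latency benefit over plain interpolation would come from.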

Or more complex hybrids:

In fact, Reprojection could be used for most pixels (e.g. mouse turns, pans, scrolls), and Optical Flow only for parallax-reveal pixels. To accommodate varying amounts of parallax-reveal, you can limit the number of Optical Flow computes per frame, render low-resolution parallax-reveal (720p or 1080p) during 4K frames, and progressively "fade in" higher resolution over the period between fully rendered frames. In subframe 2, the parallax-reveal could be slightly blurry (1/4th Optical Flow pixels), subframe 3 can be 50% sharper, subframe 4 can be 75% sharper, and finally, the next frame is the fully rendered frame.
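One way to read the subframe 2/3/4 progression as numbers: a Python sketch, assuming "1/4th", "50%" and "75%" mean the fraction of full-resolution Optical Flow work spent on parallax-reveal pixels, with a hypothetical per-subframe compute cap. Function and parameter names are illustrative.

```python
def reveal_fraction(subframe: int, subframes_per_render: int = 4) -> float:
    """Fraction of full resolution used for parallax-reveal pixels.

    Subframe 1 is the fully rendered frame. Subframes 2..N ramp up as
    1/N, 2/N, ... (1/4, 2/4, 3/4 for a 4:1 ratio).
    """
    if not 1 <= subframe <= subframes_per_render:
        raise ValueError("subframe out of range")
    if subframe == 1:
        return 1.0
    return (subframe - 1) / subframes_per_render


def optical_flow_work(reveal_pixels: int, subframe: int, budget: int) -> int:
    """Optical Flow pixels computed this subframe, capped by `budget`.

    Everything outside the reveal region is assumed to be handled by
    cheaper reprojection, so it does not count against the budget.
    """
    if subframe == 1:
        return 0  # full render: no Optical Flow needed
    wanted = round(reveal_pixels * reveal_fraction(subframe))
    return min(wanted, budget)


# Example: 200,000 revealed pixels in a 4K frame, cap of 100,000 per subframe.
print([optical_flow_work(200_000, k, 100_000) for k in range(1, 5)])
```

The cap is what keeps a sudden burst of parallax-reveal from spiking GPU time; the resolution simply catches up over the following subframes.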

UE5 uses a lot of "progressive fade-in" techniques (e.g. shadow and ray computations are spread temporally), so for sudden parallax-reveal events that spike GPU workload (Optical Flow is more compute-intensive than Reprojection), it can fall back to the uprez-fade-in technique to keep 500fps frame rates consistent in, say, Cyberpunk 2077.

Much of this low-hanging fruit will require helper APIs built into Vulkan, DirectX, and other graphics APIs, so those APIs need to be refactored for the frame rate amplification future: reducing processing per frame while concurrently reducing artifacts AND latency (thanks to controller data enabling simple pixel-shifting during pans/turns/scrolls/etc).

On the CPU side, to allow low-latency input, frame preparation can theoretically be shingled (4 CPU threads, with Present() calls offset by a quarter of the frame time) to uncap the CPU bottleneck without increasing latency. The CPU would be responsible for collecting new input data and preparing new geometry data to send to the GPU while the GPU is still rendering/frame-generating/reprojecting the previous frame. So there are algorithms that could remove the CPU bottleneck, if APIs are improved to support shingled rendering preparation.
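A small Python model of the shingling idea, assuming each of 4 CPU threads needs a full frame time to prepare its frame and that thread starts are staggered by a quarter frame. It only computes timestamps (no real threads or graphics API calls); the function name is hypothetical.

```python
from typing import List, Tuple


def shingled_presents(frame_time_ms: float, threads: int,
                      frames: int) -> List[Tuple[int, float, float]]:
    """Return (thread, input_sample_ms, present_ms) for each frame.

    Workers are used round-robin; worker k starts its first frame at
    k * frame_time_ms / threads, and each frame takes frame_time_ms to prepare.
    """
    offset = frame_time_ms / threads
    result = []
    for n in range(frames):
        worker = n % threads
        rounds_done = n // threads
        start = worker * offset + rounds_done * frame_time_ms  # input sampled here
        result.append((worker, start, start + frame_time_ms))
    return result


for worker, sample, present in shingled_presents(10.0, 4, 6):
    print(f"thread {worker}: input at {sample:5.2f} ms, Present() at {present:5.2f} ms")
```

Present() calls arrive every 2.5 ms (400 per second) even though each frame still takes 10 ms of CPU preparation, so input-to-present time per frame stays at one frame time while throughput quadruples. This assumes 4 free cores and independent per-frame work, which is the kind of API support the paragraph says is missing today.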

This should be configurable and customizable, in order to allow game developers to experiment with improved quality with lower latency. Or some hybrid of reprojection and Frame Generation, where reprojection is used for all pixels except for parallax-reveal pixels. Optical Flow would be used for parallax-reveal pixels, possibly reducing Optical Flow workload by 90% since Reprojection is much more efficient.

Reprojection is more GPU-efficient than DLSS Frame Generation: it should be part of the DLSS 4.0 framework.

With a terabyte per second of GPU bandwidth, it can theoretically reproject a 4K frame over 1000 times a second! Remember, we were already doing reprojection back in 2016-2017... 45->90fps. Surely we can do 100fps->500fps now with the RTX 4090 in a non-VR game on a 500Hz monitor!
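The "over 1000 times a second" figure can be sanity-checked with back-of-the-envelope arithmetic. This Python sketch assumes 1 TB/s = 10^12 bytes/s (the RTX 4090 is specified at roughly 1 TB/s), 4 bytes per pixel, and one read plus one write per reprojected frame. It ignores depth buffers, caches and shader cost, so it is an upper bound, not a benchmark.

```python
def max_reprojections_per_second(bandwidth_bytes_per_s: float, width: int, height: int,
                                 bytes_per_pixel: int = 4, passes: int = 2) -> float:
    """Bandwidth-only upper bound on full-frame reprojections per second.

    passes=2 counts reading the source frame once and writing the output once.
    """
    bytes_per_frame = width * height * bytes_per_pixel * passes
    return bandwidth_bytes_per_s / bytes_per_frame


rate = max_reprojections_per_second(1e12, 3840, 2160)
print(f"~{rate:,.0f} 4K reprojections/s")
```

The result is roughly 15,000 per second, so even if real-world overheads cost an order of magnitude, it stays above 1000 per second.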

This may actually produce 8x frame rate gains instead of 4x, and reduce the workload-per-frame, especially at 1080p, for 500Hz monitors, allowing 80fps Cyberpunk to go 500fps for blurless sample-and-hold, while concurrently reducing latency to possibly roughly 1/80sec (not too shabby for solo).

This would be the logical DLSS 4.0 progression, reducing motion artifacts while increasing the frame rate amplification factor. But it would require games to feed the graphics drivers their preferred world motion data (e.g. pan, turn, scroll) in real time. Basically, it means backporting a VR-style API into non-VR use cases to reduce DLSS latency massively. Reprojection uses fewer GPU resources than interpolation/extrapolation techniques, and it would be clever to combine the two.

Another idea is to just let the game run at a high framerate, but the GPU ignores rendering those in-between frames, and only uses the data provided to compute a reprojection.

Also, improvements to gametime:photontime (keeping each frame's game time in sync with when its photons actually reach your eyes) are needed, to make sure reprojection doesn't jitter too badly, including for VSYNC ON and for VRR.

However, optimizations to a game with a theoretical new Vulkan API assisting generic frame rate amplification technologies (e.g. games would know to do less work "This frame requires 90% less geometry data, here's the minimum data you need to send me for this Present() cycle") will massively help frame rate amplification. The software would know that the GPU is working off the previous frame, and only send partial geometry data (sufficient for 6dof movement data).

TL;DR: Blur Busters opinion for Next-Generation frame rate amplification algorithms:

1. Simpler reprojection should be combined into DLSS, FSR and XeSS to reduce latency.

2. Vulkan should add additional APIs to help support shingled frame parallelism & lower the latency & increase accuracy; e.g. inform game to essentially "just only send low resolution geometry to help me reproject the previous frame".

3. Improve gametime:photontime behavior further. Even 0.5ms jitter can now be human visible at ultra high resolutions. 4000 pixels/sec at 0.5ms error is a 2 pixel jitter (or 2 extra pixels of motion blur if jitter is faster than flicker fusion threshold)
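Point 3's arithmetic as a tiny Python helper: positional error equals motion speed times timing error. The function names are illustrative.

```python
def jitter_pixels(speed_px_per_s: float, timing_error_ms: float) -> float:
    """Pixels of positional error when a frame is shown timing_error_ms early or late."""
    return speed_px_per_s * timing_error_ms / 1000.0


def max_timing_error_ms(speed_px_per_s: float, max_jitter_px: float) -> float:
    """Largest timing error that keeps jitter within max_jitter_px."""
    return max_jitter_px * 1000.0 / speed_px_per_s


print(jitter_pixels(4000, 0.5))        # 2.0 pixels, as stated in point 3
print(max_timing_error_ms(4000, 1.0))  # 0.25 ms to stay within 1 pixel
```

Because the error scales linearly with motion speed, higher resolutions (faster motion in pixels per second) tighten the timing budget proportionally.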

As we start decoupling completeness-every-frame over the next 10-20 years, Microsoft / NVIDIA / AMD / OpenGL / Vulkan / DirectX need to add frame-rate-amplification-related "helper APIs" that allow games to send dummy, mostly-empty frames to the GPU so the frame rate amplifier can more efficiently "work" the previous frame. These can be temporary low-geometry frames that are mostly ignored by the GPU except to provide ground-truth data (positionals), which reduces the processing overhead of the frame rate amplifier while concurrently reducing artifacts AND latency, by allowing it to use less-energy-consuming algorithms like reprojection much more accurately and much more often.

The rendering workflow needs to be refactored, for further improvements. Moore's Law is getting more difficult, but there's a lot of theoretical optimizations that can allow an existing RTX 4090 to exceed 500fps with Cyberpunk 2077 on a 12th or 13th gen Intel CPU -- if only we had a DirectX/Vulkan reprojection API. But everyone would have to help out (Microsoft, Vulkan.org, and NVIDIA) because it's a full refactor including APIs.

DLSS 3.0 only works as "badly" (Wright Brothers) as it does because there isn't API help from Microsoft and Vulkan.org, and because it needed to be easily backwards compatible. But fundamentally, we don't need Moore's Law to improve further, because a lot of low-hanging fruit (some of it already used by VR developers) is not currently being used in traditional 3D-on-2D non-VR contexts.

I suspect DLSS 4.0 will go in this direction, and hopefully DLSS 4.0 with 8:1 ability will be backported to the 4000 series (for developers willing to hook into the new APIs).


TrunksZero

Gawd

- Joined

- Jul 15, 2021

- Messages

- 550

H.264 Netflix is 1fps, interpolated to 24fps. Where are you sourcing that info? Because that's some crazy bandwidth saving.

Chief Blur Buster

Owner of BlurBusters

- Joined

- Aug 18, 2017

- Messages

- 430

Part 2 of 4

Correct. (Well, the "1" can vary; it is never "24 non-interpolated frames per second" for streaming. In other words, streams literally have something like 1, 2 or 3 full frames a second, with near-perfectly predicted/interpolated frames in between.)

That said, the engineering term is "prediction".

Note that the number of full original frames can vary between compression standards and settings, e.g. 1 in 4, 1 in 10, 1 in 24, etc.

But we've been doing interpolation inside video files since the VCD and DVD days (MPEG-1, MPEG-2).

MPEG-1, MPEG-2, MPEG-4, and HEVC use different kinds of predictive motion algorithms (interpolation/estimation mathematics).

Here's a 1992 paper on the MPEG-1 specification, one of the first video compression formats to effectively use interpolation as part of video compression:

And the older 1988 ITU-T document on H.261, on which MPEG is based. Download the English PDF scan of the 1988 document from ITU's website, and Ctrl+F for the words "predicted" and "interpolated". Famously, this standard is one of the earliest video compression formats to use these techniques.

In the late 1980s, this miraculously achieved less than 1 bit per pixel on average (i.e. data sizes less than 1/24th of the original) when compressing a 24-bit video stream, something that compression engineers thought was mathematically impossible at the time for real-world video (as opposed to vector art, or GIF-style and RLE-style compression). Interpolation was the math miracle that made MPEG-1 possible.
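To put "less than 1 bit per pixel" in numbers, here is a Python sketch using MPEG-1's roughly 1.5 Mbit/s target and assuming SIF resolution (352x240 at 30fps, a common MPEG-1 format). Like the paragraph, it compares against 24-bit RGB; real encoders first subsample chroma, so the ratio against the actual encoder input is smaller.

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average compressed bits spent per displayed pixel."""
    return bitrate_bps / (width * height * fps)


bpp = bits_per_pixel(1.5e6, 352, 240, 30)
ratio = 24 / bpp  # versus uncompressed 24-bit RGB
print(f"{bpp:.2f} bits/pixel, about {ratio:.0f}:1 compression")
```

At exactly 1 bit per pixel the ratio would be 24:1, matching the 1/24th figure; the SIF example lands comfortably below that.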

And the rest was history. Successive generations (H.261, H.263, H.264, HEVC) each roughly halved the previous generation's bitrate using a variety of advanced techniques, but interpolation/prediction mathematics is still the bedrock of all of them.

Also, the various links above are where I got this image of a video file structure.

Here, only the green cards are full frames. The rest use interpolation-based techniques (prediction).

I = intra-coded frame (a full, standalone compressed frame)

P = unidirectionally predicted frames

B = bidirectionally predicted frames
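Because B-frames reference a later frame, encoders send frames out of display order. A simplified Python sketch of that reordering for a GOP pattern string, assuming each B-frame references only the nearest I/P frame on each side (real H.264/HEVC encoders can use more references and hierarchical B-frames):

```python
from typing import List


def decode_order(gop: str) -> List[int]:
    """Map a display-order GOP such as "IBBPBBP" to decode order.

    Each I/P (anchor) frame must be decoded before the B-frames that
    precede it in display order, because those B-frames reference it.
    Returns display indices in the order a decoder receives them.
    """
    order: List[int] = []
    pending_b: List[int] = []
    for i, frame_type in enumerate(gop):
        if frame_type == "B":
            pending_b.append(i)      # must wait for the next anchor
        else:
            order.append(i)          # anchor goes first...
            order.extend(pending_b)  # ...then the B-frames that needed it
            pending_b = []
    order.extend(pending_b)  # trailing B-frames would really wait for the next GOP
    return order


print(decode_order("IBBPBBP"))  # [0, 3, 1, 2, 6, 4, 5]
```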

That's why, if the stream is interrupted (reception glitch), it can take up to a second for the image to come back: the predicted frames can't work off missing frames.

Sometimes it only affects a fragment of a frame (e.g. black or smeary areas in a glitchy stream that instantly fill in at the next fully compressed frame), since the predicted areas need a fully compressed frame to un-glitch. That's why video compression glitches show those funny 1-second-interval behaviors.
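The error propagation described above can be modeled in a few lines of Python. This is a simplified sketch assuming P-frames reference the previous I/P frame and B-frames reference the nearest I/P frame on each side; real codecs allow more reference patterns, plus error concealment.

```python
from typing import Dict, List


def references(gop: str) -> Dict[int, List[int]]:
    """Reference frames for each display index under the simplified model."""
    anchors = [i for i, t in enumerate(gop) if t in "IP"]
    refs: Dict[int, List[int]] = {}
    for i, t in enumerate(gop):
        prev = [a for a in anchors if a < i]
        nxt = [a for a in anchors if a > i]
        if t == "I":
            refs[i] = []             # self-contained
        elif t == "P":
            refs[i] = prev[-1:]      # previous anchor
        else:
            refs[i] = prev[-1:] + nxt[:1]  # nearest anchor on each side
    return refs


def corrupted_frames(gop: str, lost: int) -> List[int]:
    """Display indices that can't decode cleanly after frame `lost` is dropped."""
    refs = references(gop)
    bad = {lost}
    changed = True
    while changed:  # spread the damage until nothing new is affected
        changed = False
        for i, r in refs.items():
            if i not in bad and any(x in bad for x in r):
                bad.add(i)
                changed = True
    return sorted(bad)


print(corrupted_frames("IBBPBBPBBIBBP", 3))  # [1, 2, 3, 4, 5, 6, 7, 8]
```

Losing frame 3 (a P-frame) corrupts everything up to the next I-frame at index 9, which is why the picture "heals" at the next fully compressed frame.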

Eventually, I bet even a theoretical PlayStation 7 and Xbox 720 in 2035 will probably have ultra-low-lag frame rate amplification technologies that are perceptually lossless, like well-compressed video streams except in three dimensions and at 4K+ or 8K+. You can't tell the predicted frames apart anymore on Netflix (unlike back in the VCD and cheap-DVD / BitTorrent days, when you saw the "compression pulsing" effects). Netflix movies with roughly 1 full frame per second, flawlessly predicted up to 24fps, are perceptually lossless to most people.

Even E-Cinema does it too: at some movie theaters, there may be only 4 or 8 full frames a second, with the rest predicted.

Why don't we see interpolation artifacts in video files anymore? Because encoders interpolate as perfectly as possible and include non-interpolated pixel data as a sort of "diff image" to correct the interpolation artifacts inside the video compression format. The original video compressor knows the lossless images, and can keep refining the interpolation/prediction until it almost perfectly matches the non-predicted image. A video compressor's interpolator is not a black box like a television set's interpolator, because the compressor has full access to the uncompressed image and can compress it as perfectly as possible within a bitrate target. Huge amounts of money have been spent optimizing the bitrate.
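The "diff image" idea is what codecs call motion-compensated prediction plus a residual. A minimal Python sketch on a single row of pixels, assuming whole-row motion and an exhaustive search over small shifts (real codecs do this per block in 2D, then transform and quantize the residual, which makes it lossy):

```python
from typing import List, Tuple


def predict(prev: List[int], shift: int) -> List[int]:
    """Motion-compensated prediction: prev shifted right by `shift`, edges clamped."""
    n = len(prev)
    return [prev[min(max(i - shift, 0), n - 1)] for i in range(n)]


def encode(prev: List[int], cur: List[int], max_shift: int = 3) -> Tuple[int, List[int]]:
    """Pick the motion vector with the smallest sum of absolute differences,
    then keep only the residual ("diff image") needed to fix the prediction."""
    best = min(range(-max_shift, max_shift + 1),
               key=lambda s: sum(abs(p - c) for p, c in zip(predict(prev, s), cur)))
    residual = [c - p for p, c in zip(predict(prev, best), cur)]
    return best, residual


def decode(prev: List[int], shift: int, residual: List[int]) -> List[int]:
    """Rebuild the current row from the previous row, motion vector and residual."""
    return [p + r for p, r in zip(predict(prev, shift), residual)]


prev = [0, 0, 10, 20, 30, 0, 0, 0]
cur = [0, 0, 0, 10, 20, 31, 0, 0]  # moved right by 1, one pixel slightly brighter
shift, residual = encode(prev, cur)
print(shift, residual)  # 1 [0, 0, 0, 0, 0, 1, 0, 0]
```

The mostly-zero residual is what makes the predicted frame cheap to store, and it is exactly the correction a TV-set interpolator never has.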

It's borderline miraculous that we can now fit an 8K HEVC stream into a single 6MHz channel (the bandwidth of one 480i NTSC television signal), thanks to predictive/interpolated video compression mathematics; some video compression formats are so complex they require university algebra/calculus to understand.

That's why GPU-based frame rate amplification technologies need more data (partial geometry, 1000Hz controller data, etc.) to make frame rate amplification even more perfect. Classic TV interpolators were black boxes: they didn't have much metadata on the original frames, unlike video compressors (for 2D motion) or DLSS (for 3D motion). By feeding more metadata to the frame generation methods, frame generation can become more accurate with less horsepower per frame for a given human perception threshold.

That said, latency can be an issue. That's partly why live digital broadcasts (e.g. a football game on TV) have more latency than live analog broadcasts: the broadcaster has to run the compression algorithm with interpolation lookahead/lookbehind to keep the bandwidth within budget. This has been a problem since digital TV began in the 1990s. You can reduce compression latency with additional algorithms (e.g. more lookbehind predictions, fewer lookahead predictions, accelerometer data helping process camera panning, etc.), and this has been refined over the years: the best H.264 television broadcasts on cable no longer show the compression-pulsing artifacts of the predictive stuff, but the predictive stuff is still there!
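The lookahead cost can be estimated for the simplest case, B-frame reordering. A Python sketch assuming the encoder must hold each B-frame until the next I/P frame arrives, so the worst-case added delay is the number of consecutive B-frames times the frame period; rate-control lookahead, buffering and transmission delays are ignored.

```python
def b_frame_delay_ms(consecutive_b_frames: int, fps: float) -> float:
    """Worst-case extra encoder delay from waiting for the next anchor frame."""
    return consecutive_b_frames * 1000.0 / fps


for b in (0, 1, 2, 3):
    print(f"{b} B-frames at 30fps: +{b_frame_delay_ms(b, 30):.1f} ms")
```

Using fewer lookahead (B-frame) predictions removes this term, at the cost of a higher bitrate, which is the trade-off the paragraph describes.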

The world has essentially perfected a "2D equivalent" of frame rate amplification (Digital TV and Netflix would have been impossible without these predictive/interpolation mathematics).

But we're probably 10 years away from making 3D (GPU) frame rate amplification fully perceptually lossless. It's much easier to see artifacts when three dimensions are involved, especially in realtime rendering.

It's impressive how extra metadata can make an interpolation engine more perceptually lossless.

DLSS 3.0 could be so much better in the future. 3.0 is a fantastic Wright Brothers taste of a refactored GPU rendering pipeline for the 8K 1000fps 1000Hz future (even if Moore's Law almost completely halts and we're stuck with current transistor sizes). Once APIs are added to help frame rate amplification, and more algorithms are combined (including simpler reprojection), everyone will get higher frame rates, perceptually losslessly and at much lower latency, with similar numbers of transistors as today. It is simply a refactoring of the rendering architecture away from the full-polygon-render-every-frame metaphor. Hopefully future DLSS/XeSS/FSR algorithms will make incremental progress towards this.

Yes, some purists may always want full renders (uncomfortable with interpolation, even if perfectly done), but that approach is not likely to achieve 8K+ 1000fps+ 1000Hz+ UE5 quality on single silicon within our lifetimes. Frame rate amplification technologies, combined with a refactored rendering workflow, are absolutely essential for reaching "simultaneous retina resolution AND retina refresh rate" in a concurrently strobeless AND blurless manner.


- Google Scholar Research Papers: "Video Compression Predictive And Interpolative Techniques" (many peer-reviewed papers!)

- DOI.org Research Papers: "Video Compression Predictive And Interpolative Techniques" (many peer-reviewed papers!)

- arXiv.org Research Papers: "Video Compression Predictive And Interpolative Techniques" (many peer-reviewed papers!)

- Wikipedia: Inter frame

"An inter frame is a frame in a video compression stream which is expressed in terms of one or more neighboring frames. The "inter" part of the term refers to the use of Inter frame prediction. This kind of prediction tries to take advantage from temporal redundancy between neighboring frames enabling higher compression rates."

- Wikipedia: Advanced Video Coding H.264

"Previously encoded pictures are used by H.264/AVC encoders to provide predictions of the values of samples in other pictures. This allows the encoder to make efficient decisions on the best way to encode a given picture."

Here's a 1992 paper on the MPEG1 specification, one of the first video compression formats to make effective use of interpolation as part of video compression:

MPEG1 Specification Abstract (April 1992)

The video compression technique developed by MPEG covers many applications from interactive systems on CD-ROM to delivery of video information over telecommunications networks. The MPEG video compression algorithm relies on two basic techniques: block based motion compensation for the reduction of the temporal redundancy and transform domain based compression for the reduction of spatial redundancy. Motion compensation techniques are applied with both predictive and interpolative techniques. The prediction error signal is further compressed with spatial redundancy reduction (DCT). The quality of the compressed video with the MPEG algorithm at about 1.5 Mbit/s has been compared to that of consumer grade VCR's.

And then there's the older 1988 ITU-T document on H.261, which MPEG is based on. Download the English PDF scan of the 1988 document from ITU's website and Ctrl+F for the words "predicted" and "interpolated". Famously, this standard is one of the earliest video compression formats to use these techniques.

In the late 1980s, this miraculously achieved less than 1 bit per pixel on average (i.e. less than 1/24th of the original data size) when compressing a 24-bit video stream, something compression engineers at the time thought was mathematically impossible for real-world video (as opposed to vector art, or GIF-style or RLE-style compression). Interpolation was the math miracle that made MPEG-1 possible.
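To make the "under 1 bit per pixel" arithmetic concrete, here is a rough sketch. It takes the ~1.5 Mbit/s figure from the MPEG-1 abstract above and assumes a 352x240, 30 fps source (a common MPEG-1 format, but that resolution and frame rate are my assumption, not from the post), compared against 24-bit pixels as the post does:

```python
def bits_per_pixel(bitrate_bps: float, width: int, height: int, fps: float) -> float:
    """Average number of coded bits spent on each displayed pixel."""
    return bitrate_bps / (width * height * fps)


# Assumed operating point: 352x240 at 30 fps, ~1.5 Mbit/s
bpp = bits_per_pixel(1.5e6, 352, 240, 30)
ratio = 24 / bpp  # compression ratio versus uncompressed 24-bit pixels
print(f"{bpp:.2f} bits/pixel, roughly {ratio:.0f}:1 versus 24-bit video")
```

That comes out to about 0.59 bits per pixel, roughly 41:1 versus 24-bit video. (Real MPEG-1 sources are 4:2:0 YCbCr rather than 24-bit RGB, so the ratio against the decoded format is smaller.)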

And the rest is history. H.261, H.263, H.264 and HEVC each roughly halved the previous generation's bitrate using a variety of advanced techniques, but interpolation/prediction mathematics is still bedrock in all of them.

The various links above are also where I got this image of a video file structure.

Here, only the green cards are full frames. The rest rely on interpolation-based techniques (prediction).

I = intra-coded frame (a complete, standalone compressed frame)

P = unidirectionally predicted frames

B = bidirectionally predicted frames
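A small sketch of what the I/P/B structure means in numbers. The 12-frame "IBBPBBPBBPBB" pattern and the 24 fps rate are illustrative assumptions (encoders pick GOP length and structure adaptively), and the reference model is simplified: modern codecs like H.264 and HEVC allow multiple reference frames and B-frames that are themselves references.

```python
def gop_summary(pattern: str, fps: float) -> dict:
    """Summarize a repeating group of pictures (GOP) given in display order, e.g. 'IBBPBBPBBPBB'."""
    full_frames = pattern.count("I")
    return {
        "gop_seconds": len(pattern) / fps,
        "full_frames_per_second": full_frames * fps / len(pattern),
        "predicted_fraction": 1 - full_frames / len(pattern),
    }


def references(pattern: str) -> list:
    """Display-order indices each frame is predicted from, in a simplified model:
    I = none, P = previous I/P anchor, B = nearest anchor on each side.
    Index len(pattern) stands for the next GOP's I-frame (open GOP)."""
    anchors = [i for i, kind in enumerate(pattern) if kind in "IP"]
    refs = []
    for i, kind in enumerate(pattern):
        before = [a for a in anchors if a < i][-1:]
        after = ([a for a in anchors if a > i] or [len(pattern)])[:1]
        if kind == "I":
            refs.append([])
        elif kind == "P":
            refs.append(before)
        else:  # "B"
            refs.append(before + after)
    return refs


print(gop_summary("IBBPBBPBBPBB", 24))
print(references("IBBPBBPBBPBB"))
```

With this pattern, only 2 of every 24 frames per second are full standalone frames; the other ~92% are predicted.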

That's why, if the stream is interrupted (a reception glitch), it can take about a second for the image to come back: the predicted frames can't be rebuilt from missing frames.

Sometimes it only affects a fragment of the frame (e.g. black or smeary areas in a glitchy stream that instantly fill back in at the next fully compressed frame), since the predicted areas need a fully compressed frame to un-glitch. That's why video compression glitches show those funny 1-second-interval behaviors.
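Here's a toy model of why a glitch lasts until the next full frame. It uses a simplified reference structure (P-frames predict from the previous I/P frame, B-frames from the nearest I/P frame on each side, and B-frames are never references), which is an assumption; real streams can have wider dependencies, plus decoder error concealment.

```python
def corrupted_frames(pattern: str, lost: int, gops: int = 2) -> list:
    """Display-order indices that glitch when frame `lost` is damaged.

    Simplified model: P-frames reference the previous I/P anchor, B-frames reference the
    nearest anchor on each side, and B-frames are never used as references. Damage spreads
    to every frame that (directly or indirectly) references a damaged frame, and stops
    at the next I-frame, which needs no reference.
    """
    stream = pattern * gops
    anchors = [i for i, kind in enumerate(stream) if kind in "IP"]

    def refs(i: int) -> list:
        if stream[i] == "I":
            return []
        before = [a for a in anchors if a < i][-1:]
        if stream[i] == "P":
            return before
        return before + [a for a in anchors if a > i][:1]

    bad = {lost}
    changed = True
    while changed:  # keep propagating until nothing new gets corrupted
        changed = False
        for i in range(len(stream)):
            if i not in bad and any(r in bad for r in refs(i)):
                bad.add(i)
                changed = True
    return sorted(bad)


# One lost P-frame in a 12-frame GOP at 24 fps
glitched = corrupted_frames("IBBPBBPBBPBB", lost=3)
print(len(glitched), "frames glitched, about", round(len(glitched) / 24, 2), "s")
```

Losing a P-frame smears damage across most of the GOP until the next I-frame, while losing a B-frame (in this model) only glitches that one frame.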

Eventually, I bet even a theoretical PlayStation 7 and Xbox 720 in 2035 will have ultra-low-lag frame rate amplification technologies that are perceptually lossless, like well-compressed video streams except in three dimensions and at 4K+ or 8K+. You can't tell the predicted frames apart anymore on Netflix (unlike back in the VCD and cheap-DVD / BitTorrent days, when you saw "compression pulsing" effects). Netflix movies with roughly 1 full frame per second, predictively interpolated to 24fps, are perceptually lossless to most people.

Even E-Cinema does it: when you go to the movie theater, there may be only 4 or 8 full frames a second, with the rest predicted.

Why don't we see interpolation artifacts in video files anymore? Because the encoders interpolate as accurately as possible and then include non-interpolated pixel data as a sort of "diff image" that corrects the interpolation artifacts inside the video compression format. The original video compressor knows the lossless images, and can keep refining the interpolation/prediction until it almost perfectly matches the non-predicted image. The compressor's interpolator is not a black box like a television set's interpolator, because the video compressor has full access to the uncompressed image and can compress it as perfectly as possible within a bitrate target. Enormous amounts of money have been spent optimizing bitrate.
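A toy 1-D sketch of "prediction plus diff image". It is a simplification of my own, not any codec's actual algorithm: one integer motion vector for the whole row, chosen by sum of absolute differences, whereas real codecs search per block or coding unit with sub-pixel precision and transform-code the residual. The point is that the encoder sees the real frame, so the residual makes the decoder's result exact:

```python
def motion_compensate(prev: list, shift: int) -> list:
    """Predict a row of pixels by shifting the previous row (edges are clamped)."""
    n = len(prev)
    return [prev[min(max(i - shift, 0), n - 1)] for i in range(n)]


def encode(prev: list, cur: list, max_shift: int = 4):
    """Encoder: it sees the real frame, so it picks the motion vector with the smallest
    sum of absolute differences and sends the leftover error as a residual ("diff image")."""
    def sad(shift: int) -> int:
        return sum(abs(c - p) for c, p in zip(cur, motion_compensate(prev, shift)))

    shift = min(range(-max_shift, max_shift + 1), key=sad)
    residual = [c - p for c, p in zip(cur, motion_compensate(prev, shift))]
    return shift, residual


def decode(prev: list, shift: int, residual: list) -> list:
    """Decoder: repeat the same prediction, then add the residual back."""
    return [p + r for p, r in zip(motion_compensate(prev, shift), residual)]


prev = [0, 0, 10, 20, 30, 0, 0, 0, 0, 0]
cur = [0, 0, 0, 0, 10, 20, 30, 0, 0, 5]  # object moved 2 px right, plus one new detail
shift, residual = encode(prev, cur)
print(shift, residual)
print(decode(prev, shift, residual) == cur)
```

Here the best motion vector is a 2-pixel shift, and the residual is all zeros except the one new detail, which is why residuals compress so well.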

It's borderline miraculous that we can now fit an 8K HEVC stream into a single 6 MHz channel (the bandwidth of one 480i NTSC television signal), thanks to predictive/interpolated video compression mathematics. Some video compression formats are so complex that they require university algebra/calculus to understand.
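For a sense of scale, a rough sketch of how much compression that takes. The 8K60, 8-bit 4:2:0 format (12 bits per pixel on average) and the 25 Mbit/s usable channel payload are my assumptions for illustration; the real payload of a 6 MHz channel depends on the broadcast standard and modulation:

```python
def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: float) -> float:
    """Uncompressed video bitrate in bits per second."""
    return width * height * fps * bits_per_pixel


raw = raw_bitrate_bps(7680, 4320, 60, 12)  # 8K60, 8-bit 4:2:0 averages 12 bits per pixel
channel_bps = 25e6                           # assumed usable payload of one 6 MHz channel
print(f"raw: {raw / 1e9:.1f} Gbit/s, needs roughly {raw / channel_bps:.0f}:1 compression")
```

That's roughly 23.9 Gbit/s raw, so compression on the order of 1000:1.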

That's why GPU-based frame rate amplification technologies need more data (partial geometry, 1000Hz controller data, etc.) to make frame rate amplification even more accurate. Classic TV interpolators were black boxes: they had little metadata about the original frames, unlike video compressors (for 2D motion) or DLSS (for 3D motion). By feeding more metadata to the frame generation method, it can become more accurate with less horsepower per frame for a given human perception threshold.

That being said, latency can be an issue. That's partly why live digital broadcasts (e.g. a football game on TV) have more latency than live analog broadcasts: the broadcaster has to run the compression algorithm with interpolation lookahead/lookbehind to keep the bandwidth within budget. This has been a problem since digital TV began in the 1990s. You can reduce compression latency with additional algorithms (e.g. more lookbehind predictions, fewer lookahead predictions, accelerometer data to help process camera pans better, etc.), and this has been refined over the years. The best H.264 cable broadcasts no longer show the compression-pulsing artifacts of the predictive stuff, but the predictive stuff is still there!
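A simplified sketch of where lookahead latency comes from. The model (the first B-frame in a run has to wait for the next I/P frame, plus optional rate-control lookahead) is an illustrative assumption; real encoder latency also includes buffering and transmission:

```python
def encoder_reorder_delay_ms(consecutive_b_frames: int, fps: float,
                             lookahead_frames: int = 0) -> float:
    """Minimum extra delay before the encoder can emit frames, in a simplified model.

    A B-frame is predicted from a *future* anchor, so the first B-frame in a run of n
    must wait n frame periods for the next I/P frame to arrive. Extra rate-control
    lookahead (encoder-specific, assumed here) buffers even more frames.
    """
    return (consecutive_b_frames + lookahead_frames) * 1000.0 / fps


print(encoder_reorder_delay_ms(2, 30))  # "IBBP"-style structure at 30 fps
print(encoder_reorder_delay_ms(0, 30))  # P-frames only: prediction looks backwards only
```

Dropping B-frames (backward-only prediction) removes the reordering delay; x264's `zerolatency` tune, for example, disables B-frames and lookahead for this reason.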

The world has essentially perfected a "2D equivalent" of frame rate amplification (digital TV and Netflix would have been impossible without these predictive/interpolation mathematics).

But we're probably 10 years away from making 3D (GPU) frame rate amplification fully perceptually lossless. It's much easier to see artifacts when three dimensions are involved, especially in realtime rendering.

It's impressive how extra metadata can make an interpolation engine more perceptually lossless.

DLSS 3.0 could be so much better in future. 3.0 is a fantastic Wright Brothers taste of a refactored GPU rendering pipeline for the 8K 1000fps 1000Hz future (even if Moore's Law almost completely halts and we're stuck with current transistor sizes). Once APIs are added to help frame rate amplification, and more algorithms are combined (including simpler reprojection), it will help everyone get higher frame rates, perceptually losslessly and at much lower latency, with similar numbers of transistors as today. It is simply a refactoring of the rendering architecture away from the full-polygon-render-every-frame metaphor. Hopefully future DLSS/XeSS/FSR algorithms will make incremental progress towards this.

Yes, some purists may always want full renders (they're uncomfortable with interpolation, even if perfectly done), but full renders are not likely to achieve 8K+ 1000fps+ 1000Hz+ UE5 quality on a single piece of silicon within our lifetimes. Framerate amplification technologies combined with a refactored rendering workflow are absolutely essential for reaching "simultaneous retina resolution AND retina refresh rate" in a manner that is both strobeless AND blurless.


TrunksZero

Gawd

- Joined: Jul 15, 2021
- Messages: 550

I see where you're coming from... but I, B and P frames are not about sending or encoding fewer frames and then interpolating the missing frames. They're about helping the encoder be more efficient with its encode: allowing greater or less compression by determining how much compression can/should be applied while keeping the best possible visual quality on the frames. They don't send or reduce the number of frames. When the encoder makes mistakes, you get macro/micro blocking, because it didn't do a good job of determining how to apply compression.

https://ottverse.com/i-p-b-frames-idr-keyframes-differences-usecases/

So the frame rate stays the same, and it does not create or interpolate frames the way something like Frame Generation or a display's frame interpolation does. But again... I can see where you're going.

It will be interesting if we see some Frame Generation vs TV interpolation comparisons. It may be kind of hard to capture, but it would be interesting to see. From my understanding, not all TV manufacturers do it the same way, and not all of them have equal amounts of lag. (For instance, I hear Samsung's is relatively fast, so they let you turn it on when the TV is in game mode, whereas LG's is pretty slow, so by default LG doesn't let you turn it on in its game mode.)


OutOfPhase

Supreme [H]ardness

- Joined: May 11, 2005
- Messages: 6,575

Framegen is just another arrow in your quiver of dialing in the experience you enjoy. We are free to not use it if it isn't the right choice for a particular case.

I think it will be good for a huge swath of non-twitch single-player games. Future versions of Tomb Raider, Mass Effect, Baldur's Gate, etc.

And of course I think the more traditional stuff done by DLSS et al. is amazing. It works really well, and again, we can always choose whether to use it and how aggressive it is.

Just tools.


Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017
- Messages: 430

Right Tool For The Right Job!

Many people can't use VR because of its flicker. If we can reduce 8K motion blur strobelessly, we will!

Real life doesn't flicker, so Holodecks shouldn't flicker, ideally.


Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017
- Messages: 430

Part 3 of 4


Actually, the teamwork is more applicable than you think!

TrunksZero said: "I see where you're coming from... but I B P frames are not about sending or encoding fewer frames and then interpolating out frames. It's about helping the encoder be more efficient with its encode. Allowing for greater, or less compression by determining how much compression can/should be applied with the best possible visual quality on the frames. Not sending or reducing the numbers of frames. When it makes mistakes you get macro/micro blocking. Because the encode didn't do a good job determining how to apply compression."

Interpolators in video compressors have fewer artifacts than TV-based interpolators because they are supplied with data from the original video frames between the complete standalone compressed frames.

Frame rate amplifiers can likewise have fewer artifacts if they get more data (Z-buffers, controller data, etc.) about the original 3D/game intent between the complete standalone rendered frames.

Remember, you need to think from the correct relativity vantage point (Einstein said everything is relative).

Move your renderthink to the correct vantage point:

For video compression and for GPU rendering respectively:

...Think about the rate of fully standalone compressed frames (and getting ground truth from the original uncompressed frames)

...Think about the rate of fully standalone rendered frames (and getting ground truth via controllers, Z-buffer, AI model of textures, and game intent).

- Whether the ground truth is an image or a description of the image is academic. Put that semantic aside, Isaac Newton.

- Whether the artifact is macroblocking or an interpolation artifact is academic. Put that semantic aside, Isaac Newton.

- Whether the interpolation math is being done for realtime content (live video, live GPU) or for prerecorded content (video files) is academic. Put that semantic aside, Isaac Newton.

For Oculus Rift, ASW 2.0 has fewer artifacts than ASW 1.0 because it gained more ground truth (the Z-buffer was added). Head-tracker data is part of the ground truth fed to the frame rate amplifier between the complete renders, to reduce latency; ASW 2.0 then added the Z-buffer as additional context to help reduce artifacts. Extra ground truth helps both latency and artifacts, as long as using it still saves resources (storage, processing, transistors) compared to other methods (e.g. storing uncompressed frames or doing complete full-resolution renders).
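A simplified pinhole-camera sketch of why the Z-buffer matters (my own illustration, not Oculus's ASW algorithm). A pure head or camera rotation moves every pixel by an amount that doesn't depend on depth, but a sideways strafe moves near objects much more than distant ones. Without depth, the reprojector has to guess at those parallax edges. The 1000-pixel focal length is an assumed value:

```python
import math


def rotation_shift_px(focal_px: float, yaw_rad: float) -> float:
    """How far a point at the image centre moves on screen when the camera yaws.
    The result does not depend on depth, so rotation-only reprojection needs no Z-buffer."""
    return focal_px * math.tan(yaw_rad)


def translation_shift_px(focal_px: float, strafe_m: float, depth_m: float) -> float:
    """How far a point at the image centre moves when the camera strafes sideways.
    The shift depends on depth (parallax): near objects move far more than distant ones,
    which is why a reprojector without depth data has to guess around object edges."""
    return focal_px * strafe_m / depth_m


f = 1000.0  # assumed focal length in pixels
print(rotation_shift_px(f, math.radians(1)))  # same for near and far objects
print(translation_shift_px(f, 0.05, 0.5), translation_shift_px(f, 0.05, 50))
```

A 1-degree turn shifts the centre about 17.5 pixels at any depth, while a 5 cm strafe moves an object 0.5 m away by 100 pixels but one 50 m away by just 1 pixel.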

If you've seen the glitching of ASW 1.0 versus ASW 2.0 frame rate amplification on Oculus Rift, it doesn't look too different from HEVC's "minor glitching" around parallax edges (HEVC uses coding units instead of traditional macroblocks). Say HEVC loses a relatively unimportant bit: the image suddenly corrupts slightly and glitches in a weird, non-rectangular shape, usually around the edges of objects. It's not a squarish glitch like MPEG1.

Some (not all) of the HEVC minor glitches are closer to an interpolation-style artifact than to an MPEG1-style artifact. Both can still happen, but there are many different kinds of subtle glitching, and the Venn diagram of glitch appearance overlaps between compressors and frame rate amplifiers. That said, glitches keep getting rarer (as long as you don't lose data).

It's really weird how the GPU (in ASW) created object-edge glitches (while strafing) that look much more like an HEVC glitch (from, say, a single lost bit) than like old MPEG2 macroblock corruption artifacts. Not exactly the same, but a very different and somewhat subtler-looking kind of weird glitch.

Note: Remember, newer video file formats no longer use traditional macroblocks in the same style as MPEG1. Future video file formats in ten or twenty years from now may utilize vectorized 3D geometry or various equivalents of timecoded photons. AI compression technology is now being considered as part of H.266 for example! And there is early discussion of framerateless video file formats (maybe the H.268 or H.269 era). I posted this reply on AVSFORUM about the conceptual framerateless video file format. We're not in Macroblock City, Kansas anymore, Toto.

Latency reduction requires the frame-amplifier logic to have more access to the original ground truth (e.g. mouse buttons, mouse movements and keyboard movements). Treating interpolation as a hot potato is misguided, since every single Netflix video uses interpolation technology as part of its compression.

Even when you go to the cinema, interpolation is still used, with lighter video compression ratios (even 300 megabit/sec H.264 and HEVC files on an HDD) feeding a digital projector. Uncompressed 4K E-Cinema (36-bit, 12bpc) is roughly 1 gigabyte/sec, and a 2-hour film would have required a ~7 terabyte SSD or RAID to handle that spew, which is not what E-Cinema uses due to the cost of shipping those disks. For 8K 24fps, uncompressed 36-bit comes to roughly 26 to 28 terabytes over 2 hours (depending on whether "8K" means 7680 or 8192 pixels wide), a firehose of almost 4 gigabytes per second. A 3-hour epic comes to ~40 terabytes. Even with lossless compression, 36-bit color data doesn't compress very well (e.g. 2:1 ratios). So they still use H.264 and HEVC to make all of this manageable, and interpolation math is integral to how those compression formats work. But it's perceptually lossless; there's no macroblocking.
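The uncompressed numbers can be checked with a quick sketch. It assumes 36 bits per pixel (12 bits per channel), 24 fps, and decimal gigabytes/terabytes; "8K" is shown both as 7680 and as 8192 pixels wide, since the post doesn't say which:

```python
def uncompressed_size(width: int, height: int, bits_per_pixel: int, fps: float, hours: float):
    """Return (bytes per second, total bytes) for uncompressed video."""
    bytes_per_second = width * height * bits_per_pixel / 8 * fps
    return bytes_per_second, bytes_per_second * hours * 3600


for name, w, h in [("DCI 4K", 4096, 2160), ("8K UHD", 7680, 4320), ("DCI 8K", 8192, 4320)]:
    rate, total = uncompressed_size(w, h, 36, 24, 2)
    print(f"{name}: {rate / 1e9:.2f} GB/s, {total / 1e12:.1f} TB per 2 hours")
```

Passing `hours=3` gives the 3-hour epic figure.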

Interpolators/Extrapolators/Reprojectors are much less laggy and less artifacty with extra ground truth!

We were already doing 90fps reprojection on 2015 GPUs in 2017 VR games, and today the RTX 4090 is capable of >1000fps "simple reprojection" if that was the only thing it did. Why aren't we building reprojection-helper APIs into Vulkan or DirectX, theoretically speaking? Sure, game developers would need to use the APIs. We should mix Optical Flow (interpolation-style techniques) with reprojection techniques, as multipliers of each other, and add frame rate amplification helper APIs to the 3D APIs, because that reduces artifacts, latency and processing. Obviously this requires developer buy-in, but you can piggyback off VR developers and filter that back into non-VR by (eventually) making it almost as easy as today's workflow.

It's easier to see artifacts in 3D because of the ginormous bandwidth, the greater sensitivity to artifacts that 3D affords, and the greater sensitivity to latency that realtime interactivity affords. So there will always be pros and cons. But the artifacts will get smaller and smaller until they hopefully fall below human perception thresholds. It's an iterative improvement process, which will be helped by ground-truth APIs built into DirectX / Vulkan / etc. It may be a decade before the API vendors figure it out, but the ball has started rolling.

Yes, you can still render everything (disable DLSS), and you can still keep video uncompressed (use RAW not H.264). We have a choice. Twitch esports may remain DLSS-free obviously, but esports is only a slice of the interactive 3D game/content pie. Right Tool for Right Job.

Eureka. Now you got it!


Chief Blur Buster

Owner of BlurBusters

- Joined: Aug 18, 2017
- Messages: 430

Part 4 of 4

I sent links to these posts to NVIDIA to see if they would do it for DLSS 4.0.

Who knows!?

The Concept of Multiple Concurrent Frame Rates In Same Scene

Different framerate for pans/turns/scrolls versus for in-world objects (e.g. enemies, NPCs, physics).

Some old games (Quake, Bioshock) updated object physics at a low frame rate independently of the display. This shows as weird stutter despite high frame rates.
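A sketch of why a low physics tick rate shows up as stutter even at high display frame rates. The 20 Hz physics, 120 Hz display and 1200 px/s speed are hypothetical numbers, and the renderer here simply draws the latest physics state without interpolating between ticks:

```python
def displayed_positions(physics_hz: int, display_hz: int, speed_px_per_s: float,
                        frames: int) -> list:
    """Object x-position drawn on each display refresh when physics only ticks at
    physics_hz and the renderer draws the latest physics state without interpolation."""
    positions = []
    for frame in range(frames):
        last_tick = frame * physics_hz // display_hz  # most recent completed physics step
        positions.append(speed_px_per_s * last_tick / physics_hz)
    return positions


# Hypothetical: 20 Hz physics shown on a 120 Hz display, object moving 1200 px/s
print(displayed_positions(20, 120, 1200, 12))
```

The object sits still for six refreshes and then jumps 60 pixels, which is the stepping you see despite a 120 fps display.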

Oculus ASW generates hybrid frame rates too (90fps headturns with 45fps hand tracking).

But there's actually a massively clever fix to that (keep reading...)

Reprojection is Low Compute with Ground Truth APIs (e.g. mouse position)

If you know the amount of mouse movement between original rendered frames, you can reproject (like Oculus Rift does) without a computationally expensive analysis of the rendered frame (like Optical Flow). That's why reprojection should be able to run at 500fps on an RTX 4090: Oculus Rift could already reproject at 90fps on a GTX 980 / GTX 1070!

Ground Truth APIs (e.g. relaying translational movements to the Vulkan/DirectX API or GPU drivers) will become necessary for proper low-compute reprojection. Game developers will need to hook into these APIs for low-latency reprojection.
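A sketch of how little computation rotation-only reprojection needs once the mouse delta is known. This is textbook pinhole-camera math, not Oculus's or NVIDIA's implementation; the function names, the 0.05 degrees-per-count sensitivity, and the 1920-pixel, 90-degree view are hypothetical. A real reprojector would also handle pitch and apply this across the whole image (e.g. via a full-screen mesh):

```python
import math


def yaw_from_mouse(counts: int, degrees_per_count: float) -> float:
    """Convert raw mouse counts since the last full render into a yaw angle (radians)."""
    return math.radians(counts * degrees_per_count)


def reproject_x(x_px: float, width_px: int, hfov_deg: float, yaw_rad: float) -> float:
    """New horizontal screen position of a pixel after a pure yaw (pinhole camera model).
    x_px is measured from the left edge. Only the mouse delta is needed, not image analysis."""
    focal = (width_px / 2) / math.tan(math.radians(hfov_deg) / 2)  # focal length in pixels
    angle = math.atan((x_px - width_px / 2) / focal)                # this pixel's ray angle
    return width_px / 2 + focal * math.tan(angle - yaw_rad)         # turning right moves it left


# Hypothetical: 20 mouse counts at 0.05 degrees/count = 1 degree turn, 1920 px wide, 90 degree FOV
yaw = yaw_from_mouse(20, 0.05)
print(round(reproject_x(960, 1920, 90, yaw), 2))
```

That's a handful of trig operations per pixel column, versus analyzing image content as Optical Flow must.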

Sidestep Moore's Law More Easily

Latency is reduced because the ground truth APIs (translation data from mouse position or keyboard movements) reduce the controller-to-photons latency of important global movements (turns/pans/scrolls).

Reprojection can work with both 3rd-person/1st-person (turns) and overhead RTS (scroll/pan) games. Strobeless blur reduction in everything -- this would easily scale to our 1000fps 1000hz future without needing further Moore's Law.

Optical Flow can still be part of this, working in concert with reprojection. More data for the neural network (controller position / 3D translation position) = less lookahead needed = less frame processing needed = less lag.
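A simplified latency model for the interpolation-versus-reprojection point. It assumes evenly paced output and ignores generation cost; the function is hypothetical, not how DLSS 3 actually schedules frames. Interpolation has to wait for the next real frame, while reprojection extrapolates from the latest frame plus fresh input, so it holds nothing back:

```python
def interpolation_holdback_ms(base_fps: float, multiplier: int) -> float:
    """Simplified model of frame interpolation with evenly paced output.

    An in-between frame can only be generated once the *next* real frame exists.
    When real frame N+1 finishes, the (multiplier - 1) generated frames between N and N+1
    are shown first, so N+1 reaches the screen this much later than it could have.
    Generation cost itself is ignored.
    """
    return (multiplier - 1) * 1000.0 / (base_fps * multiplier)


for fps in (30, 60, 100):
    print(fps, "fps base, 2x interpolation:", round(interpolation_holdback_ms(fps, 2), 1), "ms")
```

At a 60fps base with 2x interpolation, that is about 8.3 ms of hold-back; reprojection's equivalent figure is zero, because it never waits for a future frame.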

For VR, We Already Have Hybrid Frame Rates In The Same Scene

For example, with Oculus Rift's 45fps-to-90fps reprojection, certain things stutter (45fps hand tracking) while the background scrolls smoothly (90fps) during head turns.

But if we had 100fps reprojected to 500fps, then even physics objects like enemy movements would still look smooth, just more motion-blurred (due to frametime persistence) than turns (which benefit from reprojection-based frame rate amplification).
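The persistence blur in that comparison can be estimated with a one-line formula, matching the familiar Blur Busters rule of thumb that each millisecond of sample-and-hold persistence adds about one pixel of blur per 1000 pixels/second of motion. The 2000 px/s speed is a hypothetical example, and the sketch assumes a unique frame on every refresh with no strobing:

```python
def persistence_blur_px(speed_px_per_s: float, fps: float) -> float:
    """Approximate eye-tracking motion blur on a sample-and-hold (non-strobed) display.
    Each unique frame stays lit for 1/fps seconds, so motion at v px/s smears over v/fps px."""
    return speed_px_per_s / fps


# Hypothetical enemy moving 2000 px/s: 100 fps physics vs. 500 fps reprojected turns
for fps in (100, 500):
    print(fps, "fps ->", persistence_blur_px(2000, fps), "px of blur")
```

So the 100fps enemy smears over about 20 pixels, while 500fps reprojected turns smear over about 4.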

Not everything in the game world *needs* to run at the same frame rate, if motion blur is acceptable for such movements.

Different things running at different frame rates on the same screen is very common with reprojection (Oculus Rift), and it looks ugly when some of those frame rates fall below the stutter/flicker detection threshold.

Hybrid frame rates may not be appropriate for twitch FPS, but they are absolutely perfect for things like Microsoft Flight Simulator. Everything inside the cockpit would reproject at high frame rates (e.g. 500fps), while the world outside flows along at a lower frame rate. This would be excellent in virtual reality, since stutter during head turns (while reaching for knobs) is much worse than stutter while the world scrolls by. No stutter or blur while looking around the cockpit as you frantically reach for knobs and levers, and less motion sickness without the stutter.

Also, hybrid frame rates will work well in solo FPS games like Cyberpunk 2077. You'd have no motion blur during turns on an ultra-Hz monitor, thanks to reprojection-based frame rate amplification: 500fps Cyberpunk 2077 mouseturns despite fancy RTX effects running at about 75-100fps. Custom tweaks can be adjusted in game menus (frame rate of self-movement versus frame rate of world physics), since low frame rates for world physics leave a hell of a lot of GPU headroom for framerate amplification via reprojection. With the right ratios, you could perhaps get 500fps+ at 1080p-1440p in a game like Cyberpunk 2077 on today's RTX 4090. This is estimated from how much GPU overhead reprojection used on older GPUs (e.g. GTX 980), and we all know the RTX 4090 is massively faster than the 980 in memory bandwidth and reprojection capability.

But if every frame rate could be guaranteed to be perfectly framepaced and triple-digit, then no stuttering would be visible at all! Just different amounts of persistence motion blur (if using reprojection on a non-strobed display). I'll write about this in my sequel to the Frame Rate Amplification Article.

Hybrid Frame Rates Stop Being Ugly if 100fps Minimum + Well Framepaced + Sample And Hold

Hybrid frame rates will probably be common in future frame rate amplification technologies, and should no longer be verboten, as long as best practices are done:

(A) Low frame rates are acceptable for slower movements of small objects, but keep them triple-digit to prevent visible stutter

(B) High frame rates are mandatory for global fast movements (flick turns, pans, scrolls, etc)

Those are the magic "Best Practices" that legitimize adding low-compute reprojection to the RTX 4090 in a future DLSS 4.0 graphics driver download. NVIDIA should proceed with this, and game developers can hook into APIs to provide additional ground truth (e.g. between-frame positional data from mouse movements) for low-compute reprojection.

Optional: If possible (due to the stroboscopic effect of finite frame rates), add an optional GPU motion blur effect selectively, only to low-framerate objects. For example, for a fast-flying rocket running at only 100 frames per second, it's acceptable to motion-blur its trajectory to prevent stroboscopic stepping like the mouse-arrow effect. This could be a setting that is toggled on/off (a completely separate GPU motion blur setting for global turns/scrolls/pans versus individual enemy/character/object movements).
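A sketch of the stroboscopic gap and a simple box-filter smear to close it. The 5000 px/s rocket is a hypothetical example, and accumulating a handful of sub-frame positions is just one common way to render motion blur, not a prescribed method:

```python
def stroboscopic_gap_px(speed_px_per_s: float, fps: float) -> float:
    """Distance an object jumps between consecutive frames (the visible 'stepping')."""
    return speed_px_per_s / fps


def smear_positions(start_x: float, speed_px_per_s: float, fps: float, samples: int = 5) -> list:
    """Sub-frame positions to accumulate (average) when drawing one frame of a
    low-framerate object, so its path becomes one continuous streak (box-filter motion blur)."""
    if samples < 2:
        raise ValueError("need at least 2 samples to span the frame interval")
    gap = stroboscopic_gap_px(speed_px_per_s, fps)
    return [start_x + gap * i / (samples - 1) for i in range(samples)]


# Hypothetical rocket: 5000 px/s, updated at 100 fps
print(stroboscopic_gap_px(5000, 100), smear_positions(0, 5000, 100))
```

The rocket would otherwise jump 50 pixels per frame; spreading each frame's image across that 50-pixel span joins the steps into one streak.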

The master frame rate could be 100fps locked, and the rest of the frames generated by reprojection (and occasional Optical Flow interpolation help).

The frame rates of things like explosions could stay at 100fps to keep GPU load manageable, while things like turns (left/right) would use reprojection technology. The RTX 4090 should easily be capable of >500fps reprojection in Cyberpunk 2077 with its current ~1 terabyte/sec memory bandwidth, and this is low-hanging fruit just waiting to be picked by game developers!

In other words, don't use 45fps like old ASW on Rift did.

Instead of 45fps-reproject-90fps, use min-100fps-reproject-anything-higher. Then frame rate amplification can be hybridized at different frame rates for different objects on the screen -- that becomes visually comfortable once every single object is running at triple-digit frame rates!
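A sketch of the "min-100fps, reproject-anything-higher" idea as a refresh schedule. The 100fps floor comes from the post; the function name and the requirement that the display rate be an exact multiple of the render rate are simplifying assumptions of mine:

```python
MIN_FULL_RENDER_FPS = 100  # the post's suggested triple-digit floor


def frame_schedule(display_hz: int, full_render_fps: int, refreshes: int) -> str:
    """Label each display refresh: 'R' = full render, 'p' = reprojected frame.

    Hypothetical pacing sketch: assumes display_hz is an integer multiple of
    full_render_fps so full renders land on evenly spaced refreshes.
    """
    if full_render_fps < MIN_FULL_RENDER_FPS:
        raise ValueError("base frame rate below triple digits; expect visible stutter")
    if display_hz % full_render_fps:
        raise ValueError("display_hz must be a multiple of full_render_fps in this sketch")
    ratio = display_hz // full_render_fps
    return "".join("R" if i % ratio == 0 else "p" for i in range(refreshes))


print(frame_schedule(500, 100, 10))
```

At 500Hz with 100fps full renders, every fifth refresh is a real render and the four in between are reprojected; a 45fps base is rejected outright.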

In theory, reprojection could be implemented as a parallel-layer API running between the game and the graphics drivers, much like VR APIs do it, except overlaid on top of non-VR games.

A generic industry-standard API would be ideal to advance the state of the art: basically, telling Vulkan the 6DoF movements. It would then be the drivers' responsibility to improve frame rate amplification based on that data without needing a full polygonal render.

A generic DirectX / Vulkan API extension for interframe data could feed into whatever frame rate amplification system the vendor uses (and/or third-party plugins, such as Oculus Rift's reprojection implementation). This would apply equally to VR and non-VR use cases. Reprojection in DOTA2 could make 500fps at 500Hz easy, since it's largely a 2D pixel-shift reprojection: the 3D frames only need a full render once every 1/100sec or 1/200sec (200fps full renders), and the reprojection adds the extra frame rate that enables "Strobeless ULMB" -- blurless sample-and-hold.
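A toy version of 2D pixel-shift reprojection, assuming an overhead camera whose scroll moves the whole image by a whole number of pixels (a real 3D scene is not a pure translation), might look like this. Pixels whose source lies outside the last full render come back as `None`. These are the newly revealed pixels that some other method still has to fill.

```python
from typing import List, Optional

Frame = List[List[Optional[int]]]


def shift_frame(frame: Frame, dx: int, dy: int) -> Frame:
    """Reproject a frame by moving its content (dx, dy) pixels.

    Pixels whose source lies outside the last full render are returned as None.
    """
    h, w = len(frame), len(frame[0])
    out: Frame = [[None] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            sx, sy = x - dx, y - dy  # where this pixel was in the last render
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = frame[sy][sx]
    return out


last_render = [[1, 2, 3],
               [4, 5, 6]]
print(shift_frame(last_render, 1, 0))  # [[None, 1, 2], [None, 4, 5]]
```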

Low-compute reprojection via ground truth APIs = makes possible excellent sample-and-hold motion blur reduction without strobing or flicker eyestrain!

With sample-and-hold, you don't have the multiple-image problem that reprojection has in VR (VR headsets are strobed). The major reprojection problem only occurs on strobed displays -- sudden double/multi-image effects -- which require a GPU motion blur effect (mandatory) to fix the image duplication (akin to 30fps on a 60Hz CRT). This isn't as big a problem on sample-and-hold displays, as long as the lowest-framerate objects are kept at triple-digit frame rates. Then everything looks pretty smooth, even 100fps enemies during a 500fps mouseturn! (As long as you're not jittering gametime:photontime, aka erratic frame rates.)
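A first-order model of the difference, assuming the eye tracks the moving object and ignoring pixel response time, is sketched below. On a strobed display each unique frame is flashed `refresh_hz / fps` times, so a tracked object shows that many copies. On sample-and-hold each unique frame is held for `1/fps` seconds, so the copies smear into a blur roughly `speed / fps` pixels wide instead.

```python
def motion_artifact(refresh_hz: float, fps: float, speed_px_per_s: float, strobed: bool):
    """Rough model of what a tracking eye sees for content at `fps` on a display.

    Strobed: number of repeated images per unique frame.
    Sample-and-hold: approximate blur width in pixels.
    """
    if strobed:
        return ("multi-image", refresh_hz / fps)
    return ("blur_px", speed_px_per_s / fps)


print(motion_artifact(60, 30, 1000, strobed=True))     # ('multi-image', 2.0)
print(motion_artifact(500, 100, 1000, strobed=False))  # ('blur_px', 10.0)
```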

Optical Flow and image-autocomplete neural network AIs (part of DLSS 3.0) can be used to help with reprojection parallax-reveal problems, like things revealed behind objects during regular reprojection. A fixed cap can be used (e.g. 5% of pixels) to prevent varying Optical Flow rendering workloads (fluctuations in GPU workload during frame rate amplification).
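A minimal sketch of such a fixed cap, assuming the revealed pixels arrive already sorted by priority: at most a fixed percentage of the frame goes to the expensive infill path and the rest gets a cheap fallback. The function name and the 5% default (taken from the example above) are illustrative. Integer arithmetic keeps the budget exact.

```python
def split_reveal_work(revealed, total_pixels: int, cap_percent: int = 5):
    """Return (expensive, cheap): at most cap_percent of the frame's pixels go
    to the expensive infill path, the remainder to a cheap fallback fill.

    Assumes `revealed` is already sorted by priority (most important first).
    """
    budget = total_pixels * cap_percent // 100
    return revealed[:budget], revealed[budget:]


expensive, cheap = split_reveal_work(list(range(200_000)), 1920 * 1080)
print(len(expensive), len(cheap))  # 103680 96320
```

Because the budget depends only on frame size, the expensive path costs at most the same amount every frame, however large the reveal is.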

So different algorithms may be required for a highly variable amount of parallax reveal, such as a low resolution that gradually up-rezzes over a series of generated frames between the original frames. This matters for large parallax-reveal events: suddenly strafing past a wall makes everything behind it visible at once, a potentially full-screen parallax reveal that can cause a sudden stutter during frame rate amplification, which needs to be solved. This will probably take a great deal of experimentation by researchers over the next ten years.

Algorithms need to spread frame rate amplification workload as consistently as possible over multiple frames -- in the game of keeping gametime:photontime consistent.
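One simple way to keep the per-frame cost flat is a work queue with a fixed per-frame budget, sketched below under the assumption that revealed regions are split into "tiles". The class and the tile concept are hypothetical. The sketch only tracks which tiles get processed on each generated frame. In the gradual up-rez approach described above, tiles still waiting would keep showing a low-resolution placeholder.

```python
from collections import deque


class AmortizedInfill:
    """Spread a large parallax-reveal backlog over several generated frames so
    each frame does a bounded, roughly constant amount of infill work."""

    def __init__(self, per_frame_budget: int):
        self.per_frame_budget = per_frame_budget
        self.pending = deque()

    def submit(self, tiles):
        """Queue tiles revealed by a reprojection step."""
        self.pending.extend(tiles)

    def run_frame(self):
        """Take at most per_frame_budget tiles to refine on this generated frame."""
        n = min(self.per_frame_budget, len(self.pending))
        return [self.pending.popleft() for _ in range(n)]


infill = AmortizedInfill(per_frame_budget=100)
infill.submit(range(250))  # e.g. a sudden strafe past a wall
print([len(infill.run_frame()) for _ in range(4)])  # [100, 100, 50, 0]
```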

Regardless of how the frames are produced, the important thing is that for each framerate component, gametime:photontime time-relativeness needs to be sub-millisecond accurate, since jitter is extra persistence display motion blur (a 1ms error in gametime:photontime = 1 extra pixel of jitter at 1000 pixels/sec). Higher resolutions amplify this: you need 4000 pixels/sec on a 4K display to go the same speed as 1000 pixels/sec on an old XGA display, and the faster pixels/sec means tinier jitter margins are easier to see today than a long time ago. Fast jitter (e.g. 100 jitters per second) becomes extra persistence motion blur, thanks to the stutter-to-blur continuum, as demoed on TestUFO. Not everyone realizes we need to framepace gametime:photontime to sub-millisecond accuracy today.
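Here is a worked version of the jitter arithmetic, assuming the timing error is measured in milliseconds (so 1ms of gametime:photontime error at 1000 pixels/sec displaces the image by 1 pixel). The screen-width scaling uses 1024 pixels for XGA and 3840 for 4K UHD, which gives 3750 pixels/sec, i.e. the "roughly 4000" in the text.

```python
def jitter_px(speed_px_per_s: float, timing_error_ms: float) -> float:
    """Image displacement caused by a gametime:photontime timing error."""
    return speed_px_per_s * timing_error_ms / 1000.0


def equivalent_speed(speed_px_per_s: float, old_width_px: int, new_width_px: int) -> float:
    """Pixels/sec needed to cross the screen in the same time at a new width."""
    return speed_px_per_s * new_width_px / old_width_px


fast = equivalent_speed(1000, 1024, 3840)  # XGA -> 4K: 3750 px/s
print(jitter_px(1000, 1.0), jitter_px(fast, 1.0))  # 1.0 3.75
```

The same 1ms error is therefore almost four times as visible on the 4K screen, which is why sub-millisecond framepacing matters more now.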

Extra motion blur in enemies/NPCs is a lot less objectionable than extra motion blur during turns (FPS turn left/right) or scrolls/pans (DOTA/RTS), so it is acceptable to run reprojection at a higher frame rate than Optical Flow and/or original renders, as long as the minimum frame rate in a hybrid framerate situation is always triple-digit and has no erratic component (perfect framepacing in all concurrent frame rates).

Even when visible random stutter/jitter disappears, it can still be there as EXTRA motion blur. So frame rate amplification designs need to framepace those additional frames correctly, without jitter (whether caused by math errors, power management, Windows, per-frame workload fluctuations, etc).
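To catch this kind of hidden jitter, one could compare actual presentation timestamps against an ideal fixed cadence. The sketch below assumes the timestamps are already available in milliseconds and anchors the ideal cadence at the first frame. The function name and sample data are hypothetical.

```python
def max_pacing_error_ms(present_times_ms, fps: float) -> float:
    """Largest deviation of actual present times from an ideal fixed cadence
    anchored at the first presented frame."""
    period_ms = 1000.0 / fps
    t0 = present_times_ms[0]
    return max(abs(t - (t0 + i * period_ms)) for i, t in enumerate(present_times_ms))


# 500fps target (2ms period); the third frame is presented 0.5ms late.
print(max_pacing_error_ms([0.0, 2.0, 4.5, 6.0, 8.0], 500))  # 0.5
```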

A lot of people's motion sickness is caused by global motion blur during pans/turns/scrolls, so fixing that solves 90% of low-framerate/blur motion sickness. Ultra high frame rates (that are also well framepaced) are key. If DLSS does not work well with VRR, this may be caused by fluctuations in rendertime/reprojectiontime causing rapid jitters in gametime:photontime. Fixing that will be important, as will going to ultra high frame rates via low-compute reprojection APIs.

Sitting NPCs running at lower frame rates don't even stutter at 100fps. The game could simply do at least 100 Present() calls per second, with some API improvements (e.g. a reprojection API layer) to add large-ratio frame rate amplification.

The workflow is to focus on global motion blur (e.g. turns/scrolls/pans) via simpler reprojection. This could allow DLSS to reach 8x or 10x frame rate amplification ratios with an existing RTX 4090 -- no GPU upgrade needed. The same approach could apply to FSR, XeSS, and other competing frame rate amplification technologies.
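The arithmetic behind those ratios, assuming a 100fps base render, is shown below. The helper returns the amplification ratio, how many generated frames are needed per real render, and how much time each output frame gets. For 8x to 10x, that means 7 to 9 reprojected frames per render within 1 to 1.25ms each.

```python
def amplification_plan(render_fps: float, output_fps: float):
    """Amplification ratio, generated frames per real render, and the time
    budget (ms) available for each output frame."""
    ratio = output_fps / render_fps
    generated_per_render = ratio - 1
    per_output_frame_ms = 1000.0 / output_fps
    return ratio, generated_per_render, per_output_frame_ms


print(amplification_plan(100, 1000))  # (10.0, 9.0, 1.0)
print(amplification_plan(100, 800))   # (8.0, 7.0, 1.25)
```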

That being said: Right Tool For Right Job.

I sent links to these posts to NVIDIA to see if they would do it for DLSS 4.0.

Who knows!?

The Concept of Multiple Concurrent Frame Rates In Same Scene

Different framerate for pans/turns/scrolls versus for in-world objects (e.g. enemies, NPCs, physics).

Some old games (Quake, Bioshock) updated object physics at a low frame rate independently of the display. This shows as weird stutter despite high frame rates.

Oculus ASW generates hybrid frame rates too (90fps headturns with 45fps hand tracking).

But there's actually a massively clever fix to that (keep reading...)

Reprojection is Low Compute with Ground Truth APIs (e.g. mouse position)

If you know the amount of mouse movement between originally rendered frames, you can reproject (like Oculus Rift does) without a computationally expensive analysis of the rendered frame (like Optical Flow). That way, reprojection could reach 500fps on an RTX 4090, given that Oculus Rift could already reproject at 90fps on a GTX 980 / GTX 1070!
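To illustrate why mouse ground truth makes this cheap, the sketch below converts raw mouse counts into a yaw angle, then into the horizontal image shift at the centre of the screen. It assumes a pure rotation and a pinhole camera model. The degrees-per-count sensitivity is a hypothetical value. A real yaw reprojection is a perspective warp rather than a uniform shift, so pixels away from the centre move by slightly different amounts.

```python
import math


def mouse_counts_to_yaw(counts: int, degrees_per_count: float) -> float:
    """Yaw change in radians for a given number of raw mouse counts."""
    return math.radians(counts * degrees_per_count)


def yaw_to_center_shift_px(dyaw_rad: float, screen_width_px: int, hfov_deg: float) -> float:
    """Horizontal image shift at screen centre for a pure yaw rotation,
    using a pinhole-camera model."""
    focal_px = (screen_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)
    return focal_px * math.tan(dyaw_rad)


dyaw = mouse_counts_to_yaw(50, 0.02)  # hypothetical sensitivity: about 1 degree of turn
print(round(yaw_to_center_shift_px(dyaw, 1920, 90), 2))  # 16.76
```

No analysis of the rendered image is needed: the shift comes straight from the input data.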

Ground Truth APIs (e.g. relaying translational movements to the Vulkan/DirectX API or GPU drivers) will become necessary for proper low-compute reprojection. Game developers will need to hook into these APIs for low-latency reprojection.

Sidestep Moore's Law More Easily

Latency is reduced because the ground truth APIs (translation data from mouse position or keyboard movements) reduce the controller-to-photons latency of important global movements (turns/pans/scrolls).

Reprojection can work with both 3rd-person/1st-person games (turns) and overhead RTS games (scrolls/pans). Strobeless blur reduction in everything -- this would easily scale to our 1000fps 1000Hz future without needing further Moore's Law progress.

Optical Flow can still be part of this, working in concert with reprojection. More data for the neural network (controller position / 3D translation position) = less lookahead needed = less frame processing needed = less lag.

For VR, We Already Have Hybrid Frame Rates In The Same Scene

For example, with Oculus Rift's 45fps-to-90fps reprojection, certain things sometimes stutter (45fps hand tracking) while the background scrolls smoothly (90fps) during head turns.

But if we had 100fps reprojected to 500fps, then even physics objects like enemy movements would still look smooth, just more motion blurred (due to frametime persistence) than turns (which benefit from reprojection-based frame rate amplification).

Not everything in the game world *needs* to run at the same frame rate, if motion blur is acceptable for such movements.

Different things running at different frame rates on the same screen is very common with reprojection (Oculus Rift), and it's ugly when some of those frame rates are below the stutter/flicker detection threshold.

Hybrid frame rates may not be appropriate for twitch FPS games, but they are absolutely perfect for things like Microsoft Flight Simulator. Everything inside the cockpit would reproject at high frame rates (e.g. 500fps) while the world outside flows along at a lower frame rate. This would be excellent in virtual reality, given that stutters during head turns (while reaching for knobs) are much worse than stutters in the world scrolling by. No stutters or blur while looking around the cockpit as you frantically reach for knobs and levers, and less motion sickness without the stutter.

Also, hybrid frame rates will work well in solo FPS games like Cyberpunk 2077. You'd have no motion blur during turns on an ultra-Hz monitor, thanks to reprojection-based frame rate amplification: 500fps Cyberpunk 2077 mouseturns despite fancy RTX effects running at about ~75-100fps. Custom tweaks could be adjusted in game menus (frame rate of self movements versus frame rate of world physics), since low frame rates in world physics leave a hell of a lot of GPU headroom to framerate-amplify via reprojection. With the right ratios, you could perhaps get 500fps+ at 1080p-1440p in a game like Cyberpunk 2077 on today's RTX 4090. This estimate is based on how much GPU overhead reprojection uses on older GPUs (e.g. GTX 980), and we all know the RTX 4090 is massively faster than the 980 in memory bandwidth and reprojection capability.

But if all frame rates could be guaranteed perfectly framepaced and triple-digit, then no stuttering would be visible at all! Just different amounts of persistence motion blur (if using reprojection on a non-strobed display). This is something I will write about in my sequel to the Frame Rate Amplification Article.

Hybrid Frame Rates Stop Being Ugly if 100fps Minimum + Well Framepaced + Sample And Hold

Hybrid frame rates will probably be common in future frame rate amplification technologies, and should no longer be verboten, as long as best practices are done:

(A) Low frame rates are acceptable for small-object slower movements, but keep it triple-digit to prevent visible stutter

(B) High frame rates are mandatory for global fast movements (flick turns, pans, scrolls, etc)
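The two best practices can be expressed as a simple checker, sketched here with assumed thresholds: 100fps as the triple-digit floor for rule (A), and a hypothetical 500fps as "high" for global motion in rule (B). Each layer is described by its frame rate and whether it carries global motion (turns, pans, scrolls). The layer names are illustrative.

```python
def check_hybrid_config(layers: dict, min_fps: int = 100, global_min_fps: int = 500) -> list:
    """Check a hybrid frame rate plan against rules (A) and (B).

    layers maps a name to (fps, is_global_motion).
    """
    problems = []
    for name, (fps, is_global) in layers.items():
        if fps < min_fps:  # rule (A): nothing below triple digits
            problems.append(f"{name}: {fps}fps is below the {min_fps}fps minimum")
        if is_global and fps < global_min_fps:  # rule (B): global motion must be high
            problems.append(f"{name}: global motion at {fps}fps, want {global_min_fps}fps+")
    return problems


plan = {
    "mouse_turn": (500, True),
    "enemies": (100, False),
    "hand_tracking": (45, False),  # ASW-style half-rate layer
}
print(check_hybrid_config(plan))
# ['hand_tracking: 45fps is below the 100fps minimum']
```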
