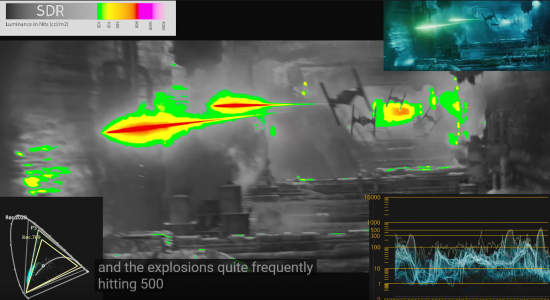

What madpistol said. I keep my screen in SDR mode with a very low OLED light setting (~25-30) for general work and SDR gaming. It only enters HDR mode when HDR content is displayed in my media player software or when a game turns it on, and then I let the brightness crank up. I fully realize that HDR mode should be allowed to take the pixels to 100%, but I only use my screen in a dim office, so I have set my HDR max to 80% OLED light... the HDR effect is still great compared to any consumer HDR monitor. It really is. These screens aren't about getting 1000 nits in HDR... they're about everything else. The image is so ridiculously good compared to ANYTHING else on the market that having the absolute brightest pop on a bright HDR scene just doesn't seem that important.

Sure, someday maybe we can have it all on brightness, but this is by far good enough for now. Every single evening when I get home and turn it on, I'm instantly struck by how much better it is than EVERY other top-end IPS or VA panel I've ever used. PC or TV... it's just a world better.

And that makes me want to baby it just a LITTLE BIT because I want to get a bunch of years out of it before having to live with any burn-in.

So I would also recommend:

Use dark mode religiously on all your common websites, and set the taskbar to auto-hide and to a dark grey color.

It really doesn't hurt to have a second monitor if you need to keep static info up for many hours on end or if you have to run something with solid white backgrounds all day long. I'm personally not willing to run Word or Excel or Fusion 360 full screen all day long at static full white on my CX. I think you could for several years at a low brightness, but it's a personal choice. I put those on the secondary monitor for the mundane work. But I do browse, watch YouTube, game, etc. on it all night.

Just keep the remote in front of you and TURN IT OFF when you know you are walking away for a long period of time.
