MistaSparkul

2[H]4U

- Joined

- Jul 5, 2012

- Messages

- 3,465

Yeah, I get them mixed up b/c I have a C1 in my living room. You are prob right. Either way, same end result.

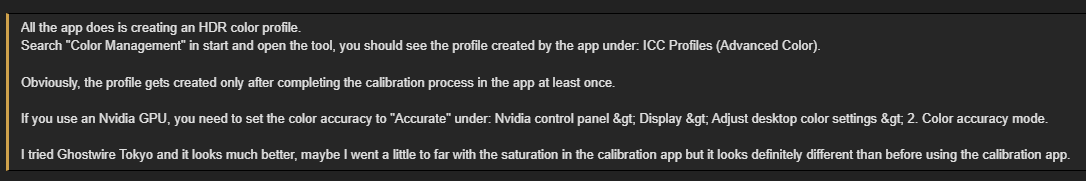

I tried switching between HGiG and DTM OFF and there is definitely zero difference between the two modes after using the Windows HDR Calibration tool. This makes sense: once the calibration is done, Windows Auto HDR knows the TV's capabilities and no longer sends any signal beyond what the TV can display. If the TV never receives anything above 750/800 nits, there is nothing left for static tone mapping to do.
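To put rough numbers on that reasoning, here is a toy sketch in Python. It is not LG's actual processing: the real tone curves are not public, so `hgig_clip`, `static_rolloff`, the 800 nit panel peak, and the 75% knee are all assumptions made up for illustration. The only point is that a roll-off curve has nothing to compress when the source already tops out at the panel's peak.

```python
DISPLAY_PEAK_NITS = 800.0  # assumed panel peak, taken from the 750/800 figure above


def hgig_clip(nits, display_peak=DISPLAY_PEAK_NITS):
    """Hypothetical HGiG model: track the signal 1:1, hard clip at panel peak."""
    return min(nits, display_peak)


def static_rolloff(nits, content_peak, display_peak=DISPLAY_PEAK_NITS, knee=0.75):
    """Hypothetical static tone map (stand-in for DTM OFF).

    Tracks 1:1 up to a knee, then linearly squeezes the range
    [knee, content_peak] into [knee, display_peak].
    """
    if content_peak <= display_peak:
        # Source never exceeds the panel: no compression needed.
        return min(nits, display_peak)
    knee_nits = knee * display_peak
    if nits <= knee_nits:
        return nits
    nits = min(nits, content_peak)
    scale = (display_peak - knee_nits) / (content_peak - knee_nits)
    return knee_nits + (nits - knee_nits) * scale


def max_difference(source_peak, samples):
    """Largest gap between the two models for a source limited to source_peak."""
    return max(
        abs(hgig_clip(min(s, source_peak)) - static_rolloff(min(s, source_peak), source_peak))
        for s in samples
    )


samples = [0, 100, 400, 600, 700, 800, 1000, 2000, 4000]

# Calibrated source: Windows caps output at the panel's peak.
print(max_difference(800.0, samples))

# Uncalibrated source: highlights up to 4000 nits reach the TV.
print(max_difference(4000.0, samples))
```

With the source capped at 800 nits the two models return identical values at every sample, which matches what I saw on screen. With a 4000 nit source they diverge above the knee, which is the case where picking between HGiG and DTM OFF would actually matter.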
