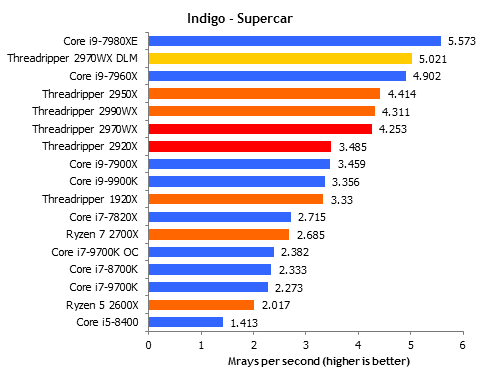

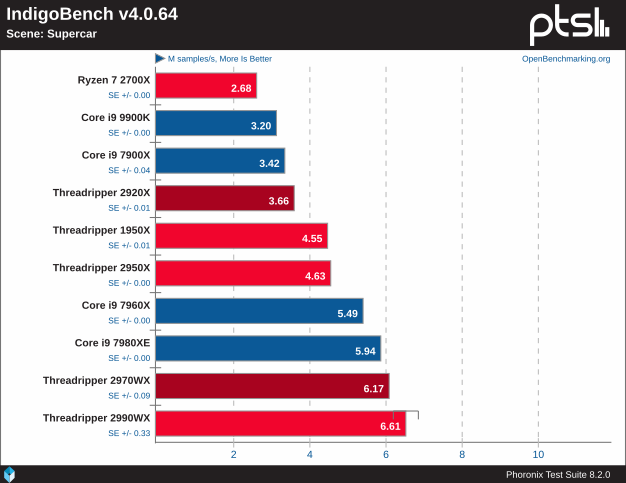

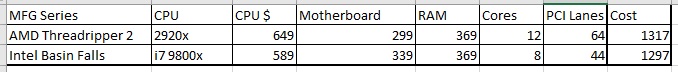

Intel Core i9-9980XE vs AMD Ryzen Threadripper

Today Intel is launching its newest High-End Desktop (HEDT) processor, the Intel Core i9-9980XE Extreme Edition. This 14nm Skylake-X CPU has 18 cores and 36 threads and an expected retail price of $1979. We compare the i9-9980XE to AMD's entire line of Threadripper CPUs to see where the 9980XE sits in the HEDT stack.

If you like our content, please support HardOCP on Patreon.

