Starfield is going to be an absolute riot lol.

I'm looking forward to Starfield, but curious to see what the system requirements are really like considering the gameplay we've seen so far doesn't look much better than Fallout 4.

Different engine, but MSFS is a similar game that really taxes the CPU. The trick to optimizing that one is to turn up all the settings that would stress your GPU and turn down ones that would stress the CPU. Being GPU limited makes for a much more pleasant experience in general. It's almost as if these games need to offer unnecessarily taxing graphical settings for people with high-end GPUs so the GPU becomes the bottleneck.

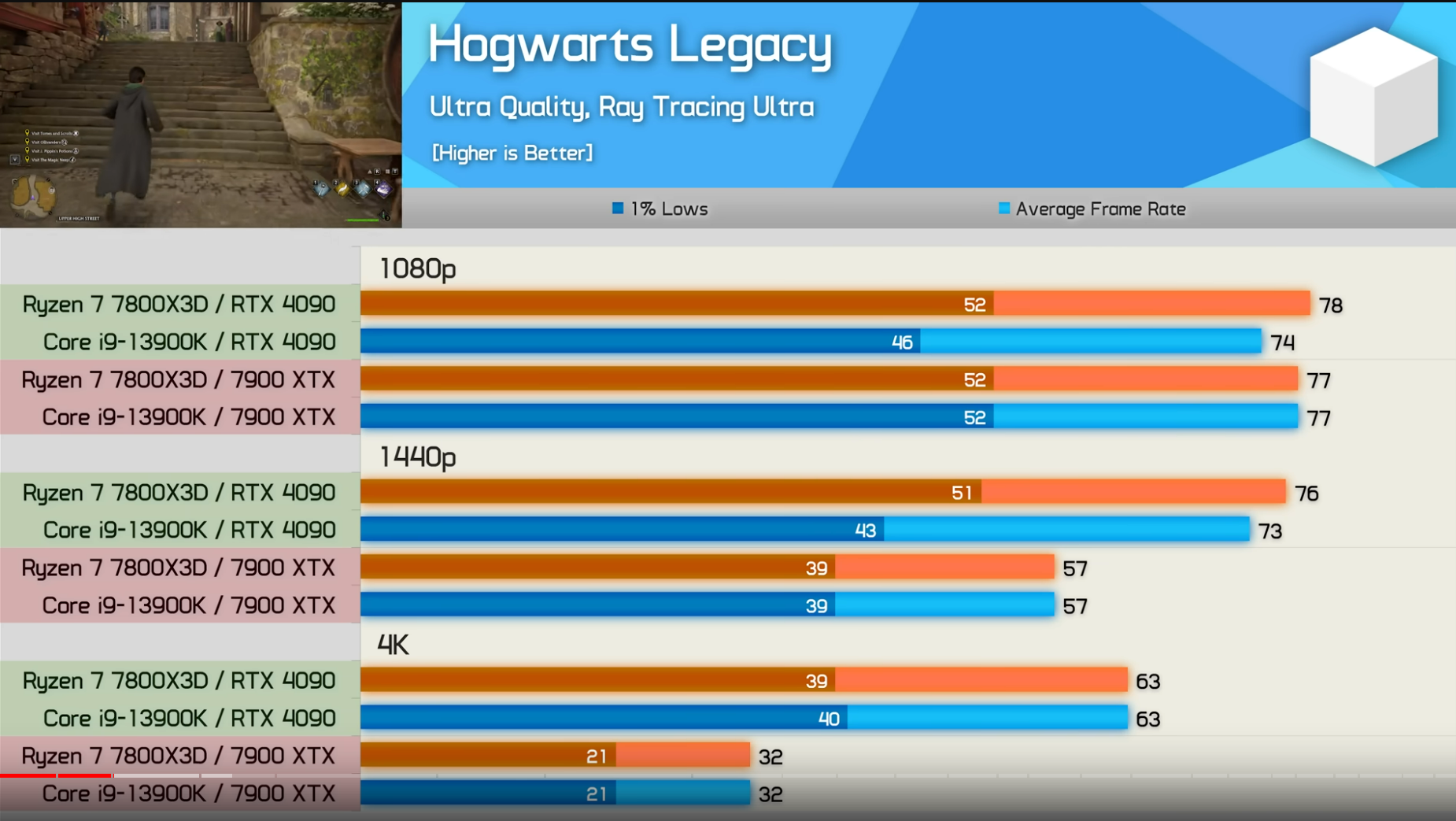

One nice feature of the 4XXX series is that frame generation seems to work quite well for games such as Hogwarts to smooth out the experience.

Honestly, people have tossed around a huge variety of explanations for why games are behaving like Hogwarts, all the way up to "AMD must be paying the devs to do this" (and have sloppy FSR/console performance, which are AMD platforms).

I mean he did exactly what he wanted to do (pocket $1700) and you're kind of trying to talk them back into spending a boatload of money for....reasons.

So, you're on this forum full of enthusiasts, wondering what the best route to take is...and I'll be one of many to say...DO WHAT YOU WANT.

Don't worry about justifying your purchase; just go and do it! (Assuming you can afford it)

That's the exact opposite of what the quote you made said.

You missed the entire point of my post lol.

Also I missed the part where he made the decision to pocket 1700 so there's that.

I mean I pointed out pretty directly it wasn't a logical use of money.

I mean... your entire point kind of boiled down to "I'm Yolo Swaggins and I do what I want and you should, too". While that is a good point, I couldn't justify my purchase to myself. It's not money I would be missing too much, but it wasn't worth it over my 3080 Ti. I can also think of a lot of things to use that money on instead, like a newer stove and other appliances, which I also don't need but would certainly provide me with more benefit than the card.

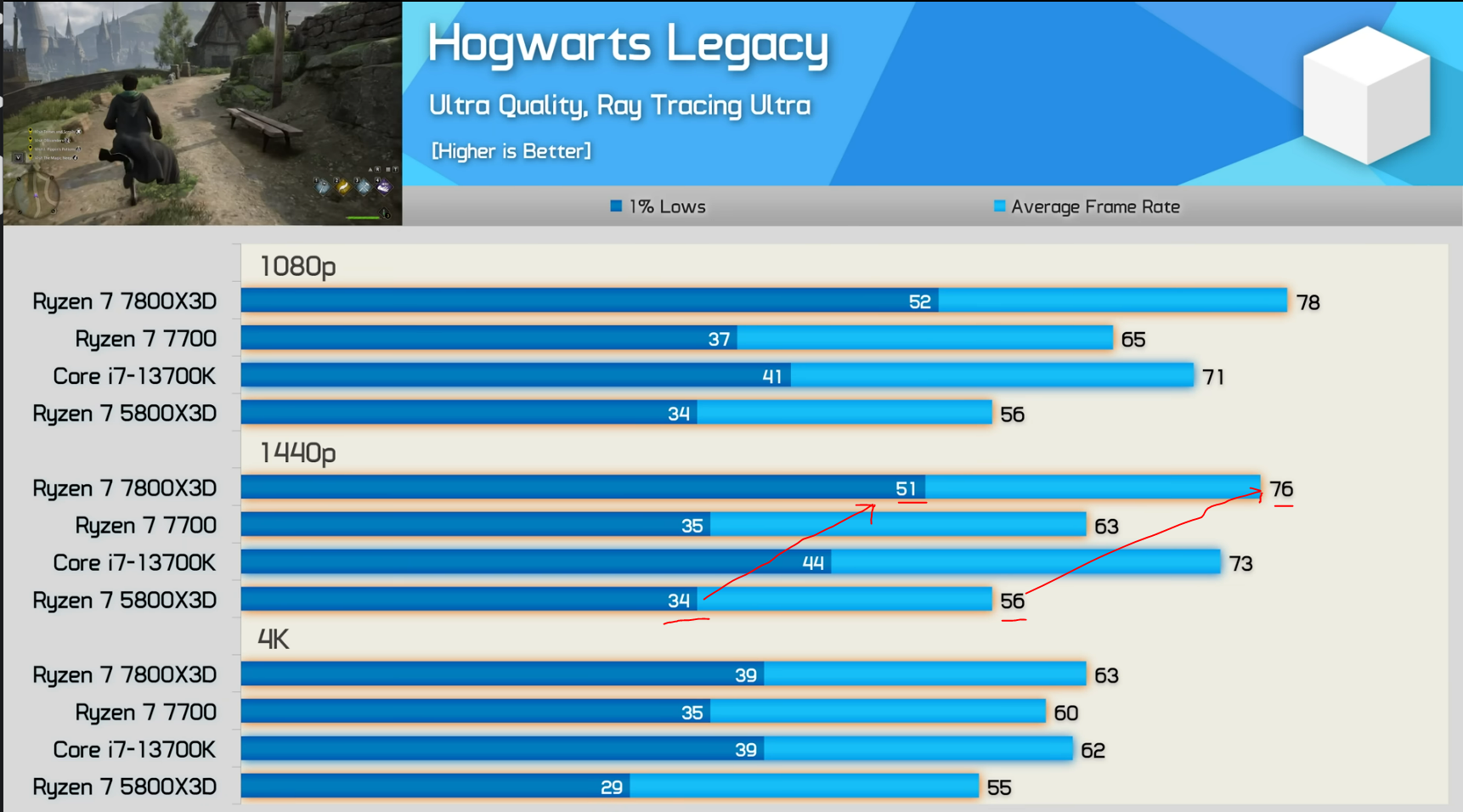

Also frankly if you're that yolo with your money, why are you not just buying the 7800X3D? I showed you the charts. Your 12th gen i7 is probably doing even worse (or maybe at parity) than my 5950X, which does worse than 5800X3D. Like you would literally go up probably 40-50%++ in performance in that game (and any derivative games) by moving up processors.

I'll say it. Custom loop is not cost effective. It never will be unless something crazy happens.

But it looks cool, right?

At the same time, going from a 3080ti to a 4090 isn't worth it either! Listen, I know.....I did it myself. Though I have no kids and my fiancée and I make enough to pay for our stupid hobbies.

Hogwarts Legacy was the reason. I wasn't happy with the performance so I built a whole new PC over it....spoiler alert: it was better but not enough to merit the cost. Do I care? Nah, had the money, didn't hit me at all.....and I got my fiancée to build her first PC with my old parts. It was a win-win.

Simple as that honestly. I think anyone considering a 4090 at this point needs a 13700k or preferably a 7800X3D at the minimum, because this will only get worse (in my opinion).

I'm not seeing a trend with games like Hogwarts, yet. It seems to be more the exception than the rule. The majority of games are still able to fully utilize the GPU, so at 4K resolutions CPU really doesn't matter much. I'm likely going to stick with my 5900X for now and upgrade once Zen 5 comes out.

If Hogwarts was the main reason for buying a 4090, I'd have been frustrated too.

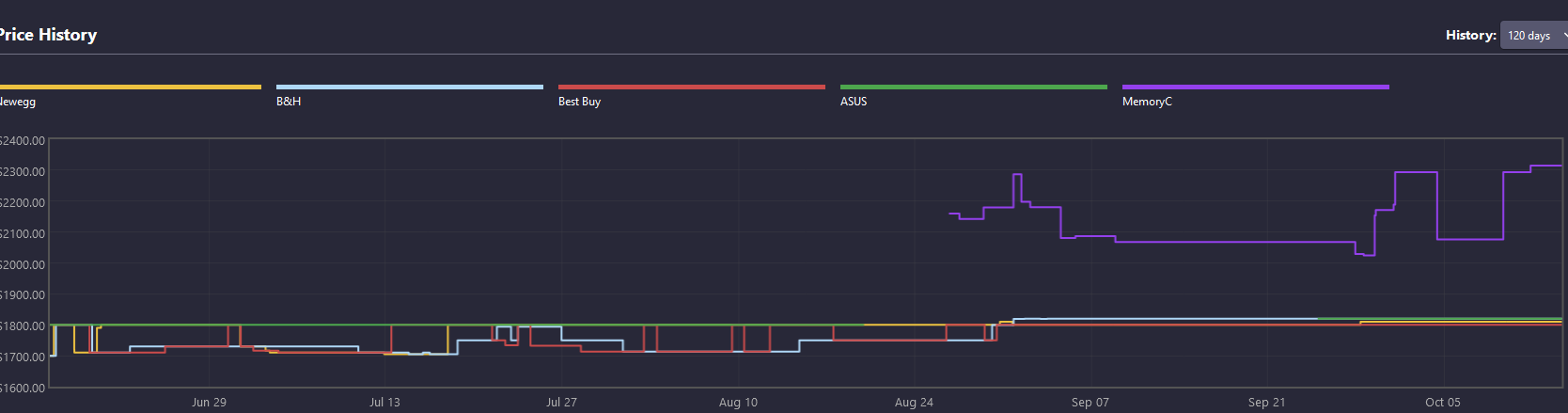

I was worried it was a "now or never" type of scenario, and that I would need it to play games going forward. We'll see if they keep rising (and Jayz is right), or if it's just minor market fluctuations, since the Zotac/PNY/Galax cards seem to be the same price. I don't think even if there was a shortage on the horizon that it would get me to actually switch though. After trying a 4090, I would say I'm actually more satisfied with my 3080 Ti than I was before, rather than the other way around. Was good to see the grass on the other side, but this is fine. I think I would upgrade my CPU before the 4090. We'll see what Intel has coming up.

Prices do seem to have bottomed out a few weeks ago. Lenovo was selling the MSI Suprim Liquid for under 90% of MSRP; that was the best price I saw. Since then prices are up a bit. The fact that the 4090 Ti was cancelled and Blackwell was pushed to 2025 may have increased demand slightly from gamers. It definitely factored into my decision. I was on a 3080 (non-Ti) and was going to wait for Blackwell, but decided to pull the trigger on a 4090 instead. I wasn't too impressed with most of the 40XX series other than the 4090, which is an absolute beast and the best value despite being the most expensive. So far the returns are positive. In RDR2 on the 3080 I'd tweaked the settings to get a fairly consistent 60fps at 4K. On the 4090, I cranked absolutely everything to the max and it's still noticeably smoother. I don't think it's ever dipping under 60fps now.

Selfishly, I'm hoping for prices to go up again so I can sell my 3080 for a better price. We may see a slight uptick in demand from gamers as there are a lot of AAA titles coming out in the near future, then the holiday season will roll around.

Seems some poor game and setting choices led to different than normal decision making. Oh well. To each their own.

As far as Red Dead Redemption 2 goes, there's actually a setting towards the bottom of the graphics settings that you have to enable yourself, which unlocks other graphics settings so they can be set to max. It contains a bunch of values that you have to manually set to "Ultra" or some equivalent. With that enabled, even with the 4090 I was almost in a slideshow. The game did look pretty good, though (just not 1.7k for 22 fps good lol).

Hmmm, something may have been up with your 4090. I maxed out all those settings as well and am getting an easy 60fps. I'll play on my 144Hz monitor without Vsync tonight and see what the averages are.

Is it possible you turned up the resolution scaling so you were rendering at 8K or similar?

This…

Seems like a cool story... bro.

If time proves that it was just an exception, I might grab it again. If not... yeah I'm not going to pay that much to upgrade for such a slight gain. Unless I get a better CPU by the time I'm ready again, I guess.

When I switched from a 3090 to a 4090 the only game I didn't see a significant uptick in frames and smoothness was Hogwarts Legacy. It only felt like a slight upgrade for that game. Cyberpunk 2077 and MSFS were pretty noticeable upgrades in frame rates. Everything runs in 4K and those are the heaviest hitters I've tried on my rig.

That's probably a solid plan. The 5000 series is rumored to be an even bigger upgrade over the 4000 series than the 4000 series was over the 3000 series. Of course... who knows?

Better off just waiting for the 5000 series.

I got tons. Wanna hear more?

Dang, I didn't even need to tell you to click Like and Subscribe down below.

Dude! Yes! Why do you think I'm following this thread?

I don't think so. Nor with that Gigabyte....

* Is it me or is this boost clock kind of low, even at stock? While maxing Cyberpunk, it's pretty much pinned at 2790 MHz. Is this just a dud card? =\

...

The only problem is that I can't quite get the side doors to close without bending the adapter cabling too much for comfort, so I guess I'll just leave it open. It's going to look kind of jank, but oh fucking well. I guess it is what it is. I don't want any weird shit happening because of it bending oddly, and I'm not getting one of those right angle or U-turn adapters; I've heard too many horror stories with them. Here's a quick pic so you all can shit on it lol:

As you can see, I tied the whole set of 8 pin connectors to the radiator tubing, and it works out pretty nicely. They look neater, and the adapter plugging into the GPU has a nice, gradual curve to it, and it's very stable mechanically. Since this GPU is much shorter than an air cooled 4090, I might also be able to add the metal bracket for holding the wires back, back into the case. It got in the way of my other GPUs before.

What power supply do you have? I got a cable (not an extension, an actual cable from PSU to the card) from Corsair for my RM1000X that connects two 8-pins to the single 12VHPWR, and it was like $14 on Amazon direct from their store, so not a Chinese knockoff. It works great and is super flexible. A lot of brands have them now.

It's an EVGA P2 1200W. Great power supply, but unfortunately I don't think EVGA made any adapters of that type for it, nor do I think they will ever show up. Technically Cablemod has a 4x8 to 12VHPWR connector available to buy, but they want $100 for that and I'm like... no lol. I kind of want to stick to the OEM solution, too. I think what I'll do is just buy some of this:

https://www.amazon.com/Plastic-Punching-Dustproof-Dust-Proof-Equipment/dp/B08P1C43KL

And just use it to seal in the gaps. That'll keep it mostly dust free, and I don't really care about looks so that's good enough for me anyway. Maybe some time down the line, I'll look for a wider case to put it into, but thus far I haven't seen many cases that I would want to "upgrade" to.

And I'm kind of getting tired of moving parts around. On the bright side, my 3080 Ti is now pumping out Stable Diffusion images a hell of a lot faster than my 2080 could manage lol, and my bedroom computer is quieter because the Gigabyte 2080 doesn't spin up fans unless it gets hot.

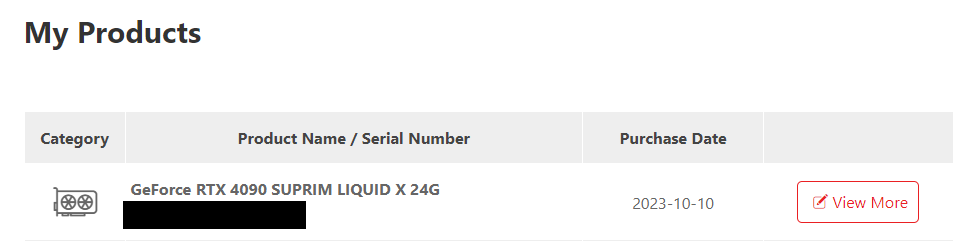

Still deciding on what replacement plan to buy for it. They said I have 15 days from purchase to decide if I want one. I'm still leaning towards the 2 year one. I'm pretty confident that this is the 4090 that I returned for "coil whine" and then it looks like they sent it off to MSI because it did have an unopened adapter (though everything else was as I left it), so essentially I'm pretty much the only owner of this thing.

Maybe I was looking at a more expensive version? The one that I saw was indeed $100. Weird. Anyway, that has a lot of negative reviews:

https://www.amazon.com/product-revi...r&reviewerType=all_reviews#reviews-filter-bar

The one that I would buy would probably be the 4x8 to 12VHPWR, which would be about $30... but there are a lot of 1 star ratings that say that their card wouldn't work properly with it, and switching to the OEM adapter completely made the issue go away... I dunno, I'll probably just stick to OEM. Thanks for the link though.

Haha, the OEM one is the only one I've had that didn't work. When I had the Asus TUF card I had to buy an aftermarket one; with the adapter that came with the card, the card would stay unpowered with the red light at the plug on. I didn't really like the quality of the aftermarket one, so I ended up buying an MSI 1050W power supply with the 12VHPWR connector on both ends and that let it run. Unfortunately the coil whine was so extreme I couldn't stand it and sent it back.

As long as it works and you can do your stuff it's all good!

You clearly have pretty bad luck with ASUS... not sure why you keep buying them. o_0; I don't touch them with a yard stick because of the warranty horror stories, and overall they seem overpriced this gen anyway.

Anyway, I've read that there are two variants of the 12VHPWR adapter that can ship with cards. One of them is supposedly worse, so maybe you got a dud. I don't really do the brand loyalty thing, but MSI seems to be pretty solid lately. By which I mean the motherboards I've gotten from them have been pretty stable and solid boards.

The 30 series seemed okay? I would declare my 3080 TUF the best GPU I've ever had to date. Maybe I have a small sample size, but it was quiet and cool running, with decent temps, and way better than my EVGA 3060. I don't mean because it was a higher tier; the EVGA card was loud and I hated it.

I think the 40 series ASUS TUF cards were expensive at first, but I've seen some prices come down, and it depends on the vendor. Also, it seems to be the most purchased model, so it's much easier to find a used one. Considering ASUS offers a transferable warranty (in Canada, at least), I would definitely consider one.

I've read a few horror stories about coil whine - but, considering they seem to sell a lot of them - it could be just the large sample of purchased cards - so, there are some out there with that? Also, other brands seem to have coil whine - I am not sure which brands have the 'least coil whine' anymore - I don't think anyone has really looked into that.

You would think one of the tech tubers would try to - but, no - it's always just a test of game vs game or sometimes comparing to an AMD card.

I am more interested in coil whine/fan noise/ temps - I want those compared.