- Joined

- Feb 8, 2005

- Messages

- 12,772

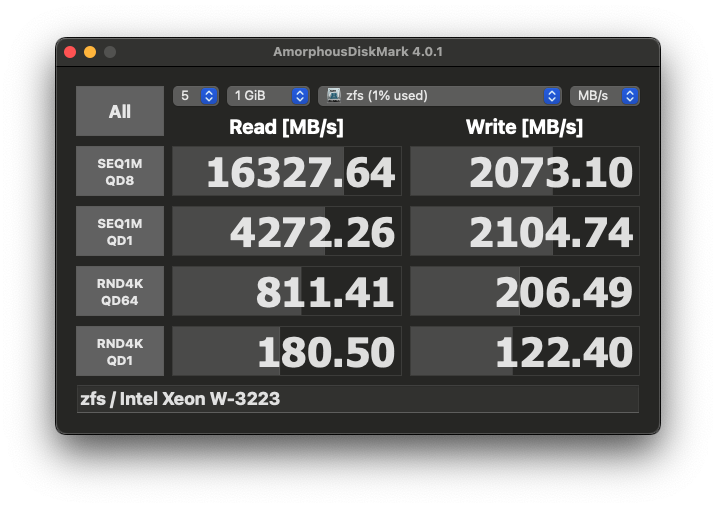

Yeah. I thought about using that config. (Heck, I also priced out a 100GbE network. $750 or so, not that bad... House is already fiber'd up too... Hrm.) Ended up deciding to have "some" resilience built in, so I played around with ZFS. By setting ashift to 9 (512-byte sectors), I got better speeds and more capacity than with the ashift of 13 (8 KiB sectors) I had previously been "strongly recommended to use for flash":

Nice how that raid0 setup could max out even 100Gb.
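Some context on those ashift numbers: ashift is the base-2 logarithm of the smallest unit ZFS will allocate on a vdev, so 9 means 512-byte sectors and 13 means 8 KiB sectors. Below is a rough sketch of one reason a smaller ashift can give back capacity: every block gets rounded up to a whole number of sectors. It's a simplified model (no compression, RAID-Z parity/padding, or metadata), it says nothing about speed, and the helper names are just made up for illustration.

```python
def sector_size(ashift: int) -> int:
    """ZFS ashift is log2 of the minimum allocation unit (sector size)."""
    return 1 << ashift


def allocated_bytes(logical_size: int, ashift: int) -> int:
    """Hypothetical helper: round a block up to whole sectors.

    Simplified model only: ignores compression, RAID-Z parity/padding,
    and metadata overhead.
    """
    sector = sector_size(ashift)
    return -(-logical_size // sector) * sector  # ceiling division


if __name__ == "__main__":
    for ashift in (9, 13):
        print(f"ashift={ashift}: sector={sector_size(ashift)} B")
        for size in (1_000, 4_096, 12_000):
            used = allocated_bytes(size, ashift)
            print(f"  {size:>6} B block -> {used:>6} B allocated "
                  f"({used - size} B slack)")
```

With 8 KiB sectors even a 1,000-byte block eats a full 8,192 bytes, while at 512-byte sectors it only takes 1,024, so small blocks waste far less space at ashift=9.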
