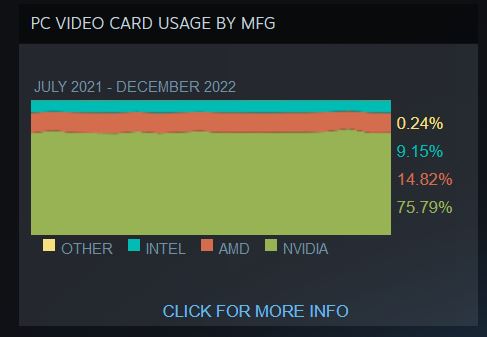

That's quarterly shipments of new GPUs, not the installed user base.

The thing is, I'm not sure how that graph works. You mean in 3 months of ALL the desktops surveyed, AMD dropped 10% of ALL the discrete desktop GPU market share? I find that hard to believe, as most people don't have a 3-month cycle on upgrading.


Desktop GPU Sales Hit 20-Year Low

- Thread starter erek


kirbyrj

Fully [H]

- Joined: Feb 1, 2005
- Messages: 30,693

That's quarterly shipments of new GPUs, not the installed user base.

That's what I figured. Then it makes sense, because Nvidia released a new card in Q3'22 and AMD did not. I would expect Q4'22 to be magically AMD-centric, as they are selling every 7900 XTX they can make right now, Nvidia is essentially sold out of 4090s, and the 4080s aren't selling well at all.

The 4090 launched in Q4 (October to December), maybe early enough to count among shipments, but I doubt a halo card can have a huge effect.

That's what I figured. Then it makes sense because Nvidia released a new card in Q3'22 and AMD did not.

Historically, it seems that when mining drops off, Nvidia's market share rises, as the "buy anything it is possible to buy" variable is removed.

kirbyrj

Fully [H]

- Joined: Feb 1, 2005
- Messages: 30,693

The 4090 launched in Q4 (October to December), maybe early enough to count among shipments, but I doubt a halo card can have a huge effect.

Historically, it seems that when mining drops off, Nvidia's market share rises, as the "buy anything it is possible to buy" variable is removed.

For some reason I thought it was September... probably confused it with the Ampere launch.

Early October, but maybe, as far as shipments are concerned, they happen a couple of weeks before the cards show up in actual sales. Also, maybe when mining stops, OEM prebuilts become a larger part of sales, and I can imagine Nvidia's lead over AMD is larger there (with the used market becoming more the target of the enthusiast crowd).

For some reason I thought it was September... probably confused it with the Ampere launch.

It’s not a conspiracy theory when Nvidia puts the plan in writing and publicly states it to their board and investors only for all the news sites to put out articles about Nvidia “manipulating the market”.

There is also their public statement to the Chinese government about how they will do their best to supply their market before the new US embargo kicks in.

Please show me where Nvidia has stated, in writing, that their objective is to make GPUs so expensive that those pesky "normies" won't be able to buy them. I have never seen any release from them saying that their intention is to essentially exit the consumer market by attrition, and this idea is not consistent with the fact that they have been reducing, or attempting to reduce, their orders with TSMC. The opposite would be true if this were some effort by Nvidia to free up supply because they couldn't make enough.

Supplying the Chinese market is irrelevant to whether or not they want to serve the gaming market.

It was their August 2022 earnings call; if you register on the Nvidia site, you can listen to it and download the transcripts.

Please show me where Nvidia has stated, in writing, that their objective is to make GPUs so expensive that those pesky "normies" won't be able to buy them. I have never seen any release from them saying that their intention is to essentially exit the consumer market by attrition, and this idea is not consistent with the fact that they have been reducing, or attempting to reduce, their orders with TSMC. The opposite would be true if this were some effort by Nvidia to free up supply because they couldn't make enough.

Supplying the Chinese market is irrelevant to whether or not they want to serve the gaming market.

Jayz broke it down pretty well if you just want to watch that instead.

But here is a link to the transcript from a third party that may let you view it without registering.

Here is the quote from Jensen.

"Our strategy is to reduce the sell-in -- reduce the sell-in this quarter, next quarter to let channel inventory correct. Obviously, we’re off the highs, and the macro condition turned sharply worse. And so, our first strategy is to reduce sell-in in the next couple of quarters to correct channel inventory. We’ve also instituted programs to price position our current products to prepare for next-generation products.

Ampere is the most popular GPU we’ve ever created. It is in the top 15 most popular gaming GPUs on Steam. And it remains the best GPUs in the world, and it will be very successful for some time. However, we do have exciting new next-generation coming and it’s going to be layered on top of that. And so, we’ve taken -- we’ve done two things. We’ve reduced sell-in to let channel inventory correct and we’ve implemented programs with our partners to price position the products in the channel in preparation for our next generation.

All of this we anticipate were working towards a path to being in a good shape going into next year. Okay? So, that’s what our game plan is."

Normal speak translation:

We have too many Ampere cards; we are pricing and supplying the next generation for calendar Q4 2022 and Q1 2023 in a manner that will correct that.

jlbenedict

2[H]4U

- Joined: May 22, 2005
- Messages: 2,207

Normal speak translation:

We have too many Ampere cards; we are pricing and supplying the next generation in a manner to reduce the overstock.

To add to the normal-speak translation... we learned from the last crypto crash; prices bottomed out and GPUs were cheap... can't let the peasants enjoy our GPUs without an arm and half a leg this cycle.

I agree on all counts. I'm thinking mainly of 'serious' PC gamers, the ones who try to stay reasonably current and buy new components (or even whole systems) every few years. The family that bought a so-so gaming tower at Best Buy so the kids could play Minecraft and Call of Duty until the system breaks aren't really concerned if the PC can beat a PS5, or even if they can run some of the latest games. And that audience is also the sort that might just as easily decide a $399 PS5 is a wiser investment that prevents the kids from goofing off when they're doing their homework on the family computer.

I would be curious about this, looking at Steam's most played:

https://store.steampowered.com/charts/mostplayed

Everything ranked above the free-to-play Call of Duty would run quite well on a $500 prebuilt desktop, or on a laptop where you picked a GPU option instead of the laptop you would have bought anyway.

The library of older titles that run out of the box without issues is bigger than ever, Epic and Amazon give games away, and low-cost indie titles are a popular genre.

As for keeping up with the strongest machine to play on an OLED TV, we are back to early-90s pricing, true, but I am not sure what percentage of the world's PC gamers that represents. The scene of titles that can run on a Core 2 Duo, with players from Vietnam to everywhere, is huge (maybe because you need to spend a lot to beat the PS5 experience, and that pushes things to the extremes: very cheap and very expensive).

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,945

>shits on digital foundry's detailed in-depth analysis

>compares 1080p performance on gtx1060 to 1440p (fps-capped) performance on PS5...

>follows up with tldr screed on benchmarking rigor

- If you watch Digital Foundry, they try to match the PS5's effects on PC, but the problem is that some effects don't match up 100%. So they end up raising the graphics settings on PC as a result. In other words, they round up to the nearest graphics setting on PC. This is something Digital Foundry has always done that I'm not a fan of.

- The PS5 gets 30 fps at 4K, 1440p, and 1080p, with 40 fps in 120Hz mode. The PS5 version of Requiem uses upscaling no matter the resolution, whereas a GTX 1060 has no upscaling unless you use FSR, and with FSR a GTX 1060 can achieve 60 fps even at medium settings.

The point I'm making here is that a GTX 1060 (or equivalent) owner is not going to buy a PS5. They also have less of a reason to upgrade. The PS5 is "okay", but so is a GTX 1060. The only issue here is that most GTX 1060 users won't know how to use FSR.

I know you've got a hard-on for platform-warrioring, but it's okay to accept that consoles are, in fact, "okay". Your battlestation will still respect you in the morning.

At 1440p the PS5 is upscaling. You wanna cry when you see a 5-year-old GTX 1060 with FSR playing Requiem? Not at 1440p, but one can assume that with FSR a GTX 1060 can do better than 30fps. It's one of the problems I have with Digital Foundry's review in that they assume the PS5 isn't upscaling, but we know it does upscale. To make it fair, the GTX 1060 should also be upscaling.

When Jay made his video the GPUs were approaching MSRP. Most Nvidia GPUs are at MSRP with the exception of the RTX 4090, and AMD GPUs are sold for below MSRP. Prices are going to continue to fall, so he was wrong.

How wrong was he? Have availability and/or prices been significantly better since (better than the usual improvement that comes with time passing)?

There are now more 3060/3060 Ti cards than 1060s on the hardware survey (more 2060/2060 Super cards as well). We should not over-read the artifact that the survey combines the 1060 6GB and 3GB, while newer generations are split across more SKUs.


chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,601

Digital Foundry doesn't "assume it isn't upscaling". They tell you it is, several times. They tell you the minimum and maximum resolution parameters for the internal resolution (information they got directly from the developers), and they tell you what percentage of 4K the internal resolution runs at, on average. In Requiem: PS5 is 55% of 4K, on average. Series X is 59%. Did you watch the video?

It's one of the problems I have with Digital Foundry's review in that they assume the PS5 isn't upscaling, but we know it does upscale. To make it fair, the GTX 1060 should also be upscaling.

It was their August 2022 earnings call; if you register on the Nvidia site, you can listen to it and download the transcripts.

Jayz broke it down pretty well if you just want to watch that instead.

But here is a link to the transcript from a third party that may let you view it without registering.

Here is the quote from Jensen.

"Our strategy is to reduce the sell-in -- reduce the sell-in this quarter, next quarter to let channel inventory correct. Obviously, we’re off the highs, and the macro condition turned sharply worse. And so, our first strategy is to reduce sell-in in the next couple of quarters to correct channel inventory. We’ve also instituted programs to price position our current products to prepare for next-generation products.

Ampere is the most popular GPU we’ve ever created. It is in the top 15 most popular gaming GPUs on Steam. And it remains the best GPUs in the world, and it will be very successful for some time. However, we do have exciting new next-generation coming and it’s going to be layered on top of that. And so, we’ve taken -- we’ve done two things. We’ve reduced sell-in to let channel inventory correct and we’ve implemented programs with our partners to price position the products in the channel in preparation for our next generation.

All of this we anticipate were working towards a path to being in a good shape going into next year. Okay? So, that’s what our game plan is."

Normal speak translation:

We have too many Ampere cards; we are pricing and supplying the next generation for calendar Q4 2022 and Q1 2023 in a manner that will correct that.

It's possible I misunderstood what you were saying, because this is something I already knew. That's a temporary thing, though, to correct their inventory excess, and you can bet their channel partners and AIB partners put a lot of pressure on them to help clear that backlog. This doesn't indicate they're not interested in servicing the consumer market, though, which is what I interpreted your initial comment to indicate, and if I had that wrong, then I apologize. In any case, this is a multi-billion-dollar market, and Nvidia will continue to happily sell into it as long as people are willing to buy. A strategy to clear an inventory backlog is not the same thing as a desire to exit a market.

Yeah, come April or May, AMD and Nvidia will suddenly have price "corrections"; we'll see normal-ish stock on the mid-range options and rumors of the 4080 and 4090 Ti variants. Back to business as usual, and by usual I mean something closer to 2019-2020 figures, none of that 2020-2022 nonsense.

It's possible I misunderstood what you were saying, because this is something I already knew. That's a temporary thing, though, to correct their inventory excess, and you can bet their channel partners and AIB partners put a lot of pressure on them to help clear that backlog. This doesn't indicate they're not interested in servicing the consumer market, though, which is what I interpreted your initial comment to indicate, and if I had that wrong, then I apologize. In any case, this is a multi-billion-dollar market, and Nvidia will continue to happily sell into it as long as people are willing to buy. A strategy to clear an inventory backlog is not the same thing as a desire to exit a market.

chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,601

I don't think there will be any price corrections. I think they will just release new, lower-performance parts to fill out the lineup. And by the time they get to the $500-and-less cards, the improvements will probably be small compared to the 3070, 3060 Ti, and 3060.

Yeah, come April or May, AMD and Nvidia will suddenly have price "corrections"; we'll see normal-ish stock on the mid-range options and rumors of the 4080 and 4090 Ti variants. Back to business as usual, and by usual I mean something closer to 2019-2020 figures, none of that 2020-2022 nonsense.

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,945

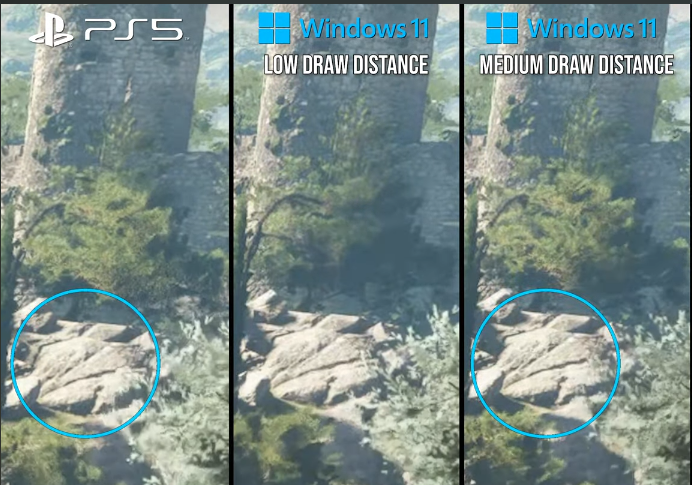

They only mentioned it twice, near the beginning and the end, where they did use DLSS briefly and mentioned it was far superior to the PS5's 1440p. They also didn't test FSR, though it's not officially supported. So why didn't they use upscaling on PC throughout the video if they wanted a fair comparison? Also, this snapshot illustrates my point: they matched up this scene with medium on PC, but to me it looks like low. The problem is that the PC can't match these graphics settings, not without messing with the ini file, which Digital Foundry did do, but it still doesn't look 1:1 to me.

Digital Foundry doesn't "assume it isn't upscaling". They tell you it is, several times. They tell you the minimum and maximum resolution parameters for the internal resolution (information they got directly from the developers), and they tell you what percentage of 4K the internal resolution runs at, on average.

I feel that Digital Foundry, like JayzTwoCents, tries to push their viewers to buy new GPUs. It's a promotional video of sorts, but for GPUs.

What part of the video was that? They did mention the PS5 using TAAU but I can't find anything else. You know what 55% of 4K is? It's 1080p.

In Requiem: PS5 is 55% of 4K, on average. Series X is 59%. Did you watch the video?

kirbyrj

Fully [H]

- Joined: Feb 1, 2005
- Messages: 30,693

You know what 55% of 4K is? It's 1080p.

1080p = 2,073,600 pixels

4K = 8,294,400 pixels

1080p is 25% of 4K
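A quick way to double-check that arithmetic is a few lines of Python. This is a minimal sketch that assumes the usual 16:9 consumer resolutions (1920x1080 for 1080p, 3840x2160 for 4K UHD, 7680x4320 for 8K UHD); the dictionary and helper names are made up for illustration.

```python
# Assumed standard 16:9 consumer resolutions (width, height); "4K" means 4K UHD.
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
    "8K": (7680, 4320),
}


def pixel_count(name: str) -> int:
    """Total pixels rendered per frame at the named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(smaller: str, larger: str) -> float:
    """Fraction of the larger resolution's pixels covered by the smaller one."""
    return pixel_count(smaller) / pixel_count(larger)


if __name__ == "__main__":
    for name in RESOLUTIONS:
        print(f"{name}: {pixel_count(name):,} pixels")
    print(f"1080p is {pixel_ratio('1080p', '4K'):.0%} of 4K")
    print(f"8K is {pixel_count('8K') // pixel_count('1080p')}x 1080p")
```

The ratio is squared: 1080p has half the width and half the height of 4K, so it covers a quarter of the pixels, and 8K doubles 4K on both axes for 4x the pixels (16x 1080p).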

GoldenTiger

Fully [H]

- Joined: Dec 2, 2004
- Messages: 29,671

Yep. 4K = 4x 1080p. 8K = 4x 4K, or 16x 1080p.

1080p = 2,073,600 pixels

4K = 8,294,400 pixels

1080p is 25% of 4K

staknhalo

Supreme [H]ardness

- Joined: Jun 11, 2007
- Messages: 6,924

or 16x 1080p.

we're gonna need some good upscalers

GoldenTiger

Fully [H]

- Joined: Dec 2, 2004
- Messages: 29,671

we're gonna need some good upscalers

So, editing an INI to more closely match settings for the purpose of rigor is no bueno...

They only mentioned it twice, near the beginning and the end, where they did use DLSS briefly and mentioned it was far superior to the PS5's 1440p. They also didn't test FSR, though it's not officially supported. So why didn't they use upscaling on PC throughout the video if they wanted a fair comparison? Also, this snapshot illustrates my point: they matched up this scene with medium on PC, but to me it looks like low. The problem is that the PC can't match these graphics settings, not without messing with the ini file, which Digital Foundry did do, but it still doesn't look 1:1 to me.

But, they should replace game DLLs with sketchy ones downloaded from some random-ass Discord -- WHICH REQUIRE EDITING INI FILES TO INSTALL -- so they can add a feature the game doesn't have in what is supposed to be a like-for-like performance benchmark.

Right...

I feel that Digital Foundry, like JayzTwoCents, tries to push their viewers to buy new GPUs. It's a promotional video of sorts, but for GPUs.

What part of the video was that? They did mention the PS5 using TAAU but I can't find anything else. You know what 55% of 4K is? It's 1080p.

https://www.eurogamer.net/digitalfo...tech-showcase-that-pushes-the-consoles-hard-1

Digital Foundry said: "Both PS5 and Series X each stay in place at a native 1440p resolution, reconstructing up to 4K using a temporal solution. For Xbox Series S, we're again sticking to 900p as the native figure, in line with Innocence."

Also stated in their video. And their other video. And their other video's writeup.

No big shifts in the Steam hardware survey...

The thing is, I'm not sure how that graph works. You mean in 3 months of ALL the desktops surveyed, AMD dropped 10% of ALL the discrete desktop GPU market share? I find that hard to believe, as most people don't have a 3-month cycle on upgrading. If someone had an AMD GPU in Q2'22, it's pretty likely they still had one in Q3'22. Likewise, even if someone upgraded from an older Nvidia card to a 4XXX one, they are still dropping one and replacing it, which is a wash.

Unless the graph speaks specifically to GPU sales (which the graph doesn't indicate). Then it makes sense that Nvidia sales would be significantly higher because they released a new card in that timeframe where AMD did not.
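The difference between a quarterly shipment share and an installed-base share can be sketched with a toy model. Every number below is hypothetical (a fleet of 100 million cards, a 5% per-quarter retirement rate, 9 million new cards shipped), and the model assumes old cards are retired evenly across both vendors; it only illustrates why a lopsided quarter of shipments moves the installed base far less than it moves a shipment chart.

```python
from dataclasses import dataclass


@dataclass
class InstalledBase:
    """Hypothetical installed base of discrete desktop GPUs, in millions of cards."""
    nvidia: float
    amd: float

    def amd_share(self) -> float:
        return self.amd / (self.nvidia + self.amd)


def next_quarter(base: InstalledBase, shipped_nvidia: float, shipped_amd: float,
                 retired_fraction: float) -> InstalledBase:
    """Retire the same fraction of each vendor's cards, then add new shipments."""
    keep = 1.0 - retired_fraction
    return InstalledBase(
        nvidia=base.nvidia * keep + shipped_nvidia,
        amd=base.amd * keep + shipped_amd,
    )


if __name__ == "__main__":
    # Made-up inputs for illustration only; not taken from the graph.
    before = InstalledBase(nvidia=75.0, amd=25.0)
    shipped_nvidia, shipped_amd = 8.0, 1.0
    after = next_quarter(before, shipped_nvidia, shipped_amd, retired_fraction=0.05)

    shipment_share = shipped_amd / (shipped_nvidia + shipped_amd)
    print(f"AMD share of this quarter's shipments: {shipment_share:.1%}")
    print(f"AMD installed-base share: {before.amd_share():.1%} -> {after.amd_share():.1%}")
```

With these made-up inputs, AMD gets about 11% of that quarter's shipments, yet its installed-base share only slips from 25.0% to roughly 23.8%, which is the gap between a shipment graph and something like the Steam hardware survey.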

NamelessPFG

Gawd

- Joined: Oct 16, 2016
- Messages: 893

That's exactly what happened with Turing/RTX 20 Series, as I recall, made worse by how that generation didn't even have a card that thoroughly eclipsed Pascal in framerate on top of offering ray-tracing.

I don't think there will be any price corrections. I think they will just release new, lower-performance parts to fill out the lineup. And by the time they get to the $500-and-less cards, the improvements will probably be small compared to the 3070, 3060 Ti, and 3060.

The price correction was with Ampere/RTX 30 Series, and we all know what happened there, to the point of Ada Lovelace/RTX 40 Series being Turing all over again (except the 4090 is an undisputed performance champ in everything and thus keeps flying off the shelves).

Price drops prior to the next generation generally don't happen unless a competitor shakes up the market at a given price bracket, and AMD doesn't seem too keen on doing that right now.

Intel, on the other hand... we'll see if they have the determination to fix up their drivers and at least see the GPU division through to Battlemage's release.

Beaflag VonRathburg

Gawd

- Joined: Aug 3, 2015
- Messages: 644

It's probably because Intel's iGPU is pretty damn great for everyday computing, Photoshop, and video editing. Also, dedicated GPUs have stayed useful for a pretty long time since about 2012.

If you're talking desktop only, that's a strong point. If you look at the broader market, think about the technological advances in consoles, smartphones, etc... We were still all using flip phones in 2005, and the hot thing in 2006 was the Motorola Razr. The overall desktop market has been in a downtrend since that time as well, simply because there are so many more options 17 years later.

DukenukemX

Supreme [H]ardness

- Joined: Jan 30, 2005
- Messages: 7,945

It's all opinion. Did they match it up perfectly?

So, editing an INI to more-closely match settings for the purpose of rigor is no bueno...

They mainly used Nvidia cards, so DLSS is supported in the game, and they did test it once in the video. FSR is not, but there's absolutely nothing wrong with downloading DLLs to get the feature. Remember SweetFX and how people would use SMAA? The only reason FSR is mentioned is because Nvidia somehow lost the switch that enables DLSS on a 1060.

But, they should replace game DLLs with sketchy ones downloaded from some random-ass Discord -- WHICH REQUIRE EDITING INI FILES TO INSTALL -- so they can add a feature the game doesn't have in what is supposed to be a like-for-like performance benchmark.

Right...

I didn't see it in the video, and now you're pointing to a webpage. It doesn't matter anyway, because the fact is a GTX 1060 can get better frame rates than a PS5, and therefore owners do not need to upgrade to either a PS5 or a newer GPU. This is something everyone here needs to understand: newer GPUs have low value for people who own older cards, because those cards work fine at 1080p. Even without FSR, a GTX 1060 gets 30fps at low settings, and that's mostly like a PS5. What old graphics card can't play A Plague Tale: Requiem? To get the same framerate as a PS5 at 1440p without using DLSS or FSR, you need an AMD RX 6600. Most people use 1080p, and to get 60fps in Requiem you need a Vega 64 or a GTX 1080, which still get better frame rates in Requiem than a PS5 at 1440p. Requiem represents how demanding this generation of games is going to be, and it's not hard to see why people aren't upgrading and buying new GPUs.

https://www.eurogamer.net/digitalfo...tech-showcase-that-pushes-the-consoles-hard-1

Also stated in their video. And their other video. And their other video's writeup.

I was going by the 4K vs 2K values, but now, looking at all the pixels, I was wrong.

1080p = 2,073,600 pixels

4K = 8,294,400 pixels

1080p is 25% of 4K


chameleoneel

Supreme [H]ardness

- Joined: Aug 15, 2005
- Messages: 7,601

Hey, you know what? I realized those numbers I stated (55% of 4K and 59% of 4K) were from the video for Fortnite with Lumen. I apologize for confusing the two videos.

They only mentioned it twice, near the beginning and the end, where they did use DLSS briefly and mentioned it was far superior to the PS5's 1440p. They also didn't test FSR, though it's not officially supported. So why didn't they use upscaling on PC throughout the video if they wanted a fair comparison? Also, this snapshot illustrates my point: they matched up this scene with medium on PC, but to me it looks like low. The problem is that the PC can't match these graphics settings, not without messing with the ini file, which Digital Foundry did do, but it still doesn't look 1:1 to me.

I feel that Digital Foundry, like JayzTwoCents, tries to push their viewers to buy new GPUs. It's a promotional video of sorts, but for GPUs.


What part of the video was that? They did mention the PS5 using TAAU but I can't find anything else. You know what 55% of 4K is? It's 1080p.

However, Digital Foundry does say at 49 seconds into the video, Requiem is 1440p internal, upscaled to 4K.
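For reference, here is what "1440p internal, upscaled to 4K" works out to in raw numbers. This is a small sketch assuming a 2560x1440 internal render and a 3840x2160 output; the constant and function names are illustrative, and it only does the resolution arithmetic, saying nothing about how good the temporal reconstruction looks.

```python
# Assumed internal render resolution and output resolution (width, height).
INTERNAL_1440P = (2560, 1440)
OUTPUT_4K = (3840, 2160)


def axis_scale(internal: tuple[int, int], output: tuple[int, int]) -> tuple[float, float]:
    """Fraction of the output width and height that is rendered natively."""
    return internal[0] / output[0], internal[1] / output[1]


def pixel_fraction(internal: tuple[int, int], output: tuple[int, int]) -> float:
    """Fraction of output pixels rendered natively; the rest are reconstructed."""
    return (internal[0] * internal[1]) / (output[0] * output[1])


if __name__ == "__main__":
    sx, sy = axis_scale(INTERNAL_1440P, OUTPUT_4K)
    print(f"Per-axis scale: {sx:.1%} of width, {sy:.1%} of height")
    print(f"Native pixels: {pixel_fraction(INTERNAL_1440P, OUTPUT_4K):.1%} of 4K")
```

1440p is two-thirds of 4K on each axis but only about 44% of its pixels, so a "percentage of 4K" figure can mean quite different things depending on whether it is quoted per axis or per pixel.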

You are right: one of the benefits of PC gaming is that we can choose the resolution, the graphics settings, and whether or not we want upscaling. There is a lot of flexibility there to balance framerate with visuals.

If we are OK with 1080p and/or lower graphics settings, we can use older graphics cards. It's one of the great things about PC gaming. Right now, I have an RTX 2080 and an RX 6600 XT, and I am gaming on a 1080p monitor.

There are a couple of PS5 games now that have true 1080p modes (no downscaling) for higher framerates. And Outer Wilds lets you choose 1440p/60 with a steady 60fps instead of 4K (dynamic resolution), which doesn't have a steady framerate. There are also some games using 40fps modes for 120Hz TVs/monitors, instead of simply using 30fps.

I hope to see more options like this for console gaming, in the future.

Well... this thread's title does say desktop GPUs.

If you're talking desktop only, that's a strong point. If you look at the broader market, think about the technological advances in consoles, smartphones, etc... We were still all using flip phones in 2005, and the hot thing in 2006 was the Motorola Razr. The overall desktop market has been in a downtrend since that time as well, simply because there are so many more options 17 years later.

I would imagine that AMD's orders for console GPUs have not shrunk; they have likely increased. But that's a different market from desktop GPUs.


Yeah, come April or May, AMD and Nvidia will suddenly have price "corrections"; we'll see normal-ish stock on the mid-range options and rumors of the 4080 and 4090 Ti variants. Back to business as usual, and by usual I mean something closer to 2019-2020 figures, none of that 2020-2022 nonsense.

I'm expecting more or less a repeat of Turing, since the launch conditions were more or less the same. In any case, I think the bottom line is if you can wait, wait. Better deals will be coming. The volume isn't there to justify current pricing levels.

I did see on Nvidia's most recent presser, they said the 3000 series was now the "mainstream" offering, which was an interesting way to put it, especially considering they're hard to find and still priced poorly.

You know the GPU market is in a rough state when Linus from LTT says that consoles like the PS5 and Xbox Series X may represent a better investment given soaring GPU prices. (It's towards the end of the clip.)

And he has a good argument. We're now at the point where an NVIDIA xx70 series card is selling for at least $799. You're not even at the flagship level and you're already spending enough that a PS5 or XSX looks like a bargain. However large or small NVIDIA's profit margins are, that's not helping the PC gaming market one bit — it's walling off the high-end experience. And even a 3070 might be difficult to justify for someone who just wants the latest CoD game to run decently. And it'll definitely run decently on a cheaper and more accessible console.


WorldExclusive

[H]F Junkie

- Joined: Apr 26, 2009
- Messages: 11,548

You know the GPU market is in a rough state when Linus from LTT says that consoles like the PS5 and Xbox Series X may represent a better investment given soaring GPU prices. (It's towards the end of the clip.)

And he has a good argument. We're now at the point where an NVIDIA xx70 series card is selling for at least $799. You're not even at the flagship level and you're already spending enough that a PS5 or XSX looks like a bargain. However large or small NVIDIA's profit margins are, that's not helping the PC gaming market one bit — it's walling off the high-end experience. And even a 3070 might be difficult to justify for someone who just wants the latest CoD game to run decently. And it'll definitely run decently on a cheaper and more accessible console.

I get everything you're saying, and it's correct from a gamer's perspective.

But traditional GPU reviews don't make sense anymore. These cards do wayyyyy more than just play 3D games. People are being sold gaming performance when that's probably 33% of what the card can do. Imagine only using 33% of what a card can do after paying $1600 for a 4090. GPU reviews should be three parts: gaming, productivity and research. I know Linus can do it, but people generally want to see FPS bars, not render times and folding. I think it's important for people to look beyond gaming to find value in the current GPU market. The 4000 series dominates the 3000 series in productivity, but we will recommend a 3000 series card because of price without considering that it will also be used for work.

I feel there could be (and tend to be) different reviewers for different audiences: Puget Systems will have their reviews, Phoronix their Linux benchmarks, some others their VR-only reviews.

GPU reviews should be three parts: gaming, productivity and research

There is also a large audience out there that only cares about gaming performance, enough to build reviews around.

kirbyrj

Fully [H]

- Joined: Feb 1, 2005
- Messages: 30,693

I get everything you're saying, and it's correct from a gamer's perspective.

But traditional GPU reviews don't make sense anymore. These cards do wayyyyy more than just play 3D games. People are being sold gaming performance when that's probably 33% of what the card can do. Imagine only using 33% of what a card can do after paying $1600 for a 4090. GPU reviews should be three parts: gaming, productivity and research. I know Linus can do it, but people generally want to see FPS bars, not render times and folding. I think it's important for people to look beyond gaming to find value in the current GPU market. The 4000 series dominates the 3000 series in productivity, but we will recommend a 3000 series card because of price without considering that it will also be used for work.

That's as bad as one of Jensen's "just buy it" nonsensical ramblings. If you don't have an interest in productivity and research, you're saying you should just magically go develop the skills and ability to do it, because otherwise you're wasting your money on a GPU. Meanwhile, a person who wants to play games at 4K with high quality and framerates just has to pay the piper because the card "could" be used for productivity and research. EVEN THOUGH Nvidia makes other cards for productivity and research in the form of the Quadro cards.

staknhalo

Supreme [H]ardness

- Joined: Jun 11, 2007
- Messages: 6,924

GPU reviews should be three parts: gaming, productivity and research

Yes, I'd like to see this for budget non-Quadro/whatever stuff: see how the cards do in AI etc., especially as 3090-level performance comes down in price and tier.

They do "how good is a Quadro or Tesla at gaming" videos, so just do the other too.

Gideon

2[H]4U

- Joined: Apr 13, 2006
- Messages: 3,558

I get everything you're saying, and it's correct from a gamer's perspective.

But traditional GPU reviews don't make sense anymore. These cards do wayyyyy more than just play 3D games. People are being sold gaming performance when that's probably 33% of what the card can do. Imagine only using 33% of what a card can do after paying $1600 for a 4090. GPU reviews should be three parts: gaming, productivity and research. I know Linus can do it, but people generally want to see FPS bars, not render times and folding. I think it's important for people to look beyond gaming to find value in the current GPU market. The 4000 series dominates the 3000 series in productivity, but we will recommend a 3000 series card because of price without considering that it will also be used for work.

Most don't care that it can do anything else; they want a gaming card. In fact, most would be happy if that's all it could do and it was cheaper because of that.

NightReaver

2[H]4U

- Joined

- Apr 20, 2017

- Messages

- 3,816

Agreed. This is just getting silly with the amount of justification people are going through for this level of greed. No. Just no.

That's as bad as one of Jensen's "just buy it" nonsensical ramblings. If you don't have an interest in productivity and research, you're saying you should just magically go develop the skills and ability to do it, because otherwise you're wasting your money on a GPU. Meanwhile, a person who wants to play games at 4K at high quality and framerates just has to pay the piper because the card "could" be used for productivity and research. EVEN THOUGH Nvidia makes other cards for productivity and research in the form of the Quadro cards.

The problem is that, like it or not, gaming is still the main reason most people buy these GPUs. A home user typically doesn't buy a dedicated GPU to speed up render times for their wedding video, or to assist with a homebrew AI project; they're doing it because they want faster frame rates in games. As good as an RTX 4070 Ti might be for media editing and accelerated computing, those perks generally only matter to professionals who probably aren't reading these reviews.

I get everything you're saying and it's correct from a gamer's perspective.

But traditional GPU reviews don't make sense anymore. These cards do wayyyyy more than just play 3D games. People are being sold gaming performance when that's probably 33% of what the card can do. Imagine only using 33% of what a card can do after paying $1600 for a 4090. GPU reviews should be three parts: gaming, productivity and research. I know Linus can do it, but people generally want to see FPS bars, not render times and folding. I think it's important for people to look beyond gaming to find value in the current GPU market. The 4000 series dominates the 3000 series in productivity, but we will recommend a 3000 series card because of price without considering that it will also be used for work.

WorldExclusive

[H]F Junkie

- Joined

- Apr 26, 2009

- Messages

- 11,548

These are no longer being priced as gaming cards. Gaming shouldn't cost this much.

Most don't care that it can do anything else; they want a gaming card. In fact, most would be happy if that's all it could do and it was cheaper because of that.

Krenum

Fully [H]

- Joined

- Apr 29, 2005

- Messages

- 19,193

The more prices increase, the less I care.

WorldExclusive

[H]F Junkie

- Joined

- Apr 26, 2009

- Messages

- 11,548

Again, Nvidia isn't pricing the products as gaming cards. When the cards were $500 for an x80, it was a gaming card. Now it's a high-end professional card without the workstation price.

The problem is that, like it or not, gaming is still the main reason most people buy these GPUs. A home user typically doesn't buy a dedicated GPU to speed up render times for their wedding video, or to assist with a homebrew AI project; they're doing it because they want faster frame rates in games. As good as an RTX 4070 Ti might be for media editing and accelerated computing, those perks generally only matter to professionals who probably aren't reading these reviews.

Gamers are saying the current prices are too much and I agree. So where's the value for a 4000 series card? It's definitely not in gaming.

Gamer-focused cards need to exist if possible. The 5800X3D is a good example of a stripped-down product focused on gaming and not as good at productivity. I would like to see more affordable GPUs dedicated to gaming.

jlbenedict

2[H]4U

- Joined

- May 22, 2005

- Messages

- 2,207

I would like to see more affordable GPUs dedicated to gaming.

Unfortunately, is the "gaming market" smaller than the "professional/creators market"? (Or is it not?)


I don't know that you could really do a "just gaming" video card. GPGPU functionality is as useful for games as it is for accelerating a machine learning system; you can't really scale back other performance elements without significantly affecting game performance.

Again, Nvidia isn't pricing the products as gaming cards. When the cards were $500 for an x80, it was a gaming card. Now it's a high-end professional card without the workstation price.

Gamers are saying the current prices are too much and I agree. So where's the value for a 4000 series card? It's definitely not in gaming.

Gamer-focused cards need to exist if possible. The 5800X3D is a good example of a stripped-down product focused on gaming and not as good at productivity. I would like to see more affordable GPUs dedicated to gaming.

And that leaves us in the same boat as before. Whether or not the pricing is friendly to gaming, it remains true that NVIDIA designed and is pitching cards like the 4070 Ti toward gamers. Changing the focus, and asking that reviewers change their focus, still leaves PC gamers stuck in a stagnant market. You either wait for an RTX 4060 that maybe, hopefully, costs under $500, or you make do with two-year-old GPUs that still sell at their original prices.

staknhalo

Supreme [H]ardness

- Joined

- Jun 11, 2007

- Messages

- 6,924
