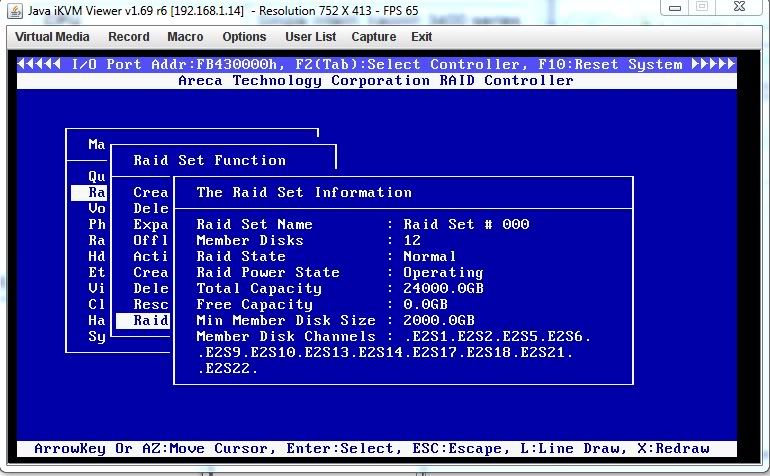

Too vague - you haven't stated whether you chose "Foreground" or "Background" initialization, but it sounds like you chose Background, in which case the timing is normal. I always init in Foreground mode unless I need the space available right away.

Thank you for the input - I did choose Background. I was planning to start moving data to it after it was fully initialized, so that was my noobie mistake. I appreciate the pointers you've given me above - I've followed them exactly, which got me this far! So I will just have to wait until the initialization is over.

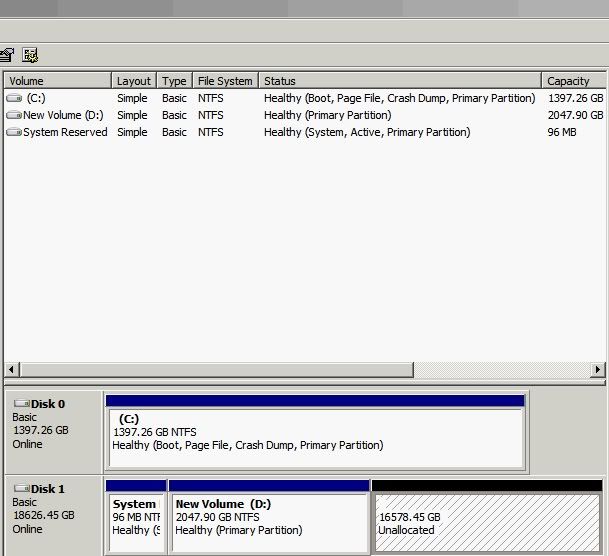

Any recommendations on how to configure these settings for the best read performance?

Thanks
