
Deleted member 312202

Guest

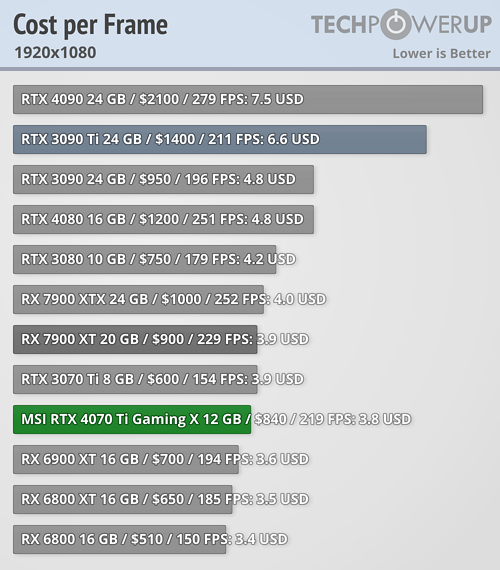

I just got an Asus TUF OC 4070 Ti, and it's performing beyond my expectations at 4K. I have everything set to max/ultra in Call of Duty MW3, Forza Motorsport, and a few other games, and the frame rates are very playable.

Last edited by a moderator:
