Really, they are. They are the norm in the business world. AMD is the exception only because of their own failures, and they act no differently when they succeed.

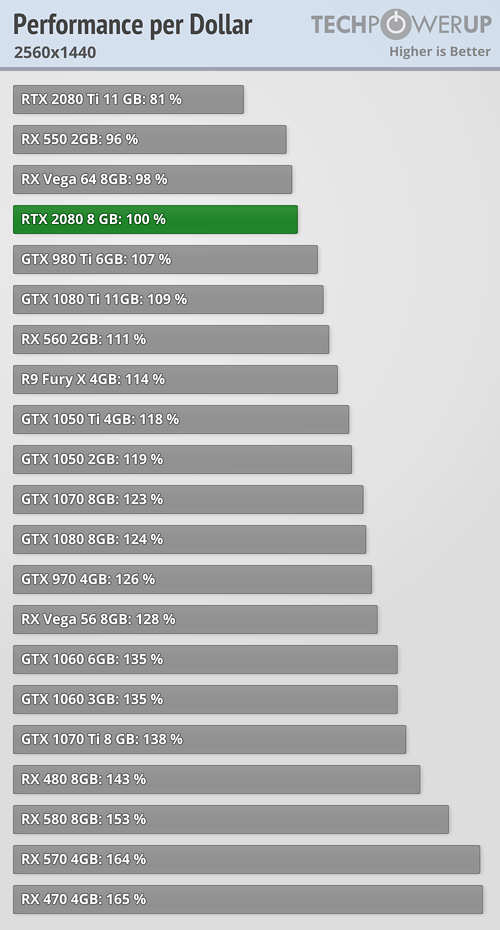

Please give examples of this. I have never seen AMD nerf performance for everyone in order to make their products look even better. This was a big part of the AdoredTV video. Games sponsored by AMD always seem to run great on both companies' cards. Then we had Nvidia's GPP (GeForce Partner Program) attempt. We can also talk about FreeSync vs. G-Sync. Sure, Nvidia has opened it up now, but I kind of feel it was due in part to people using the 2400G/2200G FreeSync glitch. It was really hard for Nvidia to justify the lockout after that.

I will say that both companies have misrepresented their product lineups, e.g. the RX 570-like RX 580s, the DDR4 GT 1030, a version of the RX 560 that had fewer cores if I remember correctly, and so on.
