NamelessPFG

Gawd

- Joined

- Oct 16, 2016

- Messages

- 893

The frustrating part is that 10GbE has been around for over a decade, but nobody's really put it into consumer-level equipment until Apple of all companies started making it more of a thing with their newer Macs.

Well, that's not optimal for too many users either. Hardcore computing is all at 25, 40 or 100 Gbit, not to mention fiber, while consumers and homelabs are stuck with 2.5 at best.

My guess is very few of those 10 Gbit ports will ever run 10 Gbit.

This is doubly frustrating when certain things you might want to homelab, like a Proxmox VE Ceph cluster for high availability and live migrations without a SAN (and the inverted pyramid of doom that comes with a single SAN as opposed to the hosts distributing storage with each other), state 10GbE as a MINIMUM requirement and would presumably benefit from further upgrades to 25/40/100GbE and so on. (Also, you're almost certainly working with fiber and some flavor of SFP28, QSFP+ or QSFP28 transceiver at that point.)
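To put rough numbers on why link speed matters for something like storage replication, here's a back-of-the-envelope sketch. The `transfer_seconds` helper and the 1 TB figure are just my own illustration, not anything from the Proxmox or Ceph docs, and it assumes an idealized link running at full line rate with no protocol overhead, so real-world times will be worse.

```python
def transfer_seconds(size_gb: float, link_gbit: float, efficiency: float = 1.0) -> float:
    """Idealized time in seconds to move size_gb gigabytes over a link_gbit Gbit/s link.

    efficiency is a hypothetical fudge factor (1.0 = perfect line rate);
    real links lose some throughput to protocol overhead.
    """
    gigabits = size_gb * 8  # 1 byte = 8 bits
    return gigabits / (link_gbit * efficiency)


if __name__ == "__main__":
    # Assumed example workload: moving 1 TB (1000 GB) between hosts.
    for speed in (1, 2.5, 10, 25, 40, 100):
        minutes = transfer_seconds(1000, speed) / 60
        print(f"{speed:>5} GbE: {minutes:7.1f} min")
```

Even in the ideal case, 2.5GbE takes four times as long as 10GbE for the same data, which is why being stuck at 2.5 stings for this kind of workload.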

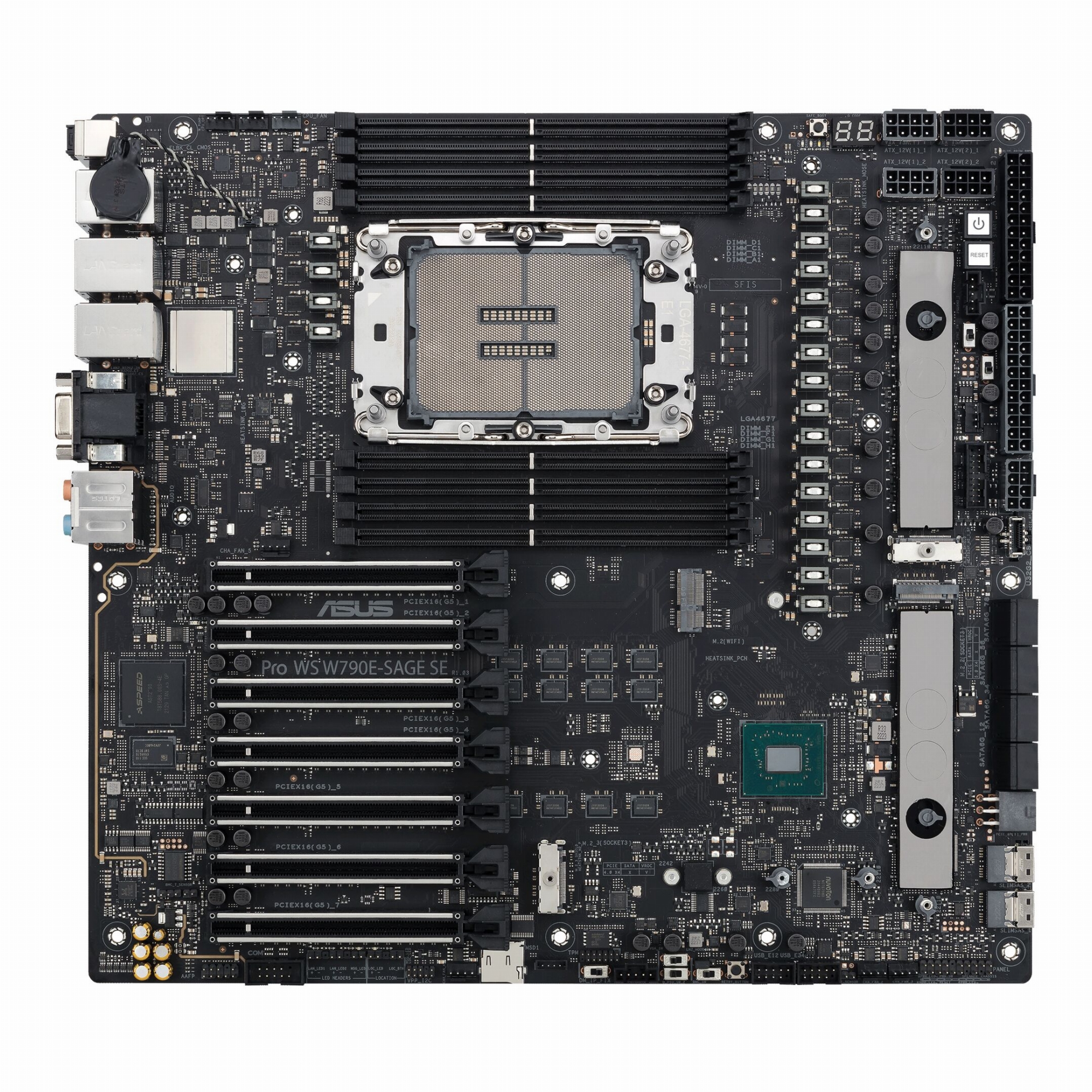

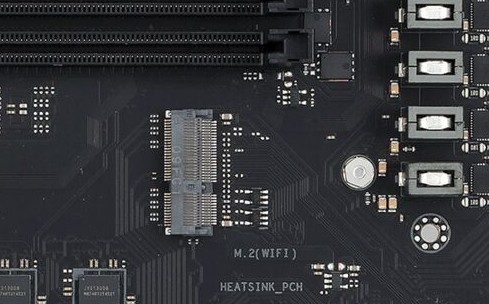

The CNVs on modern boards are usually soldered down, not a replaceable mini-PCIe or M.2 card like you find on old Mac Pros, laptops and so forth. Kind of a shame, since that'd keep the upgradability without the wasted footprint of a full PCIe card.

I just like having WiFi on the board without taking up a PCI-E slot.

You don't really need WiFi... until you do. In my opinion, for every modern computational device, WiFi is a given, like installing your OS on solid-state storage or having USB-C ports. Every device should have it. Should you feel inclined to disable it? Sure.

Having it on the board is just less hassle and is usually upgradable with different M.2 WiFi cards.

But I realize that some people just want a board that does the bare minimum, no built-in sound, no USB, no ethernet: just a CPU-to-PCI-slot converter. I respect that, but it's very old-fashioned in my mind.

I'm definitely more of the old-fashioned sentiment with regard to upgradability; there was a lengthy discussion on that somewhere else before being deleted for being an off-topic tangent in the thread.

Being able to choose exactly which hardware goes into a system allows me to tailor it best to whatever OS I want to run on it, as hardware support under anything that isn't Windows tends to be a real crapshoot, whether that's Linux or BSD, and doubly so if we're talking a Hackintosh build (not that that'll be a thing any more once Apple drops Intel support like they did PowerPC and 68k beforehand).

However, the lack of PCIe lanes on modern motherboards effectively forces you into using what's integrated for the most part, and hoping you don't have weird conflicts with your OS of choice or that changes in technology don't leave you feeling like you bet on the wrong horse. (Case in point: old HPE servers with P420i or similar RAID controllers integrated that lack a true HBA/IT mode that works nicely with ZFS. The solution there is basically "unplug your drive backplanes from the motherboard and plug them into an add-on LSI SAS HBA flashed with IT mode firmware", making the integrated RAID controller a waste of space.)

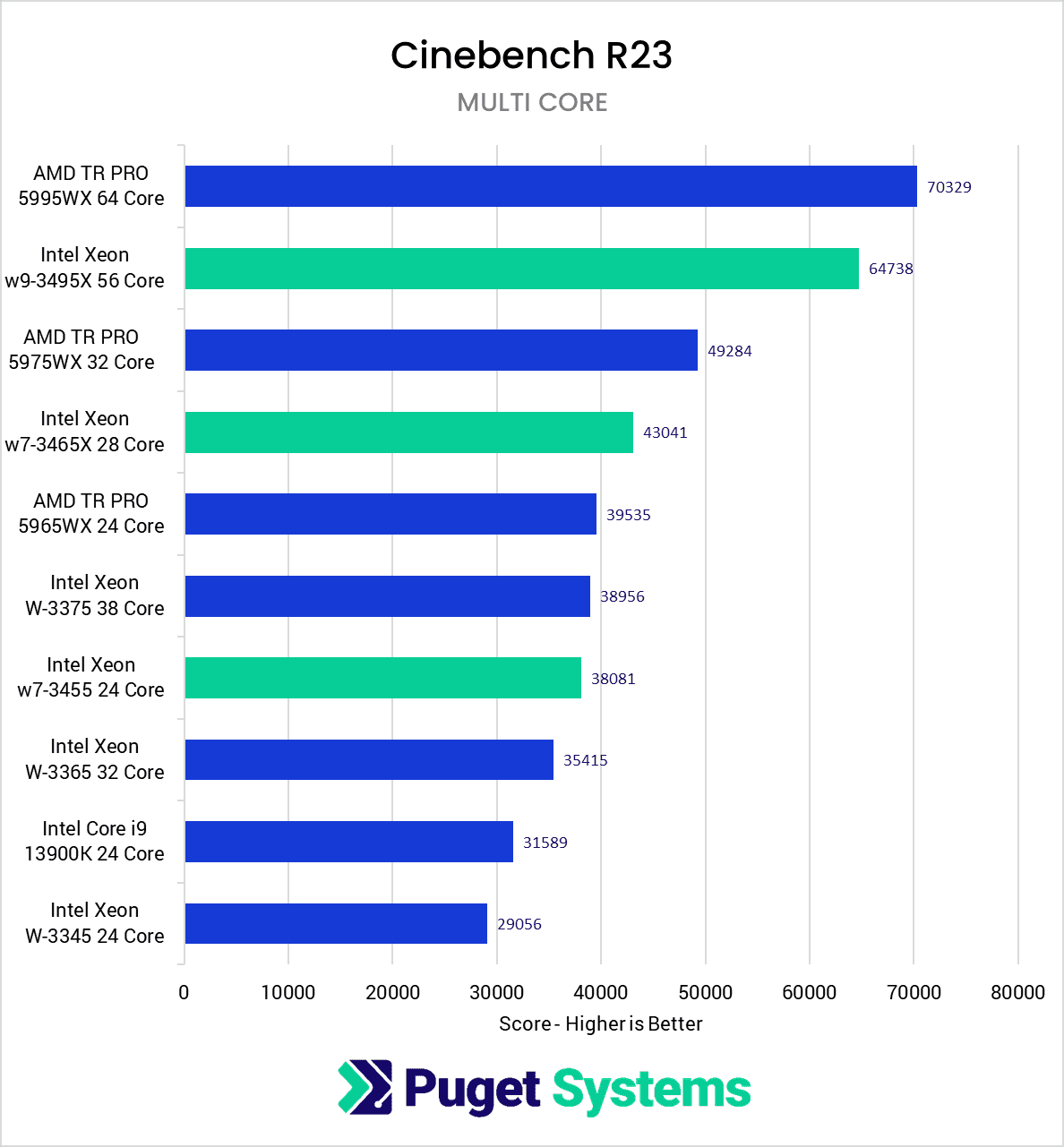

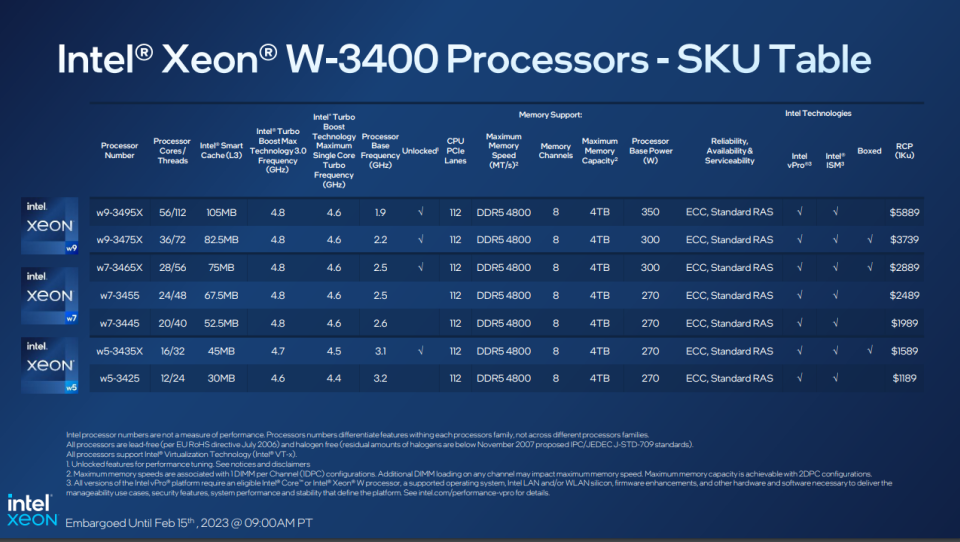

As an aside, how's your Threadripper/X399 build holding up? Got a cheap 1950X setup on the way, figured it'd be a good platform for budget homelabbing without the jank that comes with off-lease HPE or Dell EMC servers like absurd boot times and fans that sound like jet engines spooling up.
