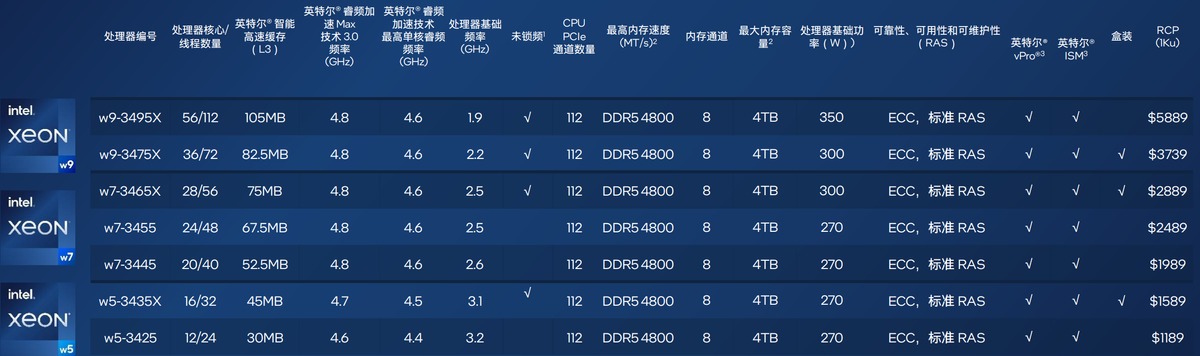

"Only" 4 channels and 6 cores open the door for reasonable pricing (as it would be more of a pure workstation affair, leaving the high-end segment alone regardless of how low you price it), but I also suspect that the motherboard + CPU will be stupid expensive even if one of them looks reasonably priced at first. The W790 board will be pricey, I imagine.

Has Intel Abandoned the HEDT Market?

- Thread starter Zarathustra[H]

- Start date

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

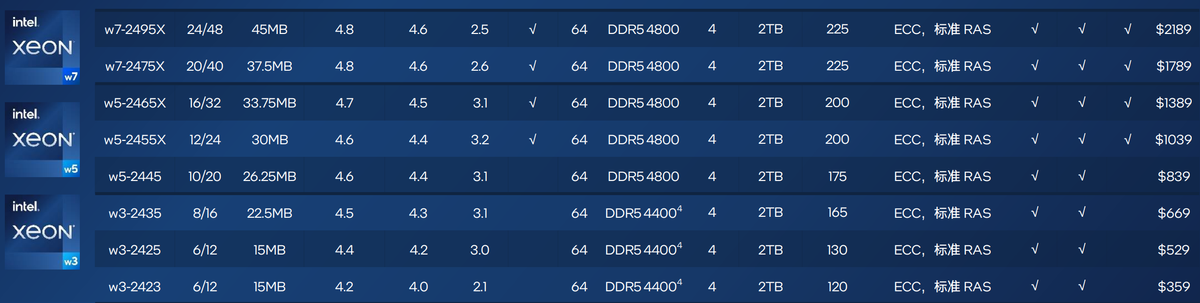

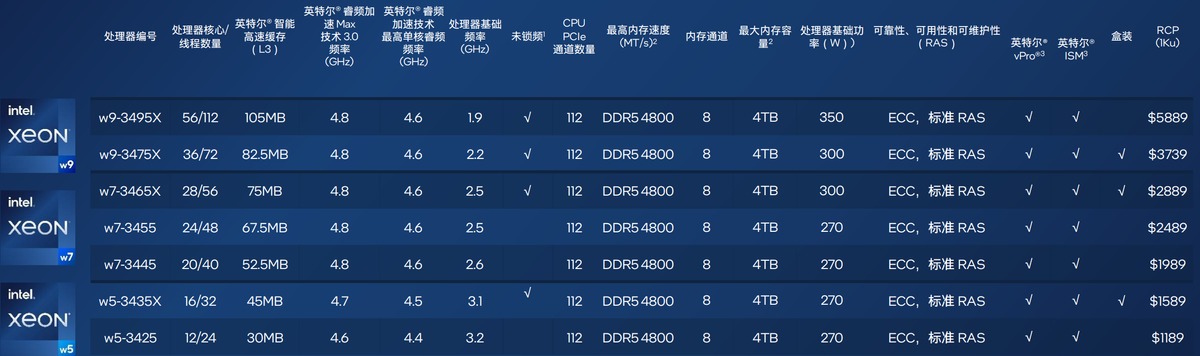

Supposed Leaked pricing

Grebuloner

[H]ard|Gawd

- Joined

- Jul 31, 2009

- Messages

- 2,040

Well, it's not egregious, but will definitely need a little extra saving up...

> That is almost reasonable. Compared with what they wanted for Xeon scalable before AMD clobbered them.

Agreed. The overlap on a couple is weird but not bad.

Grebuloner

[H]ard|Gawd

- Joined

- Jul 31, 2009

- Messages

- 2,040

Intel is claiming XMP support for memory. XMP-enabled RDIMMS from G.Skill/Corsair/etc.? Please oh please... Would be really nice to have some better than JEDEC spec latency options.

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

More boards from Asus

https://videocardz.com/press-releas...e-and-ace-motherboards-built-for-overclocking


German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

the 2400 series is monolithic, 3400 series is EMIB

Grebuloner

[H]ard|Gawd

- Joined

- Jul 31, 2009

- Messages

- 2,040

2 ATX & 4 EPS12V connectors? How much CPU power is this thing rated for‽

> 2 ATX & 4 EPS12V connectors? How much CPU power is this thing rated for‽

Would it be because it needs 7 times the power required to fully support a PCIe 5.0 x16 slot?

Easily $1000+ boards.

> Intel is claiming XMP support for memory. XMP-enabled RDIMMS from G.Skill/Corsair/etc.? Please oh please... Would be really nice to have some better than JEDEC spec latency options.

XMP for registered RAM?

> XMP for registered RAM?

Yup. Possible!!


> Easily $1000+ boards.

Guessing $800 for the ACE, and $1100 for the full.

> 2 ATX & 4 EPS12V connectors? How much CPU power is this thing rated for‽

It’s for overclocking Xeons. How much power you got??

Grebuloner

[H]ard|Gawd

- Joined

- Jul 31, 2009

- Messages

- 2,040

> Would it be because it needs 7 times the power required to fully support a PCIe 5.0 x16 slot?

Not necessarily. There are extra PCIe power connectors on the boards that provide supplemental power to those slots. That's been going on since at least the dawn of SLI/Crossfire.

> Yup. Possible!!

The ASRock board quotes DDR5-6800+, which means either faster modules already exist, or overclocking the RCD chip in addition to the memory is viable (one of the articles I read was unsure if this could be done).

From reading the limited board information, it seems that the boards are hardwired into a particular memory channel configuration. While both 4- and 8- channel CPUs are supported, a 2400 in an 8-ch board would be limited to only being able to use 4 of the 8 slots, while in a 4-ch board with 8 slots, a 3400 would be limited to 4 channels, but could use all the slots. Makes it a little more challenging to think about getting a 2400 as a starter in an 8-ch board to upgrade to the 3495 down the line when it's cheap. It's also looking like the SP Xeons may not be socket compatible, either, but someone else can take that risk for us (would love to be able to slot a Max in, someday, but the 3495 is probably the fastest option of all SPR).
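The channel and slot behaviour described above can be sketched in a few lines. This is only an illustration of the poster's reading of the board information, not a confirmed description of how any W790 board behaves. The function name, the `MemoryLayout` type, and the assumption that slots are spread evenly across the board's hardwired channels are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryLayout:
    active_channels: int  # channels that actually carry traffic
    usable_slots: int     # DIMM slots that can be populated


def usable_memory(cpu_channels: int, board_channels: int, board_slots: int) -> MemoryLayout:
    """Estimate usable channels/slots for a CPU in a board with hardwired channels.

    Assumption (hypothetical): slots are divided evenly across the board's
    channels, and a CPU with fewer channels than the board leaves the extra
    channels, and their slots, unusable.
    """
    if board_channels <= 0 or board_slots % board_channels != 0:
        raise ValueError("slots must divide evenly across board channels")
    slots_per_channel = board_slots // board_channels
    active = min(cpu_channels, board_channels)
    return MemoryLayout(active_channels=active, usable_slots=active * slots_per_channel)


# 4-channel 2400-series CPU in an 8-channel, 8-slot board
print(usable_memory(cpu_channels=4, board_channels=8, board_slots=8))
# 8-channel 3400-series CPU in a 4-channel, 8-slot board
print(usable_memory(cpu_channels=8, board_channels=4, board_slots=8))
```

Under those assumptions the first case comes out at 4 channels and 4 usable slots, and the second at 4 channels with all 8 slots usable, which matches the two scenarios in the post.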

> It’s for overclocking Xeons. How much power you got??

So true. EPS12V is 300W, so that's a mighty amount of power. I remember reading about the struggles of the Threadripper OCs to supply and cool chips pulling >500W, and that only used 2 connectors. Looking at some of the labeling on the Asus boards, it seems likely that the boards are built to manage two PSUs themselves instead of using a separate power board to combine them.
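For a rough sense of scale, here is the back-of-envelope arithmetic behind the connector discussion. The 300W per EPS12V connector is the figure quoted in the post above, not a verified rating, and the 75W per x16 slot is the commonly cited slot power limit. The function names are made up for illustration.

```python
# Figure quoted in the thread; treat as an assumption, not a verified rating.
EPS12V_WATTS = 300
# Commonly cited power limit for a x16 slot without supplemental connectors.
PCIE_X16_SLOT_WATTS = 75


def eps_budget(connectors: int, watts_each: int = EPS12V_WATTS) -> int:
    """Total power available through the given number of EPS12V connectors."""
    return connectors * watts_each


def slot_budget(slots: int, watts_each: int = PCIE_X16_SLOT_WATTS) -> int:
    """Total power needed to feed every x16 slot at its slot limit."""
    return slots * watts_each


print(eps_budget(4))   # four EPS12V connectors, as on the Asus boards
print(eps_budget(2))   # two connectors, as on the Threadripper boards mentioned
print(slot_budget(7))  # seven fully loaded x16 slots
```

With those inputs, four connectors give 1200W against 600W for two, and seven fully loaded slots would want 525W, so slot power alone would not account for the extra CPU connectors.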

Somewhat unfortunately, the top SKU is a full GHz slower than the 13900K, which really puts a damper on the all-P-core dream. It requires a $2000 SKU to match the 7950X's multithreaded performance and none of them are faster for lightly-threaded workloads, so you need to really want the lanes.

The game is different if you're willing to overclock, but 16 p-cores at 5.x GHz is going to be upwards of 500W which is not exactly consumer levels of power consumption.

> Somewhat unfortunately, the top SKU is a full GHz slower than the 13900K, which really puts a damper on the all-P-core dream. It requires a $2000 SKU to match the 7950X's multithreaded performance and none of them are faster for lightly-threaded workloads, so you need to really want the lanes.
>
> The game is different if you're willing to overclock, but 16 p-cores at 5.x GHz is going to be upwards of 500W which is not exactly consumer levels of power consumption.

Always true on Xeons. You pick a balance between GHz and core counts. As for power... you pay to play.

> Always true on Xeons. You pick a balance between GHz and core counts. As for power... you pay to play.

Right, and the added cost of not just cooling down the rig but the environment too! Have to factor in a dedicated minisplit for the room this sits in. Benefit in colder climates: no additional heat required for comfort! :-P

> Not necessarily. There are extra PCIe power connectors on the boards that provide supplemental power to those slots. That's been going on since at least the dawn of SLI/Crossfire.

I think this is a relatively recent thing. My Asus DSEB-DG and P6T6 WS never had them, nor did my Gigabyte X79S-UP5; they were all workstation boards with multi-GPU support. My X670E ProArt has one, though that could be because of the Thunderbolt power delivery support.

> I think this is a relatively recent thing. My Asus DSEB-DG and P6T6 WS never had them, nor did my Gigabyte X79S-UP5; they were all workstation boards with multi-GPU support. My X670E ProArt has one, though that could be because of the Thunderbolt power delivery support.

It is. And you’re also right - Thunderbolt etc. have to spit out power too, which takes more.

> Somewhat unfortunately, the top SKU is a full GHz slower than the 13900K, which really puts a damper on the all-P-core dream. It requires a $2000 SKU to match the 7950X's multithreaded performance and none of them are faster for lightly-threaded workloads, so you need to really want the lanes.
>
> The game is different if you're willing to overclock, but 16 p-cores at 5.x GHz is going to be upwards of 500W which is not exactly consumer levels of power consumption.

Oh and I should point out - we don’t have reliable benchmarks on any of these yet. Just the server-grade chips, which are somewhat different, and some leaked pre-release kit with known issues. So no guarantees yet.

German Muscle

Supreme [H]ardness

- Joined

- Aug 2, 2005

- Messages

- 6,943

> The game is different if you're willing to overclock, but 16 p-cores at 5.x GHz is going to be upwards of 500W which is not exactly consumer levels of power consumption.

It is pretty much on point with my 7980XE.

Grebuloner

[H]ard|Gawd

- Joined

- Jul 31, 2009

- Messages

- 2,040

> I think this is a relatively recent thing. My Asus DSEB-DG and P6T6 WS never had them, nor did my Gigabyte X79S-UP5; they were all workstation boards with multi-GPU support. My X670E ProArt has one, though that could be because of the Thunderbolt power delivery support.

For workstation-focused boards, perhaps. For enthusiasts (whom I think the Asus boards are also targeting given the OC options; stock CPUs will only go to the low 400W range), I'm looking at an nForce 590 SLI board on my wall with a molex connector at the bottom for SLI.

> For workstation-focused boards, perhaps. For enthusiasts (whom I think the Asus boards are also targeting given the OC options; stock CPUs will only go to the low 400W range), I'm looking at an nForce 590 SLI board on my wall with a molex connector at the bottom for SLI.

I'm assuming that's the EVGA board, with the molex in the bottom corner. Pretty sure that was due to the two-chip setup of the 590 chipset and how the traces were run. They attached one x16 slot to the MCP and one to the SPP to get full bandwidth.

Good ol' nVidia making things complicated to run SLI. On my DSEB-DG I could only run Crossfire, so I had a Precision 690 at the time too with a riser board with the nVidia switch chip on it to allow SLI to work with my Quadros at the time. Funny thing is Crossfire worked as well in the riser board.

> It requires a $2000 SKU to match the 7950X's multithreaded performance and none of them are faster for lightly-threaded workloads, so you need to really want the lanes.

Do we have much confidence in that? The 2465X ($1389) has twice the P-cores of a 12900K; if there is any improvement and you're ready to give it a lot of power, could it not be close?

Considering that an 8 P-core-only 12900K would do a tiny bit more than half of a 7950X's R23 score.

We will have to see. We never saw how a high count of Alder Lake-type P-cores scales, and it could run into power and heat issues.

> Do we have much confidence in that? The 2465X ($1389) has twice the P-cores of a 12900K; if there is any improvement and you're ready to give it a lot of power, could it not be close?
>
> Considering that an 8 P-core-only 12900K would do a tiny bit more than half of a 7950X's R23 score.
>
> We will have to see. We never saw how a high count of Alder Lake-type P-cores scales, and it could run into power and heat issues.

To me the high power numbers indicate that Intel will keep the all-thread workload processor frequency up pretty long.

Whether IPC is as effective as in latest Zen is something we'll find out soon.

NamelessPFG

Gawd

- Joined

- Oct 16, 2016

- Messages

- 893

> Somewhat unfortunately, the top SKU is a full GHz slower than the 13900K, which really puts a damper on the all-P-core dream. It requires a $2000 SKU to match the 7950X's multithreaded performance and none of them are faster for lightly-threaded workloads, so you need to really want the lanes.
>
> The game is different if you're willing to overclock, but 16 p-cores at 5.x GHz is going to be upwards of 500W which is not exactly consumer levels of power consumption.

Here's the thing: you CAN overclock now.

Intel hasn't offered an overclockable Xeon in ages, and prior to Sapphire Rapids, nobody in their right mind would buy a Xeon over a Threadripper/EPYC build because AMD clearly had the edge in that market, and are now price-gouging as badly as Intel does to nobody's surprise (while also neglecting Threadripper completely in favor of EPYC).

While Sapphire Rapids is almost certainly well out of my budget in the short term, I'm looking forward to what these systems can do once depreciation hits and they start trickling down in the used market, be it enthusiast board builds or the typical HPE/Dell EMC/etc. stuff that tends to go off-lease in a few years.

I don't necessarily need the high-core-count configurations for a desktop/workstation (servers are another story when you start going crazy with VMs), but I do want to hit high clock speeds for single-threaded performance's sake and I want more PCIe lanes, something that mainstream desktop platforms are falling short on now that a typical gaming PC motherboard has just three or even only two PCIe slots available, and of those, it's usually the 16 CPU lanes being optionally bifurcated into x8/x8 while the third is just PCIe 3.0 x4 electrically. (Before anyone points out how the RTX 4080 and 4090 are trying to make 4-slot GPU heatsinks the norm: a waterblock will solve that quite easily.)

The ball's now back in AMD's court to stop neglecting Threadripper and the HEDT market.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,000

No built in WiFi

Leaked geekbench numbers:

https://videocardz.com/newz/intel-2...otted-on-geekbench-slower-than-core-i9-13900k


NamelessPFG

Gawd

- Joined

- Oct 16, 2016

- Messages

- 893

> No built in WiFi

I'd like to think that anyone building HEDT at this point won't bother with any sort of integrated NIC and instead upgrade their network as standards improve, so you're not stuck with Wi-Fi 6(E) when Wi-Fi 7 releases, and so on.

There's all sorts of PCIe and USB Wi-Fi adapters that you can buy out there, not to mention Ethernet bridges.

If anything, I'm happy that these boards are sporting at least one 10GBASE-T port as a rule (something that is still frustratingly not the case on all but $1,000~ MSRP top-of-the-line consumer boards), often two, sometimes with a bonus IPMI port thrown in for good measure, and of course, there's plenty of PCIe lanes available for when you want to join the big leagues and try out QSFP28 Ethernet and/or InfiniBand.

But as for why they didn't bother with a Wi-Fi CNV like the consumer platforms, I guess it's down to lack of board space at this point. Those W790 boards look pretty packed thanks to the CPU socket, all the memory channels, PCIe lanes running below, and the VRMs required to keep it all juiced.

> Leaked geekbench numbers:
>
> https://videocardz.com/newz/intel-2...otted-on-geekbench-slower-than-core-i9-13900k

Those are the flawed results I mentioned. It’s clearly a QS or ES CPU that isn’t boosting fully for some reason. No one knows why; we need to see release hardware parts.

> If anything, I'm happy that these boards are sporting at least one 10GBASE-T port as a rule (something that is still frustratingly not the case on all but $1,000~ MSRP top-of-the-line consumer boards), often two, sometimes with a bonus IPMI port thrown in for good measure, and of course, there's plenty of PCIe lanes available for when you want to join the big leagues and try out QSFP28 Ethernet and/or InfiniBand.

Well, that's not optimal for too many users either. Hardcore computing is all at 25, 40 or 100 Gbit, not to mention fiber, while consumers and homelabs are at best stuck with 2.5.

My guess is very few of those 10 Gbit ports will ever run 10 Gbit.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,000

> Leaked geekbench numbers:
>
> https://videocardz.com/newz/intel-2...otted-on-geekbench-slower-than-core-i9-13900k

Yikes.... here I was expecting something to blow current TR5000 out of the water

> I'd like to think that anyone building HEDT at this point won't bother with any sort of integrated NIC and instead upgrade their network as standards improve, so you're not stuck with Wi-Fi 6(E) when Wi-Fi 7 releases, and so on.
>
> There's all sorts of PCIe and USB Wi-Fi adapters that you can buy out there, not to mention Ethernet bridges.
>
> If anything, I'm happy that these boards are sporting at least one 10GBASE-T port as a rule (something that is still frustratingly not the case on all but $1,000~ MSRP top-of-the-line consumer boards), often two, sometimes with a bonus IPMI port thrown in for good measure, and of course, there's plenty of PCIe lanes available for when you want to join the big leagues and try out QSFP28 Ethernet and/or InfiniBand.
>
> But as for why they didn't bother with a Wi-Fi CNV like the consumer platforms, I guess it's down to lack of board space at this point. Those W790 boards look pretty packed thanks to the CPU socket, all the memory channels, PCIe lanes running below, and the VRMs required to keep it all juiced.

I just like having WiFi on the board without taking up a PCI-E slot.

You don't really need WiFi... until you do. In my opinion, for every modern computational device, WiFi is a given, like installing your OS on solid-state storage or having USB-C ports. Every device should have it. Should you feel inclined to disable it? Sure.

Having it on the board is just less hassle and is usually upgradable with different M.2 WiFi cards.

But I realize that some people just want a board that does the bare minimum, no built-in sound, no USB, no ethernet: just a CPU-to-PCI-slot converter. I respect that, but it's very old-fashioned in my mind.
