Cr4ckm0nk3y

Gawd · Joined: Jul 30, 2009 · Messages: 847

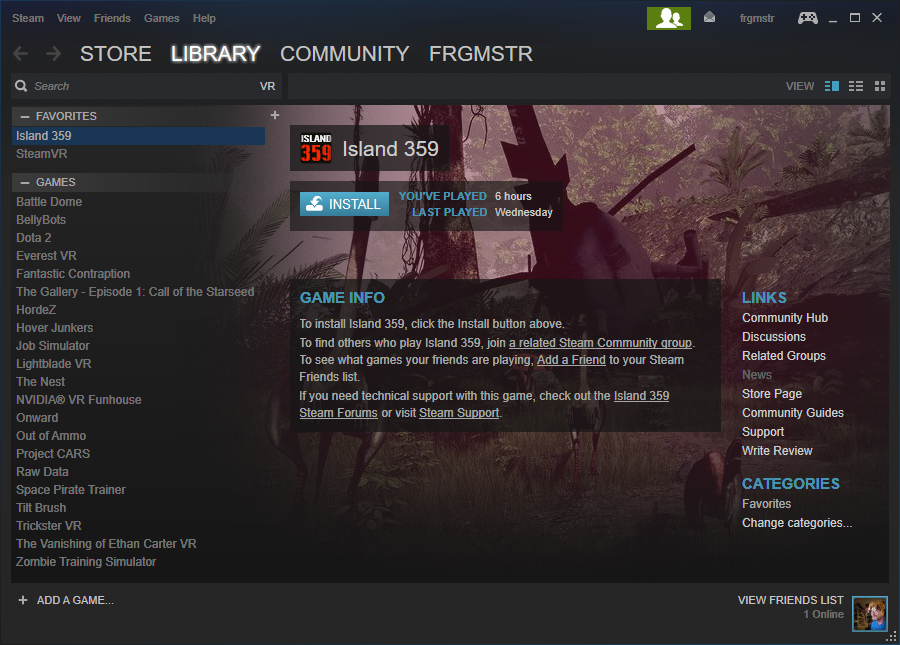

> I don't really consider the current batch of "VR" games much more than cheap arcade games. Sure, better examples are coming, but they are still hacking together the tools that inherently come with DX12/Vulkan. We are talking about ATW everywhere with APIs that don't currently support the functionality directly.

OK, I take back my previous comment. This quote of yours is the silliest thing ever.

It is clear you have no idea what you are talking about. Do you even own a VIVE/Rift or have you tried any VR games in depth?
