[H] reviews have ALWAYS had a small sample of games tested.

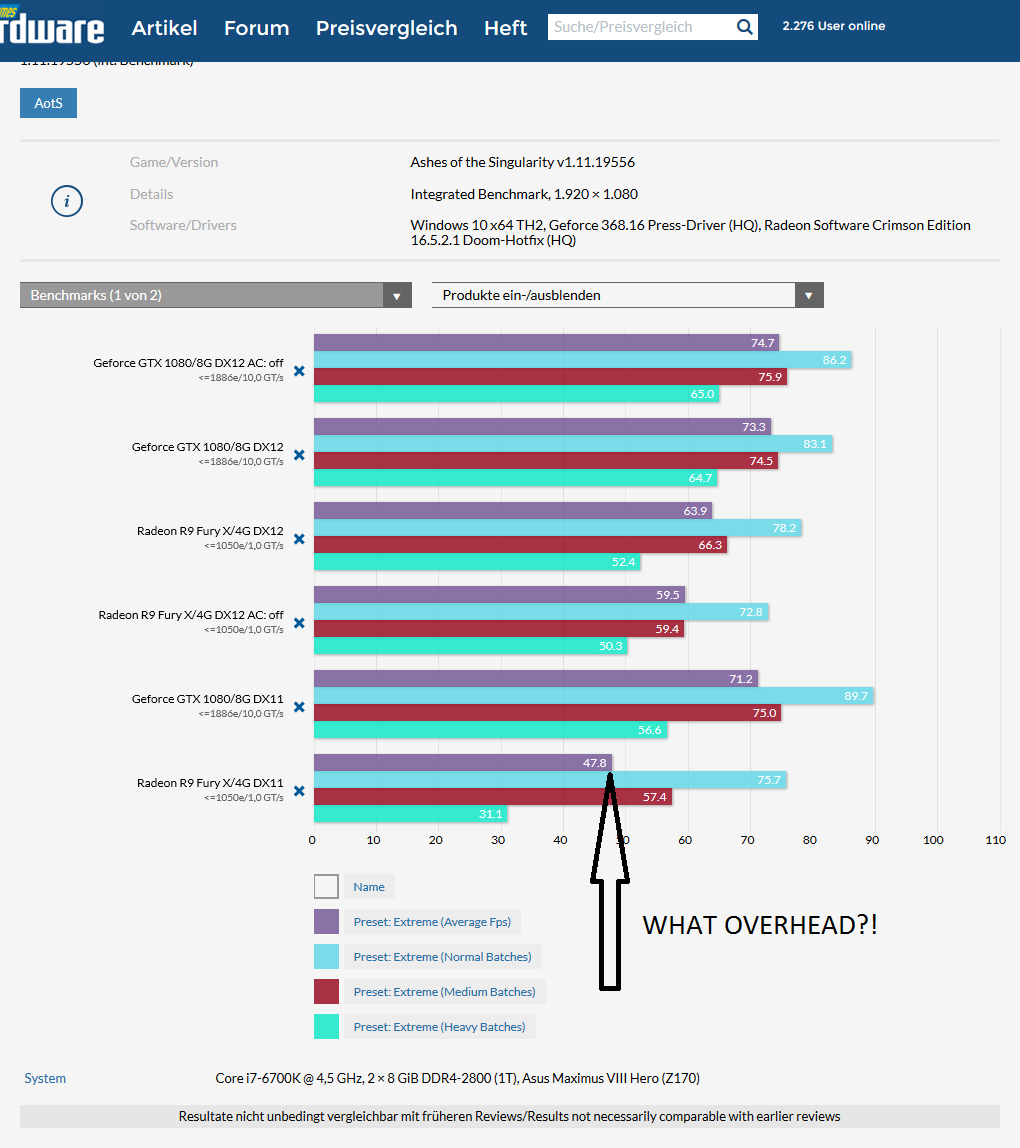

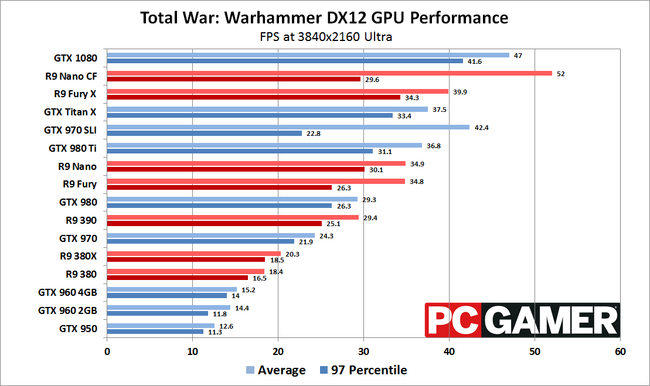

You people don't understand basic maths if you think a small handful of games is a reliable metric for judging GPU hardware. I merely raised an example: if you took DX12 Quantum Break, where a 390 = 980 Ti, Hitman DX12, etc., your small sample would be totally AMD-biased, and NV GPUs would look shit in comparison.

Do you people not see how testing only a handful of games, and selecting mostly titles sponsored by NVIDIA, skews the results in their favor? Really? Is it that hard a concept?

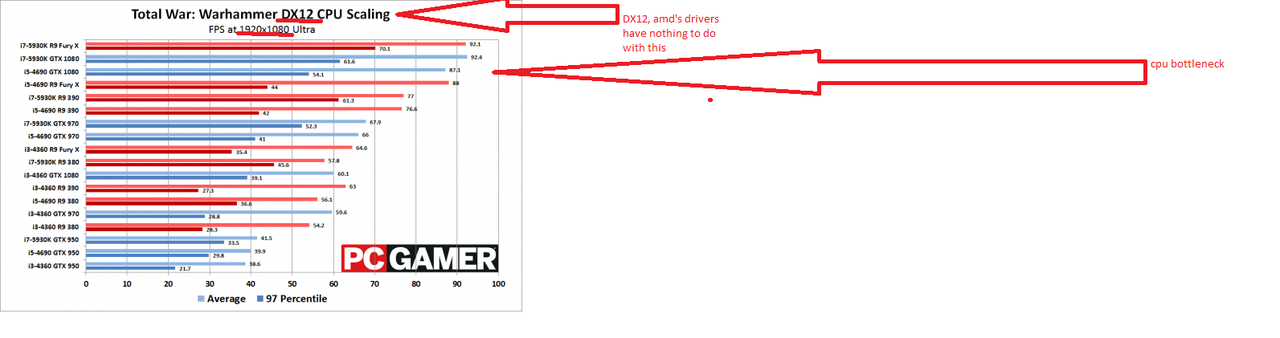

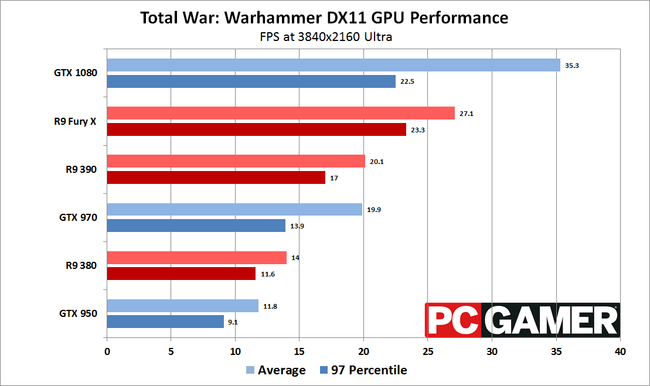

Just to give you another example.

Fury X = 1080 in DX12 Warhammer.

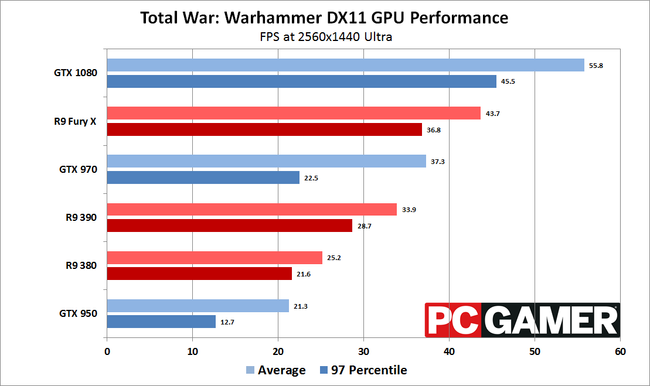

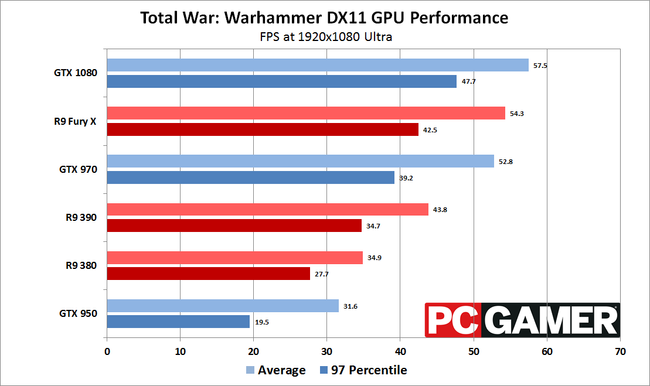

The current game selection again: 5 NV-sponsored titles out of 6 tested.

Good to see how AMD GPUs perform in NV-sponsored games, for sure. AMD doesn't appreciate it, and I can see why they won't engage with this site.

Love your bias.

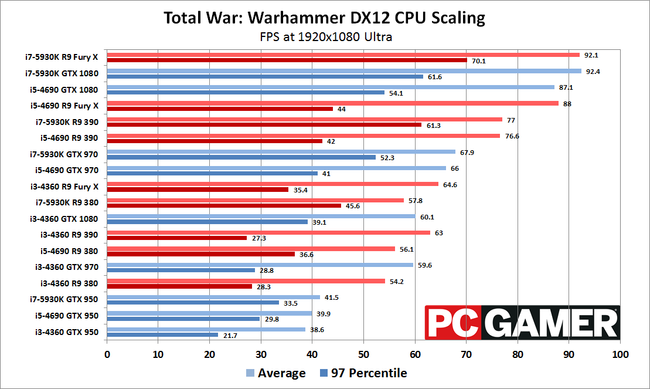

Total War: Warhammer benchmarks strike fear into CPUs | PC Gamer

Showing us CPU-bottlenecked settings of a game now?
