AMD Polaris GCN 4.0 Macau China Event

- Thread starter FrgMstr

Willypants

Limp Gawd

- Joined

- Sep 12, 2015

- Messages

- 141

Wasn't today supposed to be the first day of the Macau event? Anyone hear anything?

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

After all, the Fury X wasn't as bad a card as you all painted it to be. Yes, it was expensive, but so have Nvidia cards been in the past, without all the negative verbiage that the Fury X got. That is the impression I often get from reading your reviews: when Nvidia gets something wrong you talk about it, but in a much more measured manner. With AMD, there seems to be no respect.

It's not a bad card. I don't think Kyle (or Brent, whoever did the review) suggested it was a bad card, just that he pointed out several flaws, and considering the state of the competition (the best Nvidia card in YEARS), it was not very appetizing.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

This is one crazy-ass thread. Man, you guys are trying [H]ard to call them biased.

I guess they missed the Nvidia GTX 1080 paper launch memo?

Like Pascal is high-clocked Maxwell.

And the Fury X is just a bigger Hawaii with water cooling, right? Use some logic, man.

After all, the Fury X wasn't as bad a card as you all painted it to be. Yes, it was expensive, but so have Nvidia cards been in the past, without all the negative verbiage that the Fury X got. That is the impression I often get from reading your reviews: when Nvidia gets something wrong you talk about it, but in a much more measured manner. With AMD, there seems to be no respect.

It needs more power than the 980 Ti, it needs water cooling, and it's marginally slower overall depending on resolution. It literally offered nothing more than what had already been out there for months beforehand.

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

It needs more power than the 980 Ti, it needs water cooling, and it's marginally slower overall depending on resolution. It literally offered nothing more than what had already been out there for months beforehand.

On top of this, you can overclock most 980 Tis to around 1450 MHz, which closes the compute gap significantly and further widens the geometry performance gap. No need for water cooling.

Frank Burns

n00b

- Joined

- Mar 16, 2016

- Messages

- 49

It's not a bad card. I don't think Kyle (or Brent, whoever did the review) suggested it was a bad card, just that he pointed out several flaws, and considering the state of the competition (the best Nvidia card in YEARS), it was not very appetizing.

Lack of OC was its major downside, in my opinion. Its advantage was the badass water cooler!

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

Lack of OC was its major downside, in my opinion. Its advantage was the badass water cooler!

That and the size were the only advantages. The downside was the price. AMD priced the Nano/Fury/Fury X way too high.

Frank Burns

n00b

- Joined

- Mar 16, 2016

- Messages

- 49

That and the size were the only advantages. The downside was the price. AMD priced the Nano/Fury/Fury X way too high.

The price would have been OK if the card had overclocked as initially advertised! AMD made a booboo there, bad AMD!

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

Lack of OC was its major downside, in my opinion. Its advantage was the badass water cooler!

Lack of OC isn't very meaningful, because it's entirely dependent on how aggressively the card is clocked at stock.

The point is, its main advantage was 8.6 TFLOPS vs. 6 on the 980 Ti.

That advantage disappears when you overclock the 980 Ti.

For me personally, the water cooler is a huge downside.

It was priced too high, I agree.
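The 8.6 vs. 6 TFLOPS comparison is a theoretical FP32 peak, usually computed as shader count × 2 FLOPs per clock (one fused multiply-add) × clock speed. The minimal Python sketch below runs that arithmetic. The shader counts and clocks are assumed reference specs, not figures given in the thread: Fury X with 4096 stream processors at 1050 MHz, and the 980 Ti with 2816 CUDA cores at a 1075 MHz rated boost. The 1450 MHz value is the overclock mentioned earlier in the thread. Keep in mind that peak FLOPS reveal nothing about geometry throughput or actual game performance.

```python
"""Back-of-the-envelope peak FP32 throughput (theoretical, not game performance)."""


def peak_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical FP32 peak in TFLOPS.

    Assumes every shader retires one fused multiply-add (counted as 2 FLOPs)
    per clock, which is how vendor peak figures are usually derived.
    """
    return shaders * 2 * clock_mhz * 1e6 / 1e12


# Assumed reference specs (not stated in the thread itself).
FURY_X = peak_tflops(4096, 1050)           # ~8.60 TFLOPS
GTX_980TI_STOCK = peak_tflops(2816, 1075)  # ~6.05 TFLOPS at rated boost
GTX_980TI_OC = peak_tflops(2816, 1450)     # ~8.17 TFLOPS at a ~1450 MHz overclock

if __name__ == "__main__":
    print(f"Fury X:          {FURY_X:.2f} TFLOPS")
    print(f"980 Ti (stock):  {GTX_980TI_STOCK:.2f} TFLOPS")
    print(f"980 Ti (1450):   {GTX_980TI_OC:.2f} TFLOPS")
    # The Fury X's paper advantage shrinks from ~42% to ~5% once the 980 Ti is overclocked.
    print(f"Gap at stock: {FURY_X / GTX_980TI_STOCK - 1:.0%}")
    print(f"Gap at 1450:  {FURY_X / GTX_980TI_OC - 1:.0%}")
```

Real 980 Ti cards often boost above the rated 1075 MHz, so in practice the stock gap is likely somewhat smaller than this calculation suggests.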

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

The price would have been OK if the card had overclocked as initially advertised! AMD made a booboo there, bad AMD!

Don't get me wrong, overclocks vary from card to card. That is not to say that the Fury X didn't suck when it came to overclocking.

From the sound of it, the GTX 1080 might be a bad overclocker as well. We'll have to wait for some AIB reviews.

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

Don't get me wrong, overclocks vary from card to card. That is not to say that the Fury X didn't suck when it came to overclocking.

From the sound of it, the GTX 1080 might be a bad overclocker as well. We'll have to wait for some AIB reviews.

10-15% OC was the norm before Maxwell.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

The biggest drawback for the Fury X was 4GB of VRAM, IMO. If it had had 8GB, I think the whole thing would have gone a lot differently.

I don't. We have seen numerous benchmarks where 4GB did not hold back the Fury X at 4K.

If 980s in SLI were fine for 4K, why wasn't the Fury X?

The stock price means JACK, guys. Yes, AMD did have "good" news, better than in previous calls, but they are still not healthy. The reason the stock price went up is that this was the first time in years they beat the Street, lol. When you can't go down much more, the only way is up.

I've been reading your posts on here, Rage3D and Beyond3D for years, and I'm sorry, but you, sir, have no objectivity whatsoever. You have clearly been in Nvidia's pants, like, forever.

It needs more power than the 980 Ti, it needs water cooling, and it's marginally slower overall depending on resolution. It literally offered nothing more than what had already been out there for months beforehand.

Sure, but show a bit more respect, or at least show as much as you show the competition when they fail.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,000

I don't. We have seen Numerous benchmarks where 4GB did not hold back the Fury-X @ 4k.

If the 980's in SLI Where fine for 4k why wasnt the Fury-X?

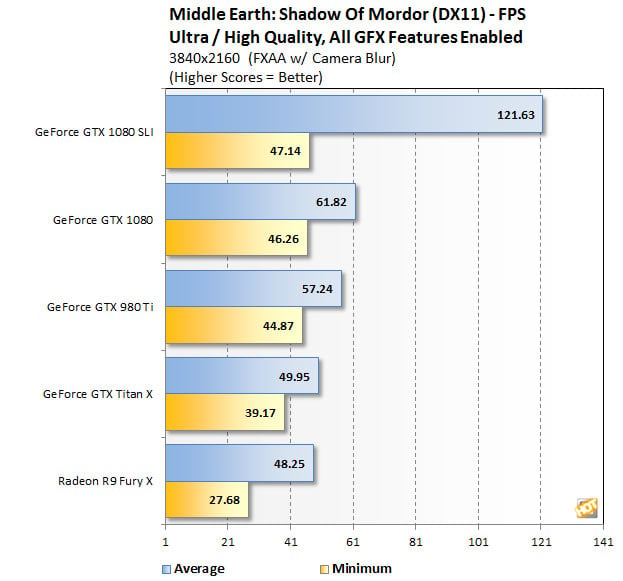

It REALLY depends. My two Titan X cards in SLI struggle in some games at 4K.

Struggle = Have to settle for 'high' instead of 'ultra' in order to maintain over 45 FPS with 60 FPS average.

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

It REALLY depends. My two Titan X cards in SLI struggle in some games at 4K.

Struggle = having to settle for 'high' instead of 'ultra' in order to stay above 45 FPS with a 60 FPS average.

And that has nothing to do with memory; it has to do with running out of GPU horsepower.

Memory was never a problem.

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,000

And that has nothing to do with memory; it has to do with running out of GPU horsepower.

Memory was never a problem.

Eh, it depends on the game and how memory-intensive it is. There are games where 1080p can go over 4GB, and others where 4K runs without going over 3GB. The end result is that memory is good to have, but it is not as 'crucial' to high-resolution gameplay as some think.
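Simple arithmetic supports the point that resolution alone doesn't determine VRAM use. Only resolution-dependent buffers (render targets, depth buffers and similar) scale with pixel count. Texture and geometry memory, which quality settings typically govern, stays the same. The sketch below assumes 4 bytes per pixel and a hypothetical count of 8 full-screen targets. Real engines vary widely in how many targets they keep and which formats they use, and MSAA or supersampling would multiply these figures.

```python
"""Rough estimate of VRAM used by resolution-dependent buffers."""

from typing import Tuple

MIB = 2**20  # bytes per MiB


def target_mib(width: int, height: int, bytes_per_pixel: int = 4) -> float:
    """Size of one full-screen render target in MiB (RGBA8 = 4 bytes per pixel)."""
    return width * height * bytes_per_pixel / MIB


def extra_mib_for_resolution(
    src: Tuple[int, int], dst: Tuple[int, int], num_targets: int, bytes_per_pixel: int = 4
) -> float:
    """Extra MiB needed when num_targets full-screen targets go from src to dst resolution."""
    per_target = target_mib(*dst, bytes_per_pixel) - target_mib(*src, bytes_per_pixel)
    return num_targets * per_target


if __name__ == "__main__":
    uhd, fhd = (3840, 2160), (1920, 1080)
    print(f"One 4-byte target at 4K:    {target_mib(*uhd):.1f} MiB")  # 31.6
    print(f"One 4-byte target at 1080p: {target_mib(*fhd):.1f} MiB")  # 7.9
    # 8 full-screen targets is a hypothetical count, not measured from any game.
    print(f"Extra for 8 targets, 1080p -> 4K: {extra_mib_for_resolution(fhd, uhd, 8):.0f} MiB")  # 190
```

Under these assumptions, going from 1080p to 4K adds about 190 MiB, which is small relative to a 4GB card. That fits the observation that a texture-heavy game can exceed 4GB at 1080p while a lighter one stays under 3GB at 4K.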

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

Eh, it depends on the game and how memory-intensive it is. There are games where 1080p can go over 4GB, and others where 4K runs without going over 3GB. The end result is that memory is good to have, but it is not as 'crucial' to high-resolution gameplay as some think.

Very true. Also, let's not forget that Fury X CrossFire had better scaling and results in 4K gaming than Titan X SLI. So if anything, you would have had a better gaming experience at 4K with Fury X in CrossFire.

Conclusion - AMD Radeon R9 Fury X CrossFire at 4K Review

"In our experiences today we have found that AMD Radeon R9 Fury X CrossFire at $1300 competes well with the GeForce GTX 980 Ti SLI at the same price. In some instances that same money will buy you a better experience on Fury X CrossFire, even compared to the more expensive GTX TITAN X SLI"

Of course, that's if CrossFire is working!

KazeoHin

[H]F Junkie

- Joined

- Sep 7, 2011

- Messages

- 9,000

Very true. Also, let's not forget that Fury X CrossFire had better scaling and results in 4K gaming than Titan X SLI. So if anything, you would have had a better gaming experience at 4K with Fury X in CrossFire.

Conclusion - AMD Radeon R9 Fury X CrossFire at 4K Review

"In our experiences today we have found that AMD Radeon R9 Fury X CrossFire at $1300 competes well with the GeForce GTX 980 Ti SLI at the same price. In some instances that same money will buy you a better experience on Fury X CrossFire, even compared to the more expensive GTX TITAN X SLI"

Of course, that's if CrossFire is working!

My hope is that AMD will push multi-GPU harder and harder, and CFX will become a standard feature.

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

Very true. Also, let's not forget that Fury X CrossFire had better scaling and results in 4K gaming than Titan X SLI. So if anything, you would have had a better gaming experience at 4K with Fury X in CrossFire.

Conclusion - AMD Radeon R9 Fury X CrossFire at 4K Review

"In our experiences today we have found that AMD Radeon R9 Fury X CrossFire at $1300 competes well with the GeForce GTX 980 Ti SLI at the same price. In some instances that same money will buy you a better experience on Fury X CrossFire, even compared to the more expensive GTX TITAN X SLI"

Of course, that's if CrossFire is working!

CF generally appears to scale better, although the GTX 1080 SLI results I've seen have been **insanely** impressive. It's not really about the Fury X per se.

I'm curious how big a problem 4GB of VRAM is in rise of the tongue raider, for instance; that game maxes out my 6GB.

Frankly, I like AMD's idea of eventually eliminating CrossFire and instead treating multiple GPUs as a single larger one. This would eliminate all of the drawbacks we have today (wasted RAM, micro-stuttering, special profiles). However, you'd need some fancy interconnect designs and logic to make it work. But I think that should be the future of multi-GPU.

Also, lol at Tongue Raider...

Brackle

Old Timer

- Joined

- Jun 19, 2003

- Messages

- 8,566

My hope is that AMD will push multi-GPU harder and harder, and CFX will become a standard feature.

Well, that's the problem. Multi-GPU is all on the developer, so you have to pray they make it a standard feature.

DX12 only, too.

They will only fail if they somehow screw up the launch and launch late; I have been saying that for at least a month now.

Sure, but show a bit more respect, or at least show as much as you show the competition when they fail.

I've been reading your posts on here, Rage3D and Beyond3D for years, and I'm sorry, but you, sir, have no objectivity whatsoever. You have clearly been in Nvidia's pants, like, forever.

After reading your posts, I can say the same thing about your views on AMD/ATi, not to mention that I can also say you have no idea what you're talking about. Fair enough?

As for everyone here who thinks [H] is biased, that's baloney. You know it, I know it, and everyone who has been here for more than 5 years knows how [H] got blacklisted by nV and the ugliness that ensued after that.

What you want is what AMD wants: a favorable review that justifies your purchase. Guess what, the real world doesn't work that way. Better yet, no site really gave a "favorable" review to the Fury X; it's a power-hungry, late, inefficient series (excepting the Nano on two of those counts), just as the R3xx series was too. AMD had nothing compelling enough to offer buyers; that is why their market share didn't rebound after launch. Even almost a year later, they had only gained 3%... come on, everyone saw what AMD's cards were last year.

The best part before launch? AMD's fake PR performance slides. Yeah, that's really great when they turn off AA. WTF. So AMD's own mistakes fed the overhype of the Fury X and ultimately led to its downfall. Then there's AMD stating that their cards use a certain amount of power when that isn't the real power usage; they will go higher. All of these things AMD does reflect on them, just as what nV did in the past reflects on nV. Only a person with a bias that ingrained can't distinguish these things.

Let's not even get into async compute and how AMD's marketing flat-out didn't know what the hell they were talking about, presenting developers' statements about the total performance gains from low-level APIs in console games as async improvements.

Only a person who doesn't understand that core underutilization shouldn't be that large would argue against that (and if the cores truly are more than 10% underutilized, ya got a shit GPU, man). But AMD knew full well what they wanted to market and lied about it, because the general public doesn't understand those things.
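The underutilization argument can be made concrete with a simplified upper-bound model. This model is an assumption of the sketch, not something AMD or any developer published. If the shader cores sit idle a fraction u of the time, and async compute could at best fill every idle cycle with useful work, then the same workload finishes in (1 − u) of the original time, so the speedup is capped at 1 / (1 − u). Working backward, a claimed gain g requires the cores to have been idle at least 1 − 1/(1 + g) of the time. The function names are hypothetical.

```python
"""Upper bound on async-compute gains under a simple idle-time model (an assumption)."""


def max_async_speedup(idle_fraction: float) -> float:
    """Best-case speedup if overlapping work fills every idle shader cycle.

    Busy time goes from (1 - idle_fraction) of capacity to 100%, so the
    same work takes (1 - idle_fraction) of the original time.
    """
    if not 0.0 <= idle_fraction < 1.0:
        raise ValueError("idle_fraction must be in [0, 1)")
    return 1.0 / (1.0 - idle_fraction)


def min_idle_for_gain(gain: float) -> float:
    """Smallest idle fraction that could explain a claimed fractional gain (0.10 = 10%)."""
    if gain < 0.0:
        raise ValueError("gain must be non-negative")
    return 1.0 - 1.0 / (1.0 + gain)


if __name__ == "__main__":
    # 10% idle time caps the gain at about 11%.
    print(f"{max_async_speedup(0.10):.3f}x")
    # Illustrative input only: a hypothetical claimed 30% gain would need
    # the cores idle at least ~23% of the time.
    print(f"{min_idle_for_gain(0.30):.1%}")
```

Real gains also depend on whether the overlapped work competes for the same bandwidth and caches, so actual results would normally fall below this bound instead of reaching it.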

Let's get to GameWorks and GPUOpen. Guess what, AMD is leveraging GPUOpen just as nV has done with GameWorks, or trying to; let's see if it pans out. These are companies, man, and they will do anything and everything to get into your pocket; it's like a prostitute looking for their next meal. They only flash ya what looks good, and the rest you just don't want to see because it's ugly or diseased.

Actually, to me, HardOCP not being brainwashed or influenced by the sleazy marketing demonstrations, and instead sitting down one-on-one with the actual hardware and testing how it performs, is way more important.

Reality check on games for some: developers make games, not AMD or Nvidia. Developers decide what to put inside their games. Nvidia just has a better relationship with developers and provides more assistance; that is one of AMD's weaknesses. AMD is addressing this, and how effective that will be remains to be seen. HardOCP tests some of the most played games, like GTA V, which uses both AMD and Nvidia technologies, and Nvidia does much better there. BF4 was an AMD technology game from the start, with Mantle etc., yet Nvidia does better now. Give credit where credit is due: Nvidia improved their BF4 performance tremendously. The Witcher 3 is an awesome game, looks great, etc., and it's very popular, so why would you not include it? Gamers would probably want to know how it plays. HardOCP focuses on current facts rather than trying to predict future performance through conjecture. It would have been very hard to know a few years ago that the 7970 would eventually crush the 680, so one could not just do a review and know the future. So if you want to know how current technology runs today with the least amount of BS, then HardOCP is for you.

AMD has made great strides in API development, which should benefit everyone; Nvidia now supports Vulkan and is working with developers to make it better for the games they are developing. That does not mean Nvidia is actively trying to destroy AMD unfairly, though in a competitive sense that can be the goal.

I've been reading HardOCP for a while and I have mostly AMD video cards and CPUs. Wow, HardOCP has really influenced my buying decisions. The truth of the matter is, they have

harmattan

Supreme [H]ardness

- Joined

- Feb 11, 2008

- Messages

- 5,129

I've been to more "industry" events over the years than I can count. They can be good for networking, and some of the peer workshops where you talk with other experts in the field can be useful. And heckfire, it can be fun to have your food/drinks/entertainment paid for (although there's so much regulatory clearance nowadays in my industry that it's almost not worth the effort of going). Otherwise, the company presentations are normally high-level tripe designed to promote a product and groupthink (as an aside, the show Silicon Valley gets this to a T). I can't remember a product demo or reveal I've been to where I didn't want to roll my eyes at some point.

I'd much rather be at my desk getting down and dirty with a product without a business relations rep pouring honey in my ear.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,076

Place looks awesome.

Heading South to See Polaris: 2016 Macau/Taiwan Business Trip Diary - (1) Day One (Live)

limitedaccess

Supreme [H]ardness

- Joined

- May 10, 2010

- Messages

- 7,594

FYI, the above images show a June 29th, 9 AM EST NDA expiration.

cageymaru

Fully [H]

- Joined

- Apr 10, 2003

- Messages

- 22,076

FYI, the above images show a June 29th, 9 AM EST NDA expiration.

Ha ha, I thought someone would pick up on that.

KG-Prime90

Limp Gawd

- Joined

- Apr 29, 2013

- Messages

- 251

rise of the tongue raider

pendragon1

Extremely [H]

- Joined

- Oct 7, 2000

- Messages

- 52,026

Lara's O-face?!

TheLAWNoob

Limp Gawd

- Joined

- Jan 10, 2016

- Messages

- 330

Lara's O-face?!

#Dying

udneekgnim

Limp Gawd

- Joined

- Mar 10, 2010

- Messages

- 256

My hope is that AMD will push MultiGPU harder and harder, and CFX will become a standard feature.

won't happen because multi gpu support is very dependent on the developer and multi gpu is too much of a niche for most developers to focus resources on

They are not doing smaller sample sizes though

[H] reviews have ALWAYs had a small sample size of games tested.

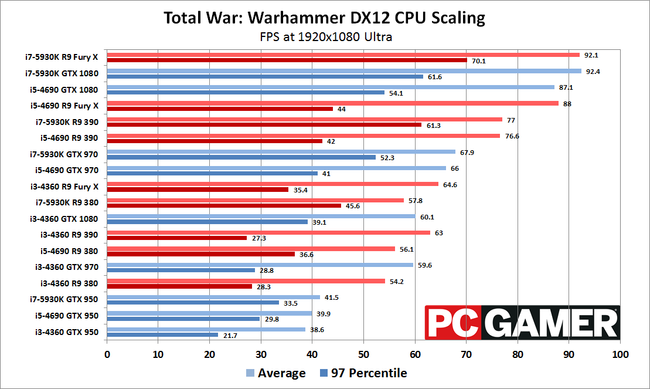

You people don't understand basic maths if you think a small handful of games can really be used as a reliable metric to judge GPU hardware on. I merely raised an example, if you took DX12 Quantum Break where a 390 = 980Ti, Hitman DX12 etc and now your small sample size is totally AMD biased. NV GPUs would look shit in comparison.

Do you people not see how having a handful of games, and selecting mostly titles sponsored by NVIDIA skews the results in their favor? Really? Is it that hard a concept?

Just to give you another example.

Fury X = 1080 in DX12 Warhammer.

The current game selection again, 5 NV sponsored titles out of 6 tested.

Good to see how AMD GPUs perform in NV sponsored games for sure. AMD doesn't appreciate it and I can see why they won't engage with this site.
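Numbers make the sample-size complaint easier to evaluate. One common way to summarize a review suite is the geometric mean of per-game performance ratios (card A fps ÷ card B fps), which is the same kind of summary SPEC uses for its benchmark scores. The sketch below, whose function names are hypothetical, computes that summary for any chosen subset of games. It also reports the lowest and highest results across every possible k-game suite, which shows how much room a small suite leaves for selection effects. You would supply per-game ratios from a large review; none are included here.

```python
"""How much can picking a small game suite move the summary result?"""

import math
from itertools import combinations
from typing import Iterable, Mapping, Tuple


def geomean_ratio(ratios: Iterable[float]) -> float:
    """Geometric mean of per-game performance ratios (card A fps / card B fps)."""
    values = list(ratios)
    if not values or any(r <= 0 for r in values):
        raise ValueError("need at least one positive ratio")
    return math.exp(sum(math.log(r) for r in values) / len(values))


def suite_summary(per_game: Mapping[str, float], suite: Iterable[str]) -> float:
    """Summary ratio using only the games chosen for a review suite."""
    return geomean_ratio(per_game[game] for game in suite)


def selection_range(per_game: Mapping[str, float], k: int) -> Tuple[float, float]:
    """Lowest and highest summary over every possible k-game suite."""
    if not 1 <= k <= len(per_game):
        raise ValueError("k must be between 1 and the number of games")
    scores = [suite_summary(per_game, combo) for combo in combinations(per_game, k)]
    return min(scores), max(scores)
```

Comparing `selection_range(per_game, 6)` against `suite_summary(per_game, per_game)` for a large set of games shows how far a six-game suite could drift from the full-suite figure. With about 30 games there are hundreds of thousands of six-game subsets, so the exhaustive search remains feasible but grows quickly with k.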

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

[H] reviews have ALWAYS had a small sample size of games tested.

You people don't understand basic maths if you think a small handful of games can be used as a reliable metric for judging GPU hardware. I merely raised an example: if you took DX12 Quantum Break, where a 390 = 980 Ti, Hitman DX12, etc., then your small sample size would be totally AMD-biased. NV GPUs would look like shit in comparison.

Do you people not see how testing a handful of games, mostly titles sponsored by NVIDIA, skews the results in their favor? Really? Is it that hard a concept?

Just to give you another example.

Fury X = 1080 in DX12 Warhammer.

The current game selection, again: 5 NV-sponsored titles out of 6 tested.

Good to see how AMD GPUs perform in NV-sponsored games, for sure. AMD doesn't appreciate it, and I can see why they won't engage with this site.

I'll bite. Let's use Quantum Break as an example: do you think that's indicative of overall performance?

It isn't.

Hitman more so, and in my own testing, the 980 Ti is faster than the Fury X in the built-in benchmark (see my clocks) by roughly 12%.

AotS is the same: flop for flop, Maxwell is faster.

Hitman's built-in benchmark produces scores that don't accurately reflect gameplay, btw; see the reviews both here and on pcgameshardware.de.

Go look at computerbase.de's GTX 1080 review, and pcgameshardware.de's too; they tested like 30 games, man.

Ieldra

I Promise to RTFM

- Joined

- Mar 28, 2016

- Messages

- 3,539

You could even argue that there's an inherent bias in all reviews using reference Maxwell cards, because they ignore the 25% OC headroom that is usually on tap.

At the end of the day, one size does not fit all, but demanding that 'fringe games' be used in the benchmark suite is just odd. For every Nvidia-sponsored game in which AMD cards have issues, there are ten in which they don't, but since nobody is whining, you don't hear about it.

I mean, look at the backlash over Doom, bunch of idiots, claiming they 'gimped' it after the beta...

[H] reviews have ALWAYS had a small sample size of games tested.

You people don't understand basic maths if you think a small handful of games can be used as a reliable metric for judging GPU hardware. I merely raised an example: if you took DX12 Quantum Break, where a 390 = 980 Ti, Hitman DX12, etc., then your small sample size would be totally AMD-biased. NV GPUs would look like shit in comparison.

Do you people not see how testing a handful of games, mostly titles sponsored by NVIDIA, skews the results in their favor? Really? Is it that hard a concept?

Just to give you another example.

Fury X = 1080 in DX12 Warhammer.

The current game selection, again: 5 NV-sponsored titles out of 6 tested.

Good to see how AMD GPUs perform in NV-sponsored games, for sure. AMD doesn't appreciate it, and I can see why they won't engage with this site.

LOL, you do realize that graph is CPU-bottlenecked, right?

Bahanime,

Games behave differently when actually being played! Do you realize that in AotS, during actual gameplay, there is almost no difference between AMD and nV hardware (they're comparatively equal), but in the benchmark AMD is in the lead?

And that this also happens in Hitman, where different areas of the game favor different IHVs?
