SPARTAN VI

[H]F Junkie

- Joined

- Jun 12, 2004

- Messages

- 8,762

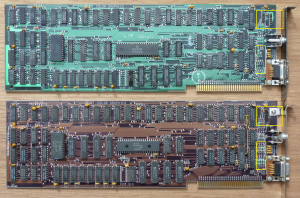

Looks like JPEG compression artifacts to me, but you can see what appears to be the gold fingers:


Waiting for Digital Jesus to dissect this and take it apart.

those are the "groves" the fingers are supposed to go in.

Fingers don't go in grooves (or groves), they are etched from the copper on the PCB and plated.

blah blah blah guess we'll see if that's what they look like when they are actually announced

The absurdities that occur when people can't admit to making a mistake.

We have a low quality image of the PCB edge connector, and if you zoom in you can see some of the fingers.

But sure, rationalize whatever nonsense you can come up with to avoid admitting a mistake, and stick with your original claim.

I can only fit 11".

Agree, the blurring used to mask the bad color fills...

those are the "groves" the fingers are supposed to go in. the tab is one consistent color all the way across. combine that with the other irregularities, I'm saying fake.

So either Nvidia has gotten a LOT better at making smaller PCBs or they are just saying screw it?? I mean, maybe there was a lot of junk space on older PCBs just to hold spacing and shrouds?

Also exactly how much heat is this recirculating back into the case anyway?

Maybe went HBM for the half length card required like the old Fury Nano and the rest...

I'm looking at this and wondering about the power connectors. There's no confirmation in the pictures (and no visible connectors, either), but the renders seem to like the idea that the power sockets are on the rear of the heatsink, meaning wires are running through the sink and behind the fan to the PCB. While "neat," this just doesn't seem like the best-engineered design possible vs. a GTX 670-like option?

Or these could just be fake PCBs designed to test the fitment of the fansink prototypes...

That was my first thought as well when people stated half-sized card. Don't you want the power connectors directly on the board? But then I was thinking, why would the flagship product be a half-sized card? You want to spread everything out so you don't localize heat too much; half-sized cards come later with the 3060-type models.

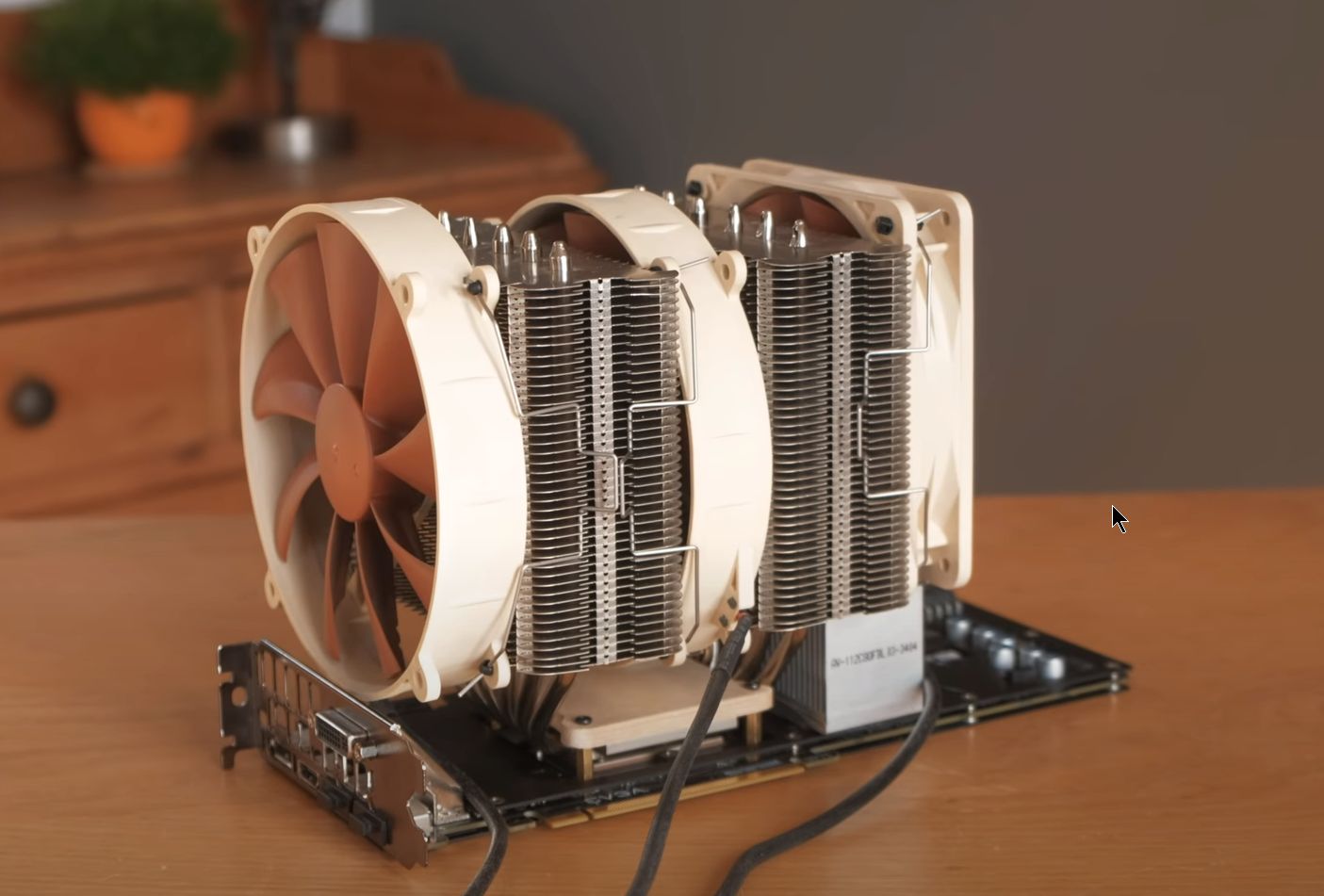

Conclusion: there is no way this is a half-sized card, and if real, that isn't a push-pull design; it is blowing air on both halves.

Half sized card? That thing is still like a brick! Remember what cards used to look like? They were like half inch thick and one third shorter. Now they take up half the case and are like 3 inches thick!

But the 3080 won't be the flagship card. This is considered mid-tier/high end. The flagship card will be the 3080 Ti, just like it was for the 900, 1000, and 2000 series.

Cards back then didn't require auxiliary power, only drawing 30-40W from the slot. The card that introduced us to the dust buster, the FX 5800 Ultra, was a 70W card. Top-end cards these days use 250-300W.

Well what happened?! CPUs are not following the same trend, aye? Bollocks!!

If you include the memory slots, VRMs, and heat dissipation surface areas that today’s CPUs require, you’ll see that they easily rival GPUs.

Well thank you, Sir. You seem very educated on this subject.

What about size though? CPUs have stayed relatively the same in size vs. 20 years ago, but look what happened to GPUs! Morbidly obese!! Are we looking at bigger units in the next 5 years with dedicated power supplies to keep them running?

The more heat an electronic circuit generates, the quicker you need to dissipate it before it burns itself to death. You increase the rate of dissipation in basically two ways: pushing the heat through a heat-conductive material with as large a surface area as possible, preferably with the aid of airflow from a fan or fans, or exchanging the heat with another medium such as water. In either case you are taking up a certain volume of space within a PC environment, and the former is easier and cheaper to manage. Unfortunately that means you can't get effective cooling in a single-slot solution, since you are severely limited in the surface area of the heatsink you can use.

Thank you, sir. I have a 9800 GT, which is arguably longer than a mid-tier video card, but compared to the mid-tier GTX 460, the latter is twice as thick and requires two power plugs vs. one on the 9800 GT.

When taking into consideration more recent GPUs, only the most lowly brethren sport a single-slot configuration.

Taco has feeling we are heading in the wrong direction.

More performance = more power needed = better cooling capacity required. You can't beat physics.
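
To put rough numbers on that, here is a minimal sketch using the standard steady-state relation (temperature rise = power x thermal resistance). The wattages are the ones quoted earlier in the thread; the 85 C chip limit and 35 C case air are purely my assumptions for illustration, and `required_thermal_resistance` is a made-up helper name, not anything from a real tool.

```python
def required_thermal_resistance(power_w, t_max_c=85.0, t_ambient_c=35.0):
    """Highest cooler thermal resistance (C/W) that still keeps the chip
    at or below t_max_c, using delta_T = power * R_thermal.

    t_max_c and t_ambient_c are assumed values, not measured ones.
    """
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return (t_max_c - t_ambient_c) / power_w


# Wattages as quoted in the thread (approximate).
cards = {
    "slot-powered card (~40 W)": 40,
    "FX 5800 Ultra (~70 W)": 70,
    "modern top-end card (~300 W)": 300,
}

for name, watts in cards.items():
    print(f"{name}: cooler must be <= {required_thermal_resistance(watts):.2f} C/W")
```

Under those assumptions the 40 W card gets away with about 1.25 C/W, while a 300 W card needs roughly 0.17 C/W, and the only practical ways to get resistance that low are more fin area, more airflow, or water.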

That's what's bothering taco.

Edit: photos for comparison.

View attachment 251910, View attachment 251911, View attachment 251912

You can always just water cool it to get some of that space back, but then you've got to have room for radiators. Either way it's a matter of where you want stuff to take up space.

You might need a block on both sides or two blocks. I'd be interested to see if the GPU is on one side and the VRMs and RAM are on the other side of the board. I would think very fast GDDR6 will be producing a lot of heat.

Or VLB cards of the 90's

View attachment 251906

I am a broken record on this, but the GPU form factor could really use a radical shift.

The x86 PC general purpose card cage came into being with the first IBM PC, and there was never any expectation that it would need to power and cool 200W+ loads. Even in the 1990s you can see the VLB graphics cards didn't even have passive heat sinks, so they were consuming insignificant power.

Now high end GPUs consume hundreds of watts, and in most gaming PCs they consume much more than the CPU. Yet the CPU has vastly superior airflow and cooling path.

In a PC tower, the CPU has near-ideal airflow: intake fan in front, in line with the tower cooler, and in line with the exhaust fan at the back.

OTOH, the GPU cooling is a mess. Being vertical card slot based, it doesn't really matter if it takes more slots; they are limited to flat blow-down designs, which are quite inferior to tower coolers. And there is no decent cooling path. There might be a front intake fan, but it might also be partially obstructed by a PSU shroud, and even if it isn't, airflow is not that good, because there is no outlet at the back to allow hot air to escape. Blower cards try to make their own exhaust path, but they don't really have enough throughput to cool high end GPUs, so their main benefit is ventilating cramped cases for better CPU thermals. So instead we have multiple-fan open-air blow-down coolers that dump heat back in the case, making the best of a bad situation.

Ideally the GPU needs a cooling solution and air path more like the CPU. With dedicated intake and exhaust and tower cooling in between them.

Though it will never happen because we tend to get stuck with standards of the past, no matter how ill-suited they are for today's needs.

/rant

Initially this odd cooler design looked interesting, as there was some thought that it could be a push-pull design, which could yield some nice GPU cooling results (but still would be dumping heat in the case). It seems evident now that this isn't push-pull, but more of a pointless gimmick, so no progress in any real way.

Power is also provided by the big ass motherboard you have to set a CPU in. Memory is also modular for the CPU. A GPU also needs all that stuff.

So what would you suggest in how to do it better? You could potentially take the GPU out of the case, but you still need to provide it power. The further away the GPU is from the interface the more latency you introduce. We could make it completely modular like the CPU, but that would potentially double the space needed inside your PC case, making the internal temperature argument moot.

He's talking about reorienting it, not moving it to another state... Like a PCIe extension riser that allows it to be oriented horizontally instead of vertically, so you can put a heatsink on it similar to a CPU's instead of making them 2-3 slots and still crammed. Lay it flat and have a 60+mm tall heatsink on it with a fan (or two) blowing right through it.

A picture of how that is supposed to work would be great.

Ever seen a mini-ITX case with a 90 degree PCIe adapter so the GPU can lay flat to keep the height down? Like that, except instead of keeping the height down, install a full size cooler and keep it tall. If you haven't seen them, I'm sure I can track down a picture somewhere. Obviously some imagination is still required, as nobody actually makes it the way he's describing.

Edit:

https://www.tweaktown.com/reviews/6932/azza-mini-itx-gaming-chassis-review/index.html

This is one of the cases I have at the house. If you look at the photo gallery you can see the GPU laying flat. If designed properly, in a "regular height" case, you could slap a nice tall cooler on it. Obviously the orientation isn't quite right on this one for it to work; it would have to be designed to do it.

Okay, I can picture it now. And it looks ridiculous. I can't imagine it really being practical.

This is almost correct. Based on my sources at a Chinese textile mill + a father high up at Nintendo, Ampere will break down as so:

3080 - Mid-tier

3080 Ti - Mid-High

3080 Te (Tear Extractor™) - self explanatory
