luckylinux

Limp Gawd

- Joined

- Mar 19, 2012

- Messages

- 225

I just built a new server out of second-hand components (except the RAM, which is new).

- Motherboard: Supermicro X11SSL-F (updated to BIOS 2.6)

- CPU: Intel Xeon E3 1240 V5

- RAM: 4 x Crucial MTA9ASF2G72AZ-3G2B1 (DDR4-3200 ECC UDIMM 1Rx8 CL22)

- PSU: Seasonic G-360

- Cooler: Noctua NH-L12S

All 64GB of RAM are properly detected in the BIOS. The RAM only runs at 2133 MHz though (probably a CPU limitation).

This has never happened to me with any other system I have built: this one boots, but as soon as I am in Debian Linux and run a stress test (e.g. compiling a Linux kernel to put a thermal load on the CPU), it reboots on its own.

Other things I tried:

- Removed some of the DIMMs -> Server refuses to boot (5 short beeps + 1 long beep = no system memory detected)

- Ran PassMark MemTest86 (free edition) -> No errors detected by MemTest86 itself (I only ran it for ~1 hour), but LOTS of uncorrectable ECC errors on all channels/DIMMs (A1/A2/B1/B2)

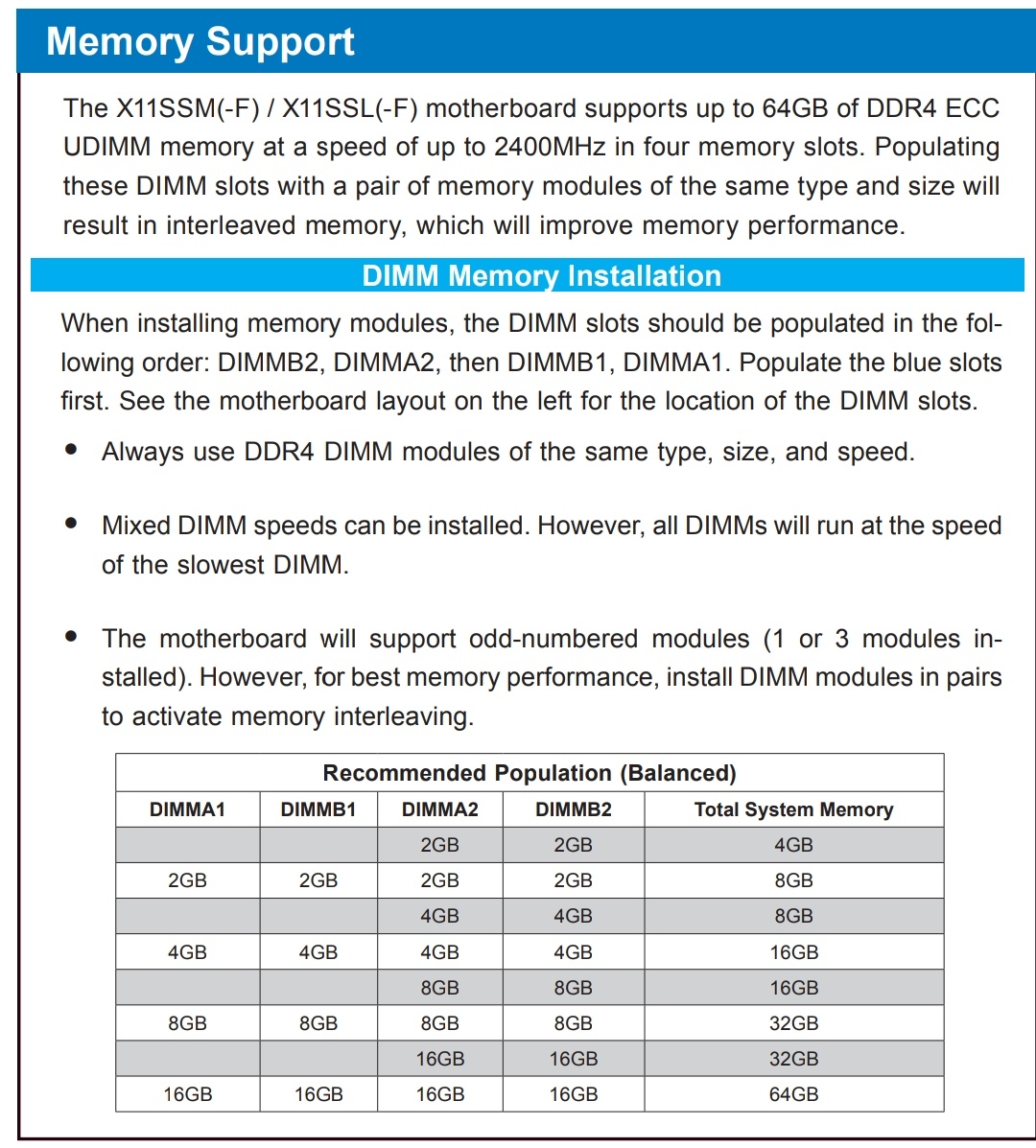
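To cross-check the ECC errors from within Debian, here is a minimal sketch that reads the kernel's EDAC counters. It assumes an EDAC driver for this platform is loaded and exposes the usual `ce_count` / `ue_count` files under `/sys/devices/system/edac/mc`; the function name `read_edac_counts` is only illustrative, and I have not verified that this board populates these counters.

```python
from pathlib import Path

# Usual sysfs location of the Linux EDAC memory-controller entries.
# Assumption: an EDAC driver for this chipset is loaded.
EDAC_ROOT = Path("/sys/devices/system/edac/mc")


def read_edac_counts(root=EDAC_ROOT):
    """Return {controller: (corrected_errors, uncorrected_errors)}."""
    counts = {}
    for mc in sorted(Path(root).glob("mc[0-9]*")):
        try:
            ce = int((mc / "ce_count").read_text().strip())
            ue = int((mc / "ue_count").read_text().strip())
        except (OSError, ValueError):
            # Skip controllers whose counters are missing or unreadable.
            continue
        counts[mc.name] = (ce, ue)
    return counts


if __name__ == "__main__":
    counts = read_edac_counts()
    if not counts:
        print("No EDAC memory controllers found (driver not loaded?)")
    for name, (ce, ue) in counts.items():
        print(f"{name}: corrected={ce} uncorrected={ue}")
```

Running it before and after a kernel compile would show whether the counters increase under load, provided the machine stays up long enough to read them.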

I discovered that while the memory is listed as compatible with the Supermicro X11SSL-F on the Crucial website, the motherboard manual states only this:

| Max Memory Possible | 4GB DRAM Technology | 8GB DRAM Technology |
| --- | --- | --- |
| Single Rank UDIMMs | 16GB (4x 4GB DIMMs) | 32GB (4x 8GB DIMMs) |
| Dual Rank UDIMMs | 32GB (4x 8GB DIMMs) | 64GB (4x 16GB DIMMs) |

I assume these were the only memory configurations available when the motherboard came out. Maybe other configurations are also supported?

Side question: why is my 16GB DIMM listed as 1Rx8? Shouldn't it be 1Rx16 since it's a 16GB DIMM?
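As far as I understand the notation, the "x8" in 1Rx8 is the data width of each DRAM chip in bits, not a capacity, so the DIMM size would follow from ranks, chips per rank and per-chip density. A rough sketch of that arithmetic, assuming the manual's "DRAM Technology" column means per-chip density in gigabits and that my DIMMs use 16 Gbit chips (inferred from 16GB single rank x8, not confirmed from a datasheet); `dimm_capacity_gb` is just a name I made up:

```python
def dimm_capacity_gb(ranks, chip_width_bits, chip_density_gbit, data_bus_bits=64):
    """Usable DIMM capacity in GB, ignoring the extra ECC chip per rank."""
    # A 64-bit data bus filled with x8 chips needs 8 data chips per rank.
    chips_per_rank = data_bus_bits // chip_width_bits
    # Gbit -> GB: divide by 8.
    return ranks * chips_per_rank * chip_density_gbit / 8


# Largest single-rank DIMM in the manual's table (8 Gbit chips, assumed x8).
print(dimm_capacity_gb(ranks=1, chip_width_bits=8, chip_density_gbit=8))   # 8.0
# Largest dual-rank DIMM in the manual's table (8 Gbit chips, assumed x8).
print(dimm_capacity_gb(ranks=2, chip_width_bits=8, chip_density_gbit=8))   # 16.0
# My DIMM: single rank, x8, assumed 16 Gbit chips.
print(dimm_capacity_gb(ranks=1, chip_width_bits=8, chip_density_gbit=16))  # 16.0
```

If those assumptions hold, a 16GB 1Rx8 module uses a chip density that is not in the manual's table, which might be relevant to the question of whether this configuration is supported.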

I will be able to build another similar system in the near future, which (hopefully) will yield a different result, as I cannot determine which component is at fault here:

- Motherboard? No bent pins though ...

- CPU? I might try removing the cooler, wiggling the CPU a bit, and reinstalling the cooler; maybe that will help a bit

- RAM? Is the single rank the issue here?

- PSU?

Thank you for your help.
