horrorshow

If I can get a 480 for $200, I'm sold.

> Hmm, nope. How do you know it's overpriced?

Because you can't accept that Nvidia is selling mid-tier GPUs as high-end parts doesn't mean it isn't true. A 560ti (240 USD) replacement sold for 380 USD. A 570ti448 (280 USD) replacement sold for 500 USD; a year later, a true high-end part sold for 650-1000 USD when the older high end was 500 USD.

> PS: the SM layout itself hasn't changed. It got wider, but that's it, which helps throughput but increases latency, so that latency has to be hidden by more registers, which it does have.
>
> ALU performance per ALU will only change based on frequency. The rest of the chip can change when throughput and latency are addressed.

It seems that it could have more TPCs, based on Nvidia's P100 data.

> AMD will not say they are getting 2.5 perf/watt unless it's the best they are getting. And that is the same figure GF, Samsung and AMD have stated they are getting for the 14nm node. If ALU amounts are dropping or staying the same on Polaris (which way you want to look at it is up to you: compared to nV parts they are dropping percentage-wise, and compared to what they are replacing they are staying the same), which at this point is a given for their entire lineup, we can figure out what the best case is for Polaris and how much extra throughput they are getting over what they are replacing. And guess what, it ends up around 10% more performance; at most I would put it at 15% more than Tonga.

If there is a 15-20% gain (which is possible, especially with the extra cache, the better geometry processor and probably more ROPs), then it should be around 290X/390 performance for an R9 380 replacement. Another 10% SP would put it above the R9 390X.

> This is exactly what I have been saying: they shouldn't be compared, as they are in two totally separate brackets.
>
> It's a Tonga replacement, not a 290X replacement. That's clearly seen from the ALU amounts.
>
> These are not high-end parts.

It's that many people keep comparing GP104 to what could be a GP106 equivalent.

> The underlying changes to Polaris will give better performance in many areas, that is a given. How much in those areas we don't know, outside of the 10-15% increase in throughput.

Still, there is missing information, like the shared cache for SIMDs and CUs.

> The tiers are set up by performance, not the size of the GPU, just like in the past. The 7900, which was a much smaller GPU than the x1900xtx, was at the same performance level in the games coming out at that time, so it could demand the same cost premium as the x1900xtx. Just as the 4xxx series chips were smaller and had better perf/watt and perf/mm2 than the GTX 2xx series.
>
> In any case, Pascal, which is to be released soon, is around 300mm^2, so it fits into the performance (high-end) bracket. These are not the enthusiast bracket. So I don't see where the problem is if they charge the same amount as last generation, as long as the performance boost is there.

I am not comparing them by die size, but rather by how they measure their GPUs, how they name them, and how they prioritize bus width for each segment.

> Some of that information is verified already.

None talks about local cache, nor how the SIMD are

Assuming none of the repeated leaks are fake, the only way Polaris 10 can compete with the Fury X is if AMD puts two of them on the same card. That looks increasingly likely.

So, in a couple months, in order of decreasing performance, something like:

Radeon Pro Duo (unchanged)

490x2 (dual polaris 10)

490x (polaris 10)

480x2 (dual binned polaris 10?)

480x (binned polaris 10)

470x and lower (various levels of polaris 11, maybe some dual GPU cards here too)

Everybody in enthusiast forums like [H] wants the best and baddest. But remember (rumored) polaris 10 performance comes in roughly equivalent to a 390/GTX970, so you can imagine getting a 390x2 with dramatically lower heat and power usage-- and that could be pretty interesting when compared against the GP104, depending on pricing.

> Bracket prices don't change much unless there is a need to change a bracket price (increased wafer cost being one such reason). Bus size doesn't matter if the GPU can utilize, or is more efficient at using, the available bandwidth.
>
> Rebranding happens, as does using a high-bracket chip in a lower bracket. At times it's no big deal; how many times has AMD done the same?
>
> Caching information isn't that important for end users, and AMD won't talk much about it till they are ready to release. But in any event, 2.5 perf/watt gives you an overall idea of what they were going for. The reason that 2.5 perf/watt wasn't given in comparison to Fiji is that Fiji used HBM, which gave AMD a 30% power reduction right off the bat (1.75 perf per watt increase), and Polaris is not using HBM.

Yeah, if it were a comparison with the GPU only, the GPU core perf/watt increase would be quite good, since the Fiji XT core had higher power draw than a 390, but the memory system also uses power and would change the numbers.

> Chip code names and bus widths aren't really correlated to market positioning or branding. Those are based on competition and relative performance.
>
> Hence the same chip can serve in many different products as those dynamics change over time.

Then why do they have internal codenames with the same nomenclature? It is another way to divide SKUs.

Oh it's definitely not ideal. Thing is, Vega ain't ready yet and AMD needs some way to answer to GP104.

And another difference is that between a midrange GPU (gm204/gk104) and the high end (gm200/gk110) there is a 30-35% gap in performance, like there was with gf110/100 and gf114/104.

Sounds like it may be 3870x2 all over again then. AMD couldn't touch G80 with a single GPU and so started their dual-GPU card pedigree (although I think there was a dual-GPU trident or matrox card in the late 90s if I recall, but not counting that one)

ATi itself had the Rage Fury MAXX. It wasn't all that super great, but it existed.

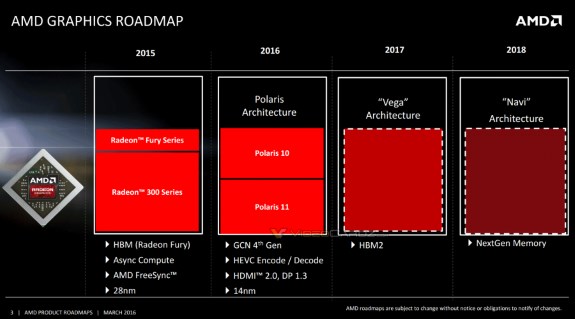

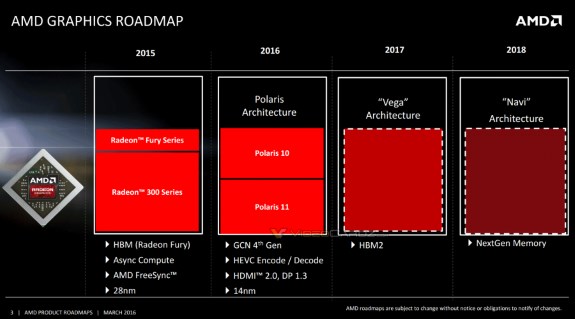

This is the latest AMD roadmap. It seems to pretty conclusively put to rest the question of the delineation between Polaris 10 and 11.

The split point would be about the 380X/390. I'll speculate that 390 and up are Polaris 10.

Razor1 posted that yesterday. Completely agree with TaintedSquirrel, the only way AMD can compete with the Fury X using Polaris GPUs on GDDR5 is to put multiples on a single card. And I think that is what they will do.

The dotted line doesn't mean Vega will replace their entire lineup. It will be their Fury or Rage highest-end card, to compete with GP102 in early 2017.

Multi-die GPUs are the future whether you like it or not. I for one welcome our multi-die, multi-GPU overlords! As long as the downsides/compatibility/latency and the rest are sorted in DX12/Vulkan/whatever you want, then I'm happy.

Per-die performance will be sufficient for 4K as it is if they mature 14nm for a year or so, so DX11 compatibility won't really matter looking forward. It may matter more for these first two releases than for later ones, if at all. Either way, a 390X isn't anything to sneeze at, even in the worst case.

If they market it right, making mgpu for the midrange, they will force the market. Plus the marketing is a dream, almost anyone in the midrange would jump at a dual card if given the choice between an okay single and a dual that pulls well ahead when working, or does well even when not.

Someone has to do it first. DX12 makes this easier for them too.

Devs always follow hardware, that's how it is.

And if they can make a solution where this load is handled by hardware and the card is 'seen' as a single card, you never know... a little bit like Voodoo2 style.

> Would you pay $500 for a dual card that has a (much less than) 30% chance of working well, or a single Pascal that will always perform at 980 Ti level and above, possibly more if overclocked?
>
> If rumours are true, you're talking about 2x 390X-level performance in the best-case scenario, vs 20-30% faster than a 980 Ti. AFAIK the 980 Ti is around 30% faster than a 390X, and 30% faster on top of that will mean around 70% faster than a stock 390X.
>
> You're talking about sometimes double the performance of a 390X, vs a constant 70% improvement over the 390X at the same price point.
>
> I still don't see a reason to go the AMD route unless they can afford to optimise CFX for nearly EVERY RELEVANT game.

You're completely missing how this would be implemented. It's effectively gluing 2 chips together so they appear and work as one. You wouldn't need Crossfire support any more than you would for a single card. This likely won't be a board with a GPU on each end. It will look more like a Fiji with the GPU and 4 HBM stacks, but will instead have 2 GPUs on the package with the HBM. That would likely allow a level of interconnection that makes Crossfire irrelevant.
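For anyone who wants to check the numbers in the 390X vs 980 Ti vs Pascal estimate above, here is a rough Python sketch. The function names are made up for illustration, and it rests on assumptions that are not in the thread: a dual card scales to exactly 2x a 390X when multi-GPU works, drops to 1x (one GPU's worth) when it doesn't, and the "chance of working well" is treated as the fraction of games that scale.

```python
def compound(*gains):
    """Stack relative speedups multiplicatively (0.30 means '30% faster')."""
    total = 1.0
    for g in gains:
        total *= 1.0 + g
    return total


def expected_dual(p_scales, scaled=2.0, fallback=1.0):
    """Average speedup of a dual card over one 390X.

    Assumption: 'scaled' when multi-GPU works, 'fallback' when it does not.
    """
    return p_scales * scaled + (1.0 - p_scales) * fallback


def break_even(single, scaled=2.0, fallback=1.0):
    """Fraction of games that must scale for the dual card to match 'single'."""
    return (single - fallback) / (scaled - fallback)


# 980 Ti ~30% over a 390X, Pascal ~30% over the 980 Ti (figures from the post)
pascal_vs_390x = compound(0.30, 0.30)

print(round(pascal_vs_390x, 2))              # 1.69, i.e. "around 70% faster"
print(round(expected_dual(0.30), 2))         # 1.3 with a 30% hit rate
print(round(break_even(pascal_vs_390x), 2))  # 0.69
```

Under those assumptions the dual card would need to scale properly in roughly 69% of games just to tie on average, which is the gap the post is pointing at. If the two dies really do present as one GPU, the hit rate stops being the limiting factor and this simple model no longer applies.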

> You're talking about sometimes double performance of a 390x, vs a constant 70% improvement over the 390x at the same price point.

Not at the same price point, no. AMD would need to price substantially below NV for that to be attractive. And obviously they don't want to do that, but what's the alternative: not competing at all until Vega?

Tell that to DX11 support.

> And here's the official confirmation from AMD:
>
> AMD confirms Polaris is not for the high-end market
>
> "AMD also confirmed the new 14nm Polaris architecture is on track to ship towards the middle of this year. 'We remain on track to introduce our new 14-nanometer FinFET based Polaris GPUs midyear. Polaris delivers double the performance per watt of our current mainstream offerings, which we believe provides us with significant opportunities to gain share.' In response to a question by an analyst from JPMorgan Securities about channel fill and Polaris related revenue, AMD CEO Lisa Su replied the majority of Polaris is a second half of the year story."

Hardly surprising it will be a 2H story, as they're launching around June?

> Certainly, but on the other hand we have leaked pictures of actual GP104 chips floating around, so it seems NV may be ahead on actual production as well.

Maybe, but AMD demoed the cards first, it's just nobody has seen one publicly. They could be really ugly or the form factor is a dead giveaway. If they all come out looking like Nanos...